Bytedance Seedance 2.0 Automates Professional Filmmaking with Coherent Narratives

Bytedance’s Seedance 2.0 delivers multi-shot narrative coherence and native audio, raising the bar for AI filmmaking.

February 9, 2026

The limited release of Bytedance’s latest AI video model, Seedance 2.0, signals a major shift in generative artificial intelligence. The new iteration builds on the capabilities of its predecessor and intensifies the global race for dominance in automated video production. Early access users on Bytedance’s Jimeng AI video platform have reported outputs that show a marked leap in cinematic quality, narrative coherence, and technical control, suggesting that the model is rapidly closing the gap between AI-generated content and professional filmmaking standards. The rollout is more than an incremental update: it is a comprehensive overhaul designed to automate complex, multi-shot sequences with an unprecedented level of visual and temporal consistency, addressing the inconsistencies between shots that have historically plagued AI video.

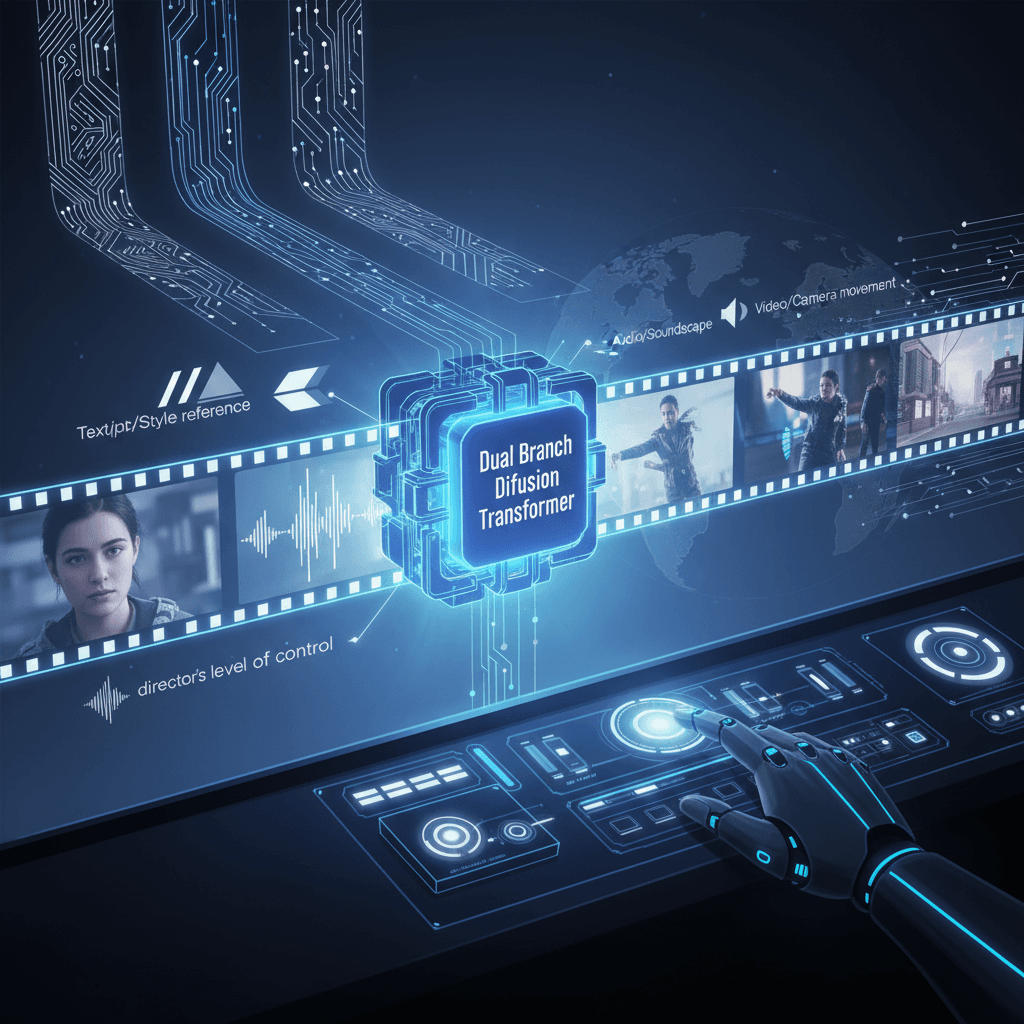

The most significant technological leap in Seedance 2.0 lies in its architecture and its multimodal input capabilities. Unlike earlier text-to-video models that struggled to maintain consistency beyond a few seconds, Seedance 2.0 is built on a Dual Branch Diffusion Transformer framework that can process up to four input types simultaneously: text, images, video, and audio.[1][2][3] This multimodal approach gives users a director’s level of control, allowing them to set the visual style with a reference image, specify complex camera work with a video clip, guide the rhythm with an audio file, and shape the narrative with a detailed text prompt.[4][5] The model’s capacity for "Multi-Shot Storytelling" is a headline feature, enabling it to generate continuous, cohesive narratives that maintain character identity, lighting conditions, and aesthetic style across multiple distinct scenes in a single generation process.[1][6][7][2] The model has also improved its fundamental output quality, delivering native 2K cinematic resolution, a significant step up from the 1080p standard of previous models.[1][7][3] It reportedly renders videos 30% faster than some competing models, and its high-fidelity output is crucial for professionals who require a crisp, detailed master file suitable for larger screens and commercial distribution.[8][9][3]

Seedance 2.0’s advancements in audio-visual integration mark another critical step in closing the realism gap. The model generates high-fidelity audio, including dialogue, ambient soundscapes, and sound effects, concurrently with the video rather than as a post-production layer.[6][7][3] This native synchronization includes phoneme-level lip-sync across multiple languages, so that generated characters’ speech aligns precisely with the visual movement of their mouths.[1][6] This level of integration is essential for creating compelling narrative content, as mismatched audio and video have long been a telltale sign of AI-generated work. The underlying technology also exhibits a deeper understanding of physical laws, with "Natural Motion Synthesis" that produces fluid movements and interactions, from the subtle shift of facial expressions to the physics-based realism of objects reacting to momentum and gravity in action sequences.[1][6][5] This physical realism is a direct challenge to the photorealism benchmarks set by other leading models in the field.

The launch of Bytedance’s latest tool immediately reshapes the competitive dynamics of the global AI race, particularly against Western tech giants and other major Chinese competitors. Bytedance, the parent company of TikTok and its Chinese equivalent, Douyin, draws on its deep experience in video processing and its vast library of video data to train its models, a key advantage cited by analysts.[10][11] By specializing in coherent multi-scene sequences and audio-video synchronization, Seedance 2.0 is staking a claim in the narrative and commercial content creation space, differentiating itself from rivals such as OpenAI’s Sora, which has been lauded for its physics realism, and Kuaishou’s Kling model, which is noted for its motion control.[1][4] The intensifying competition among Chinese tech firms, particularly after the unveiling of Kling 3.0, has sent ripples through the financial markets, with the stock prices of Chinese media and AI application companies rallying on the news of Seedance 2.0’s impressive outputs.[10][11] This market enthusiasm underscores the belief that AI video generation is on the cusp of fundamentally transforming traditional filmmaking, advertising, and the massive short-form content industry.

While the technical achievements are considerable, the release of such a powerful tool brings immediate practical and ethical concerns to the forefront. Early testers have praised the model’s ability to generate "lifelike characters" with a level of realism that makes it "very hard to tell whether a video is generated by AI."[10][8][11] This hyper-realism, however, also fuels warnings from industry experts about a potential new wave of deepfakes and the resulting crisis of trust in digital media.[9] Bytedance has implemented initial safeguards, such as privacy restrictions that block the uploading of images containing realistic human faces, though the long-term efficacy of such measures remains a subject of debate.[9] For users, the model is touted for "One-Sentence Video Editing," which lets them add, remove, or replace elements within existing videos using natural language, a major boost for efficient post-production workflows.[6] However, even with these control mechanisms, some early adopters have reported that the model still struggles with extremely fine-grained, frame-level control and has exhibited minor glitches, such as garbled subtitles.[9][12] These are common, albeit diminishing, issues for models at the cutting edge of generative AI.

The release of Seedance 2.0 confirms that the race for an industry-defining AI video model is a neck-and-neck global contest, with Bytedance establishing itself as an aggressive and highly capable contender. The model’s focus on multi-shot narrative coherence, native audio integration, and 2K resolution moves the technology from proof of concept to a tool with genuine potential for professional applications in advertising, entertainment, and digital media. The rapid pace of development is lowering the barrier to entry for high-quality video production, empowering a new class of creators. As Seedance 2.0 moves from limited release toward a full rollout, its influence will be felt far beyond the tech sector, accelerating the debate over the future of creative labor and the nature of visual truth in the digital age.

Sources

[3]

[5]

[6]

[7]

[9]

[10]

[11]

[12]