Rigorous Test Shows Top AI Models Hallucinate Even With Web Search

Advanced LLMs stumble on high-stakes, multi-turn tests, indicating that real-time web search alone does not eliminate hallucination rates of roughly 30%.

February 9, 2026

Large language model hallucination continues to plague the artificial intelligence industry, as a challenging new benchmark exposes substantial flaws even in the most advanced commercial systems. Researchers from Switzerland and Germany have introduced HalluHARD, a multi-turn evaluation designed to push frontier models to their limits on high-stakes, real-world tasks. The results are a sobering reminder of the technology's current limitations: top-tier models like Claude Opus 4.5, even when equipped with real-time web search, still produce incorrect or ungrounded factual information in roughly 30% of cases.[1][2]

The study, a collaboration between researchers at EPFL, the ELLIS Institute Tübingen, and the Max Planck Institute for Intelligent Systems, establishes a baseline for factual accuracy that is significantly more stringent than previous tests.[1][2] By focusing on multi-turn dialogue, HalluHARD mirrors the iterative nature of human conversation and professional workflows, where context accumulates and initial errors can cascade into greater inaccuracies.[1][3][2] The benchmark comprises 950 seed questions spanning four knowledge-intensive domains: legal cases, medical guidelines, technical coding, and niche research questions.[3][4][2] A core component of the methodology is operationalizing 'groundedness' by requiring models to furnish inline citations for every factual assertion. The verification pipeline then iteratively retrieves evidence via web search, fetching and parsing full-text sources, including embedded PDFs, to assess whether the cited material actually supports the generated content. This process keeps the benchmark focused on ungrounded factual mistakes, isolating true hallucination from other types of model failure.[3][2]
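To make the idea of claim-level groundedness concrete, here is a minimal Python sketch. It is not the researchers' pipeline. The names `Claim`, `FAKE_WEB`, `fetch_source`, and `supports` are hypothetical, the "web" is an in-memory dictionary, and the support check is a crude word-overlap test standing in for the much stronger judgment a real verifier would need.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Claim:
    text: str                 # factual assertion made by the model
    citation: Optional[str]   # identifier of the cited source, if any


# Hypothetical stand-in for fetching and parsing a source's full text.
FAKE_WEB = {
    "guideline-a": "adults should receive the vaccine every ten years",
}


def fetch_source(citation: Optional[str]) -> Optional[str]:
    """Return the source text, or None if the citation cannot be resolved."""
    if citation is None:
        return None
    return FAKE_WEB.get(citation)


def supports(source_text: str, claim_text: str) -> bool:
    """Toy check (assumption): every longer word of the claim appears in the source."""
    source_words = set(source_text.lower().split())
    claim_words = [w for w in claim_text.lower().split() if len(w) > 3]
    return all(w in source_words for w in claim_words)


def is_grounded(claim: Claim) -> bool:
    """A claim counts as grounded only if its source resolves and supports it."""
    source = fetch_source(claim.citation)
    return source is not None and supports(source, claim.text)


def hallucination_rate(claims: list[Claim]) -> float:
    """Fraction of claims that are not grounded in their cited source."""
    if not claims:
        return 0.0
    ungrounded = sum(not is_grounded(c) for c in claims)
    return ungrounded / len(claims)


claims = [
    Claim("Adults should receive the vaccine every ten years", "guideline-a"),
    Claim("Adults should receive the vaccine every five years", "guideline-a"),
    Claim("Children receive the vaccine yearly", "missing-source"),
]
rate = hallucination_rate(claims)
print(f"Hallucination rate: {rate:.1%}")
```

In this toy example the second claim cites a real source that does not say what the claim says, and the third cites a source that cannot be found. Both count against the model.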

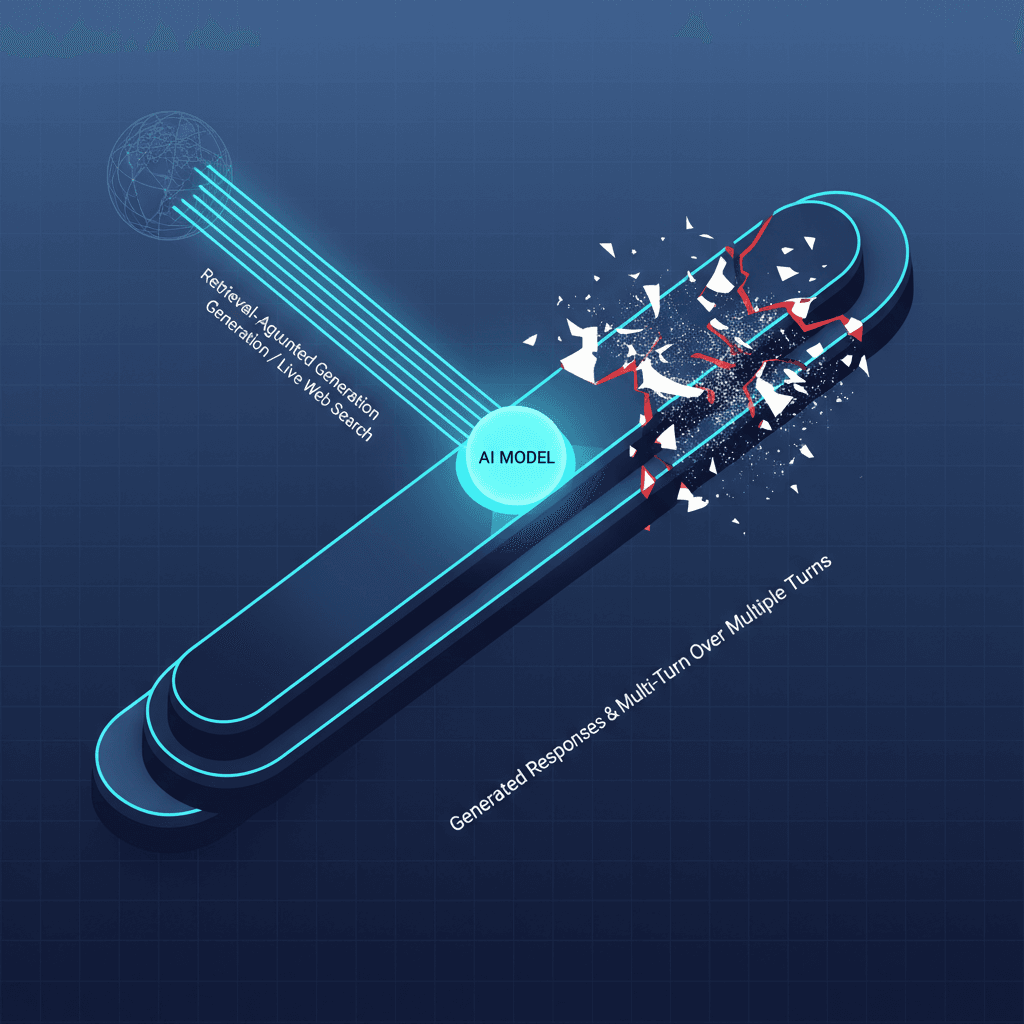

Despite the integration of retrieval-augmented generation (RAG) through live web search, a feature widely believed to be the most effective mitigation strategy against hallucination, leading models still hallucinate at high rates. Claude Opus 4.5, one of the top performers in the study, recorded a hallucination rate of 30.2% with web search enabled.[3][2] In other words, roughly three out of every ten claims produced by the model lacked verifiable support from the cited sources or were outright incorrect.[3][2] Web search still matters a great deal: without it, the same model's hallucination rate rose to 60.0%, meaning a majority of its unassisted claims could not be verified.[1][3] Another leading system, GPT-5.2-thinking, also struggled, posting a hallucination rate of 38.2% even with web search enabled.[3] These figures point to a significant reliability gap, particularly in regulated and high-stakes fields where accuracy is paramount, such as healthcare, law, and financial advice.
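The arithmetic behind the web-search comparison is simple to check. The short Python sketch below uses only the two Claude Opus 4.5 rates reported above. The variable names are illustrative.

```python
# Hallucination rates for Claude Opus 4.5 as reported in the article (percent).
rate_without_search = 60.0
rate_with_search = 30.2

# Absolute change, in percentage points.
absolute_drop = rate_without_search - rate_with_search

# Relative change, as a share of the no-search baseline.
relative_drop = absolute_drop / rate_without_search * 100

print(f"Absolute reduction: {absolute_drop:.1f} percentage points")
print(f"Relative reduction: {relative_drop:.1f}%")
```

Web search roughly halves the rate, yet about three in ten claims remain unsupported.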

Further analysis of the HalluHARD results identified distinct patterns in the failure modes of the models. One major finding is the phenomenon of error propagation, particularly in the citation-grounded tasks across the legal, research, and medical domains.[4] The hallucination rate tended to increase significantly in the later turns of the multi-turn conversations, suggesting that models are prone to conditioning their subsequent responses on their own earlier, unverified mistakes.[4] This creates a feedback loop of inaccuracy that erodes user trust with every new response. More granular analysis revealed that content grounding failures—situations where the model incorrectly summarizes or draws unsupported conclusions from a valid source—were substantially more prevalent than simple reference failures, where the model fabricates a citation entirely.[4] This issue was exacerbated in the research domain, where verifying fine-grained details often required accessing and parsing full-text PDF documents, a task that current models struggle with when only provided with search snippets or high-level links.[4]
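The two analyses described above, hallucination rate by conversation turn and the split between reference failures and content grounding failures, can be sketched in a few lines of Python. The verdicts below are invented for illustration and are not benchmark data. The label strings and function names are likewise assumptions.

```python
from collections import Counter, defaultdict

# Illustrative verdicts only, NOT benchmark data: one (turn, verdict) pair per claim.
verdicts = [
    (1, "grounded"), (1, "grounded"), (1, "grounded"), (1, "content_failure"),
    (2, "grounded"), (2, "grounded"), (2, "content_failure"), (2, "reference_failure"),
    (3, "grounded"), (3, "content_failure"), (3, "content_failure"), (3, "reference_failure"),
]


def rate_by_turn(rows):
    """Share of ungrounded claims within each conversation turn."""
    totals, failures = defaultdict(int), defaultdict(int)
    for turn, verdict in rows:
        totals[turn] += 1
        if verdict != "grounded":
            failures[turn] += 1
    return {turn: failures[turn] / totals[turn] for turn in sorted(totals)}


def failure_breakdown(rows):
    """Count ungrounded claims by failure type."""
    return Counter(verdict for _, verdict in rows if verdict != "grounded")


per_turn = rate_by_turn(verdicts)
breakdown = failure_breakdown(verdicts)

for turn, rate in per_turn.items():
    print(f"Turn {turn}: {rate:.0%} ungrounded")
print(dict(breakdown))
```

A rate that climbs from turn to turn, as in this made-up sample, is the signature of the error propagation the researchers describe.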

The benchmark also shed light on how models handle the boundary between known and unknown information. Surprisingly, models struggled more with niche but real facts than with completely fabricated items.[4] The researchers hypothesize that models are incentivized to 'guess' when faint traces of niche knowledge exist in their training data, leading to speculative hallucinations. In contrast, they are more likely to abstain or signal uncertainty when faced with items that are entirely fictitious.[4] This nuance suggests that simply increasing model intelligence is not a panacea: while more capable models generally show lower hallucination rates, their enhanced reasoning abilities can also produce longer, more detailed responses that create greater surface area for ungrounded claims.[4] The 30.2% rate for the leading model, even with web search, underscores that the challenge is not only one of information access but also of reasoning and epistemic humility, that is, knowing what one does not know when generating a response.

The implications of the HalluHARD benchmark are profound for the future direction of AI development. It serves as a clear call to action for the industry to move beyond superficial accuracy metrics and focus on robust, verifiable grounding mechanisms.[1] The current reliance on RAG as a complete solution is shown to be insufficient, and developers must focus on deeper integration of factual verification and better handling of multi-step, contextual reasoning. Until these core issues are addressed, the widespread deployment of generative AI in critical, decision-making applications will be limited not by its intelligence, but by its persistent, and often dangerously confident, unreliability. The path to truly trustworthy AI requires a shift in engineering focus, prioritizing provable groundedness and self-correction over sheer output volume and speed.[1][2]