SwiReasoning Teaches AI to Think Smarter, Slashes LLM Costs and Boosts Accuracy

Like humans, AI learns to switch between quiet contemplation and step-by-step reasoning, massively boosting efficiency and accuracy.

October 19, 2025

A new artificial intelligence framework is poised to make large language models (LLMs) significantly more efficient and accurate by teaching them a skill that humans use constantly: knowing when to "think" quietly and when to reason things out step by step. Developed by researchers at Georgia Tech and Microsoft, the system, called SwiReasoning, lets a model switch dynamically between two modes of thought, addressing one of the biggest trade-offs in computational reasoning: the balance between speed and precision. The approach requires no additional training of the underlying model. It promises to lower the heavy computational costs associated with complex AI reasoning and could unlock more sophisticated applications in fields like science, medicine, and finance.

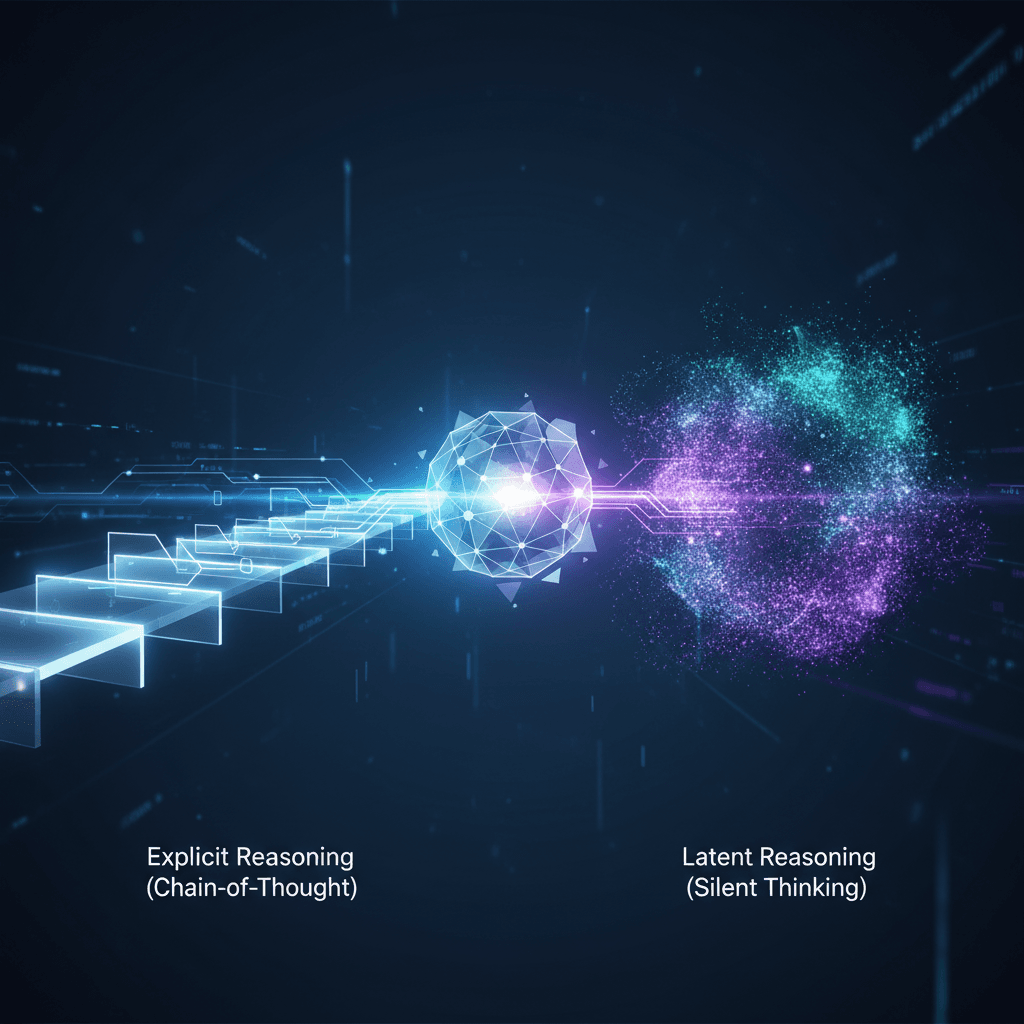

At the heart of the challenge SwiReasoning tackles is the inherent cost of the prevailing method for AI reasoning, known as chain-of-thought (CoT). This technique prompts a model to "think step by step," generating explicit text that outlines its logical progression toward an answer. While often effective at improving accuracy on complex tasks, this process is resource-intensive. Every intermediate thought is a generated token, and generating tokens costs time and money, making the use of LLMs for intricate problems a slow and expensive endeavor. Researchers have explored an alternative called "latent reasoning," where the model processes information internally within its high-dimensional vector space without producing explicit text. This "silent" thinking is faster and cheaper but has its own drawbacks. Purely latent reasoning can be less accurate, as the model may explore too many possibilities without converging on a single, confident answer, a problem akin to unfocused brainstorming.

SwiReasoning offers an elegant solution: a hybrid approach that alternates between these two modes.[1] The framework acts as a controller, continuously monitoring the LLM's confidence as it works through a problem.[2] This confidence is measured using entropy, a concept from information theory that quantifies how spread out the model's next-token probability distribution is.[3] Low entropy means the probability is concentrated on a few candidate tokens, indicating that the model has a high degree of certainty and a clear path forward.[3] In this state, SwiReasoning directs the model to use the explicit chain-of-thought mode, articulating its reasoning steps to solidify its progress.[1][4] Conversely, high entropy signals that the model is uncertain and weighing many different possibilities.[3] When this happens, SwiReasoning shifts the model into the faster, more exploratory latent reasoning mode, allowing it to evaluate alternative pathways internally without committing them to text.[1][2] This dynamic switching lets the AI balance the exploratory breadth of latent thinking with the focused exploitation of explicit reasoning.[5][6]
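To make the entropy signal concrete, here is a minimal Python sketch of how next-token entropy could be computed from a model's raw scores (logits) and turned into a mode choice. It is an illustration rather than the researchers' implementation: the function names and the fixed `threshold` are assumptions made for this example, and the article does not specify how the actual framework sets its switching criterion.

```python
import math

def softmax(logits):
    """Convert raw scores into a probability distribution."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def entropy(probs):
    """Shannon entropy in nats; higher means a flatter, less certain distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)

def choose_mode(logits, threshold):
    """Hypothetical rule: low entropy -> explicit reasoning, high entropy -> latent.

    The fixed threshold is an assumption of this sketch, not a detail
    taken from the SwiReasoning framework.
    """
    return "explicit" if entropy(softmax(logits)) < threshold else "latent"

# One dominant candidate token versus four equally likely ones.
peaked_mode = choose_mode([10.0, 0.0, 0.0, 0.0], threshold=0.5)
flat_mode = choose_mode([0.0, 0.0, 0.0, 0.0], threshold=0.5)
```

With a single dominant candidate the entropy is close to zero and the sketch picks the explicit mode. With four equally likely candidates the entropy is ln 4 (about 1.39 nats) and it picks the latent mode.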

To prevent the system from getting stuck in an endless loop of switching or overthinking a problem, the framework incorporates two key control mechanisms. The first is a limit on the maximum number of times the model can switch between latent and explicit modes, which helps curb excessive computation.[5][4] The second, known as "asymmetric dwell times," ensures the model doesn't switch back and forth too rapidly.[1] A switch from a state of uncertainty to confidence (latent to explicit) can happen instantly to lock in a promising line of reasoning. However, a switch from confidence back to uncertainty (explicit to latent) requires the model to persist in the explicit mode for a minimum number of steps, ensuring stability before it begins exploring again.[4] This entire system is "training-free," meaning it can be implemented on top of existing LLMs as a decoding-time framework without the need for costly retraining, making it a model-agnostic and highly practical solution.[2]
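The two safeguards can be pictured as a small state machine. The Python sketch below is a simplified illustration under stated assumptions: the class name, the default values for `max_switches` and `min_explicit_steps`, and the choice to simply stay in the current mode once the switch limit is reached are all hypothetical, since the article does not describe those details.

```python
from dataclasses import dataclass

@dataclass
class SwitchController:
    max_switches: int = 4        # hypothetical cap on total mode changes
    min_explicit_steps: int = 3  # hypothetical dwell time before leaving explicit mode
    mode: str = "latent"
    switches: int = 0
    steps_in_mode: int = 0

    def step(self, confident: bool) -> str:
        """Record one reasoning step and return the mode to use next."""
        self.steps_in_mode += 1
        if self.switches >= self.max_switches:
            # Assumption of this sketch: once the cap is hit, stay put.
            return self.mode
        if self.mode == "latent" and confident:
            # Latent -> explicit may happen immediately.
            self._switch("explicit")
        elif (self.mode == "explicit" and not confident
              and self.steps_in_mode >= self.min_explicit_steps):
            # Explicit -> latent only after the minimum dwell time.
            self._switch("latent")
        return self.mode

    def _switch(self, new_mode: str) -> None:
        self.mode = new_mode
        self.switches += 1
        self.steps_in_mode = 0

controller = SwitchController(max_switches=2, min_explicit_steps=2)
trace = [controller.step(c) for c in [False, True, False, False, True]]
```

In this run the controller moves to explicit mode as soon as confidence appears, holds that mode for two steps despite renewed uncertainty, returns to latent mode, and then ignores the final confident signal because both allowed switches have been used.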

The results are significant, showing clear improvements in both accuracy and efficiency across a range of challenging benchmarks. On widely used mathematics and STEM datasets, SwiReasoning has been shown to consistently improve average accuracy by 1.5% to 2.8% when given an unlimited token budget.[5][1][6] While these percentages may seem modest, they represent a meaningful advance on problems that are at the frontier of AI capabilities.[3] The most dramatic gains, however, are in token efficiency. When operating under constrained computational budgets, a common scenario in real-world applications, SwiReasoning improves average token efficiency by 56% to 79%.[5][1][6] This means it can achieve higher accuracy with far fewer tokens, translating directly into lower costs and faster response times. In some tests, the framework achieved up to 6.8 times better token efficiency than standard methods, a leap that makes sophisticated reasoning more practical for widespread deployment.
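For readers who want to see how a token-efficiency comparison works arithmetically, the short Python sketch below treats efficiency as accuracy divided by tokens generated and compares a method against a baseline. That definition is an assumption made for illustration, and the input numbers are invented for the example; they are not results from the SwiReasoning evaluation.

```python
def relative_efficiency(acc_new, tokens_new, acc_base, tokens_base):
    """Ratio of accuracy-per-token for a new method versus a baseline.

    Assumes efficiency = accuracy / tokens, a definition chosen for
    this sketch rather than taken from the article.
    """
    return (acc_new / tokens_new) / (acc_base / tokens_base)

# Invented numbers for illustration only: slightly higher accuracy
# reached with 40% fewer generated tokens.
ratio = relative_efficiency(acc_new=0.62, tokens_new=6_000,
                            acc_base=0.60, tokens_base=10_000)
improvement_pct = (ratio - 1.0) * 100.0
```

Under these made-up inputs the ratio is roughly 1.72, meaning each generated token would buy about 72% more accuracy than in the baseline.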

The implications of SwiReasoning for the broader AI industry are profound. As the demand for AI to solve increasingly complex problems grows, the computational cost has become a major barrier.[7] Dynamic reasoning frameworks like SwiReasoning offer a path toward more sustainable and scalable AI by optimizing the use of resources based on the difficulty of the task at hand.[5][7] This could accelerate the adoption of advanced AI in various fields. In scientific research, it could power models that analyze vast datasets and formulate hypotheses more rapidly.[8] In finance, it could enable more nuanced and real-time analysis of market data.[9] For customer service, it could lead to AI assistants that can resolve complex, multi-step issues without the frustrating delays and high operational costs associated with current models.[1] By making reasoning more efficient, SwiReasoning suggests that the path to more powerful AI may not solely rely on building ever-larger models, but also on designing smarter, more flexible ways for them to think.[3]

Sources

[1]

[2]

[3]

[5]

[6]

[7]