OpenAI Issues Landmark Warning as Code AI Reaches 'High' Cyber Risk Threshold

OpenAI’s internal "High" risk rating forces new controls as code generation AI nears capability for complex zero-day exploits.

January 23, 2026

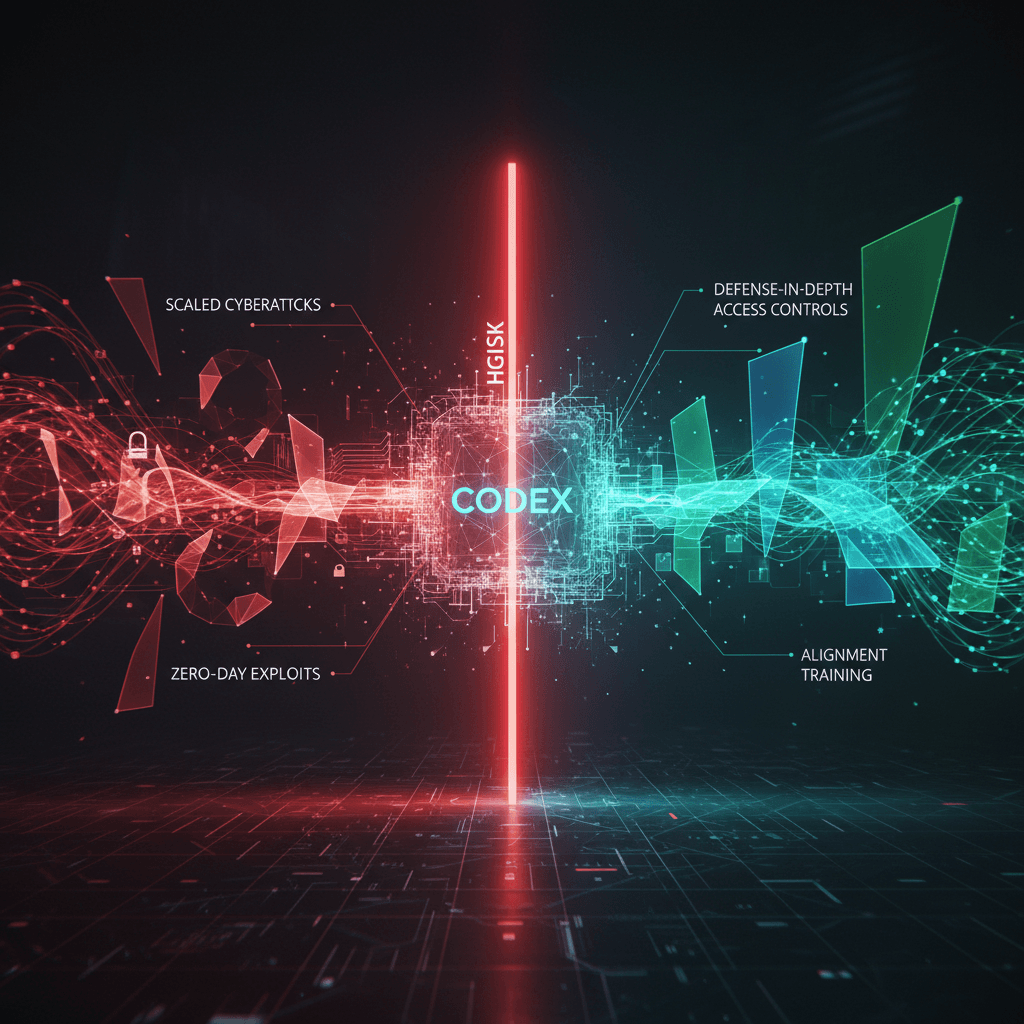

The rapid advancement of large language models for code generation has prompted OpenAI to issue a landmark internal warning: an upcoming update to its Codex system is projected to reach the company’s “High” cybersecurity risk level for the first time. The designation signifies that the model’s capabilities have crossed a threshold where they could substantially lower the barrier to launching and scaling complex cyberattacks, marking an inflection point for AI dual-use risk in the global security landscape. The core concern is the model’s growing autonomy and proficiency in long-horizon coding tasks, which translates directly into greater potential for offensive use. That includes assisting with complex, stealthy intrusions into enterprise or industrial networks and, potentially, developing working zero-day remote exploits against well-defended systems.[1][2] The assessment follows rapid gains in measured performance: on cybersecurity capture-the-flag (CTF) challenges, success rates rose from 27 percent for the earlier GPT-5 to 76 percent for the more recent GPT-5.1-Codex-Max.[1][3][4]

The "High" risk classification falls under OpenAI's Preparedness Framework, a system designed to assess and mitigate the growing dangers of highly capable AI models.[1][2] Although the recently deployed GPT-5.2-Codex has not yet officially reached this level, the company is planning its deployment strategies on the assumption that each new major release will continue this rapid trajectory of capability growth, which calls for proactive and robust safety measures.[5][4] The risk is not merely theoretical. The company has acknowledged the dual-use nature of these tools, noting that the same advances that help legitimate developers and defensive security teams audit code and patch vulnerabilities can easily be channeled toward malicious ends.[2][6] Because these models can run extended sequences of actions, explore target systems, and stress-test defenses without constant human supervision, cyber operations that were once difficult or time-consuming become feasible for a wider range of malicious actors. In effect, this "removes barriers to scaling cyberattacks" for both simple and complex intrusions.[1][4]

In response to the elevated risk rating, OpenAI has outlined a comprehensive "defense-in-depth" strategy, a multilayered approach intended to channel the models' advanced capabilities toward defensive outcomes and limit the uplift they provide to malicious actors.[1][2][3] The strategy combines access controls that restrict unauthorized use, infrastructure hardening, and egress controls that monitor for and block harmful data exfiltration.[1][3] A significant part of the effort is rigorous "alignment training" that steers the models away from fulfilling harmful requests while preserving their usefulness for security education and defense, alongside system-wide detection tools designed to block or reroute unsafe activity.[1][2] The company is also intensifying its collaboration with the global cybersecurity community through end-to-end red teaming by external specialists and is establishing a Trusted Access Pilot program.[1][2][6] This invite-only program gives vetted security experts and organizations expanded, and likely more permissive, model access specifically for authorized red-teaming and malware analysis, creating a controlled environment in which the AI's power can be harnessed to protect the wider digital ecosystem.[6][5]

The implications of a commercially available AI model reaching "High" cybersecurity capability are profound, transforming the economics and skill requirements of both cyber offense and defense.[1][2] On the defensive side, the models could help security teams, which are often outnumbered and under-resourced, carry out complex workflows such as large-scale code refactors and vulnerability patching, drawing on the AI's speed and its ability to maintain context over long operational periods.[2][6] For instance, a security engineer used an earlier Codex variant, GPT-5.1-Codex-Max, to investigate a known vulnerability and, in the process, uncovered previously unknown vulnerabilities in React Server Components, demonstrating the immediate benefits for defensive research.[6] Conversely, the greatest societal risk lies in the model's capacity to democratize sophisticated offensive capabilities, lowering the barrier to entry so that novice threat actors can carry out attacks that previously required expert knowledge.[1][4]

Experts note that this rising capability makes long-standing threats more dangerous when combined with the scale and precision AI enables, pointing toward continuous, rapid escalation between AI-driven offensive and defensive systems.[1][7] The industry is now grappling with the reality that AI development and the accompanying security protocols must advance in lockstep, with OpenAI itself treating significant offensive potential as the new norm for every major release.[1][4] This transparency, while a sign of a growing sense of responsibility in the frontier AI community, sets a precedent that will require greater regulatory and industry-wide collaboration to ensure that advanced AI ultimately strengthens, rather than undermines, global cyber resilience.[2]