Google DeepMind Debuts Project Genie, Letting Users Generate Interactive 3D Worlds

The Genie 3 world model generates playable 3D environments in real time, with potential uses ranging from training AI agents to content creation.

January 29, 2026

Google DeepMind has begun rolling out Project Genie, an experimental prototype that lets users create and explore AI-generated worlds in real time. The feature is available to Google AI Ultra subscribers in the United States and offers a hands-on introduction to a technology that moves beyond static images and video to dynamic, playable environments. Powered by the Genie 3 world model, the release is a step toward the company's long-term goal of building versatile, embodied AI agents, and it could change how digital content is created.[1][2]

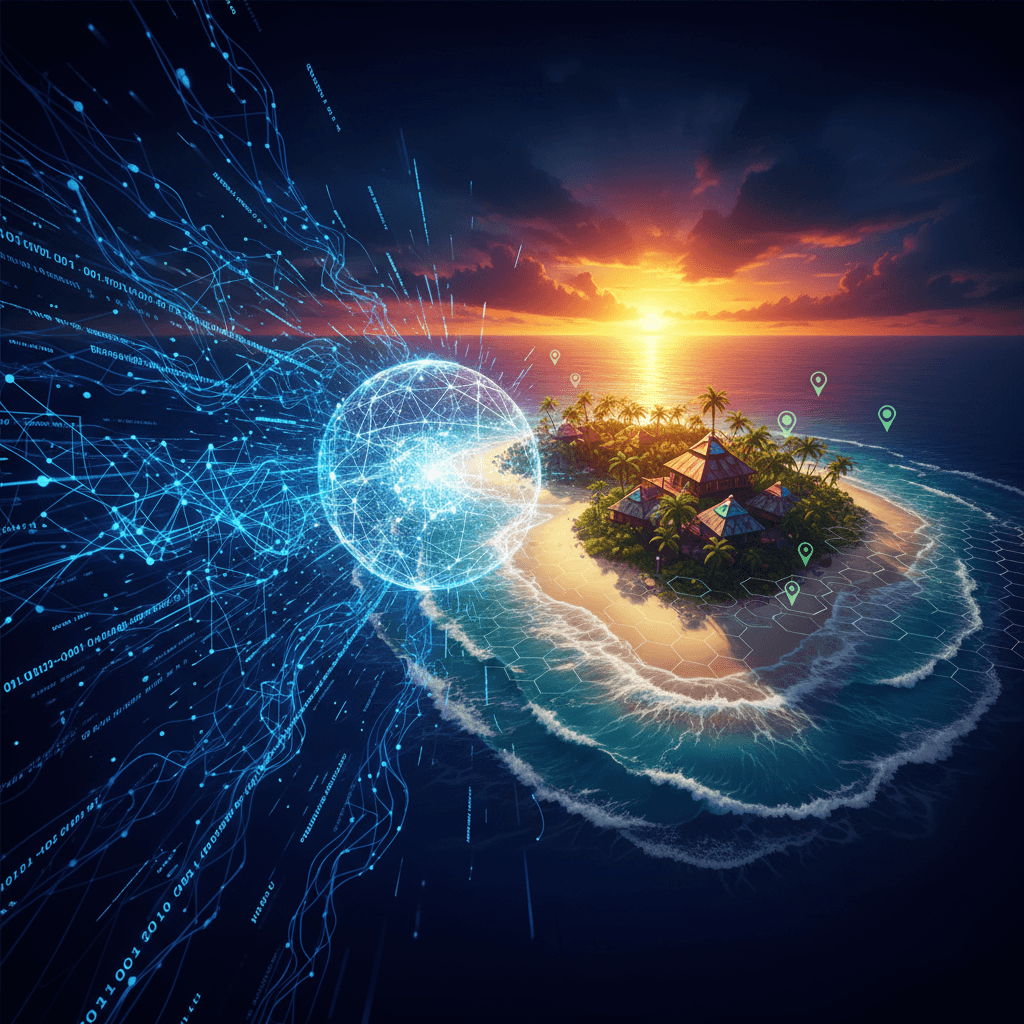

Project Genie is built on a "world model," which works differently from traditional game engines such as Unity or Unreal.[3] Rather than rendering pre-modeled 3D assets under hand-coded physics, Genie 3 generates each frame of the virtual world on the fly, predicting the environment and its dynamics from the user's input and the preceding frames.[3] Users can start a world from a text prompt, an image, or even a sketch, describing a scene such as a "tropical island at sunset with wooden huts and moving waves."[3][2] The model then turns that description into an explorable environment that responds to the user's actions.[2] Because generation happens in real time, users can walk around, change the weather, or spawn new objects and characters, and the world is regenerated continuously as they navigate and interact.[3][4] The prototype reportedly runs at 720p resolution and 24 frames per second, maintaining visual consistency for several minutes of continuous interaction.[3][4]
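The frame-by-frame loop described above can be sketched in a few lines of Python. This is a toy illustration, not Genie 3's implementation: `Frame`, `ACTIONS`, `predict_next_frame`, and `rollout` are hypothetical names, a "frame" is reduced to a player position, and the "model" is a hand-written rule standing in for a learned network. The sketch also works out the time budget implied by the reported 24 frames per second, assuming 720p means 1280x720 pixels.

```python
from dataclasses import dataclass

# Figures reported for the prototype; 720p is assumed to mean 1280x720 pixels.
FPS = 24
WIDTH, HEIGHT = 1280, 720
FRAME_BUDGET_MS = 1000 / FPS               # about 41.7 ms to produce each frame
PIXELS_PER_SECOND = WIDTH * HEIGHT * FPS   # 22,118,400 pixels generated per second


@dataclass(frozen=True)
class Frame:
    """Toy stand-in for a generated image: only the player's (x, y) position."""
    x: int
    y: int


# Hypothetical action set mapping user input to a displacement.
ACTIONS = {"forward": (0, 1), "back": (0, -1), "left": (-1, 0), "right": (1, 0)}


def predict_next_frame(history: list[Frame], action: str) -> Frame:
    """Hypothetical world model: (preceding frames, action) -> next frame.

    A real world model is a learned network conditioned on many past frames;
    this toy rule only looks at the most recent one and shifts it.
    """
    last = history[-1]
    dx, dy = ACTIONS[action]
    return Frame(last.x + dx, last.y + dy)


def rollout(first: Frame, actions: list[str]) -> list[Frame]:
    """Generate frames one at a time, feeding each output back in as context."""
    frames = [first]
    for action in actions:
        frames.append(predict_next_frame(frames, action))
    return frames
```

Calling `rollout(Frame(0, 0), ["forward", "forward", "right"])` returns four frames, ending at `Frame(x=1, y=2)`. The point of the structure is that nothing in the loop is precomputed: each frame exists only after the previous frames and the latest action have been fed back into the model, and all of that must fit inside roughly 41.7 ms per frame to sustain 24 fps.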

The technology behind Project Genie is the culmination of years of DeepMind research into foundation world models. The original Genie, introduced as a foundation model for playable 2D worlds, was trained on 200,000 hours of 2D platformer game footage collected from the internet.[5][6] Because this training was unsupervised, the model learned an action-controllable virtual space without labeled action data, inferring the link between visual changes and the movements that could cause them.[1][6] Its successor, Genie 2, scaled the approach to generate diverse, action-controllable 3D worlds and showed emergent capabilities such as complex character animation, physics modeling, and object interactions.[1][7][8] Genie 3, which powers the public prototype, focuses on higher-resolution generation and longer visual consistency.[1] DeepMind sees this progression, from a 2D platformer generator to a real-time 3D world simulator, as a crucial step toward general-purpose AI, because world models are essential for training AI agents to understand, navigate, and adapt to the complexity of the real world.[3][4]

The commercial and industrial implications of Project Genie extend beyond entertainment, pointing toward rapid digital prototyping and more scalable AI training. For the gaming industry, the technology could change how content is created, turning the first stage of world-building from a labor-intensive, multidisciplinary effort into a fast, conversational process: instead of teams of modelers and designers producing initial concepts, a playable, explorable space can be generated in minutes.[3] The ability to generate novel, unpredictable environments is also valuable for AI research. Embodied AI agents, meaning AI systems designed to operate in the physical world such as robots, need large numbers of varied training environments to test how well they adapt to unseen scenarios. By generating an effectively endless curriculum of rich simulations, complete with emergent physics and object interactions, Project Genie could cut development costs and timelines for companies building autonomous systems and advanced robotics.[3][7][4] Product Manager Diego Rivas noted that even in this early form, trusted testers uncovered "entirely new ways to use it," suggesting its usefulness extends to design, marketing, and engineering teams that need to visualize and interact with concepts quickly.[2]

Although the release of Project Genie to Google AI Ultra subscribers in the US is a significant milestone, the technology remains a research prototype with limitations inherent to foundation world models. Early versions of Genie, for example, had a short "memory," or horizon, of around 16 frames, which made long-term environmental consistency difficult to maintain.[9] Genie 3 improves on this and can hold a coherent environment for a few minutes, but its worlds can still show the "hallucination" weaknesses of other autoregressive transformer models, occasionally producing implausible futures or inconsistent dynamics.[9]

At the same time, the release is a deliberate move by Google DeepMind to stress-test the system with a large, diverse user base and gather data on how people interact with, and push the limits of, an emergent world model. This public experimentation is key to improving stability, visual fidelity, and latency, the main hurdles before the technology could mature into a widely adopted developer tool. Offering it to paying subscribers rather than to an academic or closed-beta audience suggests that Google is confident in the model's robustness and intends to bring this generation paradigm into its broader AI ecosystem quickly.[2][10] Project Genie is not just a new product feature but a public demonstration of a core AI capability that links language, vision, and interaction in a simulated world, and it positions DeepMind as a leader in the race toward general-purpose world simulation.[2]
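Why a short horizon such as the 16 frames reported for early Genie hurts consistency can be shown with a toy sliding window. The sketch assumes, for illustration only, that a model conditions on exactly its last 16 frames and nothing else; `context_snapshots` and `landmark_in_context` are hypothetical names, and the article does not say how Genie 3 extends its memory.

```python
from collections import deque


def context_snapshots(num_frames: int, horizon: int) -> list[list[int]]:
    """Return, for each step, the frame indices a fixed-horizon model can see."""
    window = deque(maxlen=horizon)  # the oldest index drops out once the window is full
    snapshots = []
    for t in range(num_frames):
        window.append(t)
        snapshots.append(list(window))
    return snapshots


def landmark_in_context(landmark_frame: int, num_frames: int, horizon: int) -> list[bool]:
    """For each step, is the frame where a landmark was seen still in context?"""
    return [landmark_frame in snap for snap in context_snapshots(num_frames, horizon)]


# A landmark seen at frame 0, with a 16-frame horizon (assumed, as described above).
flags = landmark_in_context(landmark_frame=0, num_frames=20, horizon=16)
first_forgotten = flags.index(False)  # step at which frame 0 leaves the window
```

Here `first_forgotten` is 16: from that step on, nothing in the model's input constrains the landmark to look the same if the user turns back, so it must be re-imagined, which is one way inconsistencies arise. At 24 fps, holding a scene together for several minutes spans thousands of frames, which suggests Genie 3 retains information far beyond a window this short.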

Sources

[2]

[3]

[4]

[5]

[6]

[9]

[10]