Tencent Open-Sources AI That Creates Immersive 3D Worlds from Prompts

Tencent's open-source AI generates complex 3D worlds from text in minutes, democratizing content creation.

July 28, 2025

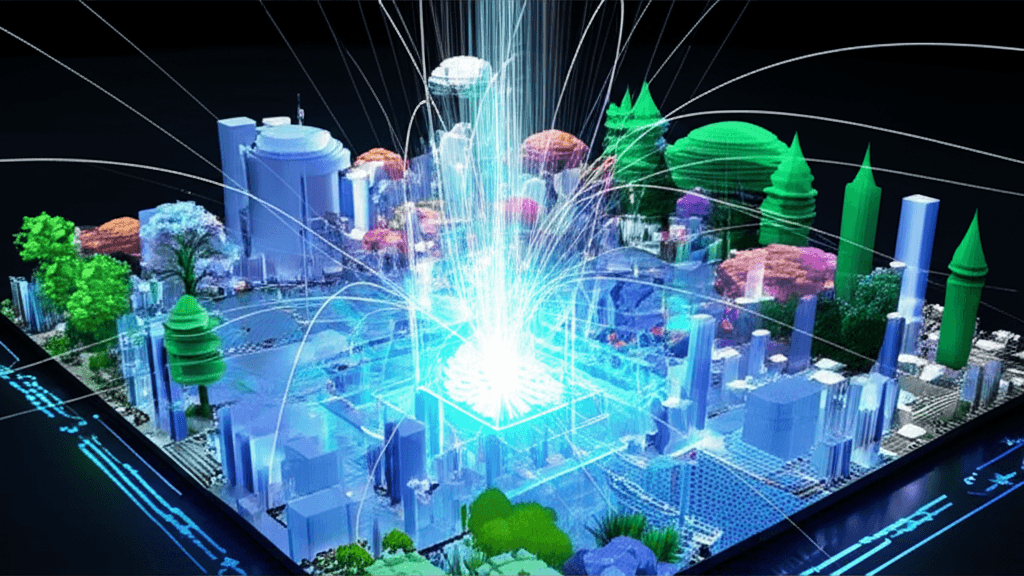

In a significant move for generative AI, Tencent has released Hunyuan World Model 1.0, an open-source artificial intelligence model that creates immersive, interactive three-dimensional worlds from simple text or image prompts.[1][2] By democratizing the tools needed to build complex virtual environments, the release could accelerate innovation in sectors including video game development, virtual reality, and digital content creation.[3][4] The model is publicly available on platforms such as GitHub and Hugging Face, and Tencent describes it as the industry's first open-source world generation model that is also compatible with standard computer graphics pipelines, so its output can be integrated into existing workflows.[1][5][6]

At its core, Hunyuan World Model 1.0 addresses a fundamental trade-off in generative AI between the visual richness of video-based generation and the geometric consistency of 3D-based methods.[3][7] Existing approaches have typically specialized in one or the other: video diffusion models produce dynamic but often inconsistent scenes, while 3D-based models offer structural coherence but can lack visual detail and demand large amounts of memory.[3][7] Tencent's framework bridges this gap with a "semantically layered 3D mesh representation" that uses panoramic images as 360-degree proxies for the virtual world.[3][6] The model decomposes a scene into distinct layers, such as sky, background, and foreground objects, and then reconstructs them into a coherent, editable 3D space.[7][4] This layered approach is a key innovation because it lets users manipulate individual elements of the generated scene, something often missing from models that produce a single, fused mesh.[2][7]
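A panorama can serve as a 360-degree proxy because every pixel of an equirectangular image corresponds to one viewing direction around a fixed camera. The minimal Python sketch below shows that mapping. The function name `pixel_to_direction` and the axis convention (y up, +z at the image centre) are illustrative assumptions, not details published for Hunyuan World Model 1.0.

```python
import math


def pixel_to_direction(u, v, width, height):
    """Map equirectangular pixel (u, v) to a unit 3D viewing direction.

    Assumed (hypothetical) convention: longitude runs from -pi to pi across
    the image width, latitude from +pi/2 (top) to -pi/2 (bottom), y is up,
    and the image centre looks along +z. Pixel centres sit at +0.5.
    """
    lon = (u + 0.5) / width * 2.0 * math.pi - math.pi
    lat = math.pi / 2.0 - (v + 0.5) / height * math.pi
    x = math.cos(lat) * math.sin(lon)
    y = math.sin(lat)
    z = math.cos(lat) * math.cos(lon)
    return (x, y, z)


# The centre of an 8 x 4 panorama looks straight ahead along +z.
print(pixel_to_direction(3.5, 1.5, 8, 4))  # (0.0, 0.0, 1.0)
```

Because longitude spans the full image width and latitude the full height, the pixels together cover the whole sphere of directions, which is why a single panorama can stand in for everything visible from one point.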

The technical process behind Hunyuan World Model 1.0 runs in several stages.[7] It begins by generating a full 360-degree panoramic image from the user's text or image input.[7] The system then performs semantic decomposition, separating the panorama into constituent parts such as terrain, buildings, and other objects.[7][4] Next, layer-wise depth estimation calculates how far each of these elements is from the viewpoint, giving the scene genuine three-dimensional structure.[7] From this data, the model constructs the final 3D meshes, producing a navigable, interactive environment.[1][7] The output can be exported as 3D mesh files compatible with popular game engines such as Unity and Unreal Engine, as well as modeling software such as Blender.[1][5][4] This direct compatibility streamlines the creative process, letting developers move from concept to working prototype far faster than before.[4] Tasks that traditionally took professional modeling teams weeks could potentially be completed in minutes.[4]
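The depth-to-mesh stages described above can be illustrated with a simplified, hypothetical sketch: each pixel belonging to one semantic layer is pushed out along its viewing ray by its estimated depth, neighbouring pixels are joined into triangles, and the result is written as Wavefront OBJ text. The function names, the per-pixel label grid, and the choice of OBJ are assumptions for illustration only; Tencent's actual meshing method and export format are not specified here, though OBJ is one format that Blender, Unity, and Unreal Engine can all import.

```python
import math


def pixel_to_direction(u, v, width, height):
    """Equirectangular pixel -> unit direction (assumed: y up, +z at image centre)."""
    lon = (u + 0.5) / width * 2.0 * math.pi - math.pi
    lat = math.pi / 2.0 - (v + 0.5) / height * math.pi
    return (math.cos(lat) * math.sin(lon), math.sin(lat), math.cos(lat) * math.cos(lon))


def layer_to_mesh(depth, labels, layer):
    """Build a triangle mesh for one semantic layer (hypothetical helper).

    depth  -- height x width nested list of per-pixel distances
    labels -- nested list of the same shape holding a layer name per pixel
    layer  -- the layer to extract, e.g. "foreground"
    """
    height, width = len(depth), len(depth[0])
    index = {}  # (u, v) pixel -> vertex index, only for pixels in this layer
    vertices = []
    # Unproject each pixel of the layer along its viewing ray by its depth.
    for v in range(height):
        for u in range(width):
            if labels[v][u] == layer:
                dist = depth[v][u]
                x, y, z = pixel_to_direction(u, v, width, height)
                index[(u, v)] = len(vertices)
                vertices.append((x * dist, y * dist, z * dist))
    faces = []
    # Split each 2 x 2 pixel cell into two triangles, but only when all
    # four corners belong to the layer (so layers stay separate meshes).
    for v in range(height - 1):
        for u in range(width - 1):
            corners = ((u, v), (u + 1, v), (u, v + 1), (u + 1, v + 1))
            if all(p in index for p in corners):
                i00, i10, i01, i11 = (index[p] for p in corners)
                faces.append((i00, i01, i10))
                faces.append((i10, i01, i11))
    return vertices, faces


def to_obj(vertices, faces):
    """Serialize a mesh as Wavefront OBJ text (OBJ face indices are 1-based)."""
    lines = [f"v {x:.6f} {y:.6f} {z:.6f}" for x, y, z in vertices]
    lines += [f"f {a + 1} {b + 1} {c + 1}" for a, b, c in faces]
    return "\n".join(lines) + "\n"


# Usage: a tiny 3 x 4 panorama at constant depth 2.0 with one sky pixel.
depth = [[2.0] * 4 for _ in range(3)]
labels = [["foreground"] * 4 for _ in range(3)]
labels[0][0] = "sky"
verts, faces = layer_to_mesh(depth, labels, "foreground")
print(len(verts), len(faces))  # 11 10
```

Keeping one mesh per layer is what makes individual elements editable after generation. The sketch ignores the seam where the panorama's left and right edges meet and performs no smoothing or hole filling behind foreground objects; a production pipeline would need to handle both.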

By releasing Hunyuan World Model 1.0 as open source under a permissive community license, Tencent aims to foster a collaborative ecosystem for both academic and commercial use.[1][3] The strategy reflects a broader industry trend in which open-source development is seen as a catalyst for rapid innovation, transparency, and skill development.[8][9] The open-source version is built on the Flux image generator but is designed to be adaptable to other models such as Stable Diffusion.[3][6] Tencent says the model outperforms other open-source alternatives in generation quality, texture detail, and adherence to user prompts.[1][4] The release is part of a wider generative AI push by the company that also includes the recently launched Hunyuan3D-PolyGen model for faster 3D content creation and the CodeBuddy IDE for AI-assisted coding.[1]

The implications of this release are far-reaching. For the gaming industry, the model offers a tool for rapid prototyping and level design that can drastically reduce asset creation time and cost.[4] In virtual and augmented reality, it enables immersive 360-degree environments that can be experienced with headsets.[3][4] Beyond entertainment, the technology could be used in robotics to train robots in realistic virtual simulations.[3] The current iteration primarily generates interactive 360-degree panoramas with somewhat limited free movement, but it is a step toward fully explorable, persistent virtual worlds generated on demand.[2][10] As the open-source community builds on and refines the Hunyuan World Model, it could speed up progress in 3D generative AI and digital content creation.

Sources

[4]

[5]

[6]

[7]

[9]

[10]