Responsible AI Matures, But Data Quality and Hallucinations Threaten Widespread Adoption

Progress on RAI frameworks is strong, but data quality, regulatory gaps, and AI hallucinations impede widespread deployment.

January 22, 2026

The business imperative for ethical and responsible Artificial Intelligence has solidified within the enterprise landscape, yet a new report from Nasscom highlights a persistent dichotomy: a majority of AI-ready firms are maturing their Responsible AI (RAI) frameworks, but significant technical and regulatory challenges continue to impede wholesale adoption. The findings reveal that nearly 60 percent of businesses confident in their ability to scale AI responsibly have established mature RAI frameworks, demonstrating a strong correlation between an organization's overall AI capability and its commitment to responsible governance practices.[1][2] This momentum marks a clear year-on-year progression: approximately 30 percent of all surveyed organizations now report mature RAI practices, and an additional 45 percent are actively implementing formal frameworks, signaling a substantial shift of Responsible AI from an optional ethical discussion to a core strategic priority.[3][2] The industry’s focus has moved beyond mere awareness to tangible implementation, driven by the need to build stakeholder trust and ensure long-term value creation as AI systems become embedded in critical business decisions.

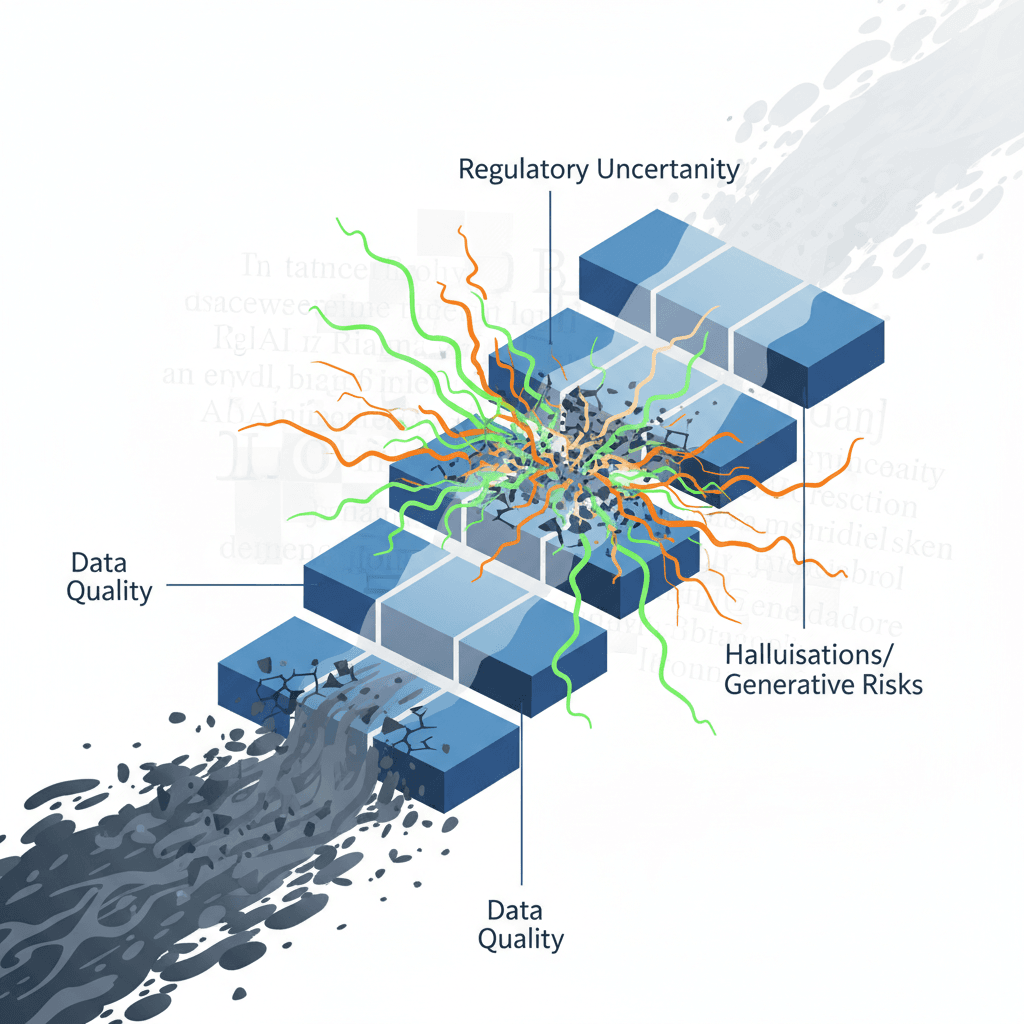

Despite the ecosystem's clear advancement, the report underscores crucial barriers that prevent effective, widespread implementation of Responsible AI principles. Chief among them is the persistent lack of access to high-quality data, cited as a primary obstacle by 43 percent of respondents.[3][4][5] Poor data quality directly undermines the core tenets of RAI: it risks introducing bias, reduces model explainability, and compromises the accuracy of AI-driven decisions. Because advanced AI systems rely on massive, diverse, and clean datasets, foundational data infrastructure and data governance policies must be prioritized to sustain the current rate of maturity. Without robust, high-quality data pipelines, even the most sophisticated RAI frameworks will struggle to prevent adverse outcomes in deployed applications. This challenge is compounded by another cited barrier: the shortage of skilled technical and management personnel, noted by 15 percent of businesses.[3][4] To address this, the industry is making proactive investments, as nearly 90 percent of organizations with mature RAI practices are committed to continued investments in workforce sensitisation and compliance training.[2]

The advent of large language models and the accelerated adoption of generative AI systems have introduced new, pronounced operational risks, chief among them AI hallucinations. The report indicates that hallucinations are the most frequently experienced AI risk, reported by 56 percent of businesses.[3][4][5] This finding highlights a critical vulnerability in the current generation of generative AI tools, where models produce factually incorrect or nonsensical outputs, posing significant risks to applications ranging from customer service bots to medical diagnostics. Alongside hallucinations, other common risks include privacy violations, reported by 36 percent, a lack of model explainability, noted by 35 percent, and unintended bias or discrimination, which affects 29 percent of businesses.[3][4][5] The high prevalence of these risks suggests that while firms are establishing frameworks, the integration of risk mitigation techniques, such as adversarial testing, robust monitoring, and post-deployment auditing, is still a work in progress. Existing RAI policies will require substantial updates to adequately address the novel and rapidly evolving risks associated with autonomous and agentic AI systems.

A separate, yet intertwined, challenge impeding RAI’s smooth ascent is the prevailing regulatory uncertainty, cited as a key barrier by 20 percent of firms.[3][4][5] The rapid pace of AI innovation has outstripped the speed of legislative and regulatory response globally, leaving enterprises to navigate a complex, fragmented, and often ambiguous compliance landscape. The lack of clear, uniform legal standards for issues like data usage, model accountability, and liability creates hesitation for companies looking to make large-scale, long-term investments in AI, especially in highly regulated sectors like Banking, Financial Services, and Insurance (BFSI). Interestingly, the BFSI sector currently leads in RAI maturity at 35 percent, followed by Technology, Media, and Telecommunications (TMT) at 31 percent, and healthcare at 18 percent, indicating that industries already accustomed to high regulatory scrutiny are adapting more quickly.[3] For large enterprises and startups, regulatory uncertainty remains a significant concern, while small and medium-sized enterprises (SMEs) cite high implementation costs as a critical constraint, highlighting a diverse set of obstacles based on organizational size.[3][5] To counter these obstacles, accountability structures are becoming more formalized, with 48 percent of organizations placing primary responsibility at the C-suite or board level, and 65 percent of mature organizations establishing formal AI ethics boards and committees to govern their practices.[3]

The implications of the report for the broader AI industry are profound, suggesting that Responsible AI is not merely an overhead cost but a prerequisite for scalable, confident AI deployment. The finding that AI-ready firms with mature frameworks are better prepared for emerging technologies, such as Agentic AI, solidifies the argument that robust governance is an enabler of innovation, not a brake on it.[3][4] As AI systems deepen their autonomy, the industry’s ability to build trustworthy, human-centric solutions hinges on closing the gaps identified in data quality and mastering the new technical risks posed by generative models. The ongoing, concerted efforts in workforce enablement, alongside the strengthening of governance mechanisms like AI ethics boards, point toward a future where businesses are working to embed responsibility across the entire AI lifecycle. For the industry to truly accelerate safe AI adoption and position itself as a global reference point for trustworthy AI, sustained investment and a collaborative approach to resolving the technical and regulatory ambiguities will be essential. This measured progress, tempered by the stark reality of persistent challenges, defines the current state of Responsible AI adoption as a journey of continuous improvement rather than a destination already reached.[3]