OpenAI pivots from brute force, pioneers new sustainable AI scaling laws.

OpenAI's financial surge battles colossal AI costs and technical plateaus, demanding a new, sustainable path for intelligence.

October 18, 2025

OpenAI, the trailblazer in the artificial intelligence boom, is navigating a precarious dual challenge that will define its future and that of the wider AI industry. The company is experiencing a meteoric rise in revenue, a growth trajectory unlike almost anything seen before in the technology sector, with projections soaring from $3.7 billion in 2024 to a target of $12.7 billion in 2025.[1][2] This explosive financial scaling, however, is not just a measure of success but a sheer necessity, driven by an equally demanding and perhaps unsustainable scaling law on the technology side: the colossal and ever-growing cost of creating and operating the very AI models that generate its income.

The financial numbers surrounding OpenAI are staggering and paint a picture of a company making a high-stakes gamble. While annualized revenue reached an estimated $13 billion by mid-2025, the company's expenses are growing just as fast, if not faster.[3] Reports indicated that OpenAI's operational costs could reach $8.5 billion in 2024, with a cash burn of around $8 billion expected in 2025.[4][5] The result has been substantial losses, including a reported operating loss of around $5 billion for 2024.[6] The primary revenue drivers are subscriptions to premium versions of its popular ChatGPT product, which account for the majority of income, and fees that developers and businesses pay to access its models via an API.[4][3] Although ChatGPT attracts hundreds of millions of weekly active users, only a small fraction are paying subscribers, which puts immense pressure on the company to convert more users and expand its enterprise clientele to justify its valuation and cover its costs.[7][8]

This relentless pursuit of revenue is tied directly to the foundational principle that has guided AI development for years: the scaling laws. First articulated by researchers at OpenAI, these laws describe a predictable relationship: increasing a model's size (the number of its parameters), the volume of data it is trained on, and the computational power used for training reliably improves its performance.[4][9] This belief fueled a race to build ever-larger models, but the strategy is now facing significant headwinds. The cost of training frontier AI models has exploded, with estimates for systems like GPT-4 running into the hundreds of millions of dollars, and future models projected to cost a billion dollars or more to train.[10] These costs are driven by the need for immense computational power, primarily from specialized and expensive GPUs, and by the massive amount of energy required to run them.[6][11]

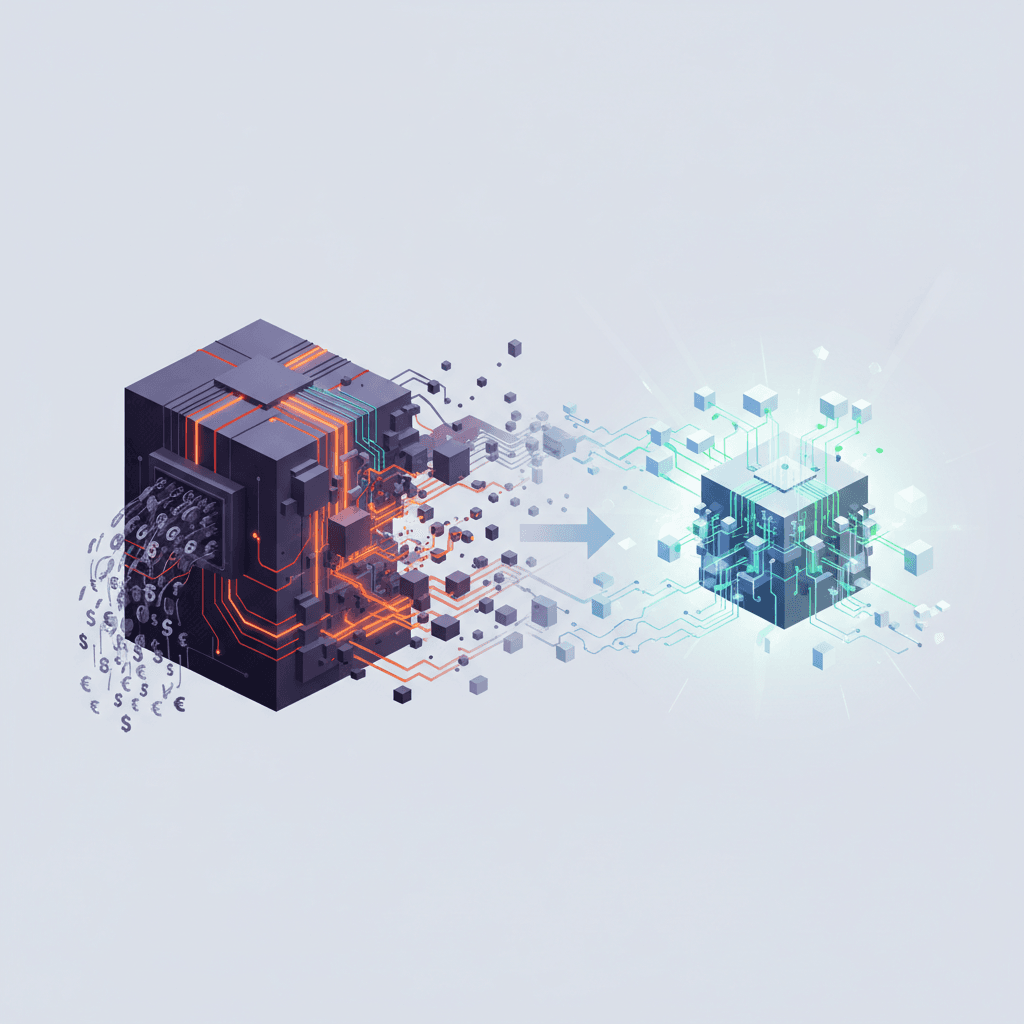

The industry is now confronting the reality of diminishing returns. Doubling the training cost for a large language model may now yield only a marginal improvement in quality.[12] Furthermore, the well of high-quality training data from the internet is finite and has been largely consumed, forcing researchers to explore more complex and expensive alternatives like synthetic data.[1][13] This has led to a growing consensus that the original scaling hypothesis, predicated on simply making everything bigger, is hitting a plateau. The performance jump from GPT-3 to GPT-4, for instance, was far more significant than subsequent improvements, suggesting that future gains will not come from scale alone.[1] This reality is forcing a strategic pivot, not just for OpenAI but for the entire field, away from scaling up model size alone and toward more nuanced and efficient approaches.
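The diminishing returns described above can be made concrete with a toy power law. The sketch below is an illustration only: the functional form (loss proportional to compute raised to a small negative exponent) is the general shape associated with scaling laws, while the exponent `alpha = 0.05`, the reference constant `c_ref`, and the function names are assumptions chosen for the example, not figures from this article or from OpenAI.

```python
def loss_from_compute(compute: float, alpha: float = 0.05, c_ref: float = 1.0) -> float:
    """Toy scaling curve: loss falls as (c_ref / compute) ** alpha.

    alpha and c_ref are illustrative assumptions, not measured values.
    """
    if compute <= 0:
        raise ValueError("compute must be positive")
    return (c_ref / compute) ** alpha


def relative_gain_from_doubling(alpha: float = 0.05) -> float:
    """Fraction by which the toy loss shrinks when compute is doubled.

    Under a pure power law this is 1 - 2 ** -alpha, regardless of the
    starting compute budget.
    """
    return 1.0 - 2.0 ** (-alpha)


if __name__ == "__main__":
    # Each doubling of compute buys the same small relative improvement,
    # while the absolute cost of that doubling keeps growing.
    for budget in (1.0, 2.0, 4.0, 8.0):
        print(f"compute x{budget:>3.0f}: toy loss = {loss_from_compute(budget):.4f}")
    print(f"relative gain per doubling: {relative_gain_from_doubling():.1%}")
```

With an exponent this small, every doubling of the budget trims the toy loss by only a few percent, which is the pattern the paragraph describes: costs compound while improvements stay marginal.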

In response to these challenges, a new set of scaling principles is emerging. Rather than only pre-training ever-larger models, researchers are turning to post-training optimization, smarter model architectures, and scaling up reasoning capabilities at the point of use, often called "inference-time compute."[4][14] This involves designing models that can "think" longer about a problem to produce a better answer, a move away from the brute-force method of simply expanding the model's static size.[4] This evolution is critical for OpenAI's long-term viability. For its financial model to work, the cost of running its services must decrease while capabilities continue to improve. The company's future success depends on inventing a new, more sustainable scaling law for its technology that doesn't demand a geometrically expanding budget for compute and data.
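One simple way to see why extra compute at inference can help is majority voting over several independent attempts at the same question. The sketch below is a stand-in for the general idea, not a description of how OpenAI's models reason: it assumes a yes/no question, a fixed per-attempt accuracy `p`, and fully independent attempts, and the function name is hypothetical.

```python
from math import comb


def majority_vote_accuracy(p: float, n: int) -> float:
    """Probability that a strict majority of n independent attempts is correct.

    Assumes a binary question and that each attempt is right with
    probability p, independently of the others (a strong simplification).
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    if n < 1 or n % 2 == 0:
        raise ValueError("n must be a positive odd number to avoid ties")
    # Sum the binomial probabilities of getting more than half the attempts right.
    return sum(
        comb(n, k) * p**k * (1.0 - p) ** (n - k)
        for k in range(n // 2 + 1, n + 1)
    )


if __name__ == "__main__":
    # More attempts per question means more inference compute per answer.
    for attempts in (1, 3, 5, 9):
        acc = majority_vote_accuracy(0.6, attempts)
        print(f"{attempts} attempt(s): accuracy = {acc:.3f}")
```

Under these assumptions the model itself never changes size; accuracy rises only because more computation is spent per answer, which is the trade that inference-time scaling makes. The gain appears only when each attempt is right more often than not.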

Ultimately, OpenAI finds itself in a race to scale its revenue faster than its colossal expenses, while simultaneously rewriting the technical rulebook that brought it to this point. The initial scaling laws powered the AI revolution, but they have also created a business model that burns through billions of dollars. The company's ability to pioneer more efficient, powerful, and economically viable ways to scale its AI will determine whether it can build a sustainable enterprise out of its groundbreaking technology. The entire industry is watching, because OpenAI's quest for new scaling laws, for both its models and its revenue, will likely chart the course for the next chapter of artificial intelligence.

Sources

[2]

[3]

[6]

[7]

[9]

[10]

[12]

[13]