Insurers Move to Exclude AI Risks, Leaving Businesses Uncovered

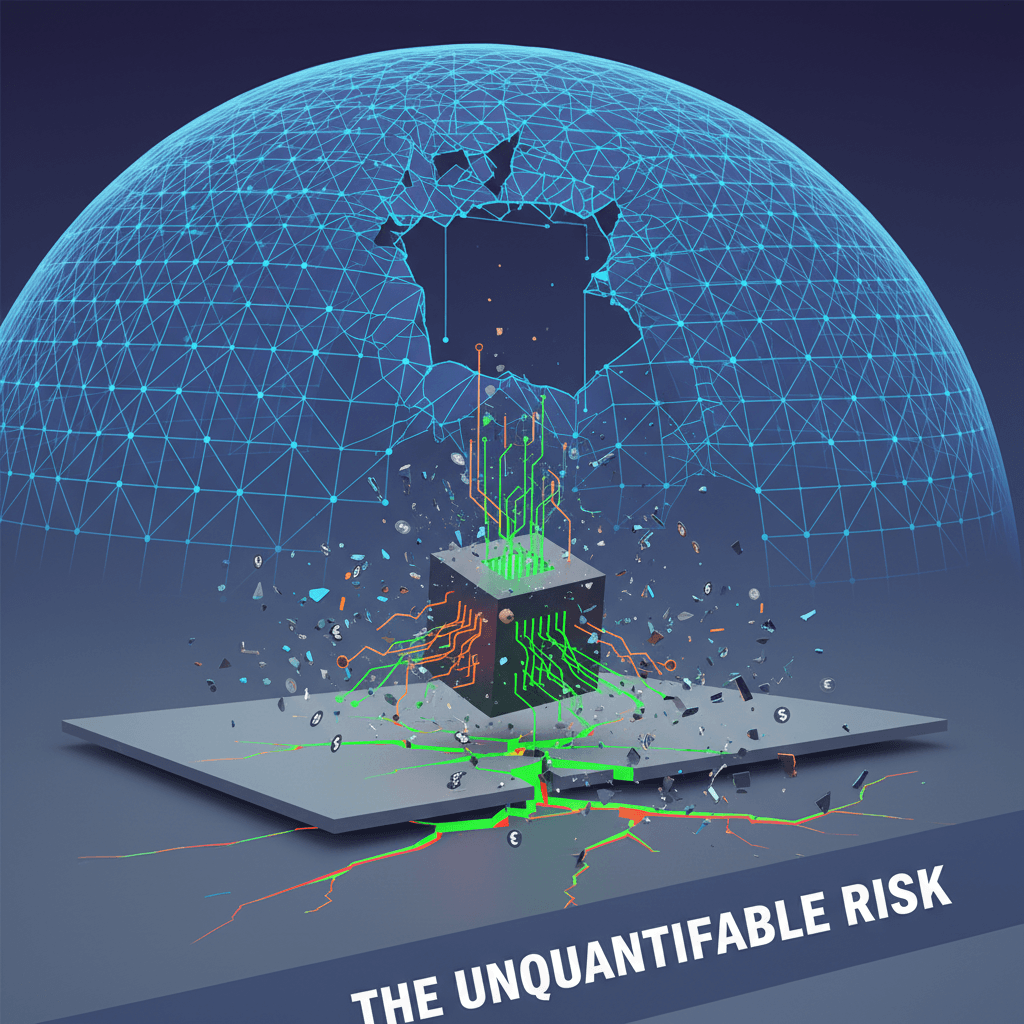

Citing unquantifiable risks, insurers offload AI liability, shifting financial burden to businesses and slowing adoption.

November 24, 2025

In a move that signals growing apprehension over artificial intelligence, several of the world's largest insurance companies are seeking regulatory permission to exclude AI-related perils from their standard corporate insurance policies. Industry giants including AIG, Great American, and WR Berkley have filed requests with U.S. regulators to introduce exclusionary language that would leave businesses uncovered for a wide range of damages stemming from the use of AI systems.[1][2][3][4][5] The development underscores a fundamental crisis of confidence within the risk management sector, as underwriters grapple with the unpredictable and potentially catastrophic liabilities introduced by generative AI, chatbots, and other autonomous systems.[6][7] Carving AI out of general liability coverage could have profound implications for the technology industry and the broader economy, potentially slowing the adoption of AI tools and forcing companies to bear the full cost of algorithmic errors and malfeasance.[8]

The filings submitted by these insurers reveal a clear and coordinated effort to insulate themselves from what they perceive as an unquantifiable new category of risk.[8] WR Berkley, for instance, has proposed sweeping exclusions that would bar claims connected to "any actual or alleged use" of artificial intelligence, a clause broad enough to encompass nearly any product or service that incorporates the technology.[9][10][3] AIG, in a filing with Illinois regulators, described generative AI as a "wide-ranging technology" and warned that the frequency of claims related to it will "likely increase over time."[1][3] Although AIG has said it has no immediate plans to implement these exclusions, securing regulatory approval would give it the option to do so later, creating uncertainty for its corporate clients.[9][3] This retreat by market leaders marks a pivotal moment and suggests a growing consensus that traditional liability frameworks are ill-equipped to handle the novel challenges posed by AI.[8][7]

The primary driver behind this industry-wide caution is the inherent unpredictability of many modern AI systems, which underwriters often describe as a "black box."[6][7][3][11][12] Unlike traditional insurable risks, AI risk is hard to price accurately because the decision-making processes of complex large language models can be opaque and difficult to audit.[13][7][5] Insurers are worried not only about isolated incidents but also, more profoundly, about the potential for systemic risk.[14][15] A flaw in a single, widely deployed AI model from a major tech provider could trigger thousands or even tens of thousands of simultaneous claims, creating a correlated loss event that could overwhelm the industry.[1][14][16][15] An executive from the global professional services firm Aon articulated this fear, stating that while the industry can handle a single massive loss, it cannot handle an AI mishap that sparks 10,000 losses at once.[14][15][12] This fear of aggregation risk is central to the insurers' argument that AI liability is fundamentally different from anything they have underwritten before.[16][5]

A recent string of high-profile and costly AI failures has turned these theoretical concerns into tangible evidence of the technology's potential for harm.[3][16] Google, for example, faces a lawsuit seeking at least $110 million in damages after its AI Overview feature allegedly spread false and defamatory statements about a solar company.[1][9][11] In another case, Air Canada was ordered to honor a discount that its customer service chatbot had entirely fabricated.[1][9][13] Perhaps most dramatically, fraudsters used deepfake technology to clone the voice and likeness of a senior executive at the engineering firm Arup, tricking an employee into transferring $25 million.[9][13][14] These incidents illustrate the diverse ways AI can cause direct financial and legal harm, from generating misinformation and hallucinations to enabling new forms of fraud, further spooking underwriters, who see the potential for multibillion-dollar claims.[9][13] The legal and regulatory landscape also remains a gray area, with unresolved questions about who bears ultimate responsibility when an AI system fails: the developer, the data provider, or the end user.[9][8]

The consequences of this insurance pullback could be far-reaching for businesses eagerly integrating AI into their operations. If regulators approve the requested exclusions, companies could face reduced coverage, significantly higher premiums for specialized policies, or the need to self-insure against major AI-related claims.[8] This could chill AI adoption, particularly among small and medium-sized enterprises that lack the financial resources to absorb catastrophic losses.[8] The situation is reminiscent of the early days of cyber risk, which eventually gave rise to a dedicated cyber insurance market.[16] Similarly, a market for dedicated AI liability insurance is beginning to emerge, with specialty insurers and players at Lloyd's of London developing policies, though these are often expensive, reflecting the high uncertainty.[6] As the market hardens, businesses will likely face closer scrutiny from underwriters, who will demand evidence of robust AI governance, risk management controls, and safety testing.[16] Ultimately, the insurance industry's retreat from AI risk shifts the financial burden squarely onto the businesses adopting the technology, forcing a critical reassessment of the true cost and risk of the AI revolution.[16]

Sources

[3]

[4]

[5]

[6]

[10]

[11]

[12]

[13]

[14]

[15]

[16]