AI's Cloud Cost Crisis Forces APAC Enterprises to Edge Computing

APAC companies rethink AI as spiraling cloud costs and latency drive a critical shift to edge computing for inference.

November 24, 2025

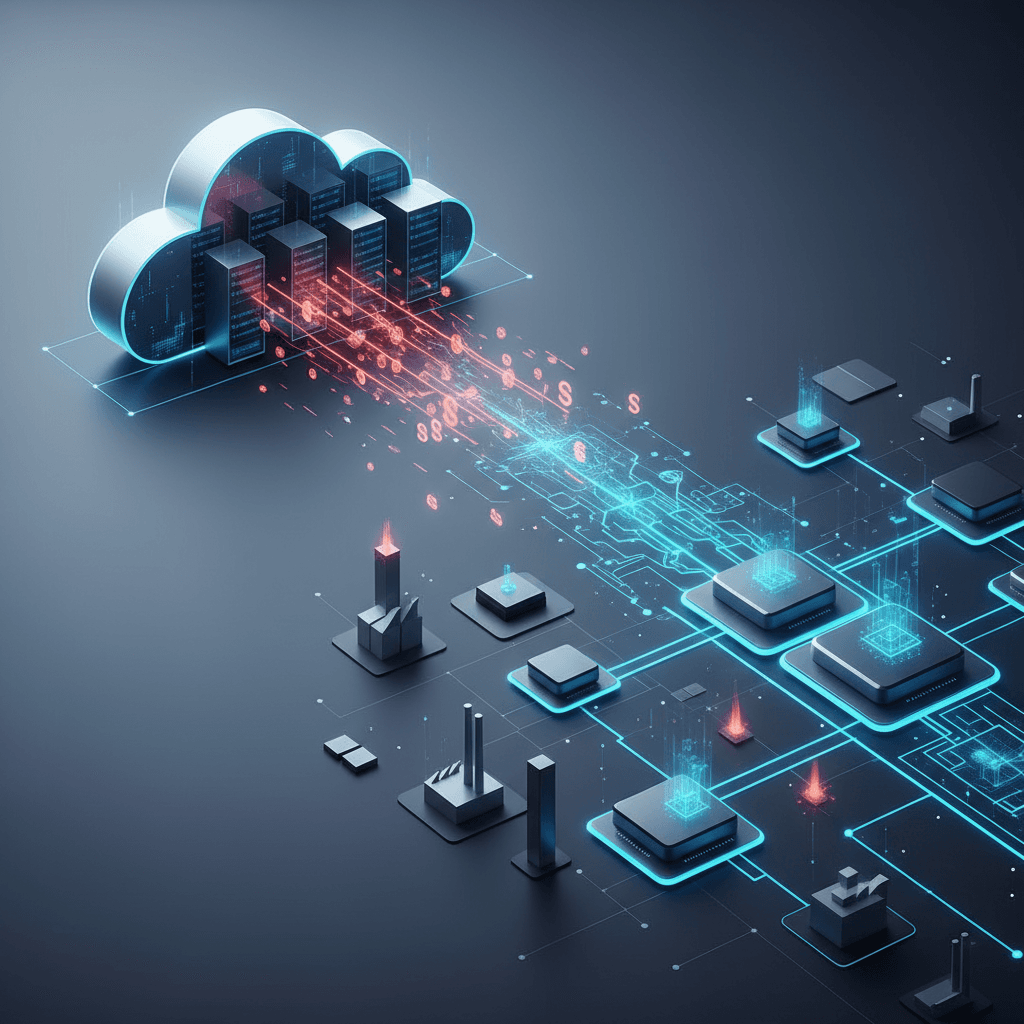

A wave of strategic rethinking is sweeping across the Asia-Pacific region as enterprises grapple with the spiraling costs and performance limitations of running artificial intelligence applications. Despite soaring AI investments, projected to reach US$175 billion by 2028, many companies are failing to realize the expected returns from their AI projects.[1] The bottleneck, many are discovering, lies not in the AI models themselves, but in the centralized cloud infrastructure traditionally used to deploy them. The intensive, ongoing process of AI inference—using a trained model to make real-time predictions—is proving to be prohibitively expensive and slow in the cloud, forcing a pivotal shift of AI infrastructure towards the edge.

The challenge stems from the fundamental nature of modern AI applications, which demand instantaneous responses to function effectively. Centralized cloud architectures, which powered the digital transformation of the last decade, are often ill-suited for these real-time demands.[1] When data has to travel hundreds or thousands of kilometers to a remote data center for processing, the resulting latency can render applications like generative AI chatbots, autonomous vehicles, and real-time financial fraud detection ineffective.[2][3][1] This delay is compounded by the sheer cost of AI inference, which, unlike the one-off cost of training a model, is a constant and resource-intensive operational expense.[4] Running powerful GPUs around the clock in the cloud generates massive and often unpredictable bills, while data ingress and egress fees add another layer of expense.[3][5][6] Furthermore, the immense power required for these large-scale AI data centers is straining energy grids and driving up operational costs, with AI workloads expected to increase power usage in APAC data centers by 165% by 2030.[2][7]
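To put the distance problem in rough numbers, the sketch below estimates the best-case round-trip delay that distance alone adds. It assumes signals travel through optical fiber at about 200,000 km/s (roughly two-thirds the speed of light in a vacuum) and ignores routing, queuing, and processing time, so real-world latency would be higher. The function name and the sample distances are illustrative, not taken from the reports cited above.

```python
# Approximate signal speed in optical fiber (about two-thirds of c).
FIBER_SPEED_KM_PER_S = 200_000.0


def round_trip_propagation_ms(distance_km: float) -> float:
    """Best-case round-trip propagation delay over fiber, in milliseconds.

    Covers propagation only; routing hops, queuing, and model
    execution time are deliberately left out.
    """
    if distance_km < 0:
        raise ValueError("distance_km must be non-negative")
    return 2 * distance_km * 1000.0 / FIBER_SPEED_KM_PER_S


if __name__ == "__main__":
    # Illustrative distances: a nearby edge site, a regional data
    # center, and a distant cross-border cloud region.
    for distance_km in (10, 500, 3000):
        delay = round_trip_propagation_ms(distance_km)
        print(f"{distance_km:>5} km -> {delay:.2f} ms round trip")
```

Even under these idealized assumptions, a request served from 3,000 km away spends about 30 ms in transit before any computation happens, while a site 10 km away adds about 0.1 ms.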

In response to these pressures, enterprises are increasingly turning to edge computing, moving AI processing closer to where data is generated and actions are taken.[8][9] This architectural shift directly addresses the core problems of latency and cost. By processing data locally on edge servers or devices, organizations can achieve the split-second responses necessary for real-time AI services.[1] This move also provides a pathway to more predictable cost management by reducing reliance on expensive cloud bandwidth and compute resources.[10][11] Beyond performance and cost, the fragmented and stringent regulatory landscape of the Asia-Pacific region makes the edge a compelling proposition for data security and compliance.[1] Processing and storing sensitive data locally helps organizations meet complex data sovereignty and privacy requirements that can be difficult to satisfy with a centralized cloud approach.[3][1] The momentum behind this transition is clear, with one IDC report predicting that 80% of CIOs in APAC will utilize edge services for their AI inferencing workloads by 2027.[10][11]
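The cost argument can be framed as a simple break-even calculation: how many hours of always-on inference it takes before the upfront cost of edge hardware is offset by cloud fees avoided. The sketch below is a simplified model, and every figure in it is a hypothetical placeholder rather than a number from the cited reports. It also ignores staffing, hardware refresh cycles, and utilization below 100%.

```python
import math


def break_even_hours(edge_capex: float,
                     edge_opex_per_hour: float,
                     cloud_rate_per_hour: float) -> float:
    """Hours of continuous inference after which edge hardware pays off.

    Returns math.inf when the cloud is no more expensive per hour than
    running the edge hardware, since the upfront cost is then never
    recovered.
    """
    hourly_saving = cloud_rate_per_hour - edge_opex_per_hour
    if hourly_saving <= 0:
        return math.inf
    return edge_capex / hourly_saving


if __name__ == "__main__":
    # Hypothetical inputs, for illustration only.
    hours = break_even_hours(
        edge_capex=30_000.0,       # upfront cost of an edge GPU server
        edge_opex_per_hour=0.50,   # power, cooling, and hosting per hour
        cloud_rate_per_hour=3.00,  # on-demand cloud GPU rate per hour
    )
    print(f"Break-even after {hours:,.0f} hours "
          f"({hours / 24:,.0f} days of 24/7 operation)")
```

With these placeholder inputs the break-even point falls at 12,000 hours, or 500 days of round-the-clock operation. The result is highly sensitive to the assumed rates, which is why the calculation is worth rerunning with an organization's own figures.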

This "Edge Evolution" is not merely a forecast but a reality taking shape across the region, with distinct adoption patterns emerging.[10] In India, businesses are actively building out edge capabilities in tier 2 and 3 cities to support generative AI demand while managing costs.[10][11] China has seen a significant number of enterprises move generative AI into production, supported by a combination of public cloud and growing edge investments.[11][12] However, the transition is not without its hurdles. Enterprises face the complexity of managing a distributed network of systems and ensuring interoperability between diverse hardware and software.[13][9] Moreover, there are concerns about whether local power grids and supply chains are fully prepared to support the advanced cooling and energy requirements of high-density AI infrastructure at the edge.[7] These challenges are fostering a consensus around a hybrid strategy, in which the cloud remains the domain for the heavy lifting of training massive AI models, while the edge is leveraged for fast, efficient, and secure inference.[1]

Ultimately, the migration of AI infrastructure to the edge represents a critical adaptation for enterprises in the APAC region. The limitations of a purely cloud-centric model have become a barrier to unlocking the full potential of AI investments. The rising costs of inference, coupled with the non-negotiable demand for low latency and the complexities of regional data regulations, have made the shift inevitable. For companies navigating this new landscape, the future is not a choice between the cloud and the edge, but a strategic integration of both. By embracing a hybrid model that leverages the strengths of centralized training and distributed inference, organizations can build a more efficient, resilient, and cost-effective foundation to finally capture the transformative value of artificial intelligence.