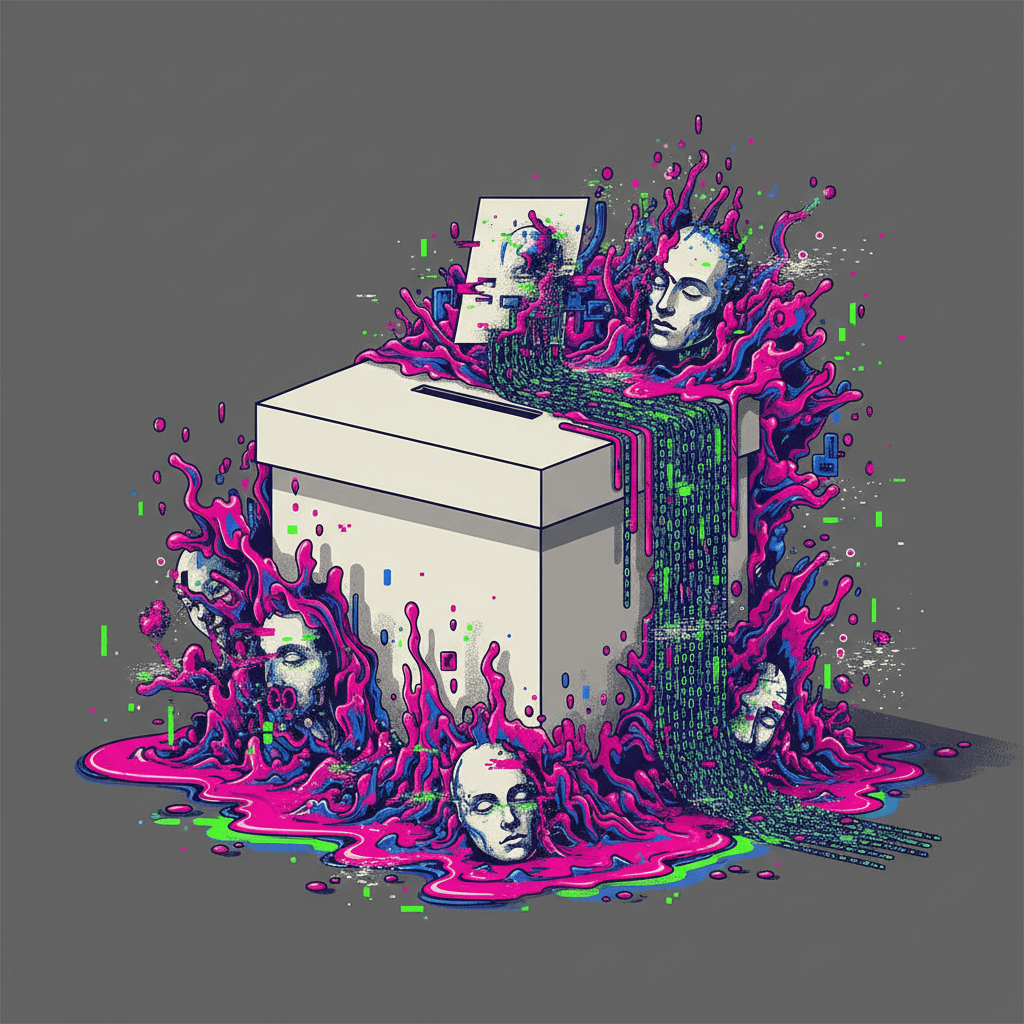

Generative AI Deepfakes Flood Japan Election, Decimating Voter Trust

AI-driven deepfakes shattered voter trust, turning the campaign into a stark test case for authenticating reality.

February 7, 2026

Japan's lower house election has emerged as a stark and unnerving test case for the impact of generative artificial intelligence on modern democracy, showcasing the unprecedented speed and sophistication with which political misinformation can now spread. The proliferation of AI-generated fake videos, known as deepfakes, and doctored images across major social media platforms during the short campaign period created a challenging environment for voters attempting to discern truth from sophisticated fiction[1][2]. This digital flood of deception has prompted warnings from media watchdogs and political parties alike, underscoring a collapse in public confidence that threatens to fundamentally distort electoral fairness.

Generative AI tools have dramatically lowered the barrier to entry for creating high-impact political disinformation, which has moved beyond simple manipulated text to convincing audiovisual fabrications[1][3]. One of the most widely circulated examples was a deepfake video posted to X, formerly Twitter, that purported to show the co-leaders of the newly formed Centrist Reform Alliance, Yoshihiko Noda and Tetsuo Saito, abruptly abandoning their formal policy speech broadcast to push aside the podium and perform an energetic fan dance[4][2]. The video, which manipulated a segment that the Public Offices Election Law requires to be broadcast unedited, garnered more than 1.6 million views before the uploader deleted it, admitting it was an AI-altered video intended as a joke[4]. Despite the retraction, the video had already reached a large audience, exposing viewers to the caricatured image of the opposition leaders[2]. In a direct attack aimed at fabricating corruption allegations, a deepfake video titled “Caught in Secret Trading” targeted Democratic Party for the People leader Yuichiro Tamaki. It featured a fake version of the politician, speaking in his voice, casually confessing to manipulating coin prices and growing a slush fund through his advocacy for cryptocurrency tax cuts[2]. The ruling party's leadership was not immune either: an unnaturally altered image of Prime Minister Sanae Takaichi was posted on X, captioned with text suggesting it revealed the "darkness and terror within her heart," and the post amassed over 3.6 million views[1]. These attacks, ranging from humorous caricature to outright fabrication of criminal conduct, demonstrate a new scale of political toxicity enabled by readily available AI tools.

This onslaught of deepfakes was amplified by the structural characteristics of the campaign itself. The shortened, 16-day election period for the House of Representatives necessitated a heavy reliance on online platforms like X and YouTube Shorts for campaigning[2]. In this fast-moving digital environment, sensational fake content, enabled by generative AI, spread so rapidly that fact-checkers and traditional media found it "nearly impossible to catch up"[2]. The pervasive nature of these convincing fakes has led to a profound crisis of trust among the Japanese electorate. A 2024 survey of 3,700 people found that 51.5% of respondents mistakenly believed the fake news they were presented with was truthful, while only 14.5% successfully identified it as inauthentic, and this vulnerability showed no difference across age groups[5]. Concern is widespread: a separate nationwide survey indicated that 91% of respondents were worried that the spread of misinformation and disinformation on social media could ultimately influence the election results[6]. The integrity of political communication has become so degraded that when the Centrist Reform Alliance's leadership faced criticism for a "stiff and unnatural" delivery in their *actual* policy speech, they were forced to re-upload the original file with an explicit clarification: “This is not AI”[2]. Having to actively authenticate reality shows how far the public's default assumption of veracity has eroded, a challenge to the very foundation of electoral discourse[5][2].

The implications of the election’s digital battleground extend directly to the generative AI industry and its evolving relationship with global regulatory bodies. Japan has maintained a relatively lenient domestic regulatory stance on AI development, aiming to foster innovation, yet it has taken a global leadership role through initiatives like the Hiroshima AI Process to establish international governance standards[7]. This dual approach highlights the central tension for the AI industry: how to balance rapid technological advancement with the civic responsibility of preventing misuse. In the absence of strict domestic election-specific legislation on deepfakes, the burden of defense falls heavily on social media platforms and the developers of the AI technology itself[7][3]. Some major tech companies, such as Microsoft, have proactively pledged to embed digital watermarks into images created using their AI tools as a means of distinguishing authentic content from deepfakes[3]. However, the 28-fold increase in deepfake scams in Japan last year suggests that the pace of malicious adaptation outstrips both regulatory and voluntary corporate countermeasures[8].

The Japanese election serves as a critical warning for democracies worldwide, many of which face their own electoral cycles in the coming year. While Japan's historically stringent election campaign laws and high trust in traditional media may have helped to limit the overall *volume* of non-AI disinformation[7], the *impact* of AI-generated content was undeniably potent. The country now joins the ranks of other nations, including South Korea, that have witnessed AI-driven political deception in their recent elections[2][9]. Moving forward, the global challenge is two-fold: technology providers must urgently accelerate the development and mandatory adoption of robust provenance and detection tools, while governments must pursue comprehensive strategies that combine clear regulation for malicious deepfakes with extensive media literacy education for a public increasingly unable to distinguish simple, AI-generated falsehoods from complex fact[5][10]. The Japanese lower house election was not an isolated incident, but rather a harbinger of the "AI slop" that now threatens to dilute political debate, leaving democratic processes vulnerable to manipulation and eroding the collective trust necessary for informed governance[5].