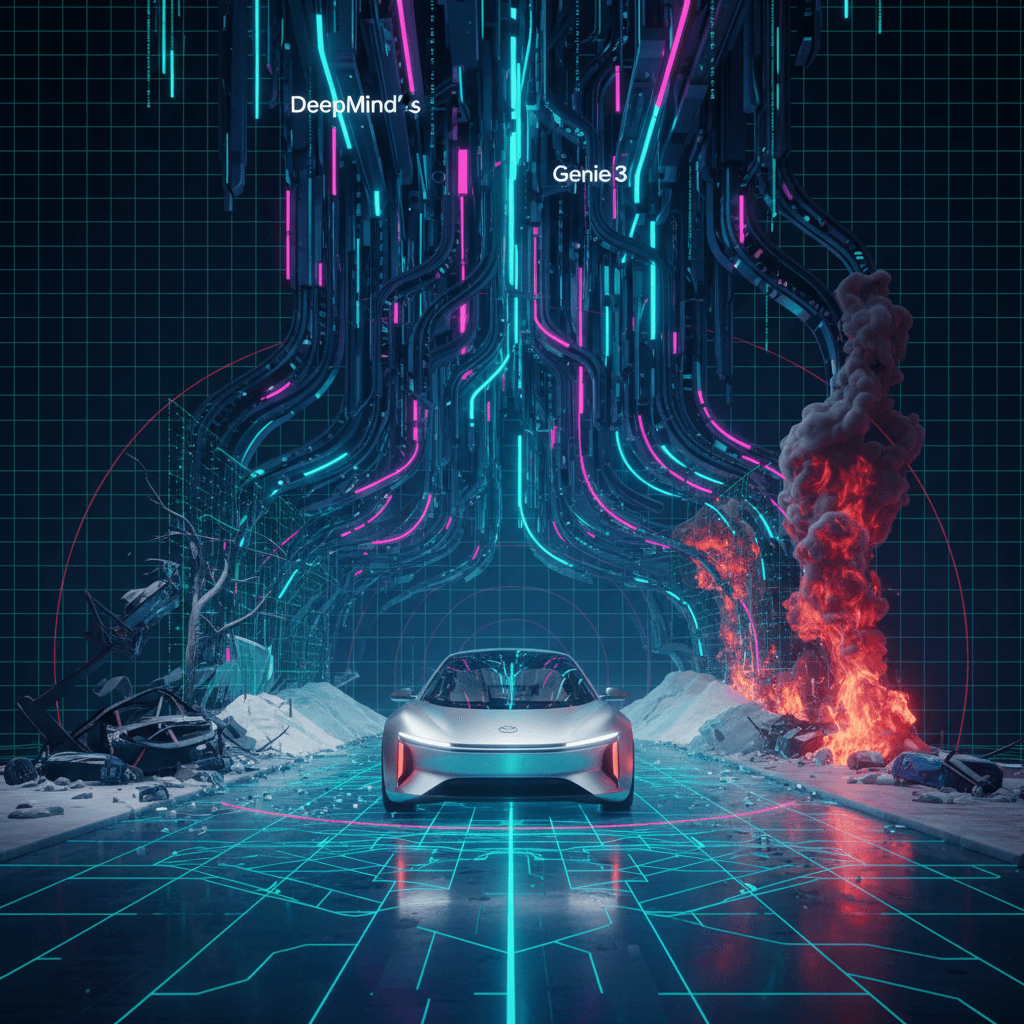

DeepMind's Genie 3 Generates Unprecedented AI World for Waymo's Robotaxi Safety

DeepMind's Genie 3 creates hyper-realistic generative simulations that help Waymo prepare for autonomous driving edge cases before they happen.

February 6, 2026

In a major advance for autonomous vehicle development, Waymo, Alphabet's self-driving technology unit, has integrated Google DeepMind's generative world model, Genie 3, to create a new simulation environment for training its robotaxi fleet. Dubbed the “Waymo World Model,” the system goes beyond traditional simulation methods, which are limited by the driving data already collected, and lets Waymo engineer realistic, interactive scenarios that its vehicles have never encountered, and may never encounter, on real roads. The collaboration is also a clear example of synergy inside Alphabet: one unit's foundational generative model directly improves the safety and scalability of another unit's core product, a strategic advantage few competitors in the autonomous driving sector can match.

The central challenge for autonomous vehicle developers has always been collecting enough data on "long-tail" or "edge-case" events: rare, hard-to-predict situations that account for a disproportionate share of real-world driving risk. Traditional simulators, however robust, are constrained by the volume and diversity of the driving data the fleet has actually recorded, which makes it very difficult to programmatically generate genuinely new, high-fidelity scenarios, such as a vehicle swerving at high speed to avoid an unexpected obstacle or navigating a street suddenly flooded by a storm. The Waymo World Model is designed to remove this limitation: it uses Genie 3's generative capabilities to create scenarios from simple text prompts, letting engineers test everything from the mundane to the catastrophic. Waymo has publicly cited examples ranging from an elephant blocking a road to a car maneuvering through a street strewn with tornado debris, as well as extreme conditions such as wildfires and deep snow[1][2][3].

The new system is a customized application of Genie 3, a general-purpose world model known for generating interactive 3D virtual environments from text and visual inputs with strong temporal consistency and realism[4][5][6][7]. Waymo's domain-specific customization is the key step: it adapts the general-purpose model to the rigorous demands of driving. The Waymo World Model is trained to generate photorealistic synthetic driving footage and, crucially, high-fidelity multi-sensor outputs that mimic what the vehicle's sensor suite (cameras, radar, and lidar) would capture[8][9][3]. This multi-modal output is essential because the Waymo Driver fuses these sensor streams to build a comprehensive 3D understanding of its surroundings. The model can also ingest real-world dashcam footage and convert it into a fully simulated environment, which developers can then modify to run many variations of a single recorded incident, exploring alternative outcomes and "what if" counterfactuals at up to four times real-time speed[2][9][3]. This enables testing at massive scale, helping ensure that the driving software stays robust in the uncommon, high-risk situations that are critical to achieving Level 4 autonomy[10].

The strategic implications for Alphabet and the broader AI industry are significant, underscoring the value of vertically integrated AI research and application. By combining DeepMind's foundational expertise (the broad world knowledge Genie 3 acquired from pre-training on a vast and diverse video dataset) with Waymo's specialized driving data and hardware-specific sensor modeling, Alphabet has created a powerful feedback loop[3][11]. Sharing AI technology across units in this way not only accelerates Waymo's product roadmap but also builds a meaningful moat against competitors. For the autonomous vehicle industry, which is projected to become a multi-trillion-dollar market, observers view this advance in simulation as a potential game-changer[12]. Better simulation fidelity and scale directly address a critical bottleneck in expanding robotaxi services safely and quickly. Preparing its system in advance for the rarest scenarios should improve the reliability and safety of Waymo's service and support its expansion into new geographic markets and more complex driving environments[9][3]. Industry analysts suggest that such AI-enhanced simulation could substantially reduce the number of real-world test miles required, potentially shortening the timeline for mass adoption of fully driverless technology[12].

In essence, the Waymo World Model, powered by Genie 3, shifts autonomous vehicle training from reactive data collection, driving millions of miles in the hope of encountering rare events, to proactive, generative scenario creation. It lets the system learn from situations that would be practically impossible to capture on real roads, improving the autonomous driver's safety and competence before it ever meets such a scenario in traffic. Pairing a state-of-the-art world model with a leading autonomous vehicle program shows how generative AI is moving from consumer novelty to essential, safety-critical tool in some of the most complex real-world engineering challenges. If deployed successfully, the technology could cement Waymo's position at the forefront of the self-driving race and set a new standard for simulation and safety across the autonomous mobility sector.

Sources

[2]

[4]

[6]

[7]

[8]

[9]

[10]

[11]

[12]