Deepseek OCR 2 Beats Gemini Pro, Reimagines Document AI with Causal Flow.

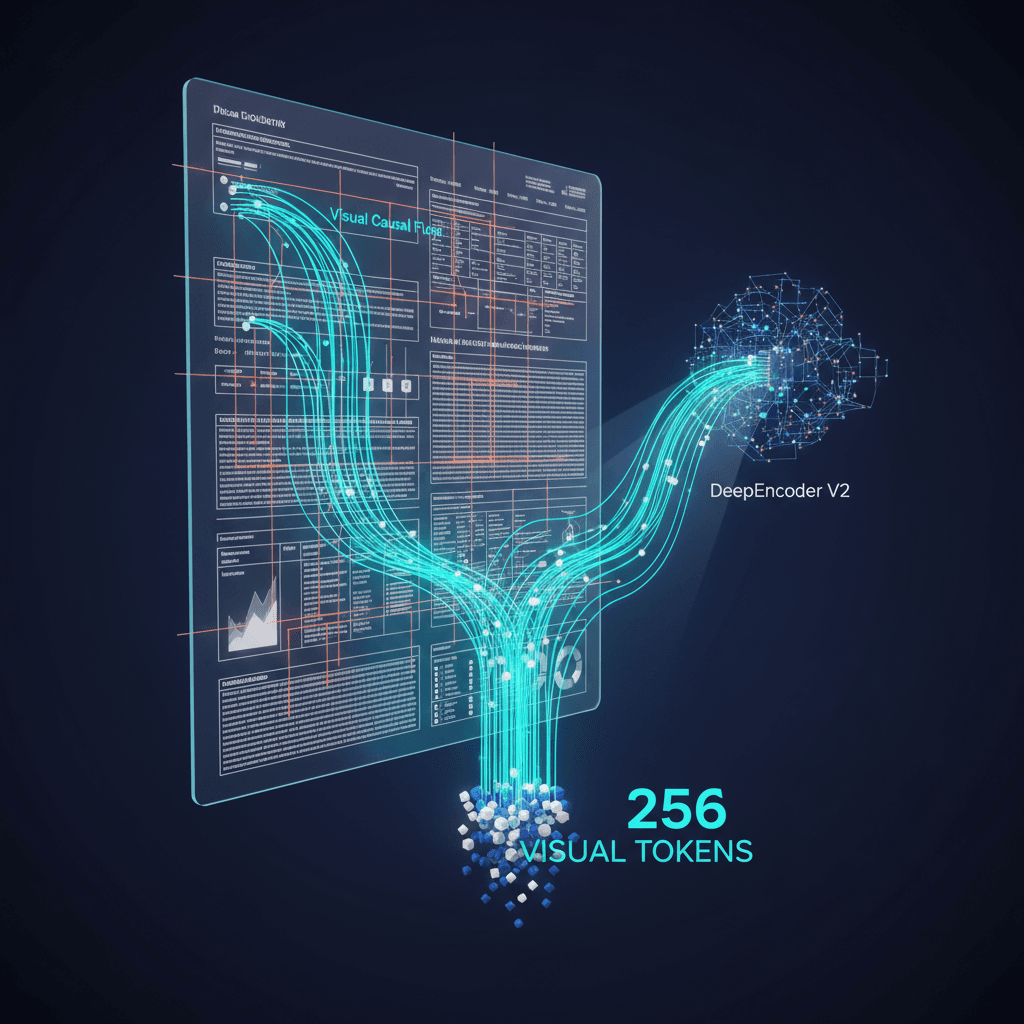

Its DeepEncoder V2 pioneers Visual Causal Flow, shattering efficiency barriers and beating Gemini 3 Pro in parsing fidelity.

February 1, 2026

The release of Deepseek OCR 2 marks a significant architectural advancement in vision-language models for document understanding, introducing a novel vision encoder that fundamentally redefines how artificial intelligence perceives and processes complex page layouts. The new system achieves state-of-the-art performance in structured document parsing while dramatically reducing the computational overhead typically associated with high-resolution image analysis. Its core innovation allows the model to compress visual information into an exceptionally small set of tokens, operating with a visual token count as low as 256 and a maximum of 1120 per page, effectively cutting the necessary context for visual understanding by a large margin compared to traditional fixed-patch models[1][2][3]. This efficiency gain is not at the expense of accuracy; on the critical OmniDocBench v1.5 benchmark, Deepseek OCR 2 achieved a comprehensive score of 91.09 percent, setting a new industry high and outperforming closed-source, large-scale models like Gemini 3 Pro in head-to-head document parsing tasks[4][2].

The breakthrough lies in the DeepEncoder V2 architecture, which replaces the conventional method of processing images in a rigid, fixed order—the "raster-scan" approach of top-left to bottom-right—with a concept called "Visual Causal Flow." Traditional vision models, much like a photocopier scanner, flatten a two-dimensional image into a one-dimensional sequence of patches, which often destroys the inherent semantic and logical structure of a complex document like a multi-column paper or a dense table[1][2][3]. DeepEncoder V2, conversely, mimics human visual perception by processing information based on content context and semantic meaning, flexibly adjusting its "reading" path to follow the logical flow of the document[5][2][3]. This ability is what allows the model to maintain the integrity of complex layouts, significantly improving its capacity to understand the correct reading order, a known challenge for earlier optical character recognition systems[4][2].

The performance metrics underscore the paradigm shift enabled by this architectural change. On the OmniDocBench v1.5, Deepseek OCR 2 demonstrated a 3.73 percentage point improvement in overall score compared to its previous generation[1][2]. Crucially, the model’s understanding of document structure is quantified by the Reading Order (R-order) Edit Distance, a metric that measures the difference between the predicted and ground-truth reading sequences[1][2]. Deepseek OCR 2 saw this edit distance drop substantially from 0.085 to 0.057, a clear indication that the causal visual flow mechanism drastically improves the model's ability to logically sequence text from complicated documents[1][2]. When directly compared to one of the most powerful closed-source systems, Deepseek OCR 2 was shown to deliver a better document parsing result[2]. Specifically, under similar constraints of approximately 1120 visual tokens, Deepseek OCR 2 achieved a document parsing edit distance of 0.100, which surpassed the 0.115 edit distance recorded for Gemini 3 Pro[1][2]. This means that Deepseek's model extracts and structures document content with greater fidelity and fewer errors than its large-scale competitor on the tested benchmarks[1][2].

At the heart of DeepEncoder V2’s ingenuity is its departure from standard vision encoder designs, substituting the original CLIP-based module with an architecture derived from a lightweight Large Language Model (specifically, a Qwen2-0.5B style transformer)[1][3]. This move transforms the encoder from a mere feature extractor into a dedicated visual reasoning module[3]. The architecture employs a dual-attention mechanism where visual tokens retain a bidirectional, full-image view, while a set of learnable "Query Tokens" adopt a causal, one-way attention pattern[3][6]. These causal query tokens are the key to the semantic reordering; they are taught to progressively infer the logical reading sequence of the document, and only this small set of logically ordered tokens is then passed to the downstream Mixture-of-Experts (MoE) LLM decoder[1][3]. By front-loading the complex task of structural parsing into the vision encoder, the downstream language model receives a pre-sorted, highly compressed, and semantically coherent sequence, leading to lower computational cost and superior interpretative accuracy[3]. This approach to visual token management is responsible for the dramatic efficiency, allowing a document that might generate thousands of raw text tokens to be represented by a small fraction of compact visual tokens while preserving over 97 percent of the structural and semantic information[7][8]. The cost benefits are enormous, with applications seeing processing costs drop from a significant expenditure per batch to a negligible amount[9].

The implications of the Deepseek OCR 2 release extend far beyond the immediate domain of optical character recognition. Researchers view the DeepEncoder V2 as an important technical step toward the long-pursued goal of unified multimodal AI[5][3]. The demonstration that an LLM-style architecture can function effectively as a visual encoder, complete with causal reasoning capabilities over spatial data, opens the door to a framework where the same foundational design could process and reason over various modalities, including text, images, and potentially audio, simply by changing the query embeddings[3]. This move towards native multimodality would allow for more seamless and efficient integration of vision and language, addressing the computational bottlenecks that currently hinder the scaling of general-purpose multimodal large language models[7]. By making the model, its code, and its research paper fully open-source, Deepseek is democratizing this cutting-edge capability, inviting further rapid innovation from the global AI community in what is being heralded as a shift from character-level extraction to genuine, document-level structural interpretation[4][2][10]. The success of Deepseek OCR 2 fundamentally changes the competitive landscape for document AI, setting a new benchmark for both performance and efficiency and accelerating the industry's progression toward AI systems that can "read" documents with human-like understanding.

Sources

[2]

[3]

[6]

[8]

[9]

[10]