DeepMind's D4RT breakthrough grants AI instant, unified 4D spatial awareness.

With speedups of up to 300x, this 4D model tackles a key bottleneck of real-time spatial AI for robots and AR.

January 24, 2026

The introduction of Google DeepMind's Dynamic 4D Reconstruction and Tracking model, or D4RT, marks a significant advance toward giving machines a human-like sense of their surroundings. This unified AI model reconstructs dynamic scenes from ordinary video in four dimensions (three spatial and one temporal) at speeds far beyond the current state of the art, moving the industry closer to real-time, high-fidelity spatial awareness for autonomous systems and extended reality devices. The model targets a long-standing bottleneck in spatial AI: the computational cost and fragmentation of understanding a world in constant motion. Its architecture folds previously separate tasks, namely depth estimation, object tracking, and camera pose estimation, into a single, efficient framework, which promises to accelerate the deployment of intelligent agents in unstructured, dynamic physical environments.

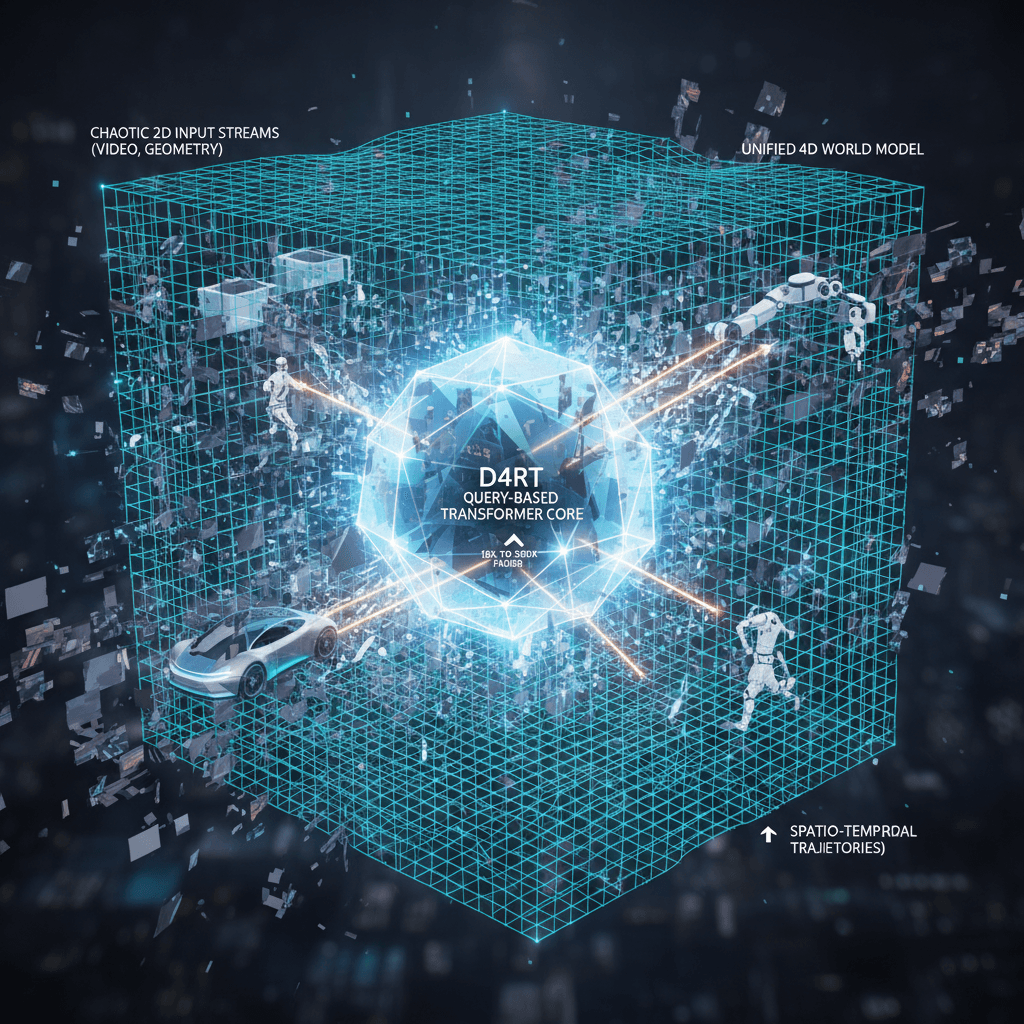

At the core of D4RT is a novel, query-based transformer architecture that recasts the complex inverse problem of turning 2D video into a volumetric 4D world representation as a set of targeted queries. Traditionally, this level of spatial understanding required a patchwork of specialized AI models (some for depth, others for motion, and still others for camera pose), leading to slow, computationally intensive, and often incoherent reconstructions. D4RT instead uses a global self-attention encoder to compress the entire input video into a latent Global Scene Representation, which a lightweight decoder then queries. Each spatio-temporal query effectively asks: "Where is a particular pixel located in three-dimensional space at a specific point in time, as seen from a given viewpoint?"[1][2][3][4][5] Because the decoder computes only the points that are queried, this approach sidesteps dense per-frame decoding, which has historically been the main source of latency in such systems. By unifying these tasks, D4RT can solve for static geometry, object motion, and camera trajectory simultaneously and coherently, a capability that mimics the integrated perception of the human visual-cognitive system.[1]
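The query-based design can be illustrated with a toy, pure-Python sketch. It is not DeepMind's implementation: the weights are random rather than trained, the dimensions are tiny, and the query format `(u, v, t_src, t_tgt, t_cam)` (pixel coordinates, the frame the pixel comes from, the time at which its 3D position is wanted, and the camera whose coordinate frame the answer uses) is an assumption chosen to mirror the question quoted above. `ToyQueryDecoder`, `encode`, and `query` are hypothetical names. What the sketch does show is the shape of the computation: one global self-attention pass over tokens from all frames builds a shared scene representation, and each query is then answered by a single cross-attention read from it.

```python
import math
import random


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def matvec(w, x):
    """Multiply matrix w (a list of rows) by vector x."""
    return [dot(row, x) for row in w]


def rand_matrix(rows, cols, rng):
    """Random (untrained) weights, scaled to keep activations small."""
    return [[rng.gauss(0.0, 1.0 / math.sqrt(cols)) for _ in range(cols)]
            for _ in range(rows)]


def attention(queries, keys, values):
    """Scaled dot-product attention: each query takes a weighted mix of values."""
    scale = math.sqrt(len(keys[0]))
    out = []
    for q in queries:
        weights = softmax([dot(q, k) / scale for k in keys])
        out.append([sum(w * v[i] for w, v in zip(weights, values))
                    for i in range(len(values[0]))])
    return out


class ToyQueryDecoder:
    """Hypothetical encoder/decoder pair illustrating query-based 4D decoding."""

    def __init__(self, dim=8, seed=0):
        rng = random.Random(seed)
        self.w_enc = rand_matrix(dim, dim, rng)   # token projection
        self.w_query = rand_matrix(dim, 5, rng)   # embeds (u, v, t_src, t_tgt, t_cam)
        self.w_out = rand_matrix(3, dim, rng)     # decoded feature -> (x, y, z)

    def encode(self, video_tokens):
        # Global self-attention: every token, from every frame, attends to all others.
        projected = [matvec(self.w_enc, tok) for tok in video_tokens]
        return attention(projected, projected, projected)

    def query(self, scene, u, v, t_src, t_tgt, t_cam):
        # Embed the spatio-temporal question, then read the answer from the scene.
        q = matvec(self.w_query, [u, v, t_src, t_tgt, t_cam])
        feature = attention([q], scene, scene)[0]
        return matvec(self.w_out, feature)


# Usage: 4 frames x 6 patch tokens each, flattened into one sequence of 24 tokens.
rng = random.Random(1)
video_tokens = [[rng.uniform(-1.0, 1.0) for _ in range(8)] for _ in range(4 * 6)]
model = ToyQueryDecoder(dim=8, seed=0)
scene = model.encode(video_tokens)               # computed once per video
point = model.query(scene, 0.5, 0.5, 0, 3, 3)    # one (x, y, z) answer per query
```

Because each call to `query` reads only the shared scene tokens, decoding cost grows with the number of points actually requested rather than with a dense per-frame output, which is the property the paragraph above credits for D4RT's speed.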

The model's efficiency sets a new benchmark for dynamic scene reconstruction and brings real-time applications within practical reach. In testing, D4RT ran between 18 and 300 times faster than prior state-of-the-art methods.[1][2][3] For example, a one-minute video that could take previous methods up to ten minutes to process can be handled by D4RT in approximately five seconds on a single TPU chip, a 120-fold improvement.[1] Crucially, this gain in efficiency does not come at the expense of accuracy. Evaluations show that D4RT achieves top-tier 3D point-tracking performance on real-world data such as smart-glasses footage from the Aria Digital Twin dataset, indicating robust handling of complex scenarios involving fast camera movement (ego-motion) and object occlusions.[1] Qualitative comparisons further highlight the model's ability to maintain a stable, continuous understanding of a moving world, a major challenge for older methods, which often struggled with dynamic objects and produced 'ghosting' artifacts or outright reconstruction failures.[1]
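The 120-fold figure follows directly from the quoted timings. Converting both to seconds makes the arithmetic explicit, and also shows that, on these figures, a minute of footage is processed in one twelfth of its playback time:

$$
\text{speedup} = \frac{10\ \text{min}}{5\ \text{s}} = \frac{600\ \text{s}}{5\ \text{s}} = 120,
\qquad
\frac{60\ \text{s of video}}{5\ \text{s of compute}} = 12\times\ \text{real time}.
$$

The second ratio is a throughput figure for processing a whole clip offline; on its own it says nothing about per-frame latency in a streaming setting.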

D4RT's most immediate impact is expected in two fields: robotics and augmented reality. For autonomous robots, the ability to generate a high-fidelity, low-latency four-dimensional map of the environment underpins safe, reliable navigation and dexterous manipulation in complex, dynamic human spaces.[1][2] Robots must operate in worlds populated by unpredictable, moving objects and people; the model's ability to quickly predict a pixel's 3D trajectory across time, even while the object is temporarily occluded, provides the spatial foresight needed for flexible obstacle avoidance and precise task execution.[1][3]

In augmented reality, D4RT's speed is key to seamless, convincing experiences. AR glasses need an immediate, low-latency understanding of scene geometry to overlay digital content onto the real world without perceptible lag or spatial misalignment.[1][2] The model's efficiency brings on-device deployment on next-generation AR hardware closer to reality. Pilot tests based on industry benchmarks have indicated up to a 50 percent improvement in AR rendering speeds when using D4RT's spatial awareness capabilities, highlighting its potential to enhance user immersion and system responsiveness.[6]
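To make the robotics use case concrete, the following sketch shows how a per-pixel 3D track could be assembled from repeated queries and handed to a simple predictor. Everything here is illustrative: `track_pixel`, `extrapolate`, and the `(u, v, t_src, t_tgt, t_cam)` query signature are hypothetical, `toy_query` is a hand-written stand-in for a trained model, and constant-velocity extrapolation is a deliberately simple planner-side choice, not something the source attributes to D4RT.

```python
from typing import Callable, List, Tuple

Point3D = Tuple[float, float, float]
# Hypothetical query interface: (u, v, t_src, t_tgt, t_cam) -> 3D point.
QueryFn = Callable[[float, float, int, int, int], Point3D]


def track_pixel(query: QueryFn, u: float, v: float, t_src: int,
                num_frames: int, t_cam: int) -> List[Point3D]:
    """Ask where one source pixel is at every target time.

    If the query function answers from a whole-video scene representation
    (as described for D4RT), frames in which the point is hidden still
    yield a position estimate.
    """
    return [query(u, v, t_src, t, t_cam) for t in range(num_frames)]


def extrapolate(track: List[Point3D], steps_ahead: int = 1) -> Point3D:
    """Constant-velocity guess from the last two track points (illustrative only)."""
    (x0, y0, z0), (x1, y1, z1) = track[-2], track[-1]
    vx, vy, vz = x1 - x0, y1 - y0, z1 - z0
    return (x1 + steps_ahead * vx, y1 + steps_ahead * vy, z1 + steps_ahead * vz)


# Toy stand-in for a trained model: a ball rolling along x at 0.5 m per frame,
# expressed in a fixed world frame (so t_src and t_cam are ignored). Even if the
# ball were occluded in frame 2, this interface would still return a position.
def toy_query(u: float, v: float, t_src: int, t_tgt: int, t_cam: int) -> Point3D:
    return (0.5 * t_tgt, 0.0, 2.0)


track = track_pixel(toy_query, 0.4, 0.6, t_src=0, num_frames=5, t_cam=0)
predicted = extrapolate(track, steps_ahead=2)   # (3.0, 0.0, 2.0)
```

Constant-velocity extrapolation is about the crudest predictor a planner could use; the point is only that dense, occlusion-robust tracks are the kind of input such predictors need.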

Beyond its direct applications in consumer and industrial hardware, D4RT represents a foundational step in the broader quest for artificial general intelligence (AGI). Researchers consider the ability to form and maintain a persistent, dynamic internal model of physical reality, a "world model," a necessary precursor to general AI.[1][2] By disentangling and jointly modeling static geometry, the motion of the observing camera, and the movement of every object in the scene, D4RT brings AI a significant step closer to an intuitive, causal understanding of the physical world.[1][3] This marks a move from merely processing visual input to constructing an underlying, predictive representation of reality. D4RT's efficiency and unified architecture point toward fuller spatial cognition in AI systems, setting the stage for agents that can predict future states and draw intuitive conclusions about how past, present, and future events relate in a dynamic four-dimensional world.[2][3] D4RT thus signifies not just an iterative improvement in computer vision but an architectural shift that could accelerate the arrival of truly autonomous, context-aware AI systems.