Decart’s AI Edits Live Video in Real Time, Achieving Near-Zero-Latency Stream Transformation

Text prompts transform live 1080p camera feeds in real time, shifting generative AI toward continuous, low-cost operation.

January 28, 2026

The generative AI landscape has picked up speed with the debut of Lucy 2.0 from the startup Decart, a model that edits live video in real time using nothing more than text prompts. It moves video generation from a batch-processing task, where users submit a prompt and wait for a clip, to a continuous, live operation: "video in, video out." Decart, a company backed by major venture capital firms, is positioning Lucy 2.0 as a real-time world model capable of transforming a live camera feed at 1080p resolution and 30 frames per second with near-zero latency, a technical achievement that breaks a significant barrier for video generation systems.[1][2][3] The ability to transform live streams without buffering or pre-defined limits distinguishes it from earlier generative video experiments and marks a crucial step toward ubiquitous, continuous AI-powered visual media.[1][2]

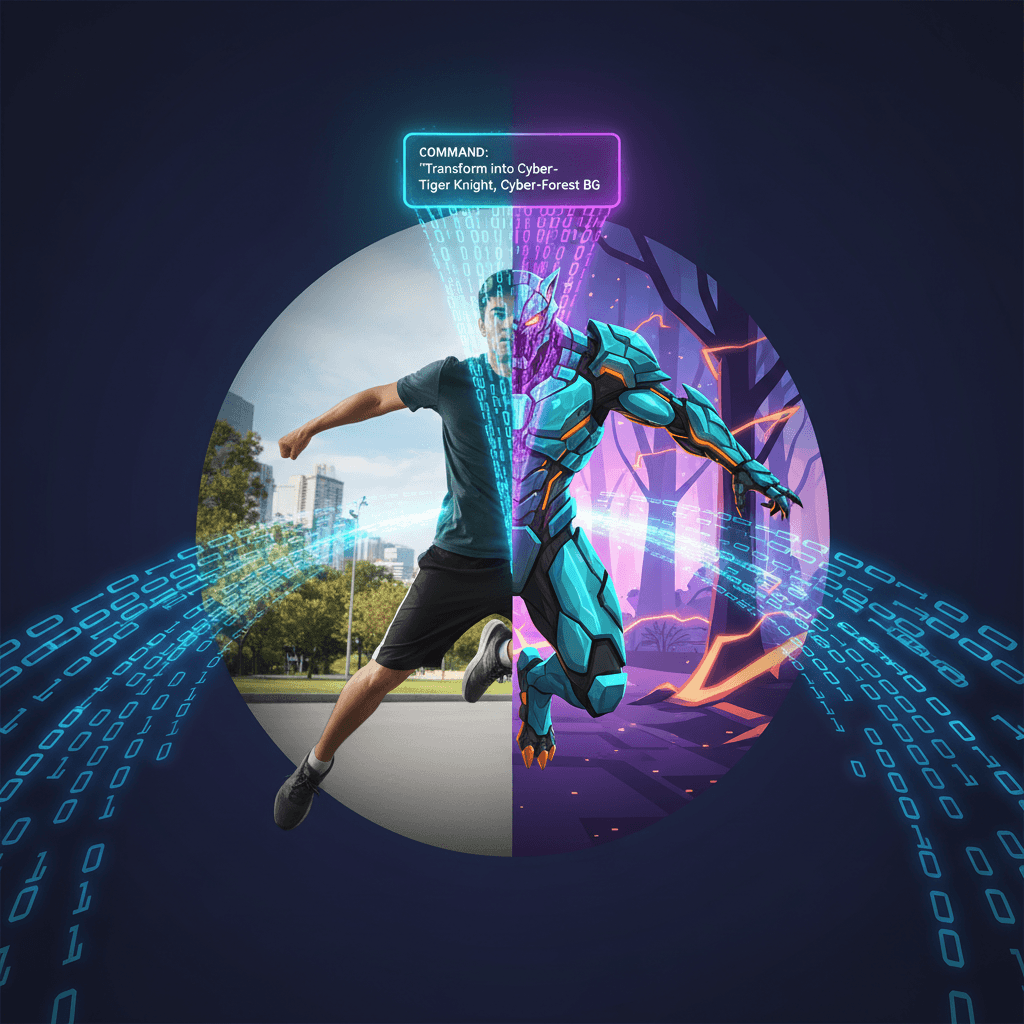

The core technological breakthrough lies in Lucy 2.0's ability to maintain persistence across a continuous stream, meaning the motion, identity, lighting, and physical presence of subjects stay consistent as they are generated frame by frame while the camera remains live.[1] This stability ensures that transformations (such as changing a person's clothing, replacing a character with a fantastical creature, or swapping the entire environment) respond instantly and track the subject's movement with high fidelity.[1][2][4][5][6] For instance, a user can instruct the system to "Replace the human with a bipedal tiger-like humanoid" or "Transform the scene to early autumn with warm afternoon light," and the changes are applied immediately to the live feed.[4][5][6] This real-time editing capability, which Decart markets as Lucy Edit Live, works without manual rendering steps or timeline adjustments, cutting the time required for complex visual effects from hours to seconds.[4][7] The model's sharply reduced operational cost adds to this efficiency: sustained real-time generation drops from potentially hundreds of dollars per hour to approximately three dollars per hour, making an "always-on" use case economically feasible for the first time.[1]
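To put those figures in perspective, the short Python sketch below works out what 30 frames per second and roughly three dollars per hour imply per frame. It is back-of-the-envelope arithmetic only, not a description of how Decart measures or bills usage. The function names are illustrative, and the 1920x1080 frame size is an assumption about what "1080p" means here.

```python
# Back-of-the-envelope arithmetic from the figures quoted in the article:
# 1080p, 30 frames per second, approximately $3 per hour.
# Assumption: "1080p" is taken to mean 1920x1080 pixels.

FPS = 30
COST_PER_HOUR_USD = 3.0
WIDTH, HEIGHT = 1920, 1080


def frame_budget_ms(fps: int) -> float:
    """Time available to produce each frame if the stream must not fall behind."""
    return 1000.0 / fps


def frames_per_hour(fps: int) -> int:
    """Number of frames generated in one hour of continuous streaming."""
    return fps * 3600


def cost_per_frame_usd(cost_per_hour: float, fps: int) -> float:
    """Average cost of a single generated frame."""
    return cost_per_hour / frames_per_hour(fps)


def pixels_per_second(width: int, height: int, fps: int) -> int:
    """Raw pixel throughput the model has to sustain."""
    return width * height * fps


if __name__ == "__main__":
    print(f"Frame budget:      {frame_budget_ms(FPS):.1f} ms")
    print(f"Frames per hour:   {frames_per_hour(FPS):,}")
    print(f"Cost per frame:    ${cost_per_frame_usd(COST_PER_HOUR_USD, FPS):.7f}")
    print(f"Pixels per second: {pixels_per_second(WIDTH, HEIGHT, FPS):,}")
```

Under these assumptions, each frame has to be produced in about 33 milliseconds, and an hour of streaming amounts to 108,000 generated frames, which puts the average cost of a single frame at a small fraction of a cent.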

The impact of Lucy 2.0 is likely to be felt first across several interactive sectors, most notably live entertainment, streaming, and gaming. In live broadcasting, the model has already been tested with Twitch creators, a user base with effectively zero tolerance for latency.[1] These tests demonstrated on-the-fly character swaps, wardrobe changes, and environmental transformations that respond dynamically to a streamer's actions and to audience text prompts within standard live production workflows.[1] Decart's co-founder and CEO has compared the speed requirement for this sector to the improvisational nature of live content, stating that the AI needs to be just as fast as the creators.[1] Beyond entertainment, the technology enables immediate applications in virtual try-on experiences for e-commerce, allowing shoppers to see garments transformed on live models or on themselves.[1][2] In gaming, the platform opens up new possibilities for dynamic, adaptive environments, instant character skin changes, and immersive experiences in augmented and extended reality (XR) that adapt to a user’s movement and space in real time.[4][8][9] Furthermore, the API-first design, which gives developers a simple interface for building with the models, suggests a foundational shift in which real-time generative video becomes a core utility for new classes of interactive applications.[8]

Industry analysts are calling Lucy 2.0 a potential "GPT-3 moment" for world models, emphasizing that live, real-time operation at high resolution without quality compromises is an inflection point that will create entirely new markets.[1] The release highlights a significant movement in the AI industry away from discrete clip generation and toward continuous, responsive systems that simulate and interact with a live world. Unlike earlier offline models, which synthesized short segments and relied on post-production to correct errors, Decart's focus on persistence and speed suggests a future in which AI models are integral to live, interactive media.[1] The underlying architecture, which builds on advanced diffusion-transformer technology, prioritizes speed and fidelity and aims to become the default framework for real-time generative video.[6][9] This development is not merely an improvement in video quality but a fundamental change in how visual information can be created, edited, and consumed, pushing the boundaries of what is possible in live-action augmented reality and continuous media generation.

In conclusion, Lucy 2.0's introduction marks a major acceleration in the development of generative video AI, setting a new benchmark for speed and continuity. The ability to execute instruction-guided edits on live video at 1080p and 30fps with near-zero latency, combined with a dramatically lower operational cost, positions the technology as a disruptive force across media, interactive experiences, and commerce. By making real-time, high-quality video manipulation a reality, Decart has not just launched a new product but has arguably unlocked the next phase of generative media, in which a live camera feed can be edited instantly via a simple text prompt. The long-term implications point toward a future where "live" no longer means "uneditable" and where the visual reality of a stream or a camera feed can be rewritten as it happens, fostering a new era of spontaneous and interactive content creation.

Sources

[1]

[2]

[3]

[4]

[7]

[8]

[9]