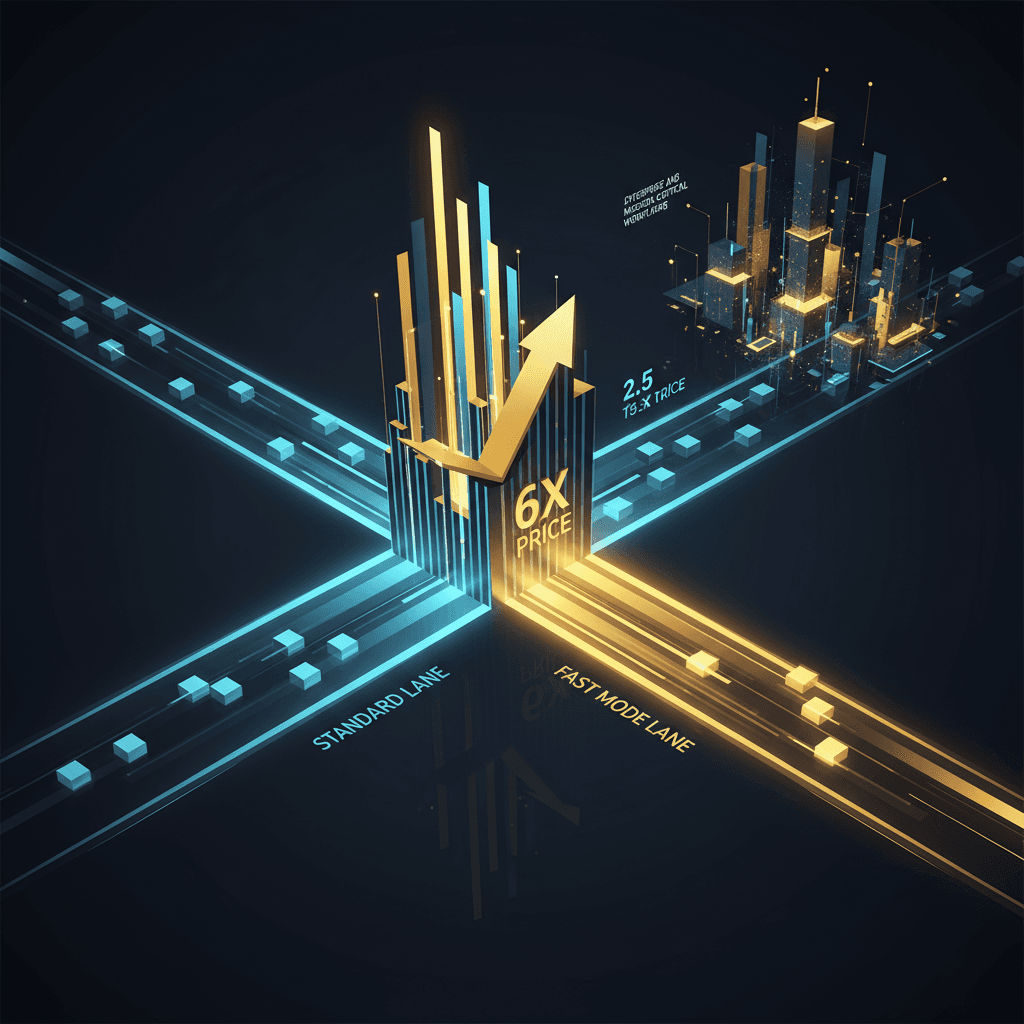

Anthropic's Fast Mode Sets AI Speed Trap: Six-Times Price for Enterprise Access.

Anthropic’s Fast Mode charges up to six times the standard token price for speed, separating enterprise users from individual developers.

February 8, 2026

The introduction of Anthropic's new Fast Mode for its flagship Claude Opus 4.6 model has ignited a fierce debate in the artificial intelligence industry, exposing a widening gap between the needs of individual users and high-spending enterprise clients. The feature promises responses that are 2.5 times faster with no degradation in quality, but it comes with a stark caveat: a price of up to six times the standard token rate, a tradeoff that redefines the premium tier of frontier AI access[1][2]. This aggressive pricing positions Anthropic as a direct competitor for high-value enterprise workflows, effectively monetizing developer impatience and business-critical latency requirements.

Standard API pricing for Claude Opus 4.6 sits at $5 per million input tokens and $25 per million output tokens[3][4]. Fast Mode raises this to $30 per million input tokens and $150 per million output tokens, a direct six-fold increase[5][6]. The price hike becomes even more pronounced for users leveraging the model’s 1-million-token context window, where the standard price already doubles for input tokens exceeding 200,000[3][7]. For these long-context prompts, the Fast Mode cost can reach $60 per million input tokens and $225 per million output tokens, a price point that some users have described as unjustifiable[8][6]. Anthropic has framed the new feature as a "research preview"; it is not a different model but a different API configuration that prioritizes speed over cost efficiency[9]. The company initially offered a 50% introductory discount on Fast Mode pricing for a short period, a move some critics suggested was a deliberate strategy to hook users on the speed before the full price took effect[8][6].
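To make the arithmetic concrete, the quoted rates can be put into a small cost calculator. This is a minimal sketch, not Anthropic's billing logic. The function name `request_cost` is hypothetical, the sketch assumes the long-context rate applies to the whole request once input exceeds 200,000 tokens, and the standard long-context output rate of $37.50 is inferred from the quoted Fast Mode figure ($225 divided by six) rather than stated in the article.

```python
# Illustrative cost calculator built from the per-million-token rates quoted
# in the article. This is a sketch under stated assumptions, not official
# billing logic.

LONG_CONTEXT_THRESHOLD = 200_000  # input tokens, per the article

# USD per million tokens as (input, output), keyed by (mode, long_context).
RATES = {
    ("standard", False): (5.00, 25.00),
    # ASSUMPTION: input doubles per the article; the output rate is inferred
    # as 225 / 6 and is not stated in the article.
    ("standard", True): (10.00, 37.50),
    ("fast", False): (30.00, 150.00),
    ("fast", True): (60.00, 225.00),
}


def request_cost(input_tokens: int, output_tokens: int, mode: str = "standard") -> float:
    """Return the estimated USD cost of a single request.

    ASSUMPTION: the long-context rate applies to the entire request whenever
    the input exceeds the 200,000-token threshold.
    """
    long_context = input_tokens > LONG_CONTEXT_THRESHOLD
    in_rate, out_rate = RATES[(mode, long_context)]
    return (input_tokens * in_rate + output_tokens * out_rate) / 1_000_000


if __name__ == "__main__":
    for mode in ("standard", "fast"):
        print(mode, round(request_cost(100_000, 10_000, mode), 2))
```

Under these assumptions, a request with 100,000 input tokens and 10,000 output tokens comes to $0.75 at standard rates and $4.50 in Fast Mode, which matches the six-fold ratio described above.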

The primary justification for this extreme cost is the significant reduction in latency, making the model far more suitable for time-sensitive, interactive applications. Use cases specifically highlighted by Anthropic include rapid iteration in coding and live debugging, where instantaneous feedback from an AI assistant can dramatically accelerate a developer's workflow[9]. For a developer whose time is valued in hundreds of dollars per hour, the financial calculus shifts: paying a few extra dollars on tokens to save minutes of waiting time can be a net positive for a corporation[8]. The Fast Mode feature, which is primarily accessible via the Claude API and in tools like Claude Code, is explicitly marketed toward users on subscription plans (Pro/Max/Team/Enterprise) and is billed as "extra usage," entirely bypassing any regular subscription token quotas[8][9]. This mechanism firmly establishes Fast Mode as a corporate speed dial for mission-critical tasks, not a feature designed for casual or budget-conscious use[8].
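The financial calculus described above can be sketched as a break-even check. This is an illustration only: the function name `fast_mode_net_benefit` and its parameters are hypothetical, and it assumes the developer is fully idle while waiting for a response, that the speedup is exactly 2.5 times, and that Fast Mode costs exactly six times the standard rate.

```python
# Break-even sketch: is the Fast Mode premium worth the waiting time it saves?
# All names and defaults here are illustrative assumptions, not a real API.

SPEEDUP = 2.5           # Fast Mode responses are 2.5x faster, per the article
PRICE_MULTIPLIER = 6.0  # Fast Mode costs six times the standard rate


def fast_mode_net_benefit(standard_seconds: float,
                          hourly_rate: float,
                          standard_cost: float) -> float:
    """Return the net USD benefit of Fast Mode for one request.

    A positive result means the value of the time saved exceeds the extra
    token cost. ASSUMPTION: the developer does nothing useful while waiting.
    """
    # Time saved is the difference between the standard and fast latency.
    seconds_saved = standard_seconds * (1 - 1 / SPEEDUP)
    value_of_time = hourly_rate / 3600 * seconds_saved
    # The premium is the Fast Mode price minus the standard price.
    extra_cost = standard_cost * (PRICE_MULTIPLIER - 1)
    return value_of_time - extra_cost


if __name__ == "__main__":
    print(round(fast_mode_net_benefit(60, 200, 0.75), 2))
    print(round(fast_mode_net_benefit(60, 200, 0.20), 2))
```

With a 60-second standard response, a $200 hourly rate, and a $0.75 standard request cost, the sketch returns about -1.75: the 36 seconds saved are worth $2.00, less than the $3.75 of extra token cost. At a $0.20 standard cost the result turns positive, at about $1.00. In this model the premium only pays off when requests are cheap relative to the time they save, or when the time saved is worth considerably more.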

The implementation details of Fast Mode have also drawn fire, particularly in online developer communities. One of the most controversial mechanics is the retroactive repricing of an entire conversation history at the higher Fast Mode rate if a user decides to switch the mode on mid-chat[8]. Furthermore, the mode's persistence across sessions, along with an auto-renewal feature after a cooldown period, creates a potential for unexpected, high-cost charges if a user's context window grows without them realizing the premium mode is still active[8]. These mechanisms have been labeled "predatory" by some in the community, fueling a perception that the feature is engineered to optimize Anthropic’s revenue from large corporate budgets with minimal user friction, even at the risk of surprise billing for individual developers[8]. This pricing complexity mirrors other advanced features of Claude Opus 4.6, such as the "Adaptive Thinking" default, which prioritizes higher quality reasoning (and thus burns more expensive output tokens, even for internal thought processes unseen by the user) over cost efficiency, further nudging the average token spend upward[7].
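The effect of switching modes mid-chat can be illustrated with a rough simulation. The article does not describe the billing mechanism in detail, so this sketch adopts one plausible reading: every turn resends the accumulated history as input, and from the turn where Fast Mode is enabled, that history is billed at the Fast Mode input rate. The function name `conversation_cost` is hypothetical, and prompt caching and the long-context surcharge are deliberately ignored.

```python
# Rough simulation of a multi-turn chat where Fast Mode is switched on partway.
# ASSUMPTION: each turn resends the full history as input; caching and
# long-context surcharges are ignored. Not official billing logic.

from typing import List, Optional, Tuple

# USD per million tokens as (input, output), from the article.
RATES = {
    "standard": (5.00, 25.00),
    "fast": (30.00, 150.00),
}


def conversation_cost(turns: List[Tuple[int, int]],
                      fast_from: Optional[int] = None) -> float:
    """Return the total USD cost of a conversation.

    turns: list of (new_input_tokens, output_tokens), one entry per turn.
    fast_from: zero-based index of the first Fast Mode turn, or None to stay
    in standard mode throughout.
    """
    history = 0
    total = 0.0
    for index, (new_input, output) in enumerate(turns):
        use_fast = fast_from is not None and index >= fast_from
        in_rate, out_rate = RATES["fast" if use_fast else "standard"]
        # The whole history plus the new prompt is billed as input this turn.
        billed_input = history + new_input
        total += (billed_input * in_rate + output * out_rate) / 1_000_000
        history = billed_input + output
    return total


if __name__ == "__main__":
    chat = [(10_000, 2_000)] * 3
    print(round(conversation_cost(chat), 2))               # standard only
    print(round(conversation_cost(chat, fast_from=2), 2))  # fast on last turn
```

For three identical turns of 10,000 new input tokens and 2,000 output tokens, the sketch gives $0.48 in standard mode and $1.58 when Fast Mode is enabled only for the final turn. Most of the difference comes from the 24,000 tokens of earlier history being billed again at the higher rate, which is why a long-running chat makes the switch more expensive.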

The introduction of Fast Mode also has significant implications for the broader AI market and the competitive landscape of foundation models. By creating a six-fold price differential for the same model quality, Anthropic is pioneering a new form of hardware and infrastructure monetization. The underlying cost of running frontier models at higher throughput is substantial, requiring preferential access to scarce, high-demand compute resources like specialized graphics processing units[10]. This premium pricing model is a direct way to recoup those high operational costs and fund continued research into next-generation models, solidifying Anthropic's position at the cutting edge of AI development[8]. However, the move also invites direct comparison to competitors. For instance, some commentary has pointed to models like Gemini 3 Pro, which reportedly offers strong tokens-per-second speed at a lower price point, even if its capabilities in specific domains like coding are not yet as strong as those of Opus 4.6[10]. The willingness of customers to pay six times the price for 2.5 times the speed illustrates that for a select class of users (primarily enterprises building AI agents or working on urgent, high-stakes problems), latency is a more critical constraint than cost per token[8]. This sets a potential new industry standard where time-to-output becomes the ultimate luxury feature.

In essence, Anthropic's Fast Mode is a masterclass in market segmentation and value-based pricing, offering a stark demonstration of what corporations are willing to pay to eliminate computational waiting time. It establishes a tiered reality in the use of high-end AI: an economical, standard track for non-critical, batch, and recreational use, and a fast lane costing up to six times as much for enterprise and professional developers. The public backlash from individual users highlights a growing desire for the opposite: a "Slow Mode" that offers even cheaper rates for non-urgent computation[8]. Yet the focus of the AI frontier remains firmly on the lucrative, speed-obsessed enterprise sector, reinforcing the narrative that at the high end of AI, speed is the new premium commodity and customers will pay steeply for it. The new Fast Mode signals a future where AI access will be increasingly defined by a pay-to-accelerate model, fundamentally changing the token economics of sophisticated language models.

Sources

[2]

[3]

[5]

[7]

[8]

[9]

[10]