Altman Taunts Anthropic: ChatGPT’s Texas Users Beat Claude Nationally

Scale meets ethics as OpenAI downplays Anthropic’s ad-free model amid a fundamental business-model war.

February 5, 2026

The escalating rivalry between generative AI titans OpenAI and Anthropic reached a new flashpoint when OpenAI CEO Sam Altman dismissively claimed that ChatGPT has more users in the state of Texas alone than Anthropic’s Claude has across the entire United States. The provocative remark was a direct shot at Anthropic’s multi-million-dollar "anti-ad campaign," which sought to position Claude as an ethically superior, ad-free alternative to models like ChatGPT, and it highlights a deep and growing philosophical split over how conversational AI should be commercialized. Altman’s comment, intended to underscore the vast difference in scale between the two companies, is a stark reminder of OpenAI’s market dominance, even as it forces an industry-wide discussion about whether large language models can keep their integrity as they become increasingly integrated with advertising.

Anthropic’s recent campaign, titled "A Time and a Place," centered its marketing, including a major Super Bowl spot, on criticizing the integration of advertising into the core AI conversation experience. The ads featured humorous yet pointed parodies of chatbots injecting product placements into sensitive user interactions, such as a fitness query devolving into an unsolicited commercial for shoe insoles or a therapy session veering toward a dating-site pitch[1][2]. Anthropic’s public pledge to keep Claude permanently ad-free rests on the argument that conversational AI is fundamentally different from search engines or social media: users share highly personal or complex information in conversations that commercial interests could unduly influence[3][4]. In a detailed statement, the company argued that placing advertisements in such intimate conversations is incompatible with the goal of being a "genuinely helpful assistant," a position that implicitly casts doubt on the ethical model of its primary competitor[4].

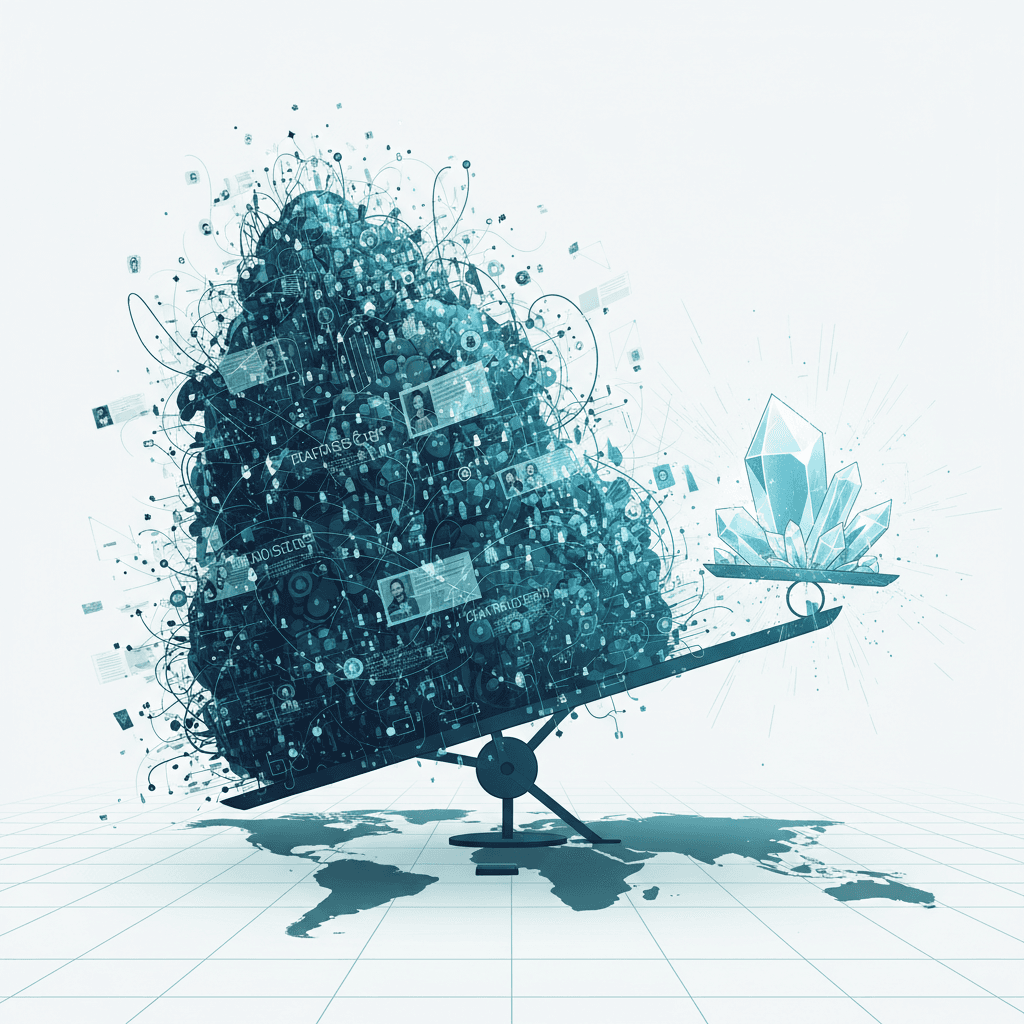

Altman’s rejoinder sought to reframe the debate, characterizing Anthropic’s ethical stance as "dishonest" marketing that attempts to diminish a successful and necessary business model[2]. His specific claim about user numbers, that ChatGPT’s users in Texas outnumber Claude’s entire national base, is a powerful rhetorical tool in the battle for public perception and investor confidence, using sheer volume to blunt his competitor’s moral argument. Current market data do not offer precise state-by-state breakdowns, but they generally support the enormous gulf in user adoption. An estimated 34% of U.S. adults have used ChatGPT, and the platform records around 800 million weekly active users globally, with monthly visits reaching into the billions[5][6]. Claude, by contrast, is still an ascendant challenger: its global monthly active users are estimated at fewer than 20 million, and it holds a small fraction of the overall AI chatbot market[7][8]. Texas is the second-most populous U.S. state, with over 31 million residents, so even a penetration rate well below the national figure would yield several million ChatGPT users, a count that would likely eclipse Claude’s U.S. footprint, which is only a portion of its sub-20-million global base[3][9][7].

However, the debate quickly became complicated for OpenAI because of its own leader’s earlier public warnings. Altman has been one of the most vocal industry figures cautioning against the societal risks posed by advanced AI, pointing specifically to the danger of "superhuman persuasion." He has previously said he expects AI to be capable of this level of extreme influence well before achieving true artificial general intelligence (AGI), and warned that this could lead to "very strange outcomes"[6][10]. That earlier warning sits directly at odds with his defense of an ad-supported model, which Anthropic criticizes for its inherent risk of introducing commercial bias and manipulative content into vulnerable conversations[1][10]. Anthropic, founded by former OpenAI researchers, has long positioned itself as a values-driven organization focused on AI safety and alignment, a mission that makes its ad-free commitment a natural extension of its core philosophy[3][11].

The significance of the clash extends far beyond marketing bravado: it represents a fundamental fork in the road for the AI industry’s future business models. For OpenAI, backed by billions in investment, an ad-supported model offers a path to massive revenue, which it needs to recoup the extraordinary costs of building and maintaining frontier AI models. For Anthropic and other challengers, the ad-free model acts as a strategic differentiator, a moral high ground that appeals to enterprise clients concerned with data privacy and to users wary of the increasingly pervasive commercialization of digital life[3][12]. Altman leans on OpenAI’s undeniable market-share lead and insists that the company "would obviously never run ads in the way Anthropic depicts them," but critics counter that the economic incentive to increase user engagement and deliver advertiser value will inevitably compromise the integrity of an AI assistant’s responses[2]. Whether the sheer scale of the dominant platform prevails or a significant segment of the market opts for the "trust-first" value proposition, the outcome of this rivalry will ultimately determine how AI systems are financially sustained and, critically, how much control users retain over their digital conversations.