OpenAI Pivots: Elite Engineers Embed to Drive $100 Billion Enterprise AI Race

OpenAI is reportedly launching a massive consulting push, deploying elite engineers to unlock the multi-trillion-dollar enterprise market.

February 5, 2026

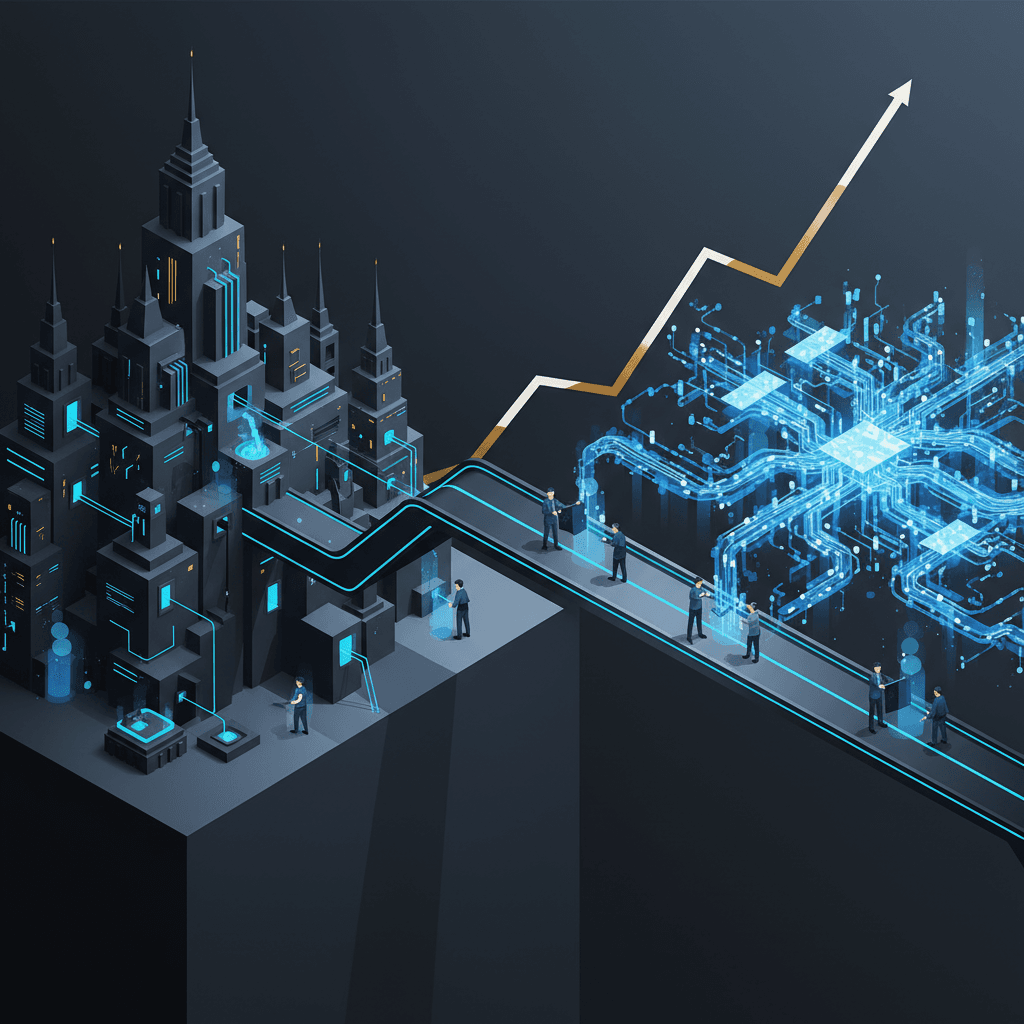

The race for enterprise AI dominance is shifting from a contest over frontier technology to an on-the-ground sales arms race, as the world’s leading generative AI companies change strategy. At the forefront of this pivot is OpenAI, which is pursuing a stated ambition of US$100 billion in annual revenue by 2027 and is reportedly building a technical consulting division designed to bridge the gap between its cutting-edge models and the complex realities of corporate customers.[1][2] The move signals a maturing AI market in which merely offering an API is no longer sufficient; the winning play involves deep, embedded implementation and bespoke digital transformation.[2][3]

The audacious US$100 billion revenue target, a figure CEO Sam Altman has suggested could be achievable by 2027, requires a massive and immediate scale-up beyond the consumer and API segments.[1][4] OpenAI’s annualized revenue had already surged to an estimated US$20 billion in 2025, up from US$6 billion in 2024, but the path to the remaining US$80 billion requires unlocking the multi-trillion-dollar market of global knowledge work.[2][4] That trajectory depends on explosive growth in AI agents (autonomous applications that can perform entire job functions with minimal human oversight), which would need to account for a significant majority of the company's revenue by the target date.[4] Because OpenAI cannot rely on enterprises to work out implementation on their own, the company is reportedly hiring hundreds of new employees for its technical consulting division.[5]

OpenAI's strategy is an 'AI-native' consulting model that directly challenges the technology implementation role traditionally held by management consulting firms and systems integrators.[6][3] In a clear parallel to Palantir’s approach, the new division deploys highly specialized "Forward-Deployed Engineers" directly into client operations.[7][3] These engineers are tasked not just with recommending AI use cases, but with delivering custom solutions in full, including fine-tuning models on proprietary workflows, building domain-specific copilots, and developing custom AI applications.[7][3] This high-touch, hands-on model turns a vendor-customer relationship into a deeply embedded partnership, often starting with high-value contracts of US$10 million or more.[7][6] By embedding its own builders, OpenAI accelerates the adoption lifecycle and creates a powerful structural lock-in: once the AI becomes a seamless part of a company’s core workflows, switching to a competitor becomes far more disruptive.[3]

This strategic shift is a direct response to the stubbornly wide gap between AI pilot projects and full-scale enterprise production. Despite broad acknowledgment of AI's potential, industry data indicates that most AI initiatives stall; one report suggests that while a high percentage of large enterprises are implementing AI solutions, only a small fraction of use cases reach full, company-wide deployment.[2] The primary roadblocks are not model capabilities, but rather the complexity of integrating AI with chaotic legacy IT systems, meeting stringent data privacy and regulatory compliance requirements, and managing profound organizational change.[2][3] These are human and organizational problems that an API key cannot solve. By providing its own implementation expertise, OpenAI is effectively cutting out the middleman, promising faster, more reliable, and more deeply customized AI-as-a-Service solutions built by the very creators of the foundational technology.[6]

The move immediately escalates the AI sales arms race, placing OpenAI in direct competition with a diverse array of established and emerging rivals. The most direct competitors in the enterprise AI space, Anthropic and Google, have already sharpened their own enterprise strategies.[5][8] Anthropic, for instance, has successfully courted security-conscious organizations by emphasizing model reliability and safety, which has translated into meaningful gains in enterprise penetration, nearly doubling its production deployment share in a matter of months.[9][10] Google, leveraging its vast cloud and software ecosystem, is playing a long-term game by integrating its Gemini models across Workspace and Google Cloud services.[8] While OpenAI continues to hold a leading position in overall enterprise deployments, competitors are steadily gaining 'wallet share,' pushing many large organizations to adopt a multi-model strategy where different frontier models are used for different specialized workloads—a clear sign that the market is fragmenting by use case.[9][10]

In this newly intensified competitive environment, the AI consulting playbook is becoming the new standard. OpenAI’s decision to appoint an insider, Barret Zoph, to lead its enterprise push underscores the company’s pivot toward delivering a secure, scalable, and business-friendly experience, replacing the initial, hype-driven phase of adoption with a focus on real-world outcomes.[8] By assuming the role of implementation partner, the company is directly addressing the primary friction point in enterprise adoption, aiming to make its models not just a feature, but an indispensable piece of core business infrastructure. The shift suggests that the next phase of the AI revolution will be won not just in the lab with superior models, but in the trenches of enterprise sales and technical delivery.[2][3]