AIs Ditch Text for Direct Memory Chat, Supercharging Team Intelligence

A new Cache-to-Cache (C2C) method lets LLMs share internal memory directly, bypassing text for faster, more accurate AI teams.

October 12, 2025

In a significant leap forward for multi-agent artificial intelligence systems, researchers in China have developed a novel communication method that allows large language models (LLMs) to interact directly through their internal memory, bypassing the need for text-based conversation. This new technique, dubbed Cache-to-Cache (C2C), enables faster, more accurate, and more nuanced collaboration between AI agents, potentially unlocking new frontiers in complex problem-solving and system efficiency. The breakthrough addresses fundamental limitations inherent in current multi-LLM frameworks, which rely on generating and interpreting human language, a process that can be slow, imprecise, and prone to information loss. By creating a direct conduit for sharing semantic meaning, the C2C paradigm paves the way for more deeply integrated and capable AI teams.

The prevailing method of communication for collaborative LLMs has been text-to-text, where one model generates a written output that a second model then reads and interprets.[1][2][3] This approach, while functional, mirrors human conversation and carries similar drawbacks. Researchers identified three core problems with this method: text acts as an information bottleneck, natural language can be ambiguous, and token-by-token generation introduces significant latency.[4] For instance, if a programmer LLM instructs a writer LLM to "write content to the section wrapper," the writer model might misunderstand the structural context of a coding element such as an HTML tag, leading to errors.[4] Converting rich internal representations into a linear sequence of words inevitably loses semantic information, much like trying to describe a complex image using only words.[1][2][3] The C2C method circumvents this by allowing models to share information directly from their internal memory, specifically the key-value (KV) cache, which holds the contextual information a model has already computed and reuses while generating a response.

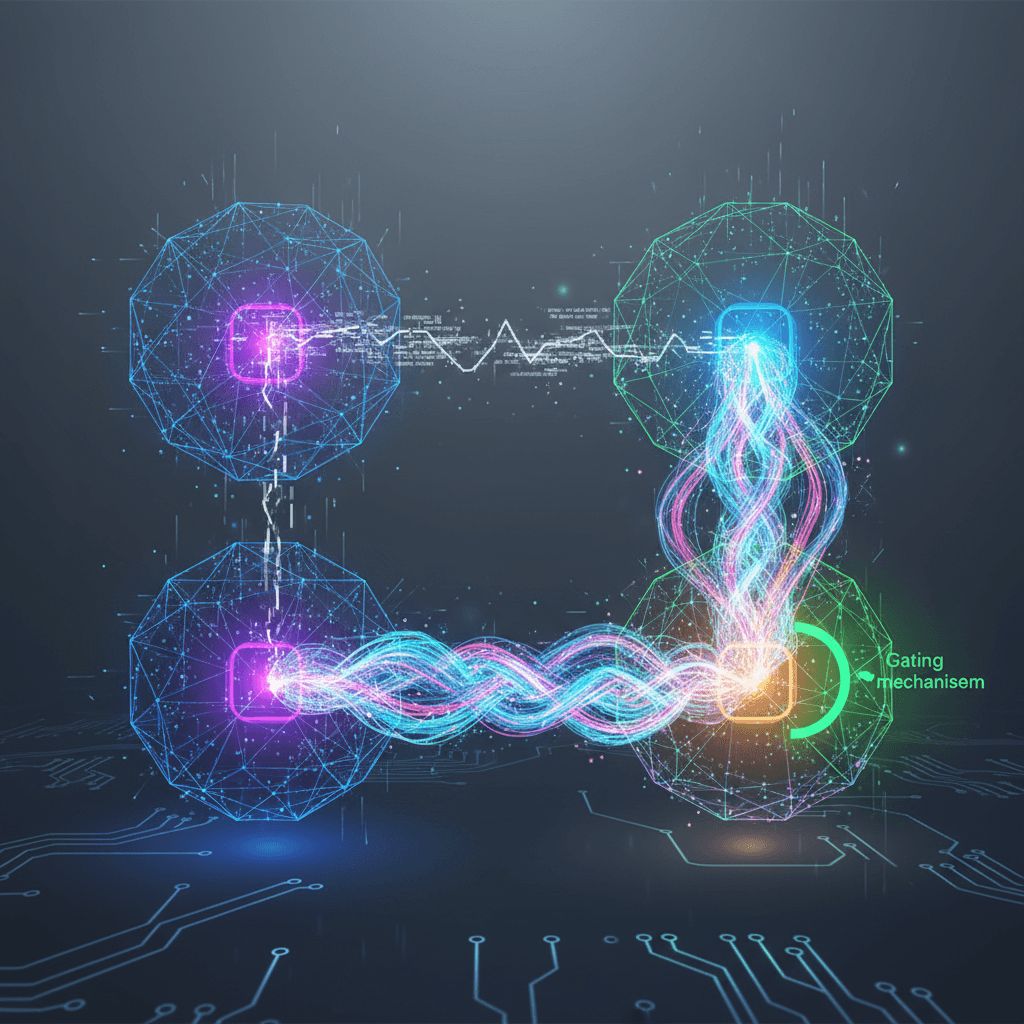
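
To make the KV-cache mentioned above more concrete, here is a minimal, illustrative sketch in plain Python. It is not code from the C2C researchers: the `KVCache` class, the `attend` function, and the two-dimensional toy vectors are all assumptions chosen for readability. It shows only the general idea that a model stores one key and one value vector per processed token, then reads them back through attention when producing the next token.

```python
import math


def attend(query, keys, values):
    """Single-head scaled dot-product attention over cached keys and values."""
    scale = 1.0 / math.sqrt(len(query))
    scores = [scale * sum(q * k for q, k in zip(query, key)) for key in keys]
    peak = max(scores)  # subtract the max for a numerically stable softmax
    weights = [math.exp(s - peak) for s in scores]
    total = sum(weights)
    dim = len(values[0])
    return [sum(w / total * v[i] for w, v in zip(weights, values)) for i in range(dim)]


class KVCache:
    """Toy cache for one layer (hypothetical): a key and a value per token."""

    def __init__(self):
        self.keys = []
        self.values = []

    def append(self, key, value):
        self.keys.append(key)
        self.values.append(value)


# Two tokens have been processed; their keys and values are kept for reuse.
cache = KVCache()
cache.append([1.0, 0.0], [10.0, 0.0])
cache.append([0.0, 1.0], [0.0, 10.0])

# A new query that resembles the first key mostly retrieves the first value.
out = attend([5.0, 0.0], cache.keys, cache.values)
print(out)
```

In a real LLM there is one such cache per layer and attention head, with far larger vectors. These cached tensors, not the generated text, are what C2C passes between models.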

At its core, the Cache-to-Cache system works by creating a direct bridge between the internal states of two different LLMs. The C2C framework uses a neural network to project the KV-cache from a source model into the compatible space of a target model.[1][5] This projected memory is then fused with the target model's own KV-cache.[1][2] This process allows for the direct transfer of rich semantic understanding without the intermediate and lossy step of generating text.[1][2][6] A crucial component of this architecture is a "learnable gating mechanism," which intelligently selects the specific layers within the target model that would most benefit from the external cache communication.[1][2][5] This ensures that the information is integrated in the most effective way, enhancing the receiving model's understanding and capabilities. By sharing the underlying meaning and context stored in this internal memory, the models can achieve a more profound and accurate level of communication.
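
The three steps described above (project, fuse, gate) can be sketched in a few lines of plain Python. This is a simplified illustration under stated assumptions, not the researchers' implementation: the article does not specify the projector network or the fusion rule, so the sketch assumes a single linear projection, a gated additive fusion, and one scalar gate per layer. The names `fuse_layer`, `projection`, and `gate_logit` are hypothetical.

```python
import math


def matvec(matrix, vector):
    """Multiply a (rows x cols) matrix by a vector of length cols."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def fuse_layer(target_cache, source_cache, projection, gate_logit):
    """Blend a projected source cache into the target cache for one layer.

    Assumptions (not from the article): the projector is one linear map,
    fusion is additive, and both caches cover the same token positions.
    """
    gate = sigmoid(gate_logit)  # near 0: ignore the source, near 1: admit it
    fused = []
    for tgt, src in zip(target_cache, source_cache):
        projected = matvec(projection, src)  # source space -> target space
        fused.append([t + gate * p for t, p in zip(tgt, projected)])
    return fused


# Source model uses 3-dimensional cache vectors, target model uses 2.
source_cache = [[1.0, 2.0, 3.0], [0.0, 1.0, 0.0]]
target_cache = [[0.5, 0.5], [1.0, -1.0]]
projection = [[1.0, 0.0, 0.0], [0.0, 1.0, 1.0]]  # would be learned in practice

# A layer whose gate is closed keeps its own cache; an open gate mixes both.
closed = fuse_layer(target_cache, source_cache, projection, gate_logit=-20.0)
opened = fuse_layer(target_cache, source_cache, projection, gate_logit=20.0)
print(closed)
print(opened)
```

In a trained system, both the projection weights and the gate values would be learned, so the model itself decides which layers accept external cache information. Here they are fixed by hand purely to show the data flow.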

The performance gains reported for the C2C method are substantial. Experiments conducted by the research team showed that C2C achieves an average accuracy 8.5% to 10.5% higher than individual models working alone.[1][2][6] It also outperforms traditional text-based communication between models by a margin of 3.0% to 5.0%.[1][2][6] The benefits extend beyond accuracy: because no intermediate text has to be generated, the C2C method runs about 2.0x faster on average.[1][2][6] The researchers tested this approach with various combinations of models, including Qwen, Llama, and Gemma, demonstrating its versatility.[4] The findings suggest that as multi-agent systems become more prevalent for tackling complex tasks, this form of direct communication will be critical for enabling them to coordinate and collaborate effectively, creating a whole that is truly greater than the sum of its parts.[7][8]

In conclusion, the development of Cache-to-Cache communication marks a pivotal moment in the evolution of collaborative AI. By enabling large language models to share meaning directly through their internal memory, this research from China offers a powerful solution to the bottlenecks of speed and accuracy that have constrained multi-agent systems. The reported improvements in performance and the reduction in latency provide a compelling case for moving beyond text as the primary interface for AI-to-AI interaction. As artificial intelligence continues to advance, fostering more sophisticated and efficient ways for models to share knowledge and work in concert will be essential. The C2C method represents a fundamental shift in this direction, promising a future where teams of specialized AIs can collaborate with unprecedented synergy and intelligence, tackling complex challenges far beyond the scope of any single model.