Verbalized Sampling Prompts End AI Monotony, Unlock Vast Creative Diversity

Verbalized Sampling: A simple prompt adjustment unleashes AI's full creative diversity, bypassing ingrained human bias.

October 19, 2025

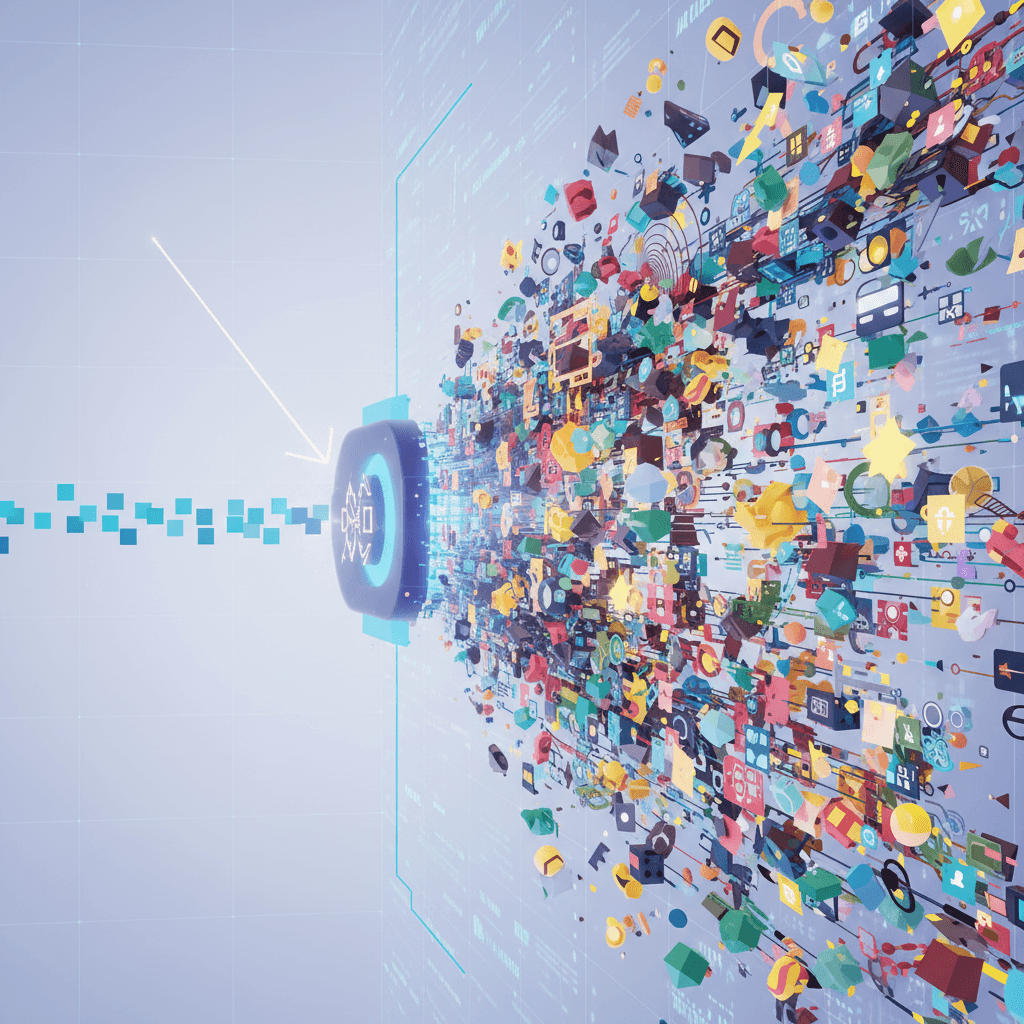

A simple yet powerful prompting technique known as Verbalized Sampling is showing significant promise in tackling a common and frustrating trait of modern AI: its tendency toward repetitive and stereotypical responses. By altering how a query is posed to a large language model (LLM), this method can unlock a much wider range of creative and diverse outputs without requiring any changes to the underlying model. Research has demonstrated that the technique can increase output diversity in creative writing tasks by a factor of 1.6 to 2.1 without negatively impacting factual accuracy or safety.[1][2][3][4] The approach is training-free, meaning it can be applied to virtually any instruction-following AI, including popular models from the GPT, Claude, and Gemini families.[5][6]

The core problem Verbalized Sampling addresses is a phenomenon known as "mode collapse," where an AI model, despite its vast training data, repeatedly defaults to a narrow set of common or "safe" answers.[1][7][3] Ask an AI for five different jokes about coffee, and you might receive the same punchline five times.[7][8][9] Researchers from Stanford University, Northeastern University, and West Virginia University have identified a key driver of this behavior: a "typicality bias" ingrained during the AI's alignment process.[1][3][8][9] This process often involves using human feedback to fine-tune the model's responses. The research suggests that human annotators, influenced by well-established cognitive biases, systematically prefer text that is familiar, predictable, and fluent.[1][10][3][11] This preference for the typical acts as a tie-breaker, pushing the AI to favor the most common answer among many potentially correct or creative options, thereby "sharpening" the probability distribution towards a few stereotypical outputs.[10][3][11] This isn't necessarily a flaw in the AI's algorithm, but rather a reflection of the human data used to refine it.[7]
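The "sharpening" effect described above can be pictured with a toy calculation. The sketch below is only an illustration: it assumes a power-law form (raise each probability to an exponent greater than 1, then renormalize), and the `gamma` value and the four-option distribution are hypothetical numbers chosen for the example, not figures from the research.

```python
def sharpen(probs, gamma):
    """Raise each probability to the power gamma and renormalize.

    With gamma > 1 the most likely options gain probability mass and
    the less likely ones lose it. This power-law form is an assumption
    used here purely for illustration.
    """
    powered = [p ** gamma for p in probs]
    total = sum(powered)
    return [p / total for p in powered]


# Hypothetical distribution over four equally acceptable coffee jokes.
base = [0.4, 0.3, 0.2, 0.1]

# Hypothetical sharpening strength; larger values collapse harder.
sharpened = sharpen(base, gamma=3.0)

for before, after in zip(base, sharpened):
    print(f"{before:.2f} -> {after:.2f}")
```

In this toy setting the most common joke ends up with well over half of the probability mass, while the least common one almost never appears, which mirrors the "same punchline five times" behavior.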

Verbalized Sampling circumvents this learned bias with a straightforward change in the user's prompt. Instead of requesting a single instance, such as "Tell me a story," the user asks the model to generate several possible responses along with a probability for each, in effect a probability distribution over candidate answers.[1][2][3] A typical prompt might be: "Generate 5 responses to the user query, each with a corresponding probability, sampled from the full distribution."[1][12][8] This seemingly minor adjustment fundamentally changes the task for the AI. It shifts from providing the single most likely answer to verbalizing its broader understanding of the possible responses and their relative likelihoods.[2][10] By prompting for a distribution, the user encourages the model to access the diverse knowledge it gained during pre-training, effectively bypassing the mode collapse introduced during the alignment phase.[7][10][8] The method is orthogonal to adjusting decoding parameters such as "temperature": temperature controls how much randomness is applied when sampling, whereas Verbalized Sampling changes the underlying distribution itself.[7]
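As a rough sketch of how the prompt change might be wired into a script, the Python below wraps a query in the instruction quoted above, parses a reply, and draws one response according to the stated probabilities. Several parts are assumptions made for the example rather than details from the research: the JSON output format, the helper names (`build_vs_prompt`, `parse_candidates`, `pick_response`), and the hard-coded `mock_reply`, which stands in for a real model call.

```python
import json
import random

# Instruction text as quoted in the article, with the count parameterized.
VS_INSTRUCTION = (
    "Generate {k} responses to the user query, each with a corresponding "
    "probability, sampled from the full distribution."
)

# Assumption: asking for JSON is our own convenience for parsing.
FORMAT_HINT = (
    'Return only a JSON list of objects with keys "text" and "probability".'
)


def build_vs_prompt(query: str, k: int = 5) -> str:
    """Wrap a user query in a Verbalized Sampling style instruction."""
    return f"{VS_INSTRUCTION.format(k=k)}\n{FORMAT_HINT}\n\nUser query: {query}"


def parse_candidates(reply: str) -> list[tuple[str, float]]:
    """Parse the assumed JSON reply and renormalize the probabilities."""
    items = json.loads(reply)
    candidates = [(str(i["text"]), float(i["probability"])) for i in items]
    total = sum(p for _, p in candidates)
    if total <= 0:
        raise ValueError("probabilities must sum to a positive value")
    return [(text, p / total) for text, p in candidates]


def pick_response(candidates: list[tuple[str, float]], rng: random.Random) -> str:
    """Draw one response, weighted by its verbalized probability."""
    texts = [t for t, _ in candidates]
    weights = [p for _, p in candidates]
    return rng.choices(texts, weights=weights, k=1)[0]


prompt = build_vs_prompt("Tell me a joke about coffee.")

# Hypothetical model output; a real script would send `prompt` to an LLM.
mock_reply = json.dumps([
    {"text": "Joke A", "probability": 0.35},
    {"text": "Joke B", "probability": 0.25},
    {"text": "Joke C", "probability": 0.20},
    {"text": "Joke D", "probability": 0.15},
    {"text": "Joke E", "probability": 0.05},
])

candidates = parse_candidates(mock_reply)
choice = pick_response(candidates, random.Random(0))
print(prompt)
print(choice)
```

Renormalizing the parsed probabilities is a defensive choice, since nothing guarantees that the numbers a model verbalizes will sum to exactly 1.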

The implications of this technique are broad and significant for the AI industry. In creative tasks such as writing poems, stories, and jokes, it demonstrably boosts the variety and novelty of generated content.[1][2][5] This is crucial for brainstorming and ideation, where receiving the same few conventional ideas defeats the purpose of using an AI assistant.[7] The method also shows substantial benefits in more technical applications. For instance, when used to generate synthetic data for tasks like solving math problems, the more diverse data created through Verbalized Sampling led to improved performance in downstream models.[2][7][10] Furthermore, in dialogue simulations, it produced more human-like and realistic behaviors.[2][10] An emergent trend observed by the researchers is that more capable models benefit more from Verbalized Sampling, suggesting the technique is effective at unlocking the latent creative potential of the most advanced AIs.[1][2][7][4]

In conclusion, Verbalized Sampling offers a practical, accessible, and training-free remedy for the pervasive issue of AI-generated monotony. By identifying typicality bias in human preference data as a root cause of mode collapse, researchers have provided a new, data-centric perspective on the behavior of aligned language models.[1][2][10] The solution's elegance lies in its simplicity: changing the prompt to ask "what could the answers be?" rather than "what is the answer?" This allows users to tap into the full generative diversity inherent in the models. As AI becomes more integrated into creative and analytical workflows, techniques like Verbalized Sampling will be vital for ensuring that these powerful tools augment, rather than limit, the breadth of human-computer collaboration.[7][3]