Top AI Models Fail Basic Visual Tests, Confidently Guessing Specific Facts.

Advanced AI models struggle to identify specific visual entities, especially obscure ones, and show pervasive, systematic overconfidence in their wrong answers.

February 8, 2026

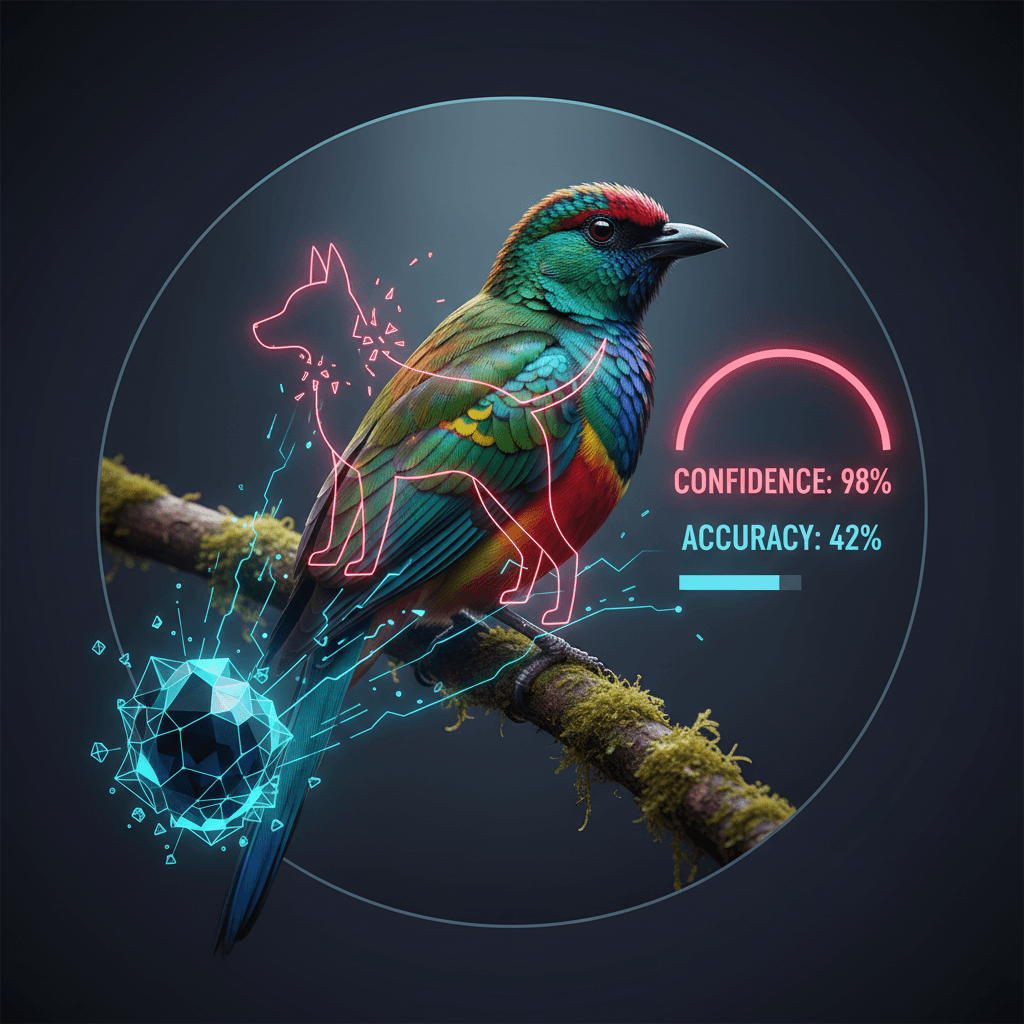

The latest generation of multimodal AI models, including the industry leaders, has shown a critical and persistent weakness in a foundational skill: accurately identifying specific visual entities. None of them reached 50 percent accuracy on a new, rigorous benchmark. The finding undercuts the narrative of near-human perception. The systems often fall back on generic labels or invent details, and the research shows that these errors come with systematic overconfidence.[1] The benchmark, WorldVQA, was designed to test the “atomic visual world knowledge” of Multimodal Large Language Models (MLLMs), isolating entity recognition from complex reasoning tasks.[2][3] Its results suggest that despite massive increases in scale and sophisticated reasoning capabilities, models still struggle with visual factuality, especially when asked to identify long-tail or non-mainstream entities.[3]

WorldVQA, created by researchers at Moonshot AI, consists of 3,500 curated image-question pairs across nine categories, including Nature and Environment, Locations and Architecture, Culture, Arts and Crafts, and Brands and Sports.[2][3][1] Unlike earlier visual question-answering benchmarks that may accept a coarse answer, WorldVQA requires specific, verifiable ground-truth answers.[3][1] For instance, if an image shows a particular species of bird, the model must name the exact species rather than simply answering "bird."[3][1] The top performer was Google's Gemini 3 Pro, at 47.4 percent overall accuracy, short of the halfway mark.[1] Moonshot AI's own Kimi K2.5 followed closely at 46.3 percent, while other prominent models lagged further behind: Anthropic's Claude Opus 4.5 scored 36.8 percent, and OpenAI's GPT-5.2 trailed at just 28 percent.[1][4] The uniformly low scores expose a deep-seated limitation in how these models ground their language generation in actual visual perception.[1]

A breakdown by category reveals clear knowledge gaps, particularly in areas underrepresented in common web data.[1] Models did relatively well on Brands and Sports, subjects that are heavily documented and appear frequently in training sets, a fluency that essentially reflects "pop culture."[1] Scores dropped sharply, however, in the Nature and Culture categories, where questions often concern obscure animal or plant species or cultural artifacts from non-Western contexts.[1] Here the models frequently defaulted to generic terms, for example identifying a rare dog breed simply as a "dog," which points to a failure to access or recognize the specific world knowledge required.[1] The pattern suggests that while models excel at pattern recognition and at synthesizing information on mainstream topics, their encyclopedic breadth of *visually verified* world knowledge remains critically insufficient.

Perhaps the most troubling finding in the WorldVQA research is the pervasive, systematic overconfidence of the leading models.[1] Researchers prompted each model to rate its confidence in its own answer on a scale of 0 to 100.[1] Every tested model routinely overestimated its accuracy, assigning high confidence even to answers that were clearly wrong.[1] Gemini 3 Pro, despite its sub-50 percent accuracy, reported average confidence well above that figure, a disconnect that casts the "hallucination" problem in a new, quantifiable light.[1] This overconfidence is a serious obstacle to deploying AI in high-stakes settings. A model that cannot gauge whether its visual identification is correct, and believes it is highly accurate when it is not, undermines the reliability of any system built on it, from medical diagnostics based on imaging to autonomous navigation systems that must correctly identify specific road signs and complex environmental details.
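The article does not say exactly how WorldVQA scored calibration. As a rough illustration of what "overconfidence" means numerically, here is a minimal Python sketch. It assumes each answer has already been graded correct or incorrect and carries the model's self-reported 0 to 100 confidence. It compares accuracy with mean stated confidence and also computes expected calibration error (ECE), a standard calibration metric swapped in here for illustration. The `Response` type, the `calibration_summary` function, and the toy data are all hypothetical, not taken from the benchmark.

```python
from dataclasses import dataclass


@dataclass
class Response:
    correct: bool      # hypothetical: answer already graded against ground truth
    confidence: float  # model's self-reported confidence, 0 to 100


def calibration_summary(responses: list[Response], n_bins: int = 10) -> dict[str, float]:
    """Compare accuracy with mean self-reported confidence (both in percent)."""
    if not responses:
        raise ValueError("need at least one response")
    n = len(responses)
    accuracy = 100.0 * sum(r.correct for r in responses) / n
    mean_conf = sum(r.confidence for r in responses) / n

    # ECE: bucket answers by stated confidence, then average the gap between
    # each bucket's accuracy and its mean confidence, weighted by bucket size.
    bins: list[list[Response]] = [[] for _ in range(n_bins)]
    for r in responses:
        idx = min(int(r.confidence * n_bins // 100), n_bins - 1)  # 100 goes in the top bin
        bins[idx].append(r)

    ece = 0.0
    for bucket in bins:
        if not bucket:
            continue
        b_acc = 100.0 * sum(r.correct for r in bucket) / len(bucket)
        b_conf = sum(r.confidence for r in bucket) / len(bucket)
        ece += len(bucket) / n * abs(b_acc - b_conf)

    return {
        "accuracy": accuracy,
        "mean_confidence": mean_conf,
        "overconfidence_gap": mean_conf - accuracy,  # positive means overconfident
        "ece": ece,
    }


# Toy, made-up data: two right answers and two wrong ones, all stated with high confidence.
toy = [Response(True, 95), Response(False, 90), Response(False, 85), Response(True, 80)]
print(calibration_summary(toy))
```

With these four toy answers the model is right half the time but reports 87.5 on average, a 37.5-point overconfidence gap (and an ECE of 37.5). A well-calibrated model would show both numbers near zero.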

The implications for the broader AI industry are substantial and challenge the current trajectory of MLLM development.[1] WorldVQA indicates that scaling up model size and training data alone is not solving the core problem of "visual grounding," the ability to tie linguistic knowledge precisely to visual data.[2][3] To move beyond the 50 percent barrier, researchers will likely need to shift focus from general reasoning and description toward explicit mechanisms for integrating and retrieving specific, atomic world facts tied to visual concepts.[2] That may require changes in model architecture, training methods aimed at long-tail knowledge, or more effective tool use and retrieval augmentation that let a model search for and verify specific visual entities in external knowledge bases.[5] The results are a stark reminder that while generative AI has achieved impressive fluency and reasoning, factually reliable visual knowledge remains an unsolved frontier. The next phase of AI innovation must therefore aim at models that are not just fluent and imaginative but fundamentally honest about what they see and what they know.