To Master AI, Businesses Must Prioritize Building Human Trust

The AI trust gap: Transparency, ethical governance, and human-centric strategies are vital for successful business adoption.

October 27, 2025

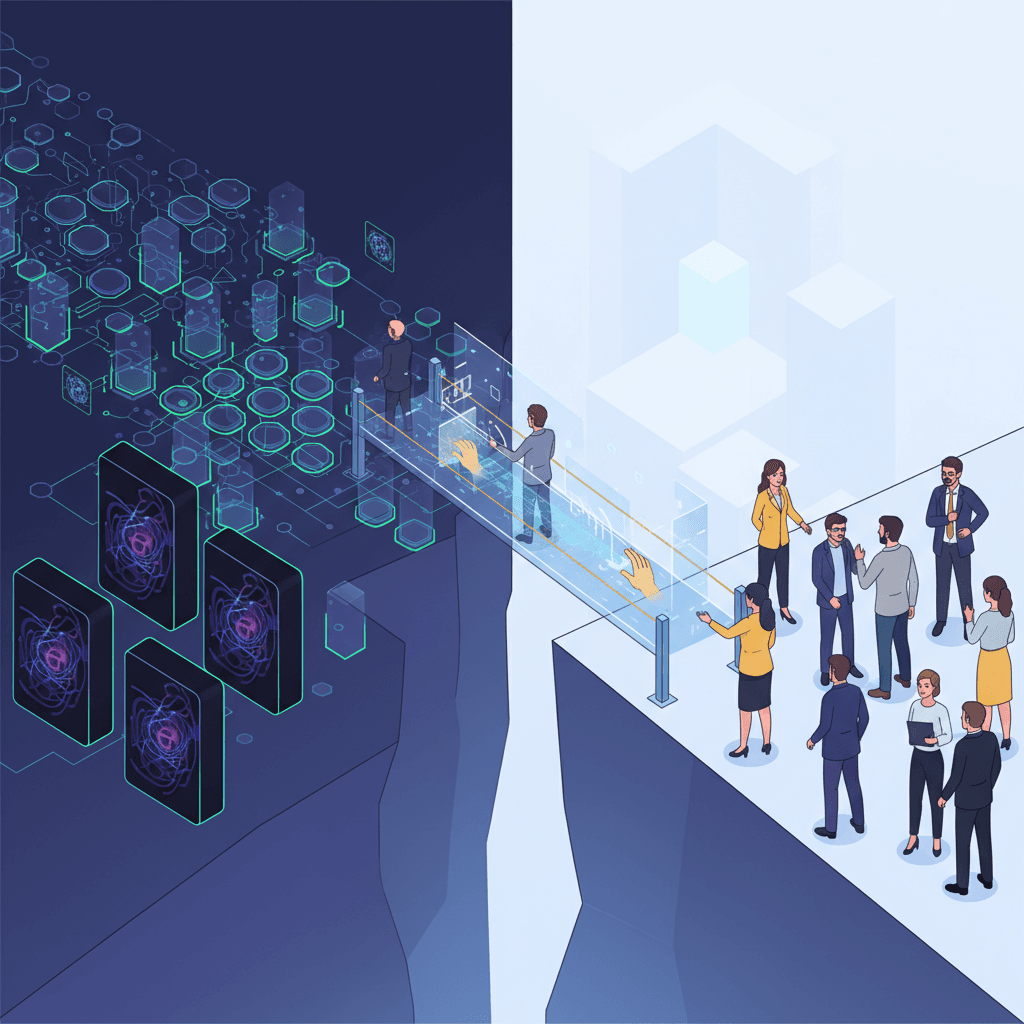

Artificial intelligence is rapidly becoming a cornerstone of modern business, yet a significant gap separates its widespread implementation from people's willingness to trust it. While companies increasingly rely on AI for everything from operational efficiency to customer experience, trust in these systems remains precarious: only 46% of people globally say they trust AI.[1] This chasm between adoption and acceptance presents a critical challenge for business leaders. Adopting AI is no longer optional for companies that want to stay competitive, but integrating it successfully requires more than technical deployment; it demands a concerted effort to build confidence among employees, customers, and the public. For businesses, bridging this AI trust gap is not merely a compliance issue but a strategic imperative that underpins long-term growth, stakeholder confidence, and the ultimate return on investment from this transformative technology.[2][3]

The roots of the AI trust gap are multifaceted, stemming from deep-seated concerns about the technology's reliability, transparency, and ethical use. A primary driver of skepticism is the "black box" problem, where the decision-making processes of complex algorithms are opaque even to their creators, let alone the average user.[4][5] This lack of transparency breeds suspicion, especially when AI is used in high-stakes areas like hiring, lending, or healthcare.[6] Furthermore, high-profile instances of AI bias have demonstrated how systems trained on historical data can perpetuate and even amplify societal discrimination, eroding public confidence.[7][8] Data privacy is another major concern, with many consumers expressing discomfort over how their personal information is collected and used to train AI models.[9][10] Research shows that while a majority of consumers want personalized experiences, only 39% trust companies to use their personal data responsibly.[10] This apprehension is mirrored in the workplace, where a recent Gallup survey revealed that 53% of workers feel unprepared to work with AI, and many harbor fears about job displacement and a lack of fairness in AI-driven evaluations.[11][12]

To effectively close this trust deficit, businesses must move beyond mere implementation and adopt a proactive strategy centered on transparency, accountability, and ethical governance. A foundational step is the adoption of Explainable AI (XAI), a set of methods and techniques designed to make AI decisions understandable to humans.[13][14][15] XAI allows stakeholders to comprehend the reasoning behind an AI's output, identify potential biases, and verify that the system is functioning as intended.[13][4] Companies like Adobe and Salesforce have embraced transparency by being open about the data used to train their models and by building features that cite sources and highlight areas where AI-generated answers may be uncertain.[2][16] This level of openness is crucial, as it transforms AI from an inscrutable authority into a verifiable tool.

Establishing a robust AI governance framework is equally essential.[17][18] This involves creating clear policies, defining roles and responsibilities for AI oversight, and embedding ethical principles throughout the entire AI lifecycle, from data collection to deployment and monitoring.[19][17][20] Such frameworks ensure that AI systems are not only compliant with regulations like the EU AI Act but also aligned with corporate values and societal expectations.[21][6]

Building trust is not solely a technical or policy challenge; it is fundamentally about people. For employees, this means clear communication about the purpose of AI integration, reassuring them that the technology is intended to augment their roles, not replace them.[22] Providing comprehensive training and fostering a culture where employees can voice concerns and share best practices is critical for internal adoption.[23][24][25] In fact, 75% of employees report they would be more accepting of AI if their companies were more transparent about its use.[12] Creating opportunities for feedback and incorporating a "human-in-the-loop" process, in which people can review and override AI decisions in critical situations, reinforces accountability and ensures that human judgment remains central.[2][21] For customers, trust is built through clear labeling of AI-generated content and transparent communication about how their data is used.[26][9] When businesses prioritize a human-centric approach, they foster a collaborative environment in which AI is viewed not with suspicion but as a reliable partner in achieving shared goals.[27][24]

Ultimately, navigating the AI trust gap is an ongoing commitment rather than a one-time fix. As AI technology continues to evolve at a breathtaking pace, the ethical and societal questions it raises will become more complex.[28] Businesses that succeed will be those that view trust as the central pillar of their AI strategy. This requires moving beyond a compliance-focused mindset to one that actively engineers ethics, fairness, and transparency into the very fabric of their AI systems.[29] By demystifying AI's inner workings through explainability, establishing clear governance and accountability structures, and engaging openly with both employees and customers, organizations can bridge the divide between technological capability and human confidence. The future of AI in business depends not just on the power of the algorithms, but on the willingness of people to believe in them. Building that belief is the essential work that lies ahead.[30][21]

Sources

[1]

[3]

[5]

[6]

[7]

[10]

[13]

[14]

[15]

[16]

[17]

[18]

[19]

[20]

[21]

[22]

[23]

[24]

[25]

[26]

[27]

[28]

[30]