Starcloud trains and runs the first large language models in space, pioneering sustainable AI.

Starcloud's first in-orbit model training, run on an NVIDIA H100, points toward sustainable AI computing off-Earth.

December 10, 2025

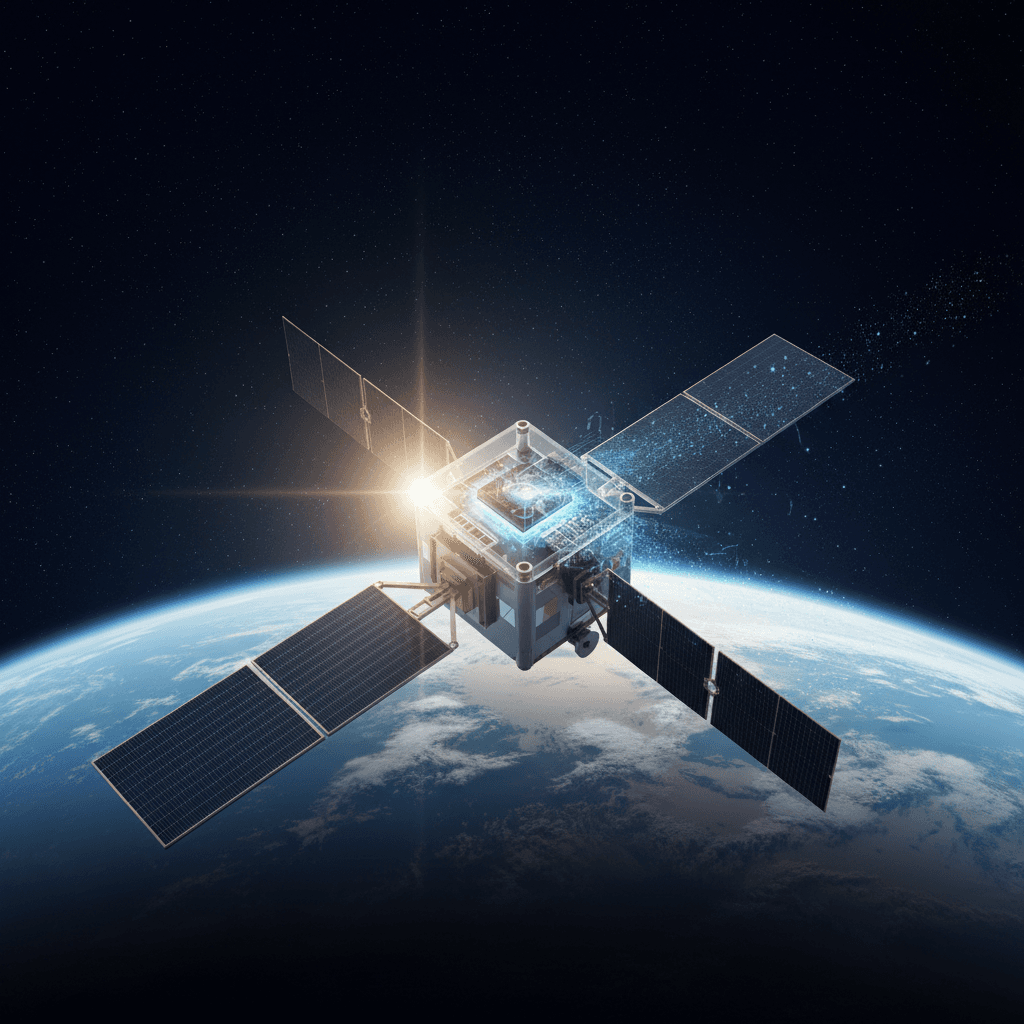

In a landmark achievement that blurs the line between science fiction and the future of artificial intelligence, the NVIDIA-backed startup Starcloud has trained and run the first large language models in space. Aboard its Starcloud-1 satellite, orbiting approximately 325 kilometers above Earth, the company used an NVIDIA H100 GPU to train Andrej Karpathy's nanoGPT on the complete works of Shakespeare and to run inference on Google's open Gemma model. This pioneering mission serves as a critical proof of concept for the firm's goal of moving the large and growing energy footprint of AI data centers off the planet. Operating a state-of-the-art, data-center-class GPU in the harsh environment of space is a significant step toward what some industry leaders believe is an inevitable shift in global computing infrastructure.

The primary driver behind this ambition is the terrestrial crisis of energy and resource consumption. On Earth, data centers are voracious consumers of electricity and water, placing heavy strain on power grids and local environments.[1] The cooling systems that keep servers from overheating are particularly resource-intensive, often consuming large amounts of fresh water.[2] Starcloud's proposition is that space offers fundamental advantages that sidestep these earthly limitations. In a dawn-dusk sun-synchronous orbit, a satellite can stay in near-constant sunlight, largely removing the need for battery storage or for drawing on strained terrestrial grids. Furthermore, the cold background of deep space serves as an effectively unlimited heat sink for passive radiative cooling, removing the need for water-based cooling systems. Starcloud projects that this approach could eventually yield energy costs up to ten times lower than land-based options and a significant reduction in the carbon footprint of large-scale AI.[1][3]
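The storage argument can be checked with simple arithmetic. The Python sketch below compares the battery energy and array output needed to keep a constant load running through an orbit that passes through Earth's shadow against an idealized orbit that never does. The 1 kW load, 90-minute period and 35-minute eclipse are illustrative assumptions rather than Starcloud figures, and charge/discharge losses are ignored.

```python
"""Back-of-the-envelope battery sizing for an orbiting compute payload.

All numbers are illustrative assumptions, not Starcloud specifications.
"""


def battery_energy_wh(load_w: float, eclipse_min: float) -> float:
    """Energy (Wh) the battery must supply to keep the load running through one eclipse."""
    return load_w * eclipse_min / 60.0


def array_output_w(load_w: float, period_min: float, eclipse_min: float) -> float:
    """Average array output (W) needed while sunlit to run the load AND recharge
    the battery, ignoring charge/discharge losses."""
    sunlit_min = period_min - eclipse_min
    if sunlit_min <= 0:
        raise ValueError("satellite must spend some of the orbit in sunlight")
    # Energy used over a full orbit must be collected during the sunlit portion.
    return load_w * period_min / sunlit_min


if __name__ == "__main__":
    LOAD_W = 1000.0     # assumed continuous compute load
    PERIOD_MIN = 90.0   # assumed orbital period in low Earth orbit

    # Assumed 35-minute eclipse vs. an idealized dawn-dusk orbit with no eclipse.
    for label, eclipse in [("eclipsing LEO orbit", 35.0), ("dawn-dusk orbit", 0.0)]:
        print(f"{label}: battery {battery_energy_wh(LOAD_W, eclipse):.0f} Wh per eclipse, "
              f"array output {array_output_w(LOAD_W, PERIOD_MIN, eclipse):.0f} W")
```

Under these assumptions the eclipsing orbit needs roughly 580 Wh of storage per pass and an array delivering about 64% more than the load, while the idealized dawn-dusk case needs neither, which is the advantage the paragraph describes.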

Executing this vision, however, required overcoming formidable engineering hurdles. The Starcloud-1 satellite, a 60-kilogram microsatellite roughly the size of a small refrigerator, was designed specifically to house and operate the NVIDIA H100, a GPU that is approximately 100 times more powerful than any processor previously sent into orbit.[1][2] Operating such sensitive, high-power electronics in low Earth orbit presents unique challenges, most notably intense radiation and the difficulty of shedding heat in a vacuum, where there is no air or water to carry it away.[4] Starcloud has developed proprietary hardware for shielding against cosmic radiation and software designed to autonomously mitigate its effects.[5] For thermal management, the system relies on deployable radiators that radiate the GPU's substantial waste heat directly into the cold of space, a solution that is both effective and weight-efficient for a satellite platform.[6][7] The successful training of nanoGPT and operation of Gemma validate these engineering solutions.
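Why radiator area matters can be seen from the Stefan-Boltzmann law: a surface of area A, emissivity ε and temperature T radiates net power P = εσA(T^4 - T_sink^4) toward a colder sink. The sketch below solves that relation for A. The 700 W heat load (the upper end of the H100 SXM's rated board power; which H100 variant Starcloud-1 carries is not stated here), the 0.9 emissivity and the 3 K deep-space sink are assumptions. Real radiators in low Earth orbit must also reject absorbed sunlight and Earth's infrared glow, so actual areas would be larger.

```python
"""Rough radiator sizing via the Stefan-Boltzmann law.

Simplifying assumptions (not Starcloud data): the radiator sees only deep space,
absorbed sunlight and Earth's infrared glow are ignored, and heat leaves one face.
"""

SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def radiated_flux_w_per_m2(emissivity: float, t_radiator_k: float, t_sink_k: float = 3.0) -> float:
    """Net power radiated per square metre of radiator surface."""
    return emissivity * SIGMA * (t_radiator_k**4 - t_sink_k**4)


def radiator_area_m2(heat_w: float, emissivity: float, t_radiator_k: float,
                     t_sink_k: float = 3.0) -> float:
    """Radiator area needed to reject `heat_w` watts at steady state."""
    flux = radiated_flux_w_per_m2(emissivity, t_radiator_k, t_sink_k)
    if flux <= 0:
        raise ValueError("radiator must be hotter than its sink")
    return heat_w / flux


if __name__ == "__main__":
    HEAT_W = 700.0      # assumed GPU heat load (upper end of H100 SXM board power)
    EMISSIVITY = 0.9    # assumed high-emissivity radiator coating
    for t_k in (300.0, 320.0, 340.0):
        print(f"radiator at {t_k:.0f} K: {radiator_area_m2(HEAT_W, EMISSIVITY, t_k):.2f} m^2")
```

Because emitted power scales with the fourth power of temperature, a hotter radiator shrinks quickly: under these assumptions the required area falls from about 1.7 m² at 300 K to about 1.3 m² at 320 K and 1.0 m² at 340 K.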

With this demonstration, Starcloud is advancing a phased business strategy aimed at systematically building a new market for in-orbit computing. The company, which has raised approximately $28 million in seed funding from investors including Y Combinator, NFX, and NVIDIA, is initially targeting the Earth observation market.[8] Satellites continually generate terabytes of data, but a persistent bottleneck is the limited windows for downlinking that raw data to ground stations.[9] By providing powerful GPU compute in orbit, Starcloud would let other satellites process their data in real time and send down only the crucial insights. This capability is especially valuable for time-sensitive applications such as wildfire detection, disaster response, and national security.[9][10] The company's next launch, Starcloud-2, is planned for 2026 and is expected to be significantly more powerful, incorporating multiple H100 chips and next-generation NVIDIA Blackwell hardware.[1] Starcloud has also partnered with Crusoe, an AI infrastructure provider, to begin offering cloud computing services directly from space by early 2027.[11] In this second phase of its plan, Starcloud would compete directly with terrestrial cloud providers for AI model training and inference workloads, a market poised for explosive growth.
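The downlink bottleneck is ultimately a capacity calculation. The sketch below compares how much data a satellite can send down per day with how much it generates, before and after onboard processing. The number of ground-station passes, pass length, link rate, daily data volume and the 1000:1 reduction from onboard analysis are all hypothetical placeholders chosen for illustration.

```python
"""Illustrative downlink budget: raw imagery vs. onboard-processed results.

Every number here is a hypothetical placeholder, not a figure from Starcloud or any operator.
"""


def daily_downlink_gb(passes_per_day: int, pass_minutes: float, link_mbps: float) -> float:
    """Data (GB) that fits through ground-station contacts in one day."""
    seconds = passes_per_day * pass_minutes * 60.0
    return seconds * link_mbps / 8.0 / 1000.0  # Mbit -> MB -> GB (decimal units)


def backlog_gb(generated_gb: float, capacity_gb: float) -> float:
    """Data left on board at the end of the day (zero if everything fits)."""
    return max(0.0, generated_gb - capacity_gb)


if __name__ == "__main__":
    # Hypothetical contact plan: 6 passes of 8 minutes at 300 Mbit/s.
    capacity = daily_downlink_gb(passes_per_day=6, pass_minutes=8.0, link_mbps=300.0)
    raw = 2000.0               # hypothetical raw data generated per day, GB
    processed = raw / 1000.0   # hypothetical: onboard analysis keeps 0.1% (detections, alerts)
    print(f"downlink capacity: {capacity:.0f} GB/day")
    print(f"backlog with raw data: {backlog_gb(raw, capacity):.0f} GB")
    print(f"backlog after onboard processing: {backlog_gb(processed, capacity):.0f} GB")
```

With these placeholder values, a satellite producing 2 TB a day can downlink only about 108 GB, leaving most of its raw data stranded on board, while a compact stream of processed results fits comfortably within the same contact windows.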

The long-term vision for Starcloud and a growing segment of the tech industry is the creation of massive, gigawatt-scale data centers in orbit. Starcloud envisions eventual facilities powered by solar arrays stretching kilometers across, capable of supporting AI training clusters that would be difficult, if not impossible, to power and cool on Earth.[1][9] This ambition is echoed by industry leaders who foresee falling launch costs, driven by reusable rockets such as SpaceX's Starship, making large-scale orbital infrastructure economically competitive with building on land.[5][12] Significant challenges remain in in-space assembly, maintenance, and orbital debris, but this first successful training of an AI model in orbit is a pivotal moment. It moves orbital data centers from a distant theoretical possibility toward a tangible engineering reality, potentially paving the way for a more sustainable and scalable future for artificial intelligence.
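The kilometer scale of such arrays follows from dividing the target power by the electrical output of each square meter of array. The sketch below assumes a mean solar irradiance of about 1361 W/m² near Earth, an illustrative 30% cell efficiency, and an array that always faces the Sun with no packing, pointing, wiring or degradation losses; real designs would need more area.

```python
"""Order-of-magnitude solar array sizing for a gigawatt-class orbital data center.

Assumptions (illustrative only): sunlight at ~1361 W/m^2 (mean solar irradiance
near Earth), a fixed cell efficiency, an array always facing the Sun, and no losses
from packing, pointing, wiring or degradation.
"""

import math

SOLAR_IRRADIANCE_W_PER_M2 = 1361.0


def array_area_m2(power_w: float, efficiency: float) -> float:
    """Array area needed to produce `power_w` watts of electrical output."""
    if not 0.0 < efficiency <= 1.0:
        raise ValueError("efficiency must be in (0, 1]")
    return power_w / (SOLAR_IRRADIANCE_W_PER_M2 * efficiency)


if __name__ == "__main__":
    area = array_area_m2(power_w=1e9, efficiency=0.30)  # 1 GW at an assumed 30% efficiency
    side_km = math.sqrt(area) / 1000.0                   # side of an equivalent square array
    print(f"area: {area / 1e6:.2f} km^2, square side: {side_km:.2f} km")
```

Under these generous assumptions, 1 GW of electrical output needs roughly 2.45 km² of array, equivalent to a square about 1.6 km on a side.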