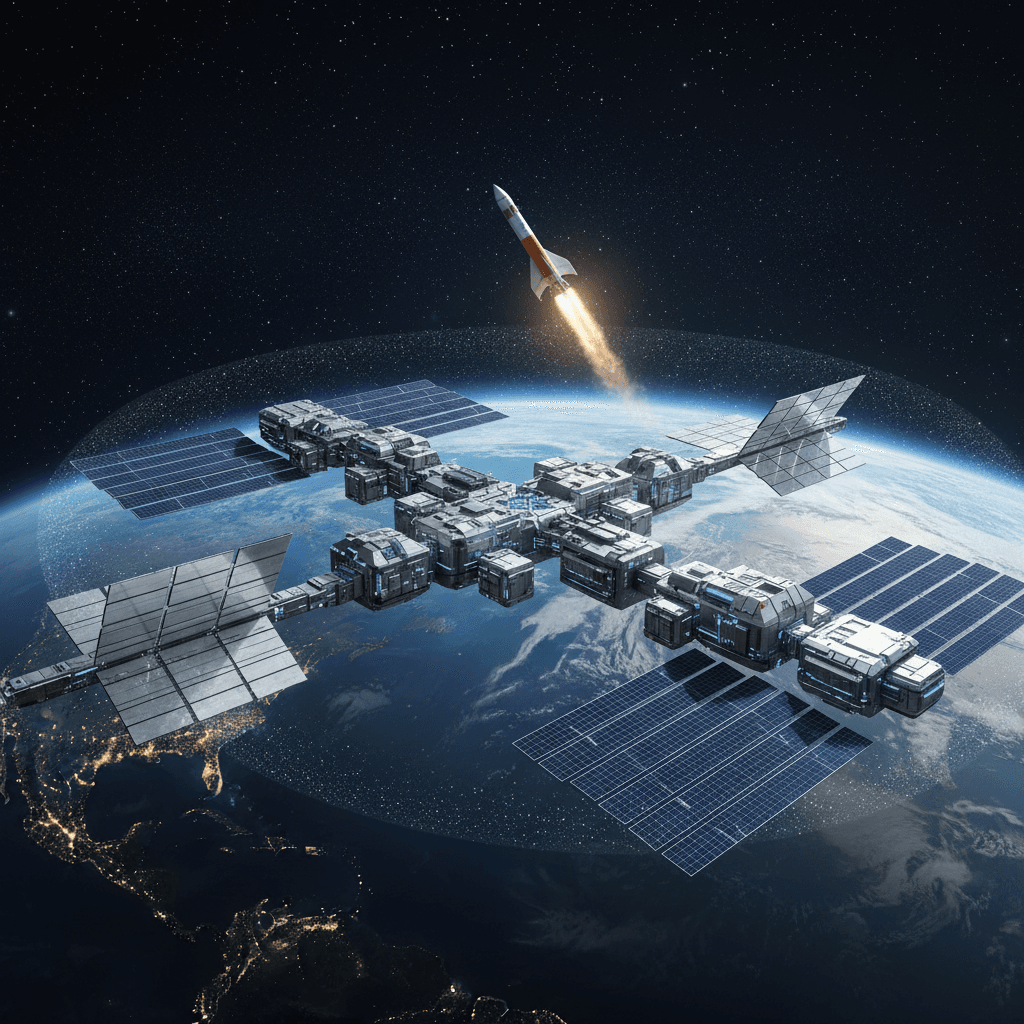

Reusable Rockets Propel AI to Space: Orbital Data Centers Become Reality

Beyond Earth: AI's future demands orbital data centers, which will need groundbreaking technology to overcome extreme cooling challenges, radiation, and launch costs.

December 11, 2025

The insatiable energy demands of modern artificial intelligence are pushing technology giants to look beyond Earth, conceptualizing a future where massive data centers orbit the planet.[1][2] This ambitious leap into space is driven by the potential for near-limitless solar power and a solution to the increasingly complex and resource-intensive challenge of cooling high-performance processors.[3][4][5] However, this cosmic ambition is tethered to terrestrial realities; realizing the vision of AI in space hinges on overcoming two monumental hurdles: developing novel cooling technologies for the vacuum of space and ensuring the economic viability of such projects through cheap, reusable rockets.[6][7] Companies are now thinking in terms of decades, not years, signaling a long-term strategic shift in how and where the next generation of AI will operate.[8]

The primary challenge for deploying powerful AI hardware in orbit is thermodynamics.[6] On Earth, data centers rely on air and water for cooling, but in the vacuum of space there is no air to carry heat away by convection.[9][4] Heat can be moved around inside a spacecraft by conduction, but ultimately it must be rejected into space by radiation.[9][10] This fundamental constraint requires a complete rethinking of thermal management for the GPUs and other processors that power AI.[9][4] Engineers are exploring passive systems such as heat pipes, which carry heat away from the chips, and large radiator surfaces, which emit that heat into space.[6][10] The cold background of deep space acts as an effectively unlimited heat sink for these radiators, which could make orbital data centers more efficient than their terrestrial counterparts, which consume vast amounts of water.[3][11] Startups like Starcloud and established players like Google are actively developing these systems, with Starcloud planning to use large cooling panels and Google focusing on passive systems to maximize reliability.[3][6] The design of these systems is critical: components must typically stay within an operating range of roughly -40°C to 85°C to avoid failure, even though temperatures in orbit swing from about -150°C in shadow to over 120°C in direct sunlight.[9]
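Because radiation is the only way out, radiator size follows from the Stefan-Boltzmann law, P = εσA(T⁴ − T_sink⁴). The sketch below is a back-of-envelope illustration, not a design method from Starcloud, Google, or the cited sources. It assumes an idealized flat radiator emitting from one face at a uniform temperature, a 3 K deep-space sink, an emissivity of 0.9, and no absorbed sunlight or Earth infrared; the function names are hypothetical.

```python
# Back-of-envelope radiator sizing from the Stefan-Boltzmann law.
# Assumptions (illustrative only): ideal flat radiator emitting from one face,
# uniform surface temperature, 3 K deep-space sink, no absorbed sunlight or
# Earth infrared. Real spacecraft radiators must account for all of these.

SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W / (m^2 K^4)


def net_radiated_flux(temp_k: float, emissivity: float, sink_k: float = 3.0) -> float:
    """Net power radiated per square metre of radiator surface (W/m^2)."""
    return emissivity * SIGMA * (temp_k**4 - sink_k**4)


def radiator_area(power_w: float, temp_k: float, emissivity: float = 0.9,
                  sink_k: float = 3.0) -> float:
    """Radiator area (m^2) needed to reject `power_w` watts of waste heat."""
    flux = net_radiated_flux(temp_k, emissivity, sink_k)
    if flux <= 0:
        raise ValueError("radiator must be warmer than the heat sink")
    return power_w / flux


if __name__ == "__main__":
    # Hypothetical case: 1 MW of chip heat, radiator held at 300 K (about 27 °C).
    area = radiator_area(1e6, 300.0)
    print(f"radiator area: {area:,.0f} m^2")
```

Under these assumptions, rejecting 1 MW at 300 K takes roughly 2,400 m² of single-sided radiator. The T⁴ term is why designers want radiators to run as hot as the electronics allow: a modest rise in radiator temperature shrinks the required area sharply, while the -40°C to 85°C component limits cap how far that can go.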

Beyond the thermal challenge lies the harsh radiation environment of space.[6][12] Constant bombardment by cosmic rays and high-energy particles can cause "Single Event Effects," such as spontaneous bit flips in memory that corrupt data and calculations.[6][12] Over time, the cumulative exposure, known as the total ionizing dose, can also permanently degrade or destroy sensitive electronic components.[6][12] Traditional space missions use radiation-hardened processors, but these typically lag commercial chips by several generations in performance and are not suited to state-of-the-art AI applications.[12] To address this, companies are testing the resilience of commercial-grade hardware. Google, for instance, exposed its Tensor Processing Units (TPUs) to proton beams to simulate years of radiation exposure in low-Earth orbit.[6][13] The results were promising: the hardware survived without permanent failure, although memory errors did occur.[6][13] This suggests that commercial chips may be viable, but that sophisticated error-correction systems, and potentially some level of shielding, will be necessary to keep AI models trained and operated in space reliable.[11][12]
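To make "error correction" concrete, the following is a minimal sketch of the textbook Hamming(7,4) code, which stores 4 data bits in 7 and can locate and repair any single flipped bit. It is included only to illustrate the principle; it is not the scheme used in Google's TPUs or in any specific satellite, and the function names are hypothetical.

```python
# Textbook Hamming(7,4) code: 3 parity bits protect 4 data bits, so any single
# bit flip (e.g. from a single-event upset) can be located and corrected.
# Bit layout by 1-based position: [p1, p2, d1, p3, d2, d3, d4].


def hamming74_encode(data: list[int]) -> list[int]:
    """Encode 4 data bits [d1, d2, d3, d4] into a 7-bit codeword."""
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4  # parity over positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # parity over positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4  # parity over positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]


def hamming74_decode(code: list[int]) -> tuple[list[int], int]:
    """Return (corrected data bits, 1-based position of the flipped bit, or 0 if none)."""
    c = list(code)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]  # re-check positions 1, 3, 5, 7
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]  # re-check positions 2, 3, 6, 7
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]  # re-check positions 4, 5, 6, 7
    syndrome = s1 + 2 * s2 + 4 * s3  # binary syndrome equals the error position
    if syndrome:
        c[syndrome - 1] ^= 1  # flip the corrupted bit back
    return [c[2], c[4], c[5], c[6]], syndrome


if __name__ == "__main__":
    word = [1, 0, 1, 1]
    stored = hamming74_encode(word)
    stored[4] ^= 1  # simulate a radiation-induced flip at bit position 5
    recovered, pos = hamming74_decode(stored)
    print(recovered, pos)  # prints [1, 0, 1, 1] 5
```

Plain Hamming(7,4) miscorrects when two bits flip in the same word, so ECC memory commonly adds an extra parity bit to detect double errors (SECDED), and systems may combine that with periodic memory scrubbing or redundant computation.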

The ultimate feasibility of building AI infrastructure in orbit is inextricably linked to the cost of getting there.[13] Historically, high launch costs have been the primary barrier to large-scale commercial activity in space.[13][14] However, reusable rocket technology, pioneered by companies like SpaceX, is fundamentally altering the economic equation.[7][15] Partially reusable rockets like the Falcon 9, whose first stage lands and flies again, have already drastically reduced the cost per kilogram to orbit, making it more conceivable to launch the dozens or even hundreds of satellites a space-based data center would require.[16][17][18] Projections suggest that fully reusable systems like Starship could drive launch costs down further, potentially making the cost of launching and operating a space-based data center comparable to the energy costs of a similar facility on Earth.[13][19] This expected reduction in cost is the linchpin of the entire endeavor, enabling companies like SpaceX, Blue Origin, Google, and various startups to plan seriously for deploying AI-capable satellites and orbital computing clusters.[20][8][13] Without cheap and frequent access to space, the vision of gigawatt-scale computing in orbit would remain firmly in the realm of science fiction.[21][22]
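The comparison between launch costs and terrestrial energy costs can be framed as simple arithmetic: spread the launch cost of the hardware needed for each kilowatt of compute over its service life, and compare that with the cost of buying a kilowatt of electricity on Earth for a year. The sketch below assumes that framing; it ignores hardware, operations, and ground-station costs, and every input (price per kilogram, kilograms per kilowatt, lifetime, electricity price) is a hypothetical placeholder, not a figure from the article or its sources.

```python
# Simplified comparison: amortized launch cost per kW of orbital compute versus
# the electricity cost of running 1 kW on Earth. All inputs are hypothetical
# placeholders; hardware, operations, and other costs are deliberately ignored.

HOURS_PER_YEAR = 8760  # 365 days x 24 hours


def launch_cost_per_kw_year(price_per_kg: float, kg_per_kw: float,
                            lifetime_years: float) -> float:
    """Launch cost (USD) per kW of compute, spread evenly over the hardware lifetime."""
    return price_per_kg * kg_per_kw / lifetime_years


def energy_cost_per_kw_year(price_per_kwh: float) -> float:
    """Electricity cost (USD) of drawing 1 kW continuously on Earth for one year."""
    return price_per_kwh * HOURS_PER_YEAR


def break_even_price_per_kg(price_per_kwh: float, kg_per_kw: float,
                            lifetime_years: float) -> float:
    """Launch price (USD/kg) at which amortized launch cost equals the energy cost."""
    return energy_cost_per_kw_year(price_per_kwh) * lifetime_years / kg_per_kw


if __name__ == "__main__":
    # Placeholder inputs chosen only to demonstrate the arithmetic.
    price = break_even_price_per_kg(price_per_kwh=0.05, kg_per_kw=10.0, lifetime_years=5.0)
    print(f"break-even launch price: {price:.0f} USD/kg")
```

The break-even price scales linearly with hardware lifetime and electricity price and inversely with mass per kilowatt, which is why lighter, longer-lived hardware matters as much as cheaper rockets in this kind of estimate.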

In conclusion, the push to move artificial intelligence into space represents a convergence of pressing terrestrial needs and burgeoning technological capabilities. The escalating energy consumption and cooling demands of AI data centers on Earth provide a powerful incentive to harness the near-constant solar energy and radiative cooling available in space.[3][23][5] Companies are now embarking on a multi-decade effort to overcome the significant engineering hurdles of dissipating heat in a vacuum and protecting sensitive electronics from cosmic radiation.[6] This ambitious future, however, hinges entirely on the continued success and cost reduction of reusable rocket technology.[24][5] As launch costs fall, orbiting data centers move from a distant dream to a tangible, long-term strategy, one that promises to reshape the infrastructure of AI and unlock computational possibilities at a scale currently unimaginable on Earth.[7][25]

Sources

[1]

[2]

[3]

[5]

[9]

[11]

[12]

[13]

[15]

[16]

[17]

[18]

[19]

[21]

[22]

[23]

[24]