Rackspace Shifts AI Focus: MLOps and Governance Unlock Enterprise Production

The shift from AI pilot to production requires mastering MLOps, data governance, and secure cloud integration.

February 4, 2026

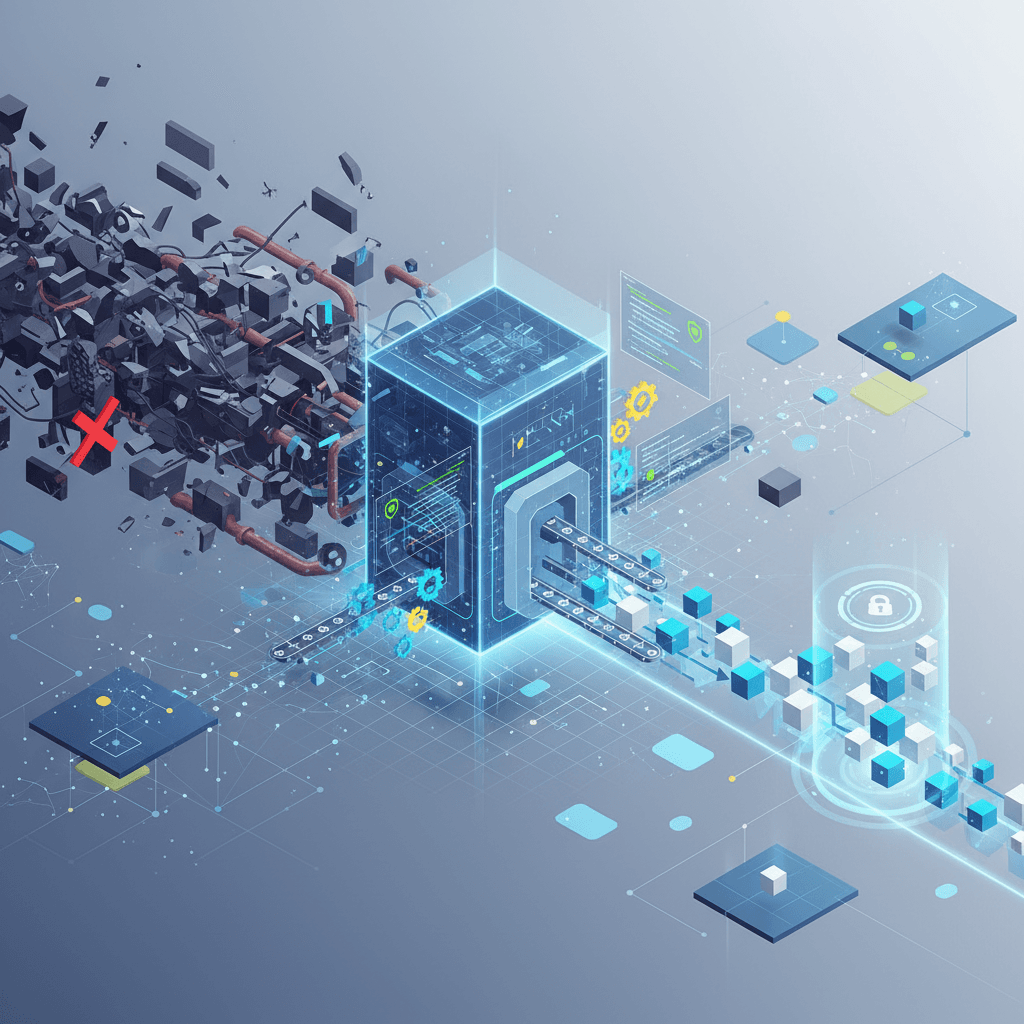

The journey to operational artificial intelligence is frequently hindered not by the sophistication of the algorithms but by a series of foundational enterprise challenges. Industry giant Rackspace has articulated these bottlenecks clearly, pointing to the messy data that plagues training pipelines, the unclear ownership models that stall decision-making, the governance gaps that introduce risk, and the runaway cost of running models once they move from the experimental sandbox into full production. By framing these common issues through the lens of service delivery, security operations, and cloud modernisation, the company signals where it is placing its strategic focus: on the practical mechanics of MLOps and responsible AI industrialisation. This shift from focusing solely on the ‘build’ phase of AI to the ‘operate’ phase reflects a critical inflection point for the broader AI industry, where value lies no longer in the proof of concept but in the sustained, governed performance of models within enterprise workflows.

The most pervasive barrier Rackspace identifies is the **data problem and governance deficit**, a universal issue that fundamentally limits AI’s utility. In many large organisations, data is fragmented, siloed across legacy systems, often duplicated, and inconsistent, which makes it unusable for training complex models at scale. Paradoxically, enterprises rich in historical data struggle the most, because their scale becomes a drag and their history becomes technical debt[1]. Rackspace’s operational pointers call for a cultural and architectural solution rather than a purely technical fix, advocating clear ownership and governance frameworks[2][3]. Without a governing body or clearly accountable parties, inconsistencies persist and the technical assessments that responsible AI requires cannot be scaled[2][4]. The financial stakes are significant: more than a quarter of data and analytics professionals report annual losses exceeding US$5 million due to poor data quality, evidence that ‘garbage in, garbage out’ is a measurable cost centre rather than just an adage[5]. Rackspace addresses this head-on with its Data Strategy & Governance services, which aim to protect and govern data assets for better business outcomes and treat data quality as an essential operational component of the AI lifecycle[3][6].
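As a rough illustration of what treating data quality as an operational component can mean in practice, the sketch below audits a batch of records for three of the symptoms described above: exact duplicates, conflicting versions of the same entity, and missing required fields. It is a minimal sketch in plain Python, not Rackspace tooling; the function name `audit_records`, the example field names and the assumption that records are flat dictionaries with hashable values are all hypothetical.

```python
from collections import defaultdict
from typing import Any


def audit_records(records: list[dict[str, Any]], key: str, required: list[str]) -> dict[str, int]:
    """Count basic data-quality issues in a batch of flat records (hypothetical helper).

    - exact_duplicates: records identical to an earlier record
    - conflicting_keys: entity keys that appear with more than one distinct record
    - missing_required: records with a required field absent, None or empty
    Assumes every value is hashable (e.g. str, int, float).
    """
    seen: set[tuple] = set()
    versions_by_key: dict[Any, list[tuple]] = defaultdict(list)
    exact_duplicates = 0
    missing_required = 0

    for rec in records:
        # Order-independent fingerprint of the whole record.
        fingerprint = tuple(sorted(rec.items()))
        if fingerprint in seen:
            exact_duplicates += 1
        else:
            seen.add(fingerprint)
            versions_by_key[rec.get(key)].append(fingerprint)

        if any(rec.get(field) in (None, "") for field in required):
            missing_required += 1

    # A key conflicts when it maps to more than one distinct record.
    conflicting_keys = sum(1 for versions in versions_by_key.values() if len(versions) > 1)

    return {
        "records": len(records),
        "exact_duplicates": exact_duplicates,
        "conflicting_keys": conflicting_keys,
        "missing_required": missing_required,
    }


if __name__ == "__main__":
    batch = [
        {"customer_id": 1, "email": "a@example.com", "country": "UK"},
        {"customer_id": 1, "email": "a@example.com", "country": "UK"},  # exact duplicate
        {"customer_id": 1, "email": "a@example.com", "country": "GB"},  # same key, conflicting value
        {"customer_id": 2, "email": "", "country": "US"},               # missing email
    ]
    print(audit_records(batch, key="customer_id", required=["email", "country"]))
    # → {'records': 4, 'exact_duplicates': 1, 'conflicting_keys': 1, 'missing_required': 1}
```

Exact duplicates are filtered out before conflict detection, so a record that merely repeats an earlier one is not also reported as a conflict.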

A second core pointer concerns the **critical transition from pilot project to production reality**, an area where many enterprise AI efforts quietly fail. The cost of running models in production compounds the problem, but it is often eclipsed by the operational complexity of MLOps (Machine Learning Operations), which covers model deployment, scaling, monitoring, and versioning[7]. Rackspace’s response is embodied in services such as its AI Launch Pad and MLOps Foundations, which are designed to eliminate the ‘paralysis by complexity’ that often stalls large enterprises[8]. These offerings provide a structured, managed path from initial experimentation to full-scale production, directly tackling the challenge of securing positive returns on AI investments[8]. The MLOps framework the company promotes seeks to automate critical steps such as data preprocessing, model deployment, and continuous quality assurance, so that AI systems are not only launched but also consistently optimised and monitored for accuracy and drift[7]. This industrialisation guidance matters because, according to industry data, nearly half of all companies fail to monitor their production AI systems for drift or misuse, a clear sign of a significant governance and operational gap between model development and ongoing maintenance[9].
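Drift monitoring, the step the cited industry data suggests many companies skip, can start very simply. The sketch below computes the population stability index (PSI), a common drift statistic, comparing a feature’s distribution at training time with what the model sees in production. PSI is a generic technique, not something the article attributes to Rackspace’s MLOps Foundations; the equal-width binning over the training range, the function name and the 0.25 alert threshold (a widely used rule of thumb rather than a standard) are assumptions for illustration.

```python
import math
from typing import Sequence


def population_stability_index(
    expected: Sequence[float],
    actual: Sequence[float],
    bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """PSI between a reference (training) sample and a production sample of one feature.

    Bins are equal-width over the range of the reference sample; production
    values outside that range are clamped into the edge bins.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid division by zero for a constant feature

    def proportions(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor empty bins at eps so the logarithm below stays finite.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    # PSI = sum over bins of (actual - expected) * ln(actual / expected)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


DRIFT_THRESHOLD = 0.25  # hypothetical alert level; tune per feature and use case


if __name__ == "__main__":
    training = [i / 100 for i in range(1000)]  # reference sample, 0.00 to 9.99
    stable = list(training)                    # production data unchanged
    shifted = [x + 5 for x in training]        # production data shifted upwards

    for name, sample in [("stable", stable), ("shifted", shifted)]:
        psi = population_stability_index(training, sample)
        print(f"{name}: PSI={psi:.3f} drift={psi > DRIFT_THRESHOLD}")
```

Because empty bins are floored at `eps`, a feature that moves entirely outside its training range yields a large but finite PSI rather than an error, which keeps a scheduled monitoring job from crashing on exactly the data it should flag.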

The company’s strategic framing of these issues within **security operations and cloud modernisation** highlights the third key operational pointer: AI must be secure, compliant, and integrated into the broader IT ecosystem. Security and compliance are paramount, especially when handling personally identifiable information (PII). Rackspace’s emphasis on air-gapped systems, which keep data within organisational boundaries, and on masking sensitive data points to a sophisticated understanding of the regulatory and compliance risks in the AI landscape[10]. This security-first approach is directly tied to the cloud modernisation imperative: to be cost-efficient and performant, AI models must run on elastic, resilient, and optimised cloud infrastructure[11]. Rackspace integrates AI implementation into its multicloud and hybrid cloud services, positioning AI operationalisation not as a standalone technology project but as a natural evolution of cloud maturity[12][11]. The integration of solutions such as the Foundry of AI by Rackspace (FAIR) with major cloud platforms including AWS, Google Cloud, and Microsoft Azure underscores the operational reality that successful AI is a cloud-native capability, requiring continuous optimisation for resiliency, security, and, above all, cost efficiency[3][11].
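Masking is one of the more concrete controls mentioned here. The sketch below shows the basic idea in plain Python: redact recognisable identifiers before text is logged, sent to a model or moved outside a trusted boundary. It is deliberately naive and does not describe how Rackspace implements masking; the two regular expressions (an email pattern and a loose phone-number pattern) and the placeholder tokens are illustrative assumptions, and production PII detection generally needs far broader coverage than pattern matching provides.

```python
import re

# Illustrative patterns only (assumptions); real PII detection needs much broader coverage.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# Optional "+", then at least 10 characters of digits, spaces or hyphens,
# starting and ending with a digit (catches many phone formats, plus some false positives).
PHONE = re.compile(r"\+?\d[\d\s-]{8,}\d")


def mask_pii(text: str) -> str:
    """Replace email addresses and phone-like numbers with fixed placeholder tokens."""
    text = EMAIL.sub("[EMAIL]", text)  # emails first, so digits inside them are not read as phones
    return PHONE.sub("[PHONE]", text)


if __name__ == "__main__":
    ticket = "Contact jane.doe@example.com or +44 20 7946 0958 about ticket 4521."
    print(mask_pii(ticket))
    # → Contact [EMAIL] or [PHONE] about ticket 4521.
```

Fixed tokens make masked text safe to inspect but remove the ability to join records on the masked value; where that matters, keyed pseudonymisation (for example, an HMAC of the identifier) is a common alternative.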

In essence, the operational pointers distilled from Rackspace’s guidance paint a picture of AI maturity that depends less on raw machine learning expertise than on enterprise-grade discipline. Turning AI from a discrete science experiment into a scalable, governed, and secure production asset requires resolving the perennial issues of data quality, ownership, compliance, and cost control. By embedding its AI strategy within the established practices of service delivery, security, and cloud modernisation, Rackspace is attempting to bridge the chasm between the lab and the line of business, offering a blueprint for enterprises that want to industrialise their AI investments and finally realise the promised competitive advantage of their machine learning models. This focus on the ‘how’ of operationalisation (the MLOps and governance layers) marks a significant step forward in professionalising the enterprise AI landscape.

Sources

[1]

[2]

[4]

[8]

[9]

[10]

[11]

[12]