OpenAI deploys custom ChatGPT to monitor its workforce and identify sources of confidential leaks

OpenAI leverages a custom version of ChatGPT to monitor staff communications, turning its flagship technology into a surveillance tool.

February 12, 2026

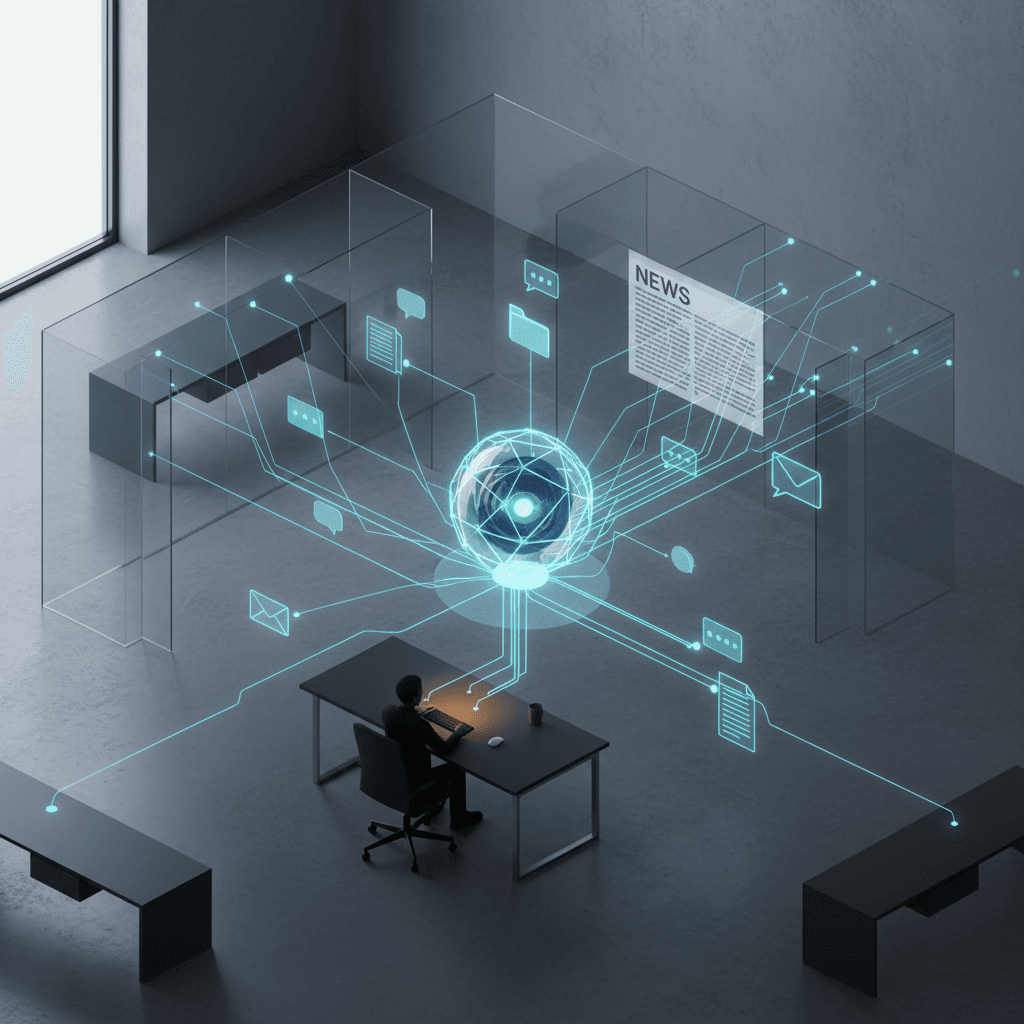

The rapid transformation of OpenAI from a collaborative research laboratory into a highly guarded corporate enterprise has reached a new milestone with reports that the company is deploying a specialized version of its own artificial intelligence to monitor its workforce.[1][2] According to internal sources and recent investigative reports, OpenAI’s security teams are using a custom iteration of ChatGPT specifically designed to identify employees who disclose confidential information to the media.[3] The system can reportedly ingest external news articles and cross-reference the details in those stories against a vast repository of internal communications, including Slack messages, employee emails, and restricted documents.[2][4][3] By analyzing which staff members had access to specific files or participated in particular discussions at the time a leak occurred, the system gives security personnel a narrowed list of suspects, effectively turning the company’s flagship technology into a powerful instrument of internal surveillance.

This development underscores the intensifying atmosphere of secrecy within the artificial intelligence industry, where a single unauthorized disclosure can shift billions of dollars in market valuation or compromise years of proprietary research. The specialized tool does not merely search for keywords; it reportedly uses the semantic and analytical strengths of large language models to map the flow of information across the organization.[1] When a journalist publishes a report containing sensitive details about a forthcoming model or an internal policy shift, OpenAI’s security investigators feed that text into the system. The AI then suggests which internal channels or documents served as the likely source of the leak and identifies the individuals who interacted with that data.[4][2][3] This level of forensic linguistics and behavioral mapping represents a significant escalation in corporate security tactics, moving beyond traditional metadata logs to a more nuanced, AI-driven analysis of internal institutional knowledge.[1]

The deployment of such a system comes at a time when OpenAI has been plagued by a series of high-profile unauthorized disclosures regarding its strategic roadmap and internal divisions. In recent years, leaks have revealed the existence of advanced models like the o1 series and the Sora video generator well before their official announcements, as well as internal memos describing a mission to create a full-spectrum super assistant. Beyond product secrets, the company has also faced a wave of public scrutiny following the departure of high-level safety researchers and the temporary ousting of CEO Sam Altman in late 2023. These events have contributed to a culture of high alert, with leadership seemingly viewing information control as a prerequisite for maintaining its competitive edge against rivals like Google and Anthropic. The use of a specialized ChatGPT to hunt leakers suggests that the company now views its own employees’ communications as a data set to be mined for threats.[1][5]

The ethical and cultural implications of this practice are profound, particularly for a company that was founded on the principles of transparency and the safe, open development of artificial intelligence for the benefit of humanity.[1] Critics and industry analysts point out a jarring irony: a firm that advocates for the responsible and transparent deployment of AI is using that same technology to create what some describe as a digital panopticon. Within the AI safety community, there are growing concerns that such aggressive surveillance could stifle the very "rigorous debate" OpenAI claims to encourage. If employees believe that every Slack message or document they access could be used as evidence in an AI-driven investigation, they may become less likely to raise internal alarms about safety risks or ethical lapses. This creates a potential paradox where a system intended to protect the company’s secrets could inadvertently undermine its long-term safety mission by silencing whistleblowers.

OpenAI has recently made efforts to clarify its stance on internal disclosures, publishing a "Raising Concerns Policy" intended to protect employees who report safety issues or legal violations.[6] However, the company continues to draw a firm line between protected whistleblowing and the disclosure of trade secrets, which remains strictly prohibited under its confidentiality agreements. The tension between these two categories is at the heart of the current controversy.[1] In a recent incident, a senior safety executive was terminated for what the company described as discriminatory conduct, though the executive alleged the dismissal was retaliation for opposing certain product features.[7] When companies use advanced AI to police these boundaries, the line between a legitimate whistleblower and a malicious leaker is increasingly drawn by algorithms rather than human discretion, raising questions about fairness and due process in the modern workplace.

From a legal perspective, the use of AI for employee monitoring falls within a complex and evolving regulatory landscape.[1] While labor laws in jurisdictions like California, where OpenAI is headquartered, generally allow employers to monitor communications on company-owned devices, the use of generative AI to draw inferences about employee behavior represents a qualitative shift in surveillance capabilities.[1] Traditional monitoring typically involves searching for specific violations or reviewing logs after a breach is suspected. The AI-driven approach goes further, drawing inferences across large volumes of communications and identifying patterns that a human investigator might miss. As this technology becomes more accessible, other major tech firms may follow OpenAI’s lead, potentially normalizing a standard of employment where every digital interaction is subject to automated scrutiny and linguistic profiling.

The broader AI industry is watching these developments closely, as they reflect the "arms race" mentality that now dominates the sector. As the stakes for achieving artificial general intelligence continue to rise, the pressure to maintain absolute secrecy has eclipsed the collaborative spirit of the early AI research era. The move to weaponize ChatGPT for internal security highlights the transition of OpenAI into a mature, multi-billion-dollar entity that prioritizes intellectual property protection as a core pillar of its survival. It also serves as a case study in the unintended consequences of powerful AI: a tool designed to assist users in writing, coding, and learning is equally proficient at tracking, analyzing, and exposing the very people who built it.

Ultimately, the reported use of a "special version" of ChatGPT to hunt leakers signals a new chapter in the relationship between technology companies and their workforces. It suggests that the power of generative AI is being applied not just to solve global challenges, but to enforce internal discipline and corporate loyalty. As OpenAI continues to push the boundaries of what its models can achieve, the company also appears to be testing the limits of workplace privacy and employee trust.[7] Whether this strategy succeeds in plugging leaks or merely deepens the divide between leadership and staff remains to be seen, but, if the reports are accurate, it sets a new precedent for how the world's most advanced AI companies manage their most sensitive resource: information. For the employees at the forefront of the AI revolution, the tool they helped create may now be the very thing watching their every move.