Ilya Sutskever Pivots: Incremental AI Deployment Now Safest Path to Superintelligence

The AI pioneer now champions incremental deployment of superintelligence, shifting from isolated development to societal adaptation and fundamental research.

November 26, 2025

Ilya Sutskever, a central architect of the modern artificial intelligence revolution and former chief scientist of OpenAI, has signaled a significant evolution in his thinking on the safest path to creating superintelligence. In a notable departure from a more insulated development approach, Sutskever now advocates for the incremental deployment of advanced AI systems. This change in perspective from one of the industry's most respected and safety-conscious minds suggests a new phase in the high-stakes quest to build machines that surpass human intellect, intertwining the pursuit of raw capability with the pragmatic need for societal adaptation and feedback. The shift comes after a tumultuous period that saw Sutskever depart from OpenAI, the company he co-founded, and establish a new venture with an explicit focus on safety above all else.

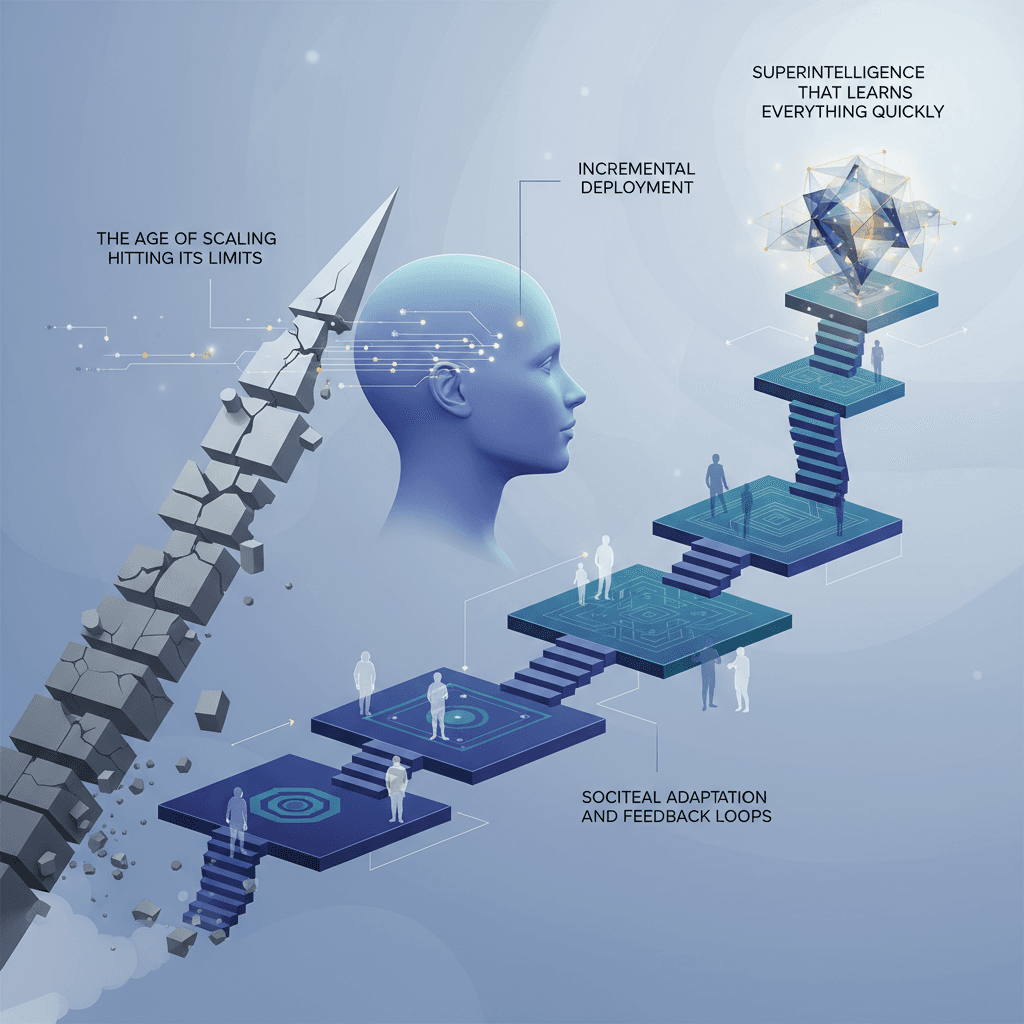

This newly articulated philosophy centers on the idea that the era of achieving transformative AI progress simply by "scaling" up models with more data and computing power is ending.[1][2] Sutskever has argued that the industry is transitioning from an "age of scaling" to an "age of research," in which fundamental breakthroughs, not just brute force, will be required to overcome the limitations of current models.[1][2] In his view, a key part of this new era is the need to deploy AI capabilities incrementally. He suggests that introducing powerful AI to the world in stages is crucial, not only as a potential safety measure but also as a way to make the abstract concept of superintelligence tangible for the public and for policymakers.[1] This iterative approach aims to change how people understand and prepare for advanced AI, in stark contrast to the initial mission of his new company, Safe Superintelligence Inc., which was founded with the promise that its "first product will be the safe superintelligence, and it will not do anything else up until then".[3]

Sutskever's evolving viewpoint is inextricably linked to his dramatic exit from OpenAI and the founding of Safe Superintelligence Inc. (SSI) in June 2024.[3][4] His departure followed a period of intense internal conflict at OpenAI in late 2023, during which he played a key role as a board member in the temporary ouster of CEO Sam Altman.[3][5] The disagreement reportedly stemmed from deep-seated tensions between the company's accelerating commercial ambitions and Sutskever's growing concerns about the safety and alignment of increasingly powerful AI systems.[5][6] After Altman's swift reinstatement, Sutskever stepped down from the board and eventually left the company to start SSI alongside Daniel Gross and Daniel Levy.[3] SSI was launched with a single, clear mission: to pursue the development of superintelligence in a research-focused environment, insulated from the product deadlines and commercial pressures that characterize labs like OpenAI.[4][7][8] This safety-first mandate attracted extraordinary investor confidence: SSI raised billions of dollars and reached a multibillion-dollar valuation without a product, a testament to Sutskever's reputation.[1][3][9]

The shift toward incremental deployment is underpinned by a more nuanced definition of what superintelligence might actually be. Sutskever has moved away from the idea of a system that is built to "know everything" before its release. Instead, he conceptualizes it as a system that can "learn everything quickly".[1] This reframing has profound implications for development strategy. Rather than constructing an omniscient AI in a lab, the goal becomes creating a powerful learner and then educating it through ongoing engagement with the world.[1] This aligns with his warnings that current AI architectures are hitting a wall, particularly in their ability to generalize knowledge as flexibly as humans do.[1] He contends that simply adding more data and compute to existing methods is yielding diminishing returns, and that the next leaps will come from new research into achieving human-like learning.[1] This focus on research over scaling is the core bet behind SSI, which, he argues, has research compute comparable to that of its rivals once their substantial product and inference costs are subtracted.[1]

The evolution of Ilya Sutskever's strategy represents a critical moment for the artificial intelligence industry. As a co-inventor of AlexNet, which helped ignite the deep learning boom, and a guiding force behind the GPT series, his views carry immense weight.[3] His declaration that the scaling paradigm is maturing and must now be supplemented by fundamental research challenges the prevailing industry consensus. More importantly, his pivot to advocating for incremental deployment, a modification of his own new company's initial rigid stance, reflects a pragmatic acknowledgment of the immense challenge of building superintelligence responsibly. It suggests a belief that the alignment of such systems cannot be solved in a vacuum but requires a careful, iterative process of real-world interaction and societal co-evolution. This change in thinking from one of AI's most influential figures may signal a broader shift in how leading labs approach the development of transformative technology, seeking a difficult balance between accelerating progress and ensuring that humanity can safely navigate its arrival.