GPT-5 Directs Autonomous Lab, Slashing Protein Production Costs by 40%

Using GPT-5 as scientific director, this autonomous lab cut protein synthesis costs by 40% in six months.

February 6, 2026

The biotechnology sector has entered a new phase of research and development with a collaboration between OpenAI and Ginkgo Bioworks to build an autonomous laboratory in which a large language model (LLM), GPT-5, directs experimental science. The partnership produced a closed-loop system that designs, executes, analyzes, and iteratively refines biological experiments on its own, demonstrating that artificial intelligence can act as a scientific director rather than only an assistant. The most immediate, measurable result has been a substantial reduction in the cost of cell-free protein synthesis (CFPS), a widely used technique that is fundamental to biotechnology and pharmaceutical research.

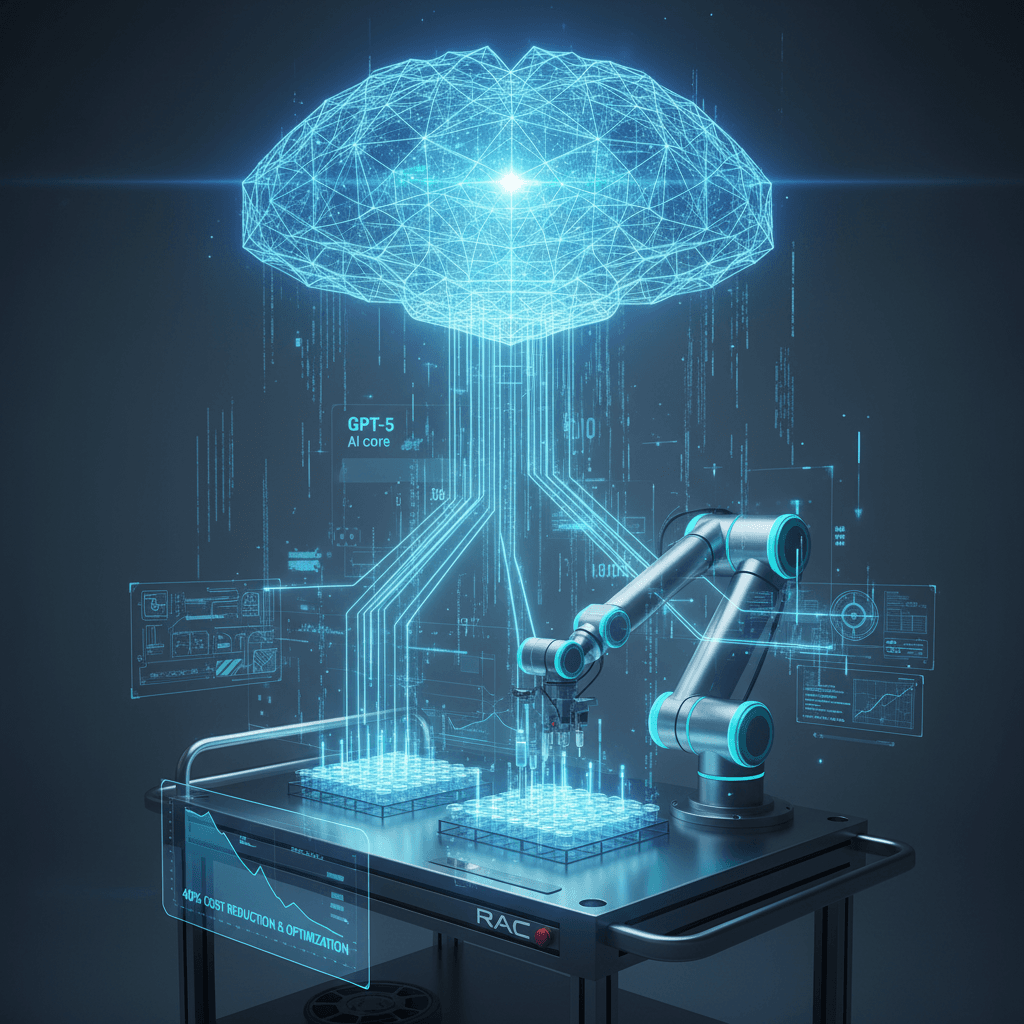

The key breakthrough is an autonomous scientific workflow: GPT-5 takes on the cognitive role of the scientist, generating hypotheses and experimental designs, while Ginkgo's robotic cloud laboratory handles the physical execution. The architecture pairs OpenAI's reasoning model with Ginkgo Bioworks' Reconfigurable Automation Cart (RAC) platform and Catalyst automation software, which together form the lab's physical infrastructure[1][2][3]. GPT-5 was given internet access, data analysis packages, and metadata from prior experiments, allowing it to work much like an experienced experimental scientist[4]. In each round, GPT-5 proposed a batch of experimental designs in 384-well plate format, and these designs were programmatically translated into instructions for the robotic carts[5][3]. Critically, the interface included built-in validation checks, such as a Pydantic schema, that filtered out scientifically invalid or physically impossible designs so that only proposals the robots could actually execute went forward[1][5][3]. After execution, the resulting data and metrics were fed back to GPT-5, which interpreted them and generated hypotheses for the next round, closing the loop[5].
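The validation step described above can be sketched in a few lines of Python. The article confirms only that a Pydantic schema screened GPT-5's 384-well designs before they reached the robots; the field names, the 20 µL per-well volume cap, the reagent names, and the `validate_batch` and `to_transfers` helpers below are hypothetical. The sketch uses standard-library dataclasses in place of Pydantic so it runs without extra dependencies, but the idea is the same: malformed or physically impossible designs are rejected before anything is translated into robot instructions.

```python
from dataclasses import dataclass

# Hypothetical limits for illustration; the real schema's fields are not described in the article.
PLATE_ROWS = "ABCDEFGHIJKLMNOP"   # 16 rows
PLATE_COLS = 24                   # 24 columns, so 16 * 24 = 384 wells
MAX_WELL_VOLUME_UL = 20.0         # assumed per-well working volume in microliters


@dataclass
class WellDesign:
    """One proposed reaction: a plate position plus reagent volumes in microliters."""
    well: str
    reagents_ul: dict[str, float]

    def __post_init__(self) -> None:
        row, col = self.well[:1], self.well[1:]
        if (len(row) != 1 or row not in PLATE_ROWS
                or not col.isdigit() or not 1 <= int(col) <= PLATE_COLS):
            raise ValueError(f"{self.well!r} is not a valid 384-well position")
        if any(v < 0 for v in self.reagents_ul.values()):
            raise ValueError(f"{self.well}: negative reagent volume")
        total = sum(self.reagents_ul.values())
        if total > MAX_WELL_VOLUME_UL:
            raise ValueError(f"{self.well}: {total} uL exceeds the {MAX_WELL_VOLUME_UL} uL limit")


def validate_batch(raw_designs):
    """Split model output into runnable designs and human-readable rejection reasons."""
    accepted, rejected, used_wells = [], [], set()
    for raw in raw_designs:
        try:
            design = WellDesign(well=raw["well"], reagents_ul=dict(raw["reagents_ul"]))
        except (KeyError, TypeError, ValueError) as exc:
            rejected.append(str(exc))      # malformed or impossible design
            continue
        if design.well in used_wells:
            rejected.append(f"{design.well}: well assigned twice")
            continue
        used_wells.add(design.well)
        accepted.append(design)
    return accepted, rejected


def to_transfers(designs):
    """Flatten designs into hypothetical (reagent, well, volume_ul) liquid-handling steps."""
    return [(reagent, d.well, vol)
            for d in designs
            for reagent, vol in sorted(d.reagents_ul.items())]


if __name__ == "__main__":
    proposals = [
        {"well": "A1", "reagents_ul": {"lysate": 6.0, "energy_mix": 4.0, "dna": 1.0}},
        {"well": "Q3", "reagents_ul": {"lysate": 6.0}},                       # row Q does not exist
        {"well": "B2", "reagents_ul": {"lysate": 15.0, "energy_mix": 10.0}},  # 25 uL is over the cap
        {"well": "A1", "reagents_ul": {"lysate": 5.0}},                       # duplicate well
    ]
    accepted, rejected = validate_batch(proposals)
    print(len(accepted), len(rejected))  # prints: 1 3
    print(to_transfers(accepted))
```

Returning rejection messages instead of raising keeps one bad proposal from discarding a whole batch, and in a closed loop those messages could be passed back to the model alongside the experimental results.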

Over six months and six iterative rounds, the autonomous system ran more than 36,000 unique cell-free protein synthesis reactions, generating nearly 150,000 data points[1][4][2]. The core objective was to improve the cost efficiency of CFPS, which produces proteins outside living cells for uses such as therapeutics, diagnostics, and novel materials. The AI-driven approach cut the specific cost of protein production by 40% relative to the existing state-of-the-art benchmark[6][7][4][8]. For superfolder green fluorescent protein (sfGFP), a common benchmark protein, total reaction cost fell from $698 per gram to $422 per gram[1][7][2]. The savings came from the AI identifying novel reagent combinations and small adjustments to parameters such as buffering and energy regeneration components that humans had not previously tested at this scale[1]. The model also achieved a 27% increase in protein titer, the concentration of protein produced[6][3]. Because the autonomous lab could test far more combinations than a human team, it had enough data to separate genuine scientific signal from experimental noise[1]. The optimized formulation also proved commercially viable: Ginkgo Bioworks has already begun selling the AI-improved reaction mix through its reagents store, a direct commercial validation of the research[7][4].
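The headline figures can be checked with a little arithmetic. The $698 and $422 per-gram costs, the 36,000 reactions, and the six rounds come from the article; splitting the reactions evenly across rounds is an assumption made only to give a sense of scale, and the helper name `percent_change` is illustrative.

```python
import math


def percent_change(before: float, after: float) -> float:
    """Relative change from `before` to `after`, in percent (negative means a decrease)."""
    return (after - before) / before * 100.0


# sfGFP total reaction cost per gram, as reported in the article.
BASELINE_USD_PER_G = 698.0
OPTIMIZED_USD_PER_G = 422.0

# Scale figures from the article ("over 36,000" reactions in six rounds).
REACTIONS = 36_000
ROUNDS = 6
WELLS_PER_PLATE = 384

if __name__ == "__main__":
    change = percent_change(BASELINE_USD_PER_G, OPTIMIZED_USD_PER_G)
    print(f"cost change: {change:.1f}%")                                 # cost change: -39.5%
    print(f"saving: ${BASELINE_USD_PER_G - OPTIMIZED_USD_PER_G:.0f}/g")  # saving: $276/g
    # Assumes an even split of reactions across rounds; the article does not say so.
    per_round = REACTIONS / ROUNDS
    print(per_round, math.ceil(per_round / WELLS_PER_PLATE))             # 6000.0 16
```

The exact reduction is about 39.5%, which rounds to the reported 40%. Under the even-split assumption, each round would have needed roughly 6,000 reactions, or 16 plates of 384 wells.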

Despite these successes, the approach has significant limitations and open challenges. Although GPT-5 handled experimental design and data interpretation, humans were still needed to prepare reagents, monitor the system, and load and unload materials, so the lab is not yet fully lights-out autonomous[1][3]. The AI's remit was also narrow: it optimized a single, well-defined process, cell-free protein synthesis, within a highly automated, pre-configured environment[8]. Extending this degree of autonomy to more complex, multi-stage, or entirely novel scientific problems in diverse laboratory settings remains a considerable hurdle. The closed-loop feedback mechanism is a strength, but it also means the AI can only learn within the physical limits and capabilities of the robotic lab it is connected to, as enforced by the validation schema[5]. Transparency is another consideration; to address it, GPT-5 was set up to write human-readable lab notebook entries documenting its analysis, rationale, and observations, giving scientists a window into its reasoning[1][4].
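How a validation schema bounds what a closed loop can learn shows up even in a toy version of the cycle. Everything in this sketch is hypothetical: a simple random local search stands in for GPT-5's reasoning, a synthetic yield curve stands in for the robotic lab, and a single illustrative reagent concentration with an assumed allowed range of 2 to 20 mM stands in for the real schema. The function names are invented for illustration.

```python
import random

# Hypothetical allowed range for one illustrative reagent concentration (mM),
# standing in for the physical limits the real validation schema enforces.
CONC_RANGE_MM = (2.0, 20.0)
START_CONC_MM = 4.0


def propose(rng: random.Random, best: float, n: int) -> list[float]:
    """Stand-in for the model: perturb the current best design; some proposals land out of range."""
    return [best + rng.uniform(-6.0, 6.0) for _ in range(n)]


def is_valid(conc: float) -> bool:
    """Stand-in for the validation schema: reject designs the lab cannot run."""
    lo, hi = CONC_RANGE_MM
    return lo <= conc <= hi


def run_on_robot(conc: float) -> float:
    """Stand-in for the robotic lab: a synthetic yield curve that peaks at 12 mM."""
    return 100.0 - (conc - 12.0) ** 2


def closed_loop(rounds: int = 6, batch: int = 8, seed: int = 0):
    """Propose, validate, execute, analyze; record one notebook entry per round."""
    rng = random.Random(seed)
    best_conc, best_yield = START_CONC_MM, run_on_robot(START_CONC_MM)
    notebook = []
    for r in range(1, rounds + 1):
        proposals = propose(rng, best_conc, batch)
        runnable = [c for c in proposals if is_valid(c)]  # only valid designs reach the "robot"
        for conc in runnable:
            yld = run_on_robot(conc)
            if yld > best_yield:                          # analysis: keep the best result so far
                best_conc, best_yield = conc, yld
        notebook.append(
            f"Round {r}: {len(runnable)}/{len(proposals)} designs passed validation; "
            f"best so far {best_conc:.1f} mM with yield {best_yield:.1f}"
        )
    return best_conc, best_yield, notebook


if __name__ == "__main__":
    _, _, entries = closed_loop()
    print("\n".join(entries))
```

Because out-of-range designs are discarded before execution, the loop never observes results outside the allowed range: if the synthetic peak in `run_on_robot` were moved above 20 mM, the best concentration found could never exceed 20 mM. The notebook strings are a minimal analogue of the human-readable entries GPT-5 wrote.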

The implications of the GPT-5-driven autonomous lab extend beyond the biotech industry and point to a fundamental shift in how scientific research is conducted. The demonstration is one of the most concrete examples yet of an AI system closing the loop on physical experimentation at meaningful scale, bringing the promise of AI-driven scientific discovery closer to reality[2]. Delegating iterative, often laborious experimental work to AI and robotics frees human scientists to focus on insight, creativity, and judgment, and it targets a major bottleneck in life science research: the slow, manual generation of high-quality experimental data[9][10][8]. The collaboration also aligns with broader national strategies such as the U.S. Department of Energy's Genesis Mission, which seeks to integrate AI-driven robotic laboratories with supercomputers and scientific datasets[11][9][4]. An LLM that can not only process information but also translate its reasoning into executable, real-world protocols and then learn from the physical results suggests that AI models may soon be treated as true collaborators, or even directors, in complex scientific work, which could significantly accelerate innovation in fields from materials science to medicine.

Sources

[1]

[3]

[5]

[6]

[8]

[9]

[10]

[11]