Garbage In, Garbage Out: Bad Data Dooms 85% of AI Projects

The real reason AI projects fail: poor data quality, not a shortage of advanced models, is costing companies billions.

September 23, 2025

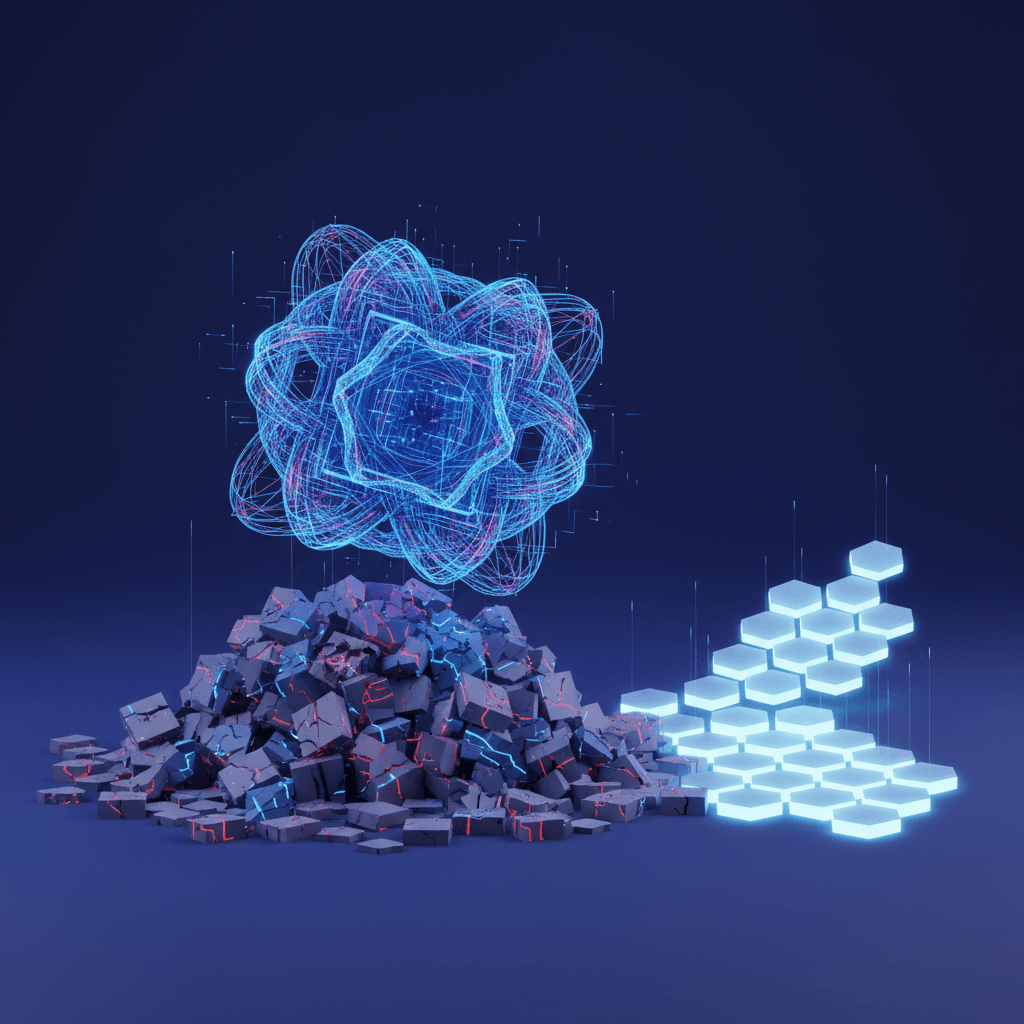

As companies worldwide pour billions into artificial intelligence, a stark reality is emerging from the trenches of implementation: the vast majority of these ambitious projects fail to move beyond the experimental stage. This widespread stalling is not due to failing algorithms or a lack of computing power, but to a far more fundamental issue: the quality of the data feeding these complex systems. The old adage "garbage in, garbage out" has found new and costly relevance in the age of AI, where flawed or incomplete data is the primary culprit behind an estimated 70 to 85 percent of initiatives failing to reach production.[1][2] This dependency is forcing a strategic reset in boardrooms and IT departments alike, shifting attention from the glamour of AI models to the foundational necessity of a robust data strategy. For many organizations, project success now hinges on solving the data quality crisis first.

Martin Frederik, a regional leader at data cloud giant Snowflake, emphasizes that the frequent failure to translate promising proofs-of-concept into revenue-generating tools stems from a foundational misunderstanding. "There's no AI strategy without a data strategy," Frederik states simply.[3] He argues that many leaders mistakenly treat the technology as the end goal itself, rather than a vehicle for achieving specific business outcomes. When projects get stuck, the reasons are often disappointingly familiar: a misalignment with business needs, siloed teams unable to collaborate effectively, or, most commonly, chaotic and untrustworthy data.[3] AI applications, agents, and models are only as effective as the data they are built on. Without a unified, well-governed data infrastructure, even the most sophisticated models are destined to fall short.[3] The high failure rate, which some studies place as high as 87%, is not necessarily a definitive failure of AI itself, but rather a painful "part of the maturation process" as organizations learn this crucial lesson.[4][3]

Data quality spans several key dimensions, each of which can derail an AI initiative if neglected: accuracy, meaning the data reflects real-world conditions; completeness, meaning critical variables are not missing; consistency, meaning formats and values are uniform across systems; and timeliness, meaning the data is current enough to reflect the present environment.[5][6] When an AI model is trained on inaccurate, incomplete, or biased data, it will produce erroneous, unusable, or even dangerous results.[7][8] The consequences are tangible: flawed business decisions, operational inefficiencies, and reputational damage.[4] The financial toll is significant as well; according to a Gartner report, poor data quality costs organizations an average of $12.9 million per year.[4] For instance, an AI-driven fraud detection model trained on error-ridden transaction data will fail to perform accurately, and a predictive maintenance model fed incomplete equipment readings will be unreliable.[9]
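To make the four dimensions concrete, here is a minimal Python sketch that checks a batch of transaction records, loosely echoing the fraud-detection example above. Everything in it is a hypothetical assumption for illustration: the `Transaction` record and its field names, the allowed currency list, the amount ceiling, and the 30-day freshness window do not come from Gartner, Snowflake, or any cited source. Note also that a range check is only a proxy for accuracy; it can flag implausible values but cannot prove a value is true.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


# Hypothetical transaction record; field names are illustrative only.
@dataclass
class Transaction:
    tx_id: str
    amount: Optional[float]
    currency: Optional[str]
    timestamp: Optional[datetime]


ALLOWED_CURRENCIES = {"USD", "EUR", "GBP"}  # assumed reference list
MAX_AMOUNT = 1_000_000.0                    # assumed plausibility bound
MAX_AGE = timedelta(days=30)                # assumed freshness window


def quality_issues(tx: Transaction, now: datetime) -> list[str]:
    """Return the data-quality dimensions this record fails."""
    issues = []
    # Completeness: every critical field must be present.
    if tx.amount is None or tx.currency is None or tx.timestamp is None:
        issues.append("completeness")
        return issues  # the remaining checks need all fields
    # Accuracy (proxy): flag amounts that cannot plausibly be real payments.
    if not (0 < tx.amount <= MAX_AMOUNT):
        issues.append("accuracy")
    # Consistency: currency codes must use one uniform format and vocabulary.
    if tx.currency not in ALLOWED_CURRENCIES:
        issues.append("consistency")
    # Timeliness: stale records may not reflect current behavior.
    if now - tx.timestamp > MAX_AGE:
        issues.append("timeliness")
    return issues


def quality_report(records: list[Transaction], now: datetime) -> dict[str, int]:
    """Count how many records fail each dimension across a batch."""
    counts = {"completeness": 0, "accuracy": 0, "consistency": 0, "timeliness": 0}
    for tx in records:
        for dim in quality_issues(tx, now):
            counts[dim] += 1
    return counts


# Usage: one clean record, one incomplete record, one with three problems.
now = datetime(2025, 9, 23, tzinfo=timezone.utc)
batch = [
    Transaction("t1", 42.0, "USD", now - timedelta(days=1)),   # passes all checks
    Transaction("t2", None, "EUR", now),                       # missing amount
    Transaction("t3", -5.0, "usd", now - timedelta(days=90)),  # bad amount, lowercase code, stale
]
print(quality_report(batch, now))
# {'completeness': 1, 'accuracy': 1, 'consistency': 1, 'timeliness': 1}
```

In a real pipeline, checks like these would typically run as a validation gate before training, so that failing records are quarantined or repaired rather than silently passed to the model.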

To turn AI experiments into enterprise-grade solutions, the focus must shift to building a solid data foundation. This involves implementing robust data governance frameworks that manage the entire data lifecycle, from verification and cleansing to regular audits.[7] Platforms such as Snowflake address this need by providing integrated environments where data can be unified and managed effectively, allowing AI capabilities to be built directly alongside the trusted data they rely on.[10] The goal is to make AI easy, efficient, and trusted by ensuring that the data fueling it is reliable.[10][11] That requires treating data quality as a prerequisite rather than an afterthought, which also helps AI systems distinguish when to respond confidently and when to seek clarification, a vital capability for preventing the spread of misinformation.[10] Ultimately, organizations that prioritize data integrity and cultivate a culture of data literacy are the ones positioned to gain a significant competitive advantage, harnessing AI to drive growth and innovation in an increasingly data-driven world.[9][12]