Engineers Decouple AI Logic, Delivering Reliable and Scalable Enterprise Agents

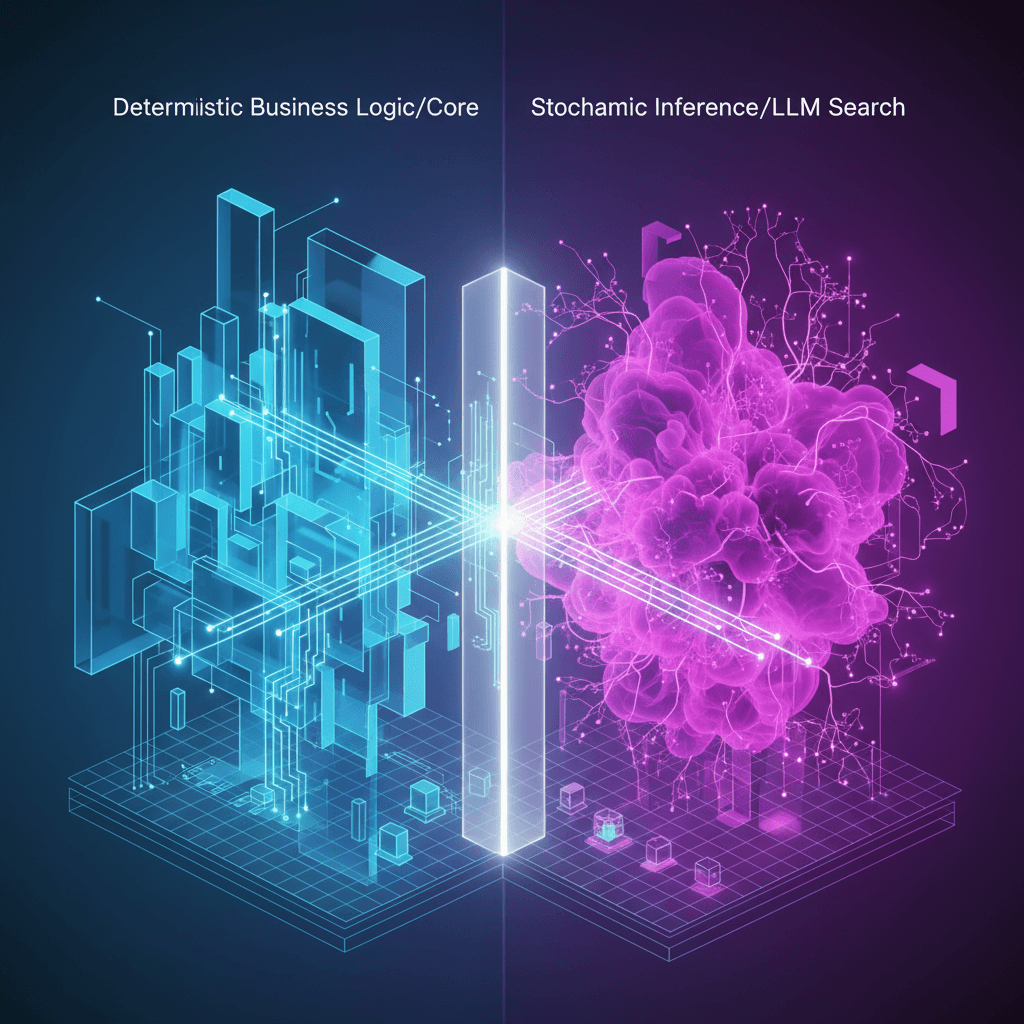

Decoupling deterministic business logic from stochastic LLM search unlocks production-grade reliability and governance for AI agents.

February 6, 2026

As teams move generative AI prototypes into reliable, production-grade agents, they face a twofold engineering challenge: reliability and scalability. Large Language Models (LLMs) are stochastic: a prompt that yields a correct output once may fail on the next attempt. This unpredictability is a significant hurdle for enterprise deployment, where mission-critical tasks demand deterministic and auditable execution. An emerging answer is architectural: systematically separating an AI agent's core business logic from its inference and search mechanisms, a decoupling that promises greater scalability and stronger governance for agentic AI systems.

The root of the scalability problem is what is known as the entanglement problem. In early AI agent designs, development teams often embed the core business workflow (the "happy path" of what the agent should do) directly within the code that manages the LLM's unpredictable behavior[1]. This includes wrapping LLM calls in complex error-handling loops, retries, branching logic, and "best-of-N" sampling strategies to mitigate the stochastic nature of the model's output[1]. The result is a monolithic codebase in which the logic defining the business task is inextricably mixed with the logic defining the *inference-time strategy*, that is, how the system navigates uncertainty[1]. This design is fragile, hard to test, and accumulates technical debt quickly, becoming a bottleneck for long-term maintenance and scaling[2].
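To make the entanglement concrete, here is a minimal, hypothetical sketch of the pattern described above. `flaky_llm` is an invented stand-in for a sampled model call (correct roughly 60% of the time), and `normalize_order` is an illustrative business task; neither comes from the cited sources. The point is that the business step, the retry strategy, the validity check, and the failure policy all live in one function.

```python
import random


def flaky_llm(prompt: str, rng: random.Random) -> str:
    """Hypothetical stand-in for a sampled LLM call: right about 60% of the time."""
    return prompt.upper() if rng.random() < 0.6 else "???"


def normalize_order(items: list[str], rng: random.Random, max_retries: int = 5) -> list[str]:
    """Entangled style: the business steps and the retry strategy share one function."""
    results = []
    for item in items:                          # business logic: handle each line item
        for _attempt in range(max_retries):     # inference strategy: retry loop
            answer = flaky_llm(f"normalize {item}", rng)
            if answer != "???":                 # ad hoc validity check, also inline
                results.append(answer)
                break
        else:                                   # failure policy, inline as well
            raise RuntimeError(f"gave up on {item!r}")
    return results
```

Switching strategies here, for example from plain retries to best-of-N with a scorer, means editing `normalize_order` itself, even though the business rule ("normalize every line item") has not changed.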

The decoupled architecture addresses this challenge by establishing a clear control boundary between the two primary functions of an AI agent: the core, deterministic business logic and the non-deterministic search and inference strategy. The business logic defines the mandated sequence of steps, operational rules, and constraints that must be followed to complete a task, serving as a "skeleton" or "functional core" for the application[3]. This logic is testable, auditable, and deterministic, so an agent's execution remains governable and compliant with applicable standards[2][4]. The LLM, by contrast, is the "intelligence" component: an advisory function that proposes candidate outcomes or actions probabilistically[2]. This separation is crucial because it prevents the LLM's stochastic output from directly triggering side effects in the production environment before that output has been validated against the deterministic business rules[2].
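The control boundary can be sketched as a deterministic gate between what the model proposes and what the system executes. In this minimal illustration, `Proposal`, `validate`, `execute`, and the refund policy (`ALLOWED_ACTIONS`, `MAX_REFUND`) are all hypothetical; the only idea taken from the text is that a proposal causes a side effect only after it passes rule checks.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Proposal:
    """An action suggested by the LLM (the advisory component)."""
    action: str
    amount: float


# Hypothetical deterministic business rules.
ALLOWED_ACTIONS = {"refund", "escalate"}
MAX_REFUND = 100.0


def validate(p: Proposal) -> list[str]:
    """Return rule violations; an empty list means the proposal is allowed."""
    errors = []
    if p.action not in ALLOWED_ACTIONS:
        errors.append(f"action {p.action!r} not permitted")
    if p.action == "refund" and not (0 < p.amount <= MAX_REFUND):
        errors.append(f"refund amount {p.amount} outside (0, {MAX_REFUND}]")
    return errors


def execute(p: Proposal, ledger: list[Proposal]) -> bool:
    """Perform the side effect (append to the ledger) only if validation passes."""
    if validate(p):
        return False
    ledger.append(p)
    return True
```

Because `validate` is a pure function, it can be unit-tested and audited independently of any model, which is the property the decoupled design relies on.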

For developers, this separation brings a more principled approach to engineering agentic workflows. Researchers from institutions including MIT CSAIL and Caltech have introduced a programming model called Probabilistic Angelic Nondeterminism (PAN), together with its Python implementation, ENCOMPASS, to formalize this separation[1][5]. The model lets developers write an agent's workflow as if its unreliable operations always succeed (the "angelic" part)[5]. The framework then compiles this program into a *search space*, a tree of possible execution paths, which an external runtime engine explores using inference-time search strategies such as best-of-N sampling or tree search[5]. Handling of LLM failures thus moves out of the business logic and into the search strategy. One case study on the ENCOMPASS framework reported up to an 80% reduction in the coding effort required to implement search and error-handling mechanisms in agents tasked with code translation or discovering transformation rules, letting programmers quickly identify the best-performing strategy with little change to the core logic[6].
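The general idea, writing the workflow once and letting an interchangeable driver search over its choice points, can be sketched in plain Python. This is not ENCOMPASS's API or PAN's actual semantics; every name below (`Reject`, `choose`, `run_with_prefix`, `restart_search`, `stepwise_search`) is hypothetical. The sketch assumes a replay-based design: each choice point is an LLM call, a deterministic check raises `Reject` to prune a branch, and a strategy re-executes the workflow while replaying answers it wants to keep.

```python
import random
from typing import Callable, Optional


class Reject(Exception):
    """Raised by the workflow when a proposed value fails a deterministic check."""


def flaky_llm(task: str, rng: random.Random) -> str:
    # Hypothetical stand-in for a sampled LLM "translation": right about half the time.
    return task[::-1] if rng.random() < 0.5 else "garbage"


def translate_workflow(choose: Callable[[str], str]) -> list[str]:
    """Business logic written as if every choose() call returns a usable answer."""
    out = []
    for task in ["abc", "def"]:
        answer = choose(task)            # choice point: the LLM proposes a value
        if len(answer) != len(task):     # deterministic check (hypothetical rule)
            raise Reject(task)
        out.append(answer)
    return out


def run_with_prefix(workflow, prefix: list[str], sampler: Callable[[str], str]):
    """Execute the workflow, replaying recorded answers first, then sampling new ones."""
    trace: list[str] = []

    def choose(task: str) -> str:
        answer = prefix[len(trace)] if len(trace) < len(prefix) else sampler(task)
        trace.append(answer)
        return answer

    try:
        return workflow(choose), trace
    except Reject:
        return None, trace


def restart_search(workflow, sampler, n: int = 8) -> Optional[list[str]]:
    """Strategy A: rerun the whole workflow from scratch, up to n times."""
    for _ in range(n):
        result, _trace = run_with_prefix(workflow, [], sampler)
        if result is not None:
            return result
    return None


def stepwise_search(workflow, sampler, budget: int = 8) -> Optional[list[str]]:
    """Strategy B: keep answers that passed their checks; resample only the failing step."""
    prefix: list[str] = []
    for _ in range(budget):
        result, trace = run_with_prefix(workflow, prefix, sampler)
        if result is not None:
            return result
        prefix = trace[:-1]              # assumes the last recorded answer was the one rejected
    return None
```

Swapping `restart_search` for `stepwise_search` changes how the search space is explored without touching `translate_workflow`, which mirrors the claim that strategies can be compared with little change to the core logic. A real system would add scoring, beam widths, and budgets per choice point.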

The decoupling also extends beyond the core agent to its tools and external resources, improving performance and efficiency. In a monolithic single-agent design, the entire system, including the LLM, must scale as one unit, which wastes resources[7]. In a microservices-based architecture, individual components such as data analysis tools or API connectors are deployed as separate, independent services, so each can be scaled according to its own demand profile[7]. This *independent scalability* means that resource-intensive components, like the GPU-powered LLM inference service, scale up only when actively needed, while less demanding agent layers keep a minimal footprint[1]. Granular scaling translates directly into cost savings, because organizations pay primarily for the compute they actually use[1]. This modular approach, often referred to as Layered Decoupling, also improves resilience: the failure of one tool or component does not bring down the whole system, which improves fault tolerance and overall uptime[8][9]. It also enables *polyglot development*, letting different teams build tools in the most appropriate programming language, which further increases development velocity and team autonomy[7].
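Independent scaling amounts to sizing each service from its own load and its own bounds. The sketch below is a toy planner under stated assumptions: the service names, per-replica capacities, and min/max bounds are invented, and `min_replicas=0` for the inference service models the "scale up only when needed" behavior described above. In production this is usually delegated to an autoscaler; Kubernetes' Horizontal Pod Autoscaler, for instance, documents a per-workload rule of the form `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class ServiceSpec:
    name: str
    capacity_per_replica: float  # requests/s one replica can serve (assumed figure)
    min_replicas: int
    max_replicas: int


def desired_replicas(spec: ServiceSpec, load_rps: float) -> int:
    """Size one service from its own load, clamped to its own bounds."""
    needed = math.ceil(load_rps / spec.capacity_per_replica) if load_rps > 0 else 0
    return max(spec.min_replicas, min(spec.max_replicas, needed))


def plan(specs: list[ServiceSpec], loads: dict[str, float]) -> dict[str, int]:
    """Each service is scaled independently; no service inherits another's load."""
    return {s.name: desired_replicas(s, loads.get(s.name, 0.0)) for s in specs}


# Hypothetical services and numbers, for illustration only.
SPECS = [
    ServiceSpec("agent-orchestrator", capacity_per_replica=200, min_replicas=1, max_replicas=10),
    ServiceSpec("llm-inference", capacity_per_replica=5, min_replicas=0, max_replicas=8),
    ServiceSpec("data-analysis-tool", capacity_per_replica=50, min_replicas=1, max_replicas=4),
]
```

With no inference traffic, `plan(SPECS, {"agent-orchestrator": 30, "data-analysis-tool": 120})` keeps the GPU-backed `llm-inference` service at zero replicas while the lightweight orchestrator stays at one; a monolith would instead have to replicate every component together.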

Ultimately, separating deterministic logic from the stochastic search process redefines how AI agents scale from a software perspective. It shifts the focus away from constant, complex prompt engineering and error handling toward established software architecture principles that prioritize clear control boundaries, observability, and modularity[2][4]. For the industry, this architectural commitment lets AI agents evolve from unreliable prototypes into reliable, auditable, and cost-efficient components of enterprise infrastructure, capable of meeting performance targets such as response times under three seconds and error rates below five percent in high-load, real-world environments[8]. This shift is essential for unlocking the transformative potential of agentic AI across critical business functions, enabling autonomous systems that are both intelligent and trustworthy.

Sources

[2]

[3]

[4]

[5]

[6]

[8]

[9]