DeepMind's Agentic Vision Transforms Gemini Into Active Investigative AI Agent

Agentic Vision uses executable code to actively investigate images, delivering measurable accuracy gains on complex visual tasks.

January 28, 2026

Google DeepMind has introduced a foundational shift in how large multimodal models interpret visual information with the launch of "Agentic Vision" in its Gemini 3 Flash model. The capability turns the model's interaction with images from a passive, single-pass analysis into an active, investigative process[1][2]. Rather than describing what it sees in a single static glance, Gemini 3 Flash can now interrogate an image by generating and executing code, an approach that promises to significantly improve accuracy and reliability on complex visual tasks[3][4]. The move represents a major step in the evolution of multimodal models from advanced perception systems toward autonomous visual agents[2][5].

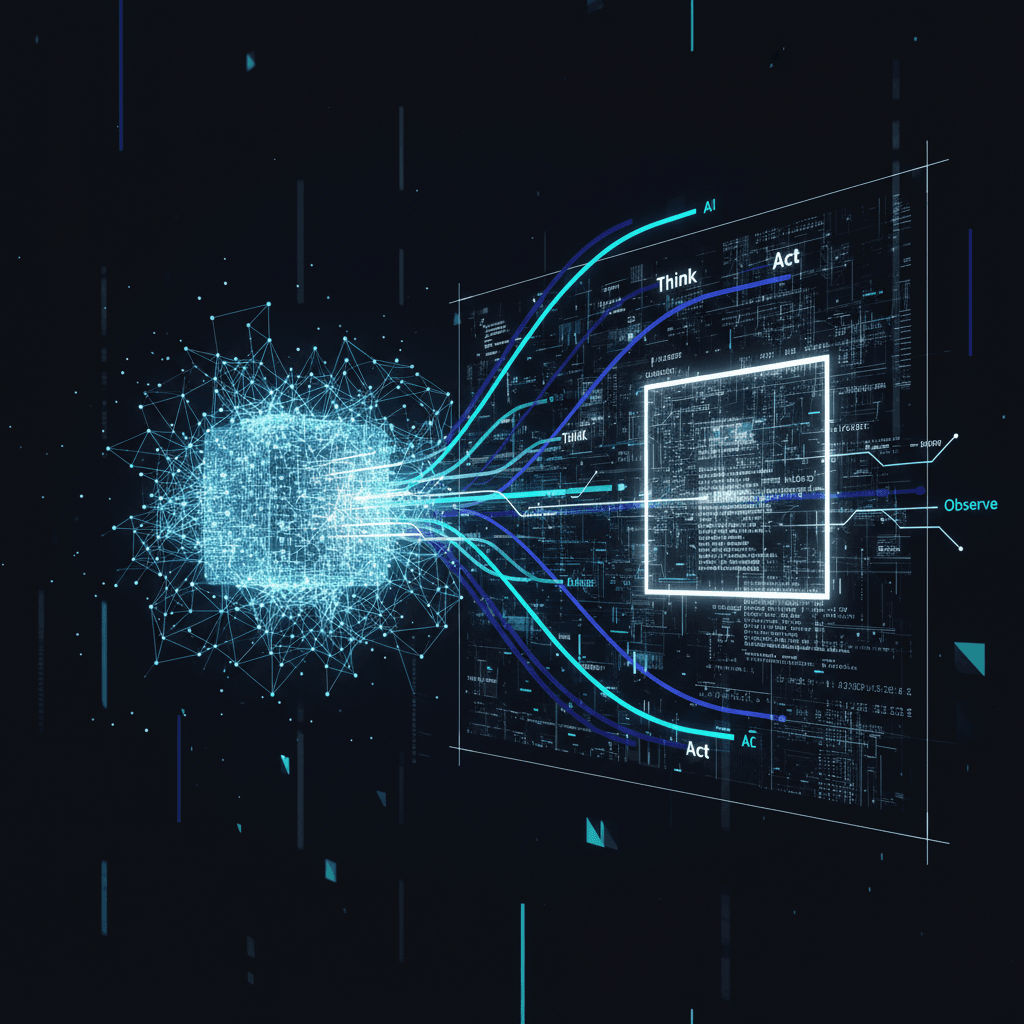

The core of Agentic Vision is the systematic integration of visual reasoning with executable Python code, which lets the model overcome a critical limitation of earlier frontier models: difficulty with fine-grained details[1][6]. When traditional vision models miss a tiny, distant, or complex detail, such as a serial number on a microchip or small text on a distant sign, they are often forced to guess or infer the missing information, which leads to visual hallucinations and inaccuracies[6][2]. Agentic Vision replaces this probabilistic guessing with an iterative "Think-Act-Observe" loop[3][6][2]. In the "Think" phase, the model analyzes the user’s request and the initial image and formulates a multi-step plan to extract the necessary visual evidence[6][2]. In the "Act" phase, the model generates and executes Python code that manipulates the image, for example by cropping it, zooming in on specific areas, rotating it, running calculations, or annotating it with bounding boxes and labels[3][1][4]. Finally, in the "Observe" phase, the transformed image is added back into the model's context window, so the model can re-examine the updated visual data before continuing its reasoning or generating a final response[6][1][2]. Repeating this loop grounds the model’s answers in visual evidence it has inspected at the pixel level, which substantially improves its handling of intricate, high-resolution images[6][4].
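The Think-Act-Observe loop can be illustrated with a minimal, self-contained Python sketch. It is not DeepMind's implementation: the image is a toy grid of grayscale values, and `crop`, `zoom`, `brightest`, `think`, `Context`, and `think_act_observe` are hypothetical names chosen for illustration. In particular, the rule-based `think` function only stands in for the planning that the model itself performs.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

# Toy "image": a grid of grayscale pixel values (a list of rows).
Image = list[list[int]]
Action = Callable[[Image], Image]


def crop(img: Image, top: int, left: int, height: int, width: int) -> Image:
    """Return the height x width sub-grid whose top-left corner is (top, left)."""
    return [row[left:left + width] for row in img[top:top + height]]


def zoom(img: Image, factor: int) -> Image:
    """Nearest-neighbour upscale: repeat each pixel `factor` times along both axes."""
    out: Image = []
    for row in img:
        wide = [px for px in row for _ in range(factor)]
        out.extend(list(wide) for _ in range(factor))
    return out


def brightest(img: Image) -> tuple[int, int]:
    """Return the (row, col) of the highest-valued pixel."""
    _, r, c = max((px, r, c) for r, row in enumerate(img) for c, px in enumerate(row))
    return r, c


@dataclass
class Context:
    """Hypothetical stand-in for the model's context window."""
    images: list[Image]
    notes: list[str] = field(default_factory=list)


def think(ctx: Context) -> Optional[tuple[str, Action]]:
    """Toy rule-based planner (hypothetical; the real model plans with its own reasoning)."""
    if not ctx.notes:  # first step: isolate the region of interest
        r, c = brightest(ctx.images[-1])
        top, left = max(r - 1, 0), max(c - 1, 0)
        return "crop 3x3 around brightest pixel", lambda im: crop(im, top, left, 3, 3)
    if len(ctx.notes) == 1:  # second step: enlarge the crop for closer inspection
        return "zoom 4x", lambda im: zoom(im, 4)
    return None  # enough evidence gathered; stop and answer


def think_act_observe(ctx: Context, max_steps: int = 5) -> Context:
    """Loop until the planner returns None or max_steps is reached."""
    for _ in range(max_steps):
        step = think(ctx)                # Think: choose the next action, if any
        if step is None:
            break
        note, action = step
        result = action(ctx.images[-1])  # Act: run code on the latest image
        ctx.images.append(result)        # Observe: add the output back to the context
        ctx.notes.append(note)
    return ctx


# Usage: a 16x16 blank image with one bright pixel standing in for a tiny detail.
image = [[0] * 16 for _ in range(16)]
image[12][3] = 255
ctx = think_act_observe(Context(images=[image]))
print(ctx.notes)                                     # ['crop 3x3 around brightest pixel', 'zoom 4x']
print(len(ctx.images[-1]), len(ctx.images[-1][0]))   # 12 12
```

Appending each result instead of overwriting the previous image keeps earlier views available in the context while the new one is inspected, which matches the "Observe" step described above.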

Initial performance metrics and early adoption point to an immediate, tangible impact of this agentic approach on vision tasks[3][2]. Google DeepMind reports that enabling code execution with Gemini 3 Flash yields a consistent quality improvement of 5 to 10 percent across most vision benchmarks[3][1]. Gains of this size matter most in high-stakes applications where precision is paramount[7]. For example, a construction planning startup has already integrated the capability to iteratively crop and analyze high-resolution sections of building plans, such as roof edges, and reports a five percent accuracy improvement in checking blueprints for code compliance[3][1]. Beyond detailed inspection, the model can use its code-execution tool for visual arithmetic, plotting data from high-density tables, and reliably summing line items on a receipt, tasks on which language models have historically struggled or hallucinated[4][2]. Performing visual math through executed code replaces the model's internal, probabilistic estimates with deterministic, verifiable computation, addressing one of the field's persistent weaknesses[2][7].
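The difference between estimating a receipt total and computing it can be shown with a short Python sketch. It assumes the line items have already been read off the receipt image (for example, from zoomed-in crops); the item names, prices, printed total, and the `sum_line_items` and `check_total` helpers are all hypothetical. Using `Decimal` rather than `float` is a design choice here that keeps currency arithmetic exact.

```python
from decimal import Decimal

# Hypothetical line items, as they might be read from zoomed-in crops of a receipt.
line_items = [
    ("Coffee", "4.50"),
    ("Bagel", "3.25"),
    ("Orange juice", "5.10"),
    ("Muffin", "2.95"),
]
printed_total = "15.80"  # hypothetical total printed at the bottom of the receipt


def sum_line_items(items: list[tuple[str, str]]) -> Decimal:
    """Add the prices exactly; Decimal avoids binary floating-point rounding."""
    return sum((Decimal(price) for _, price in items), Decimal("0"))


def check_total(items: list[tuple[str, str]], printed: str) -> tuple[Decimal, bool]:
    """Return the computed sum and whether it matches the printed total."""
    computed = sum_line_items(items)
    return computed, computed == Decimal(printed)


computed, matches = check_total(line_items, printed_total)
print(computed)  # 15.80
print(matches)   # True
```

Because the sum comes from executed code, a mismatch with the printed total becomes an explicit signal to re-inspect the image rather than a silently wrong answer.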

The introduction of Agentic Vision establishes a new paradigm in the competitive landscape of generative AI and has significant implications for the future of autonomous systems[6][2]. Models that use code or tools to enhance their reasoning are not new, and competitors are exploring similar capabilities, but DeepMind's dedicated focus on visual investigation marks a significant specialization of agentic behavior[3][2]. By treating image understanding as an active, goal-directed investigation, DeepMind is positioning Gemini 3 Flash as a foundation for building more complex and reliable AI agents[5][8]. The model already decides automatically when to zoom in and inspect fine details, and future plans include expanding its toolset with capabilities such as web search and reverse image search to further ground its understanding of the world[2]. This trajectory points toward AI agents that execute complex, multi-step workflows across all modalities, not just text, mirroring a human-like investigative process of gathering, manipulating, and verifying evidence before reaching a conclusion[6][4][2]. The technology is available to developers through the Gemini API in Google AI Studio and Vertex AI and is rolling out to users of the Gemini app, broadening access to the capability and opening the way for a new class of high-accuracy visual applications[2][9][10].

Sources

[4]

[5]

[6]

[7]

[8]

[9]

[10]