Anthropic's Claude AI Programs and Controls Robot Dog, Bridging Digital to Physical

Project Fetch showcases Claude empowering novices to program complex robots, democratizing robotics and accelerating physical AI.

November 13, 2025

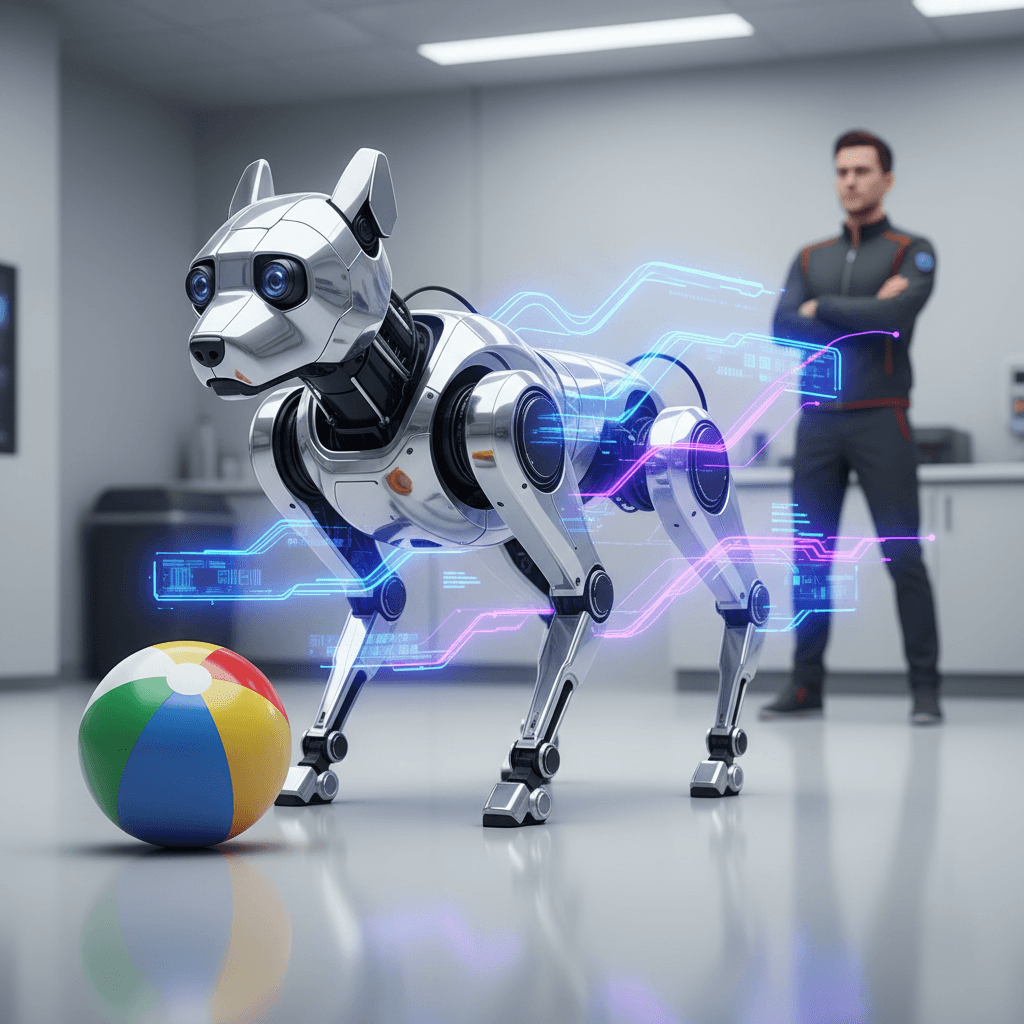

In a notable move from the digital realm into the physical world, AI research and safety company Anthropic has demonstrated that its large language model, Claude, can help people program and control a robot dog. The experiment, internally dubbed "Project Fetch," shows how these models are evolving from text generators into agents that can interact with and manipulate their physical environment. The result has implications for robotics, automation, and human-computer interaction, and it points toward a future in which the barrier to programming complex machinery could be substantially lowered.

The core of Project Fetch was a controlled experiment that pitted two teams of Anthropic researchers against each other in a race to program a quadruped robot.[1] None of the eight researchers involved had prior robotics experience, so the study could measure the effect of AI assistance on novices.[1][2] One team, "Team Claude," was given access to Claude, while the other, "Team Claude-less," had to rely on traditional research and coding methods.[1][2] Both teams had the same objective: program a Unitree Go2 robot dog to locate and interact with a beach ball.[1][3] The experiment ran in three phases: controlling the robot remotely, writing software that uses sensor data to control its movement, and finally attempting to make the robot retrieve the ball autonomously.[1] The results were stark: the Claude-assisted team completed its tasks in roughly half the time of its counterparts and reached a level of success the other team could not match.[1]

A closer look at the experiment shows that Claude's most significant contribution was not writing flawless code from the outset but speeding up the arduous work of interfacing with the robot's hardware and navigating its complex software. The team without AI assistance spent a large share of its time, nearly three hours, just trying to connect to the robot's video camera.[1] The Claude-assisted team overcame similar hurdles in a fraction of the time, with the AI acting as an expert troubleshooter that could sift through confusing technical documentation and resolve cryptic error messages.[1] This "uplift," as Anthropic calls it, let the researchers experiment more broadly and write significantly more code.[1] While the Claude-less team voiced audible frustration and confusion, an analysis of audio recorded in the workspace showed that the Claude-assisted team expressed more positive sentiment.[4][1] Ultimately, only Team Claude made substantial progress toward full autonomy, programming the robot to locate the beach ball and navigate toward it without direct human steering, a feat the unassisted team could not replicate.[1][3]

The experiment is more than a demonstration of AI's coding ability; it is a concrete example of how complex technical fields could be opened up to non-specialists.[5] Robotics has traditionally been the domain of specialists with deep knowledge of mechanics, electronics, and intricate programming languages.[4] Project Fetch suggests a future in which large language models act as intermediaries, translating high-level human goals into the specific code required to operate machinery. This could lower the barrier to entry for small businesses, researchers, and hobbyists, potentially unleashing a wave of innovation in customized robotics solutions.[5] The implications extend to industries such as logistics and manufacturing, where AI could accelerate the development and deployment of autonomous systems.[5] However, Anthropic also sees this "uplift" as a precursor to autonomy: an AI that first assists humans with a task may eventually be able to perform that task entirely on its own.[1]

While the potential benefits are large, this development also raises the safety and ethical questions that are central to Anthropic's mission. The company was founded by former OpenAI employees with a strong focus on the potential risks of advanced AI.[3] Anthropic researchers have said that the next logical step for AI models is to "start reaching out into the world and affecting the world more broadly," which requires interfacing with robots.[4][3] The experiment is therefore not only a capability demonstration but also a proactive exploration of a future in which AI models could "self-embody" and operate physical systems directly.[4][3] This raises critical questions about control, predictability, and the prevention of misuse.[3] Experts have noted that while current AI still relies on external tools for sensing and movement, experiments like Project Fetch are an important step toward systems that learn from and participate in the physical world, which could make them both more useful and potentially more dangerous.[4][3]