Wikipedia forces Big Tech to pay for AI training data access

In a pivotal shift, Big Tech pays for reliable Wikipedia data, protecting the future of open knowledge.

January 15, 2026

The Wikimedia Foundation, the non-profit organization that operates Wikipedia, has cemented a pivotal shift in the foundational economics of the artificial intelligence boom. In a landmark set of agreements, some of the world's largest AI developers, including Amazon, Meta, Microsoft, Mistral AI, and Perplexity, have formally joined Google as paying clients for the Wikipedia content they have long relied on for free. These agreements use the Wikimedia Enterprise API, a commercial product designed to move the relationship between the open-knowledge resource and the technology giants from uncompensated data scraping to a formal, paid, and sustainable partnership. This new dynamic is a direct response to the generative AI revolution, which has validated Wikipedia’s status as a critical information source while severely undermining the volunteer-driven encyclopedia’s traditional operational model.

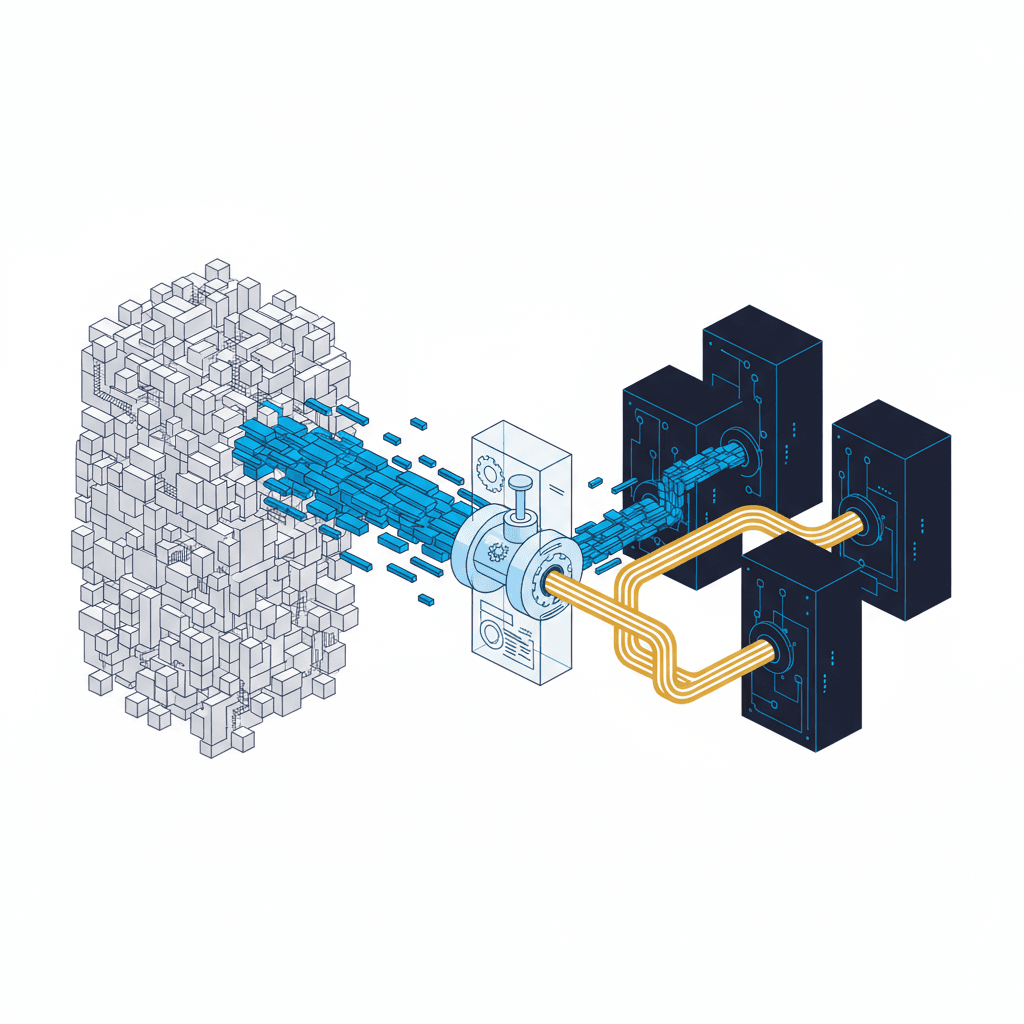

The new paid partnerships center not on the acquisition of content rights, which remain free and open under Creative Commons licenses, but on the delivery mechanism and reliability of the data itself. For two decades, technology companies harvested Wikipedia’s more than 65 million articles using simple bots and large-scale data dumps, a method that was permissible under the open content license but often strained the foundation’s server infrastructure. The Wikimedia Enterprise API, launched to professionalize this exchange, provides clients with a high-volume, high-speed, and rigorously structured data stream, complete with service-level agreements and dedicated technical support.[1][2][3][4][5][6] This service allows companies like Microsoft and Meta to efficiently feed clean, up-to-date, and machine-readable data directly into their large language models, powering products from AI assistants like Copilot and Alexa to the foundational training sets for their generative models.[7][4][6]

The decision by these multibillion-dollar corporations to begin paying for data they previously accessed at no cost is motivated by a mix of technical necessity, ethical considerations, and recognition of the platform's value. Lane Becker, the president of Wikimedia Enterprise, articulated this shift, stating that Wikipedia is a "critical component of these tech companies' work that they need to figure out how to support financially."[1][3] For the AI developers, the Enterprise API offers a superior product: structured, clean data that is easier and faster to integrate than the raw, messy content dumps, and that also mitigates the legal and ethical risks associated with unsanctioned data scraping in an increasingly regulated environment.[7][3] The financial terms of these new agreements were not disclosed, but the revenue represents a vital, diversified income stream for the Wikimedia Foundation, which historically has relied almost entirely on small, individual donations to cover its massive operational costs.[1][3][6][8]

This commercial pivot is fundamentally a defense mechanism for the sustainability of open knowledge in the age of generative AI. The same technology that makes Wikipedia’s content so valuable to Big Tech is also threatening the non-profit’s core operating model. The rise of AI-powered search overviews and chatbots, which often cite Wikipedia without requiring a click-through, has led to a noticeable decline in direct user engagement with the website. The Wikimedia Foundation reported an 8% year-on-year decrease in real human traffic between March and August 2025, a trend it attributed to the impact of generative AI on how people seek information.[9][10][11][12] This decline in human readership presents a significant challenge: fewer direct visits mean a smaller pool of potential donors and less visibility for the community of volunteers whose unpaid labor creates and curates the content.[11] Wikipedia co-founder Jimmy Wales acknowledged the dilemma, welcoming the use of the human-curated data for AI training, but adding that the companies "should probably chip in and pay for your fair share of the cost that you're putting on us."[13][8]

The implications of these paid partnerships extend far beyond Wikipedia’s balance sheet, setting a powerful and potentially industry-altering precedent for the entire digital content ecosystem. The movement of dominant AI players toward a licensed model, even when the underlying content is openly licensed, establishes a market-based valuation for high-quality, structured, and reliably delivered data. This shift legitimizes the idea that while content may be free to share and adapt, the industrial-scale process of turning it into a commercial AI product warrants compensation to the original platform.[7] This model offers a blueprint for other media organizations and open-knowledge repositories facing similar traffic and revenue losses from generative AI summarization. As regulatory scrutiny of AI training data intensifies globally, formal, contractual agreements that ensure attribution and compensate the original creators may become the industry standard, fostering a more transparent and sustainable development environment for AI technology.[7] However, the model may also create a new divide, with only large, well-resourced platforms like Wikipedia able to build the commercial API infrastructure needed to monetize their content, leaving smaller, non-commercial content creators with little recourse against traffic and revenue cannibalization by AI systems. The new agreements represent a structural evolution: a necessary transaction to protect the world's largest repository of free knowledge by ensuring that those who benefit most from its industrial reuse also contribute to its long-term viability.[1][13]