Tesla Robotaxis Spark Federal Probe Over Dangerous AI Driving

Tesla's AI robotaxis face a federal inquiry after viral videos showed dangerous, erratic driving, raising questions about safety and public trust.

June 26, 2025

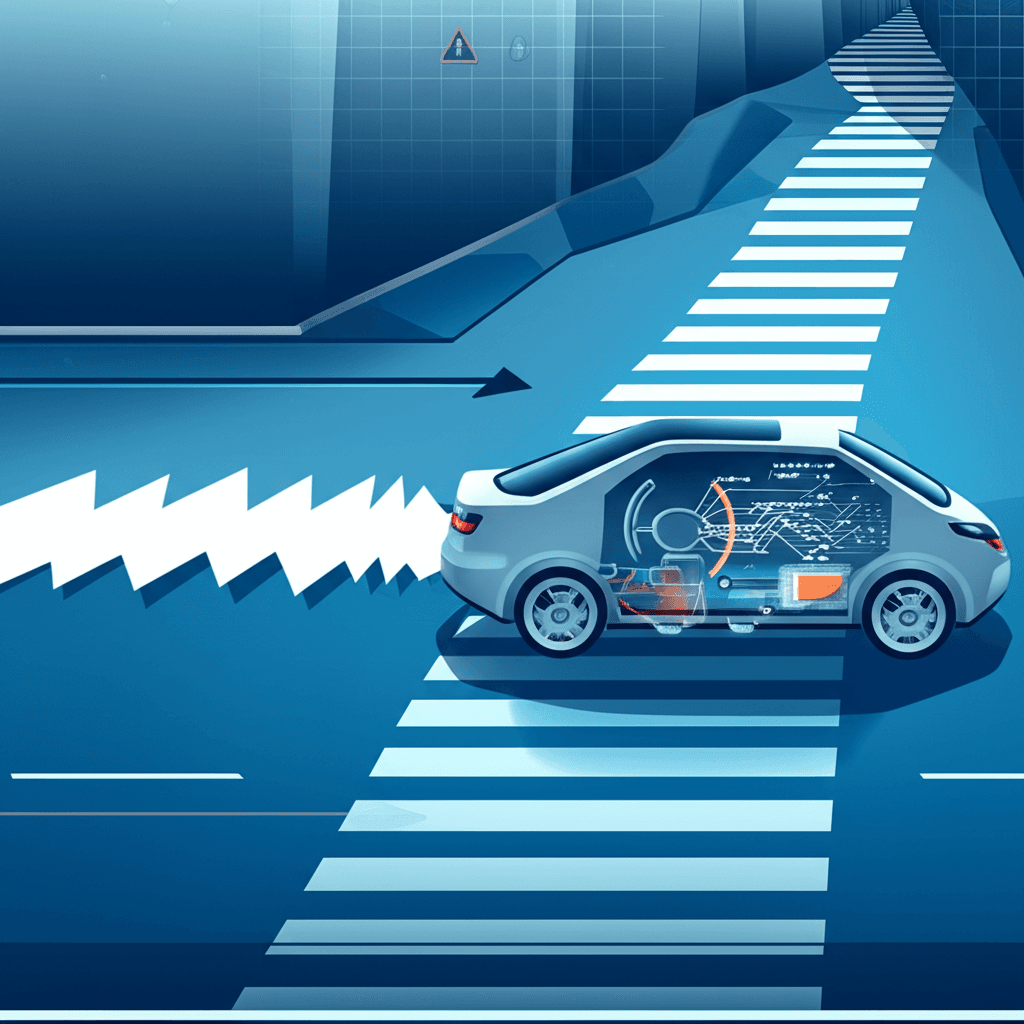

Federal regulators are intensifying their scrutiny of Tesla after a series of startling videos emerged online showing the company's newly launched AI-controlled robotaxis exhibiting erratic and dangerous driving behavior. The National Highway Traffic Safety Administration (NHTSA) has contacted the electric vehicle maker to gather more information following the public rollout of its paid robotaxi service in Austin, Texas.[1][2][3][4] The incidents, captured by passengers and posted on social media, have cast a harsh spotlight on the readiness of Tesla's autonomous driving technology and raised significant questions about public safety and the future of the artificial intelligence industry. This inquiry adds to an existing and broader investigation into Tesla's "Full Self-Driving" (FSD) software, signaling a critical juncture for a technology that its CEO has touted as revolutionary.[3][5]

The incidents that triggered the federal inquiry were documented during the initial launch of the robotaxi service, which involved a limited number of Tesla Model Y vehicles.[6][7] In one widely circulated video, a robotaxi is seen in a designated left-turn-only lane but proceeds straight through an intersection, entering a lane of opposing traffic.[6][8][4] The vehicle's steering wheel is shown jerking erratically as the car swerves multiple times before correcting itself, all while continuing on the wrong side of the road for approximately 10 seconds.[9][4] Fortunately, there was no oncoming traffic at the moment.[8] In another recorded event, a robotaxi braked abruptly and repeatedly in the middle of a road, apparently in response to the flashing lights of parked police cars that were attending to an unrelated incident nearby.[6][10][8] Other videos and accounts have reported the autonomous vehicles speeding and stopping unexpectedly in intersections.[7][11][12] While some passengers who recorded these events downplayed the immediate danger, asserting they did not feel unsafe, the footage has been alarming enough to capture the attention of federal safety officials and technology experts.[6][8]

The NHTSA confirmed that it is aware of the incidents and is in contact with Tesla to gather additional information, a standard step when potential safety defects arise.[6][13][2] The agency clarified that while it does not pre-approve new automotive technologies, it has the authority to investigate potential safety risks and enforce recalls if necessary.[7][13][11] "Following an assessment of those reports and other relevant information, NHTSA will take any necessary actions to protect road safety," the agency stated.[6][11] This recent inquiry is part of a larger, ongoing investigation into Tesla's FSD technology.[3] That broader probe, which affects roughly 2.4 million Tesla vehicles, was initiated after a series of crashes occurred in low-visibility conditions, including incidents involving sun glare and fog, one of which was fatal.[3][14][9][15][16] Regulators have previously expressed concern that Tesla's marketing and public statements may imply its vehicles are fully autonomous, conflicting with owner's manuals that state human supervision is required.[17]

The governmental scrutiny comes at a pivotal moment for Tesla and the burgeoning autonomous vehicle sector. Tesla's CEO has repeatedly made ambitious promises about the imminent arrival of a fleet of millions of robotaxis, a vision central to the company's future valuation.[6][5] The Austin test, initially offered to a select group of investors, analysts, and influencers, was intended to be a milestone, the "culmination of a decade of hard work," as described by the CEO.[12] Despite the celebratory launch, the documented failures highlight the immense challenges of developing AI that can consistently and safely navigate the complexities of real-world driving.[12][4] While some analysts view these issues as inevitable growing pains for a new technology that will eventually be resolved, other experts argue that the system's erratic performance makes it unfit for public road testing without trained safety drivers.[6][4] The incidents also put Tesla in a challenging competitive position, as rivals like Waymo, a subsidiary of Google's parent company Alphabet, already operate established self-driving taxi services in several cities, including Austin, seemingly with a stronger safety record.[10][1]

The implications of the NHTSA's inquiry extend far beyond Tesla's stock price or its competition with other autonomous vehicle developers. The core issue at stake is public trust.[7] For AI-driven technologies to be widely adopted, especially in a critical application like transportation, they must demonstrate a level of safety and reliability that surpasses that of human drivers. The viral videos of swerving and braking robotaxis threaten to erode that trust before it is fully formed.[7][18] The "black-box" nature of some complex AI systems makes it difficult to predict and explain their failures, posing a significant challenge for both developers and regulators.[7] The path forward for the AI and autonomous driving industry depends on a transparent and rigorous approach to safety, where technological advancement is carefully balanced with public welfare. The outcome of this federal inquiry into Tesla's robotaxis is likely to be a defining chapter in that story, shaping regulation, consumer confidence, and the trajectory of artificial intelligence in daily life.

Sources

[3]

[5]

[6]

[7]

[8]

[9]

[10]

[11]

[13]

[16]

[17]

[18]