Semantic Tokens End AI Gibberish: Zhipu Launches Image Model That Can Write

Zhipu’s GLM-Image uses semantic tokens and LLM reasoning to master text, trained entirely on domestic silicon.

January 15, 2026

Zhipu AI, a prominent force in the Chinese artificial intelligence landscape, has introduced GLM-Image, an open-source image generation model aimed at one of the most persistent shortcomings of generative AI: the inability to reliably render text within images. The release rests on a hybrid architecture and on what the company calls "semantic tokens," a representation that changes how the model perceives and plans the visual content it creates. The 16-billion-parameter model is more than a high-fidelity image generator; it marks a significant step in visual-linguistic reasoning and is positioned as a rival to proprietary systems from major global technology firms.

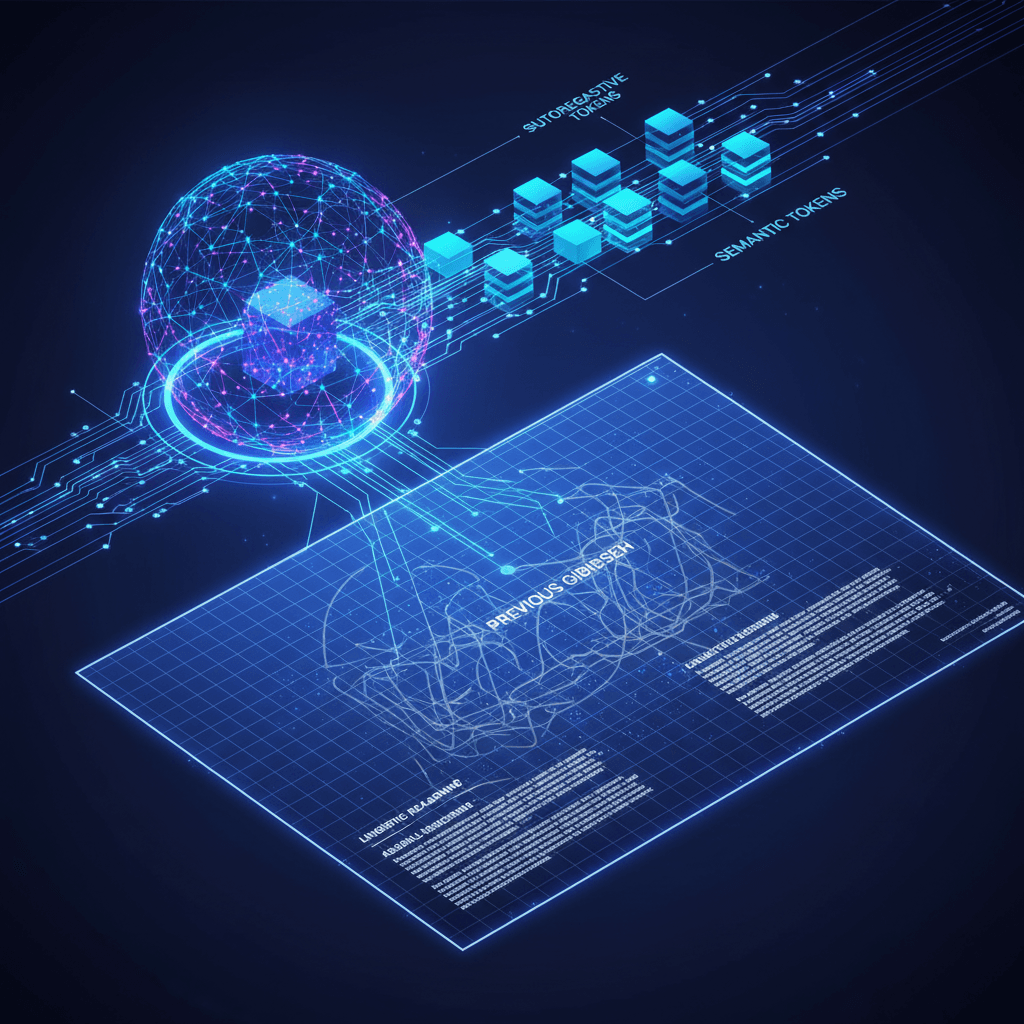

The core of Zhipu AI's innovation is a hybrid architecture that splits image generation into specialized modules for reasoning and rendering. Unlike traditional end-to-end diffusion models, which often struggle with complex instruction following and precise text rendering, GLM-Image employs a two-stage pipeline. The first stage is a 9-billion-parameter autoregressive generator, initialized from the company's GLM-4 language model, that functions as the system’s "brain." It takes the user's prompt and sequentially generates a compact semantic representation of the desired image. The second stage is a 7-billion-parameter diffusion decoder that takes this high-level semantic blueprint and renders the final image at resolutions from 1024 to 2048 pixels[1][2]. Together the two stages account for the model's 16 billion parameters. Because of this separation, the overall layout, content, and crucial textual elements are fixed by the language model before the diffusion model begins filling in high-frequency visual details[3][1][4].
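The plan-then-render flow described above can be illustrated with a toy sketch. This is not Zhipu AI's code: the function names `plan_tokens`, `render`, and `generate`, the 4x4 token grid, and the arithmetic used to produce token ids are all hypothetical stand-ins. Only the two parameter counts come from the article.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Stage:
    name: str
    params_billion: float


# Parameter counts quoted in the article; everything else is illustrative.
PLANNER = Stage("autoregressive generator", 9.0)
DECODER = Stage("diffusion decoder", 7.0)


def plan_tokens(prompt: str, grid: int = 4) -> List[int]:
    """Toy stand-in for stage 1: emit grid*grid token ids one at a time.

    Each new token depends on the prompt and on the tokens already
    emitted, which is the defining property of autoregressive generation.
    """
    tokens: List[int] = []
    for position in range(grid * grid):
        seed = sum(ord(ch) for ch in prompt) + sum(tokens) + position
        tokens.append(seed % 256)
    return tokens


def render(tokens: List[int], grid: int = 4, upscale: int = 2) -> List[List[int]]:
    """Toy stand-in for stage 2: expand each token into an upscale x upscale patch.

    The layout is already fixed by the token grid; this step only fills in pixels.
    """
    size = grid * upscale
    image = [[0] * size for _ in range(size)]
    for index, token in enumerate(tokens):
        row, col = divmod(index, grid)
        for dr in range(upscale):
            for dc in range(upscale):
                image[row * upscale + dr][col * upscale + dc] = token
    return image


def generate(prompt: str) -> List[List[int]]:
    """Run the two stages in order: plan first, then render."""
    return render(plan_tokens(prompt))


if __name__ == "__main__":
    total = PLANNER.params_billion + DECODER.params_billion
    print(f"total parameters: {total:.0f}B")
    picture = generate("a shop sign that says OPEN")
    print(f"rendered {len(picture)}x{len(picture[0])} toy image")
```

The point of the sketch is the ordering: `render` never changes which token sits where, so any decision about layout or text has to be made in `plan_tokens`.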

The concept of "semantic tokens" is central to how the autoregressive module plans the image. Conventional VQ-VAE (Vector Quantized Variational Autoencoder) tokens used in earlier models are optimized for visual reconstruction, so they capture color and texture better than abstract meaning. Zhipu AI’s semantic tokens, by contrast, are designed to carry explicit meaning alongside color information, indicating whether a given region of the latent space represents a face, a background element, or, most critically, a block of text[1]. This explicit semantic encoding gives the model a clearer, more structured view of the scene's content, which is said to speed up learning. When a prompt requires text, the autoregressive generator parses the instruction and sequentially generates the tokens that represent that text, so that layout and content are semantically consistent before rendering begins[4].
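For readers unfamiliar with the conventional tokens mentioned above, the following minimal sketch shows the standard VQ-VAE quantization step: each patch embedding is replaced by the index of its nearest codebook vector. The two-dimensional codebook and patch values are made up for illustration, and the sketch does not show how GLM-Image constructs its semantic tokens, which the article does not specify.

```python
from typing import List, Sequence


def squared_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def quantize(vector: Sequence[float], codebook: Sequence[Sequence[float]]) -> int:
    """Return the index of the codebook entry closest to `vector`.

    This index is the discrete "token". Nothing in the lookup knows
    whether the patch is a face, a background, or a letter: the choice
    is driven purely by closeness in the embedding space.
    """
    return min(range(len(codebook)), key=lambda i: squared_distance(vector, codebook[i]))


# Hypothetical 4-entry codebook and three patch embeddings.
codebook: List[Sequence[float]] = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
patches: List[Sequence[float]] = [(0.1, 0.2), (0.9, 0.1), (0.2, 0.8)]

tokens = [quantize(patch, codebook) for patch in patches]
print(tokens)  # [0, 1, 2]
```

A token produced this way is only as meaningful as the embedding it was quantized from, which is why a reconstruction-trained encoder tends to yield tokens that describe appearance rather than content.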

The practical payoff is clearest in the model’s rendering of complex text. The familiar "AI gibberish," garbled characters in generated signs and infographics, arises because diffusion models treat text as visual patterns rather than as language. GLM-Image addresses this by integrating a specialized Glyph Encoder text module within its diffusion decoder[5][6]. Combined with the layout-first semantic planning, this yields significantly higher accuracy. On the CVTG-2K (Complex Visual Text Generation) benchmark, GLM-Image reportedly achieved a word accuracy score of 0.9116, about 13 percentage points ahead of the 0.7788 posted by Google's proprietary Nano Banana Pro model[3]. That level of reliability matters most for text-heavy assets such as technical diagrams, slides, and posters, where accuracy is paramount[3][7].
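To make the word accuracy figures concrete, here is a simplified sketch of how such a score could be computed: the fraction of expected words that are read back exactly from the generated image. The exact CVTG-2K scoring procedure is not described in the article, so the position-by-position matching below is an assumption, and the sign text is a made-up example.

```python
from typing import List


def word_accuracy(expected: List[str], recognized: List[str]) -> float:
    """Fraction of expected words reproduced exactly, compared position by position.

    Words missing from `recognized` count as errors because `zip` stops
    at the shorter list while the denominator stays len(expected).
    """
    if not expected:
        raise ValueError("expected must contain at least one word")
    hits = sum(1 for want, got in zip(expected, recognized) if want == got)
    return hits / len(expected)


# Hypothetical example: one of four words on a sign comes out garbled.
expected_words = ["GRAND", "OPENING", "SALE", "TODAY"]
recognized_words = ["GRAND", "OPENNIG", "SALE", "TODAY"]
print(word_accuracy(expected_words, recognized_words))  # 0.75

# Gap between the two scores reported in the article.
gap = round(0.9116 - 0.7788, 4)
print(gap)  # 0.1328
```

Read this way, a score of 0.9116 means roughly nine in ten target words render correctly, against a little under eight in ten at 0.7788.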

Beyond the technical architecture, the release of GLM-Image carries broader geopolitical and industrial significance. Zhipu AI has stated that the entire process, from data preprocessing to large-scale training, was completed on a domestically produced computing platform, specifically Huawei’s Ascend Atlas 800T A2 servers and Ascend 910 AI processors[8][5][7]. This serves as a proof of concept that a state-of-the-art, industrial-grade model of 16 billion parameters can be trained entirely outside the Nvidia hardware ecosystem that has largely dominated global AI development[5][7]. By building and open-sourcing a model of this complexity on domestic silicon, Zhipu AI has signaled a maturation of alternative compute infrastructure and offered evidence that non-Nvidia hardware can sustain the long-running stability that large model training requires[7].

The open-source nature of GLM-Image further amplifies its potential impact. Released under a permissive license that allows self-hosting, modification, and unrestricted commercial use, the model directly challenges the dominance of closed, proprietary systems[3][6]. By offering a high-fidelity tool that excels at text rendering and knowledge-intensive generation, Zhipu AI is widening access to capabilities previously restricted to the largest and best-resourced technology companies[3]. The open-source strategy is designed to foster an ecosystem of developers and enterprises and to accelerate work in enterprise automation, digital content creation, and business intelligence, particularly where precise semantic understanding and complex information expression are critical[6][2]. The model's capacity for knowledge-intensive generation, in which the AI must draw on specific facts or history to create the image, reinforces its utility in professional and academic contexts[7].

The introduction of GLM-Image and its semantic tokens marks an important inflection point in the evolution of generative AI. By combining the structural reasoning of a large language model with the detail-rendering power of a diffusion decoder, Zhipu AI has made substantial progress on the long-standing "AI gibberish" problem, opening up new possibilities for AI-driven visual content creation. Its development on domestically produced hardware also underscores a shift in the global AI landscape, showing that world-class models can be built on diverse computing platforms. The success of GLM-Image suggests that cutting-edge, enterprise-ready AI will increasingly be driven by architectural designs that prioritize semantic understanding and by global competition in full-stack AI infrastructure.

Sources

[2]

[4]

[7]

[8]