Runway introduces GWM-1, AI's major leap into simulating entire realities.

Runway’s GWM-1 and Gen-4.5 usher in an era where AI doesn't just create, but simulates reality itself.

December 12, 2025

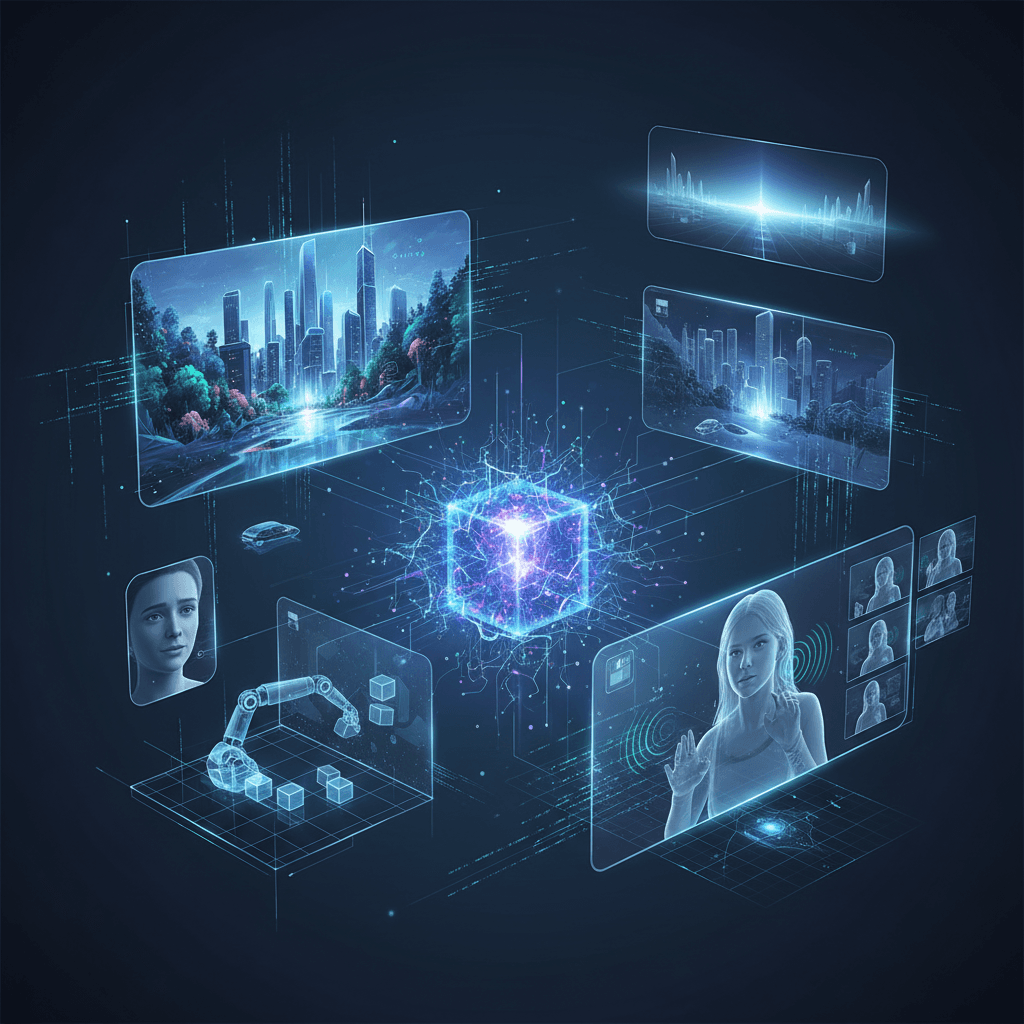

AI research company Runway has significantly escalated the race toward more sophisticated generative technologies, unveiling its first "General World Model," GWM-1, alongside a major upgrade to its flagship video generation model, Gen-4.5. This dual announcement signals a strategic push beyond mere content creation into the realm of realistic world simulation, positioning Runway at the forefront of a pivotal shift in artificial intelligence. The new developments aim to bridge the gap between generating plausible video and creating interactive, persistent virtual environments, a move with profound implications for robotics, gaming, and the future of synthetic media.

At the heart of the announcement is GWM-1, an interactive AI system designed to build an internal representation of an environment and simulate future events within it.[1] Unlike traditional video generation models, which create a sequence of images from a prompt, a world model aims to understand and replicate cause and effect, physics, and spatial consistency over time.[2][3] Runway's approach is to learn these dynamics by predicting video pixel by pixel and frame by frame, on the bet that with enough scale and data the model will develop a deep understanding of how the world works.[4] Built upon the architecture of Gen-4.5, GWM-1 generates interactive simulations at 24 frames per second in 720p resolution, which users can control in real time through inputs like camera movements or direct commands.[5] This marks Runway's formal entry into a field also pursued by tech giants such as Google DeepMind, where world models are framed as a critical step beyond large language models, which lack a fundamental understanding of the physical world.[6][1] To demonstrate its capabilities, Runway has launched GWM-1 with three specialized variants: GWM-Worlds, for creating infinite, explorable environments for gaming or VR; GWM-Avatars, for producing realistic, conversational characters with natural expressions and lip-syncing for education or customer service; and GWM-Robotics, a tool for generating synthetic data to train and test robots in diverse, simulated scenarios without the risks and costs of real-world hardware.[5][7] While the three are currently separate, the company's long-term vision is to merge them into a single, unified system.[1]
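To make the frame-by-frame idea concrete, here is a minimal Python sketch of an action-conditioned autoregressive rollout, the general pattern in which each new frame is predicted from the frames so far plus the latest user input. It is an illustration under stated assumptions, not Runway's method or API: `Frame`, `toy_predict_next`, and `rollout` are hypothetical names, and the "model" is a hand-written stand-in that shifts a tiny one-dimensional frame rather than a learned network. The only figure taken from the article is the 24 frames-per-second rate.

```python
from typing import Callable, List, Sequence

# Toy stand-in for an image: a short list of integers instead of a 720p frame.
Frame = List[int]

FPS = 24                      # frame rate reported for GWM-1
FRAME_BUDGET_MS = 1000 / FPS  # time available per frame for real-time playback


def toy_predict_next(history: Sequence[Frame], action: int) -> Frame:
    """Hypothetical predictor: rotate the latest frame by `action` positions.

    A real world model would be a learned network; this function only
    mimics the interface (past frames + action -> next frame).
    """
    last = history[-1]
    shift = action % len(last)
    if shift == 0:
        return list(last)
    return last[-shift:] + last[:-shift]


def rollout(
    first_frame: Frame,
    actions: Sequence[int],
    predict: Callable[[Sequence[Frame], int], Frame] = toy_predict_next,
) -> List[Frame]:
    """Generate frames one at a time, feeding each prediction back as input."""
    frames: List[Frame] = [list(first_frame)]
    for action in actions:
        frames.append(predict(frames, action))
    return frames


if __name__ == "__main__":
    # Move the "object" right twice, then left once.
    for frame in rollout([1, 0, 0, 0], [1, 1, -1]):
        print(frame)
    print(f"Per-frame budget at {FPS} fps: {FRAME_BUDGET_MS:.1f} ms")
```

The point of the sketch is the loop structure: because every output becomes part of the next input, errors can compound over long rollouts, and at 24 frames per second each prediction has to finish in roughly 42 milliseconds to keep the simulation interactive.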

Concurrent with the debut of its world model, Runway has rolled out substantial upgrades to its Gen-4.5 video model, dramatically enhancing its utility for creators and positioning it as a more comprehensive production tool. The most significant of these updates is the introduction of native audio generation and editing capabilities.[6] This allows users to generate video with synchronized dialogue, sound effects, and ambient sound directly within the platform, eliminating the friction of using separate tools for audio and video and improving elements like lip-sync and pacing.[8] Furthermore, Gen-4.5 now supports long-form, multi-shot storytelling, enabling the creation of cohesive sequences up to one minute long.[9] This feature maintains character consistency across different shots and complex camera angles, addressing a key challenge in generative video.[5] These additions bring Gen-4.5 closer to being an all-in-one video creation suite, bolstering its competitive stance against rivals.[6][2] The upgraded model is being rolled out first to enterprise customers before becoming available to all paid users.[10]

The dual release firmly places Runway in an intensifying arms race among leading AI labs to master not just generative media but the underlying principles of the physical world.[8] The industry is rapidly moving from experimental research to deployable, production-grade tools.[6] GWM-1 is framed as a more "general" system than some contenders: a foundational model for training AI agents across various domains.[6][8] This push into world modeling is echoed by major players like Google and OpenAI, which are also investing heavily in systems that can simulate reality.[4] The advancements in Gen-4.5, particularly native audio, also reflect a broader industry trend, with competitors such as Kling AI recently shipping models with synchronized audio-visual capabilities.[6][11] Progress has been rapid: Gen-4.5 recently topped independent leaderboards for text-to-video generation, ahead of formidable models from Google and OpenAI.[12] This competitive pressure is accelerating innovation, transforming AI video tools from creative novelties into viable instruments for industrial-level production.[9]

In conclusion, Runway's introduction of GWM-1 and the significant enhancements to Gen-4.5 represent more than an incremental update; they signal a fundamental ambition to simulate reality. By developing a General World Model, the company is tackling one of AI's most profound challenges: teaching machines to understand the world the way humans do, through observation and interaction. This technology holds the potential to revolutionize fields far beyond media and entertainment, offering powerful new tools for scientific discovery, robotics training, and the creation of immersive virtual worlds. The addition of native audio and long-form video generation to Gen-4.5 makes professional-quality AI video production more accessible and integrated than before. As Runway and its competitors continue to push the boundaries of what these systems can do, they are not just creating better tools for making content but laying the groundwork for a future where the line between the real and the simulated becomes increasingly blurred.

Sources

[3]

[4]

[7]

[9]

[11]

[12]