Robots Abandon Cloud: Warehouse Automation Pivots to Edge AI for Real-Time Speed

The latency trap: Warehouse robots cannot afford the physics of delay, forcing a swift pivot to Edge AI.

January 13, 2026

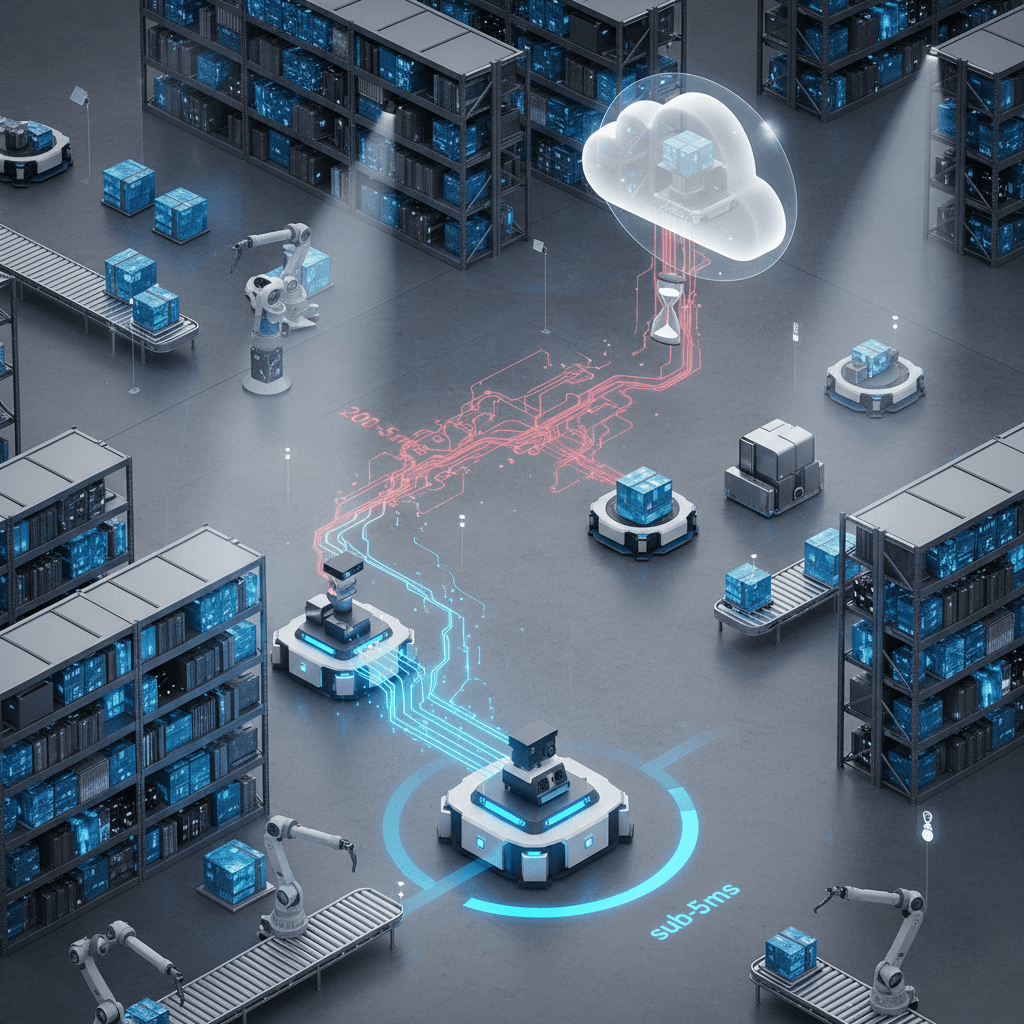

The prevailing dogma of enterprise IT, which holds that all computing and intelligence should migrate to the cloud, is running into an immovable object on the warehouse floor: the physics of real-time automation. A growing number of smart logistics operators are pivoting away from centralized cloud architectures. They recognize that the "latency trap" posed by distant servers is a major bottleneck to next-generation automation. In the emerging model, high-speed fulfillment relies on Edge AI, which places processing power directly on the autonomous systems themselves or on servers inside the facility. This enables millisecond-scale decision-making that a round trip over a wide-area network cannot reliably deliver.

The core of the problem lies in the demands of autonomous mobile robots (AMRs) and automated sorting systems. Consider a warehouse AMR moving at a brisk 2.5 meters per second through a dense facility, alongside human workers and heavy machinery. It needs near-instantaneous perception and response to avoid accidents and keep goods flowing. In a traditional cloud-based setup, the robot's on-board sensors, such as LiDAR or cameras, capture data that must be compressed, packetized, and transmitted over Wi-Fi and fiber. That data travels to a remote data center, sometimes hundreds of miles away, where a complex AI model analyzes it. The resulting instruction, such as "Stop" or "Reroute," must then travel all the way back to the robot. Signals in fiber travel at roughly two-thirds the speed of light. Even so, the combined delays of compression, wireless contention, routing hops, and server-side queuing add up to a round-trip latency that is too long for safety-critical, high-throughput applications. A 200-millisecond delay, a blink of an eye in human terms, is enough to effectively blind a robot. At 2.5 meters per second, the robot travels half a meter before the response arrives, turning a smooth operation into a potential collision and a major slowdown during peak fulfillment periods.[1]

For cloud-dependent robots, this latency translates directly into operational inefficiencies and safety risks. In tasks like real-time obstacle avoidance, dynamic path planning, and precision object handling, the gap between a 200-millisecond response and a two-millisecond response separates a viable system from a liability. Edge AI addresses this by moving the compute power and the trained AI inference models, the robot's "brains," onto the device itself or onto a local server within the facility. Processing data near its source cuts the time between data acquisition and action to under five milliseconds, according to industry benchmarks.[2] By removing the wide-area network from the control loop, edge computing lets warehouse robots make the split-second decisions that autonomous navigation and real-time interaction with their surroundings depend on.[3] Just as important, local inference makes response times short and predictable enough to meet the "hard real-time" requirements of demanding industrial and mobile robotics applications, where a missed deadline counts as a failure.[4]
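The arithmetic behind these figures is simple enough to sketch. The short Python example below is a back-of-the-envelope illustration, not a safety model. It computes how far the 2.5 m/s AMR described above travels before a command can arrive, using the article's 200 ms, 5 ms, and 2 ms figures. The function name `blind_distance_m` is hypothetical. A real stopping-distance budget would also have to add braking distance and sensor frame time.

```python
# Back-of-the-envelope "blind distance": how far a robot moves between
# sensing an obstacle and receiving a command. Speed and latency values come
# from the article; braking distance and sensor frame time are ignored.

SPEED_M_PER_S = 2.5

LATENCIES_MS = {
    "cloud round trip": 200.0,
    "edge upper bound": 5.0,
    "edge example": 2.0,
}


def blind_distance_m(speed_m_per_s: float, latency_ms: float) -> float:
    """Return meters traveled during latency_ms at a constant speed."""
    return speed_m_per_s * (latency_ms / 1000.0)


if __name__ == "__main__":
    for label, latency_ms in LATENCIES_MS.items():
        dist_mm = blind_distance_m(SPEED_M_PER_S, latency_ms) * 1000.0
        print(f"{label:>16}: {latency_ms:6.1f} ms -> {dist_mm:6.1f} mm")
```

At 200 ms the robot covers 500 mm before it can react. At the edge latencies cited, the distance shrinks to between 5 and 12.5 mm.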

The transition to edge AI extends far beyond simple speed; it fundamentally changes the nature of data handling, security, and scalability in logistics. Cloud-only architectures often struggle with the sheer volume of high-definition video and sensor data generated by a large fleet of AMRs and IoT devices, creating a "bandwidth bottleneck" that becomes prohibitively costly and slows down operations exactly when peak performance is needed, such as during holiday seasons.[1] Edge systems, however, process, filter, and analyze the data locally, sending only critical insights or aggregated information upstream to the cloud for long-term storage or high-level business intelligence, significantly reducing network strain and cloud storage costs.[5][6] Moreover, local processing enhances data sovereignty and compliance, as sensitive warehouse operational data remains within the facility’s network, mitigating security and privacy concerns associated with transmitting raw information over the open internet.[7][6]
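As a rough illustration of edge-side filtering, the sketch below collapses a window of raw readings from one robot into a compact summary. It keeps individual records only for near-miss events. The `Reading` fields, the 0.5 m alert threshold, and the summary layout are all hypothetical assumptions chosen for illustration, not a format any particular vendor uses.

```python
import json
import statistics
from dataclasses import asdict, dataclass


@dataclass
class Reading:
    """A hypothetical processed sample from one AMR's obstacle sensor."""
    robot_id: str
    timestamp_s: float
    nearest_obstacle_m: float


def summarize_on_edge(readings: list[Reading], alert_below_m: float = 0.5) -> dict:
    """Collapse a window of raw readings into a compact upstream payload.

    Near-miss events (obstacle closer than alert_below_m) are kept verbatim;
    all other samples contribute only to aggregate statistics.
    """
    if not readings:
        raise ValueError("need at least one reading")
    distances = [r.nearest_obstacle_m for r in readings]
    near_misses = [asdict(r) for r in readings if r.nearest_obstacle_m < alert_below_m]
    return {
        "robot_id": readings[0].robot_id,
        "window_start_s": readings[0].timestamp_s,
        "window_end_s": readings[-1].timestamp_s,
        "samples": len(readings),
        "min_obstacle_m": min(distances),
        "mean_obstacle_m": round(statistics.fmean(distances), 3),
        "near_misses": near_misses,
    }


if __name__ == "__main__":
    # Simulated 10-second window at 100 Hz with one injected near miss.
    raw = [Reading("amr-07", t / 100, 2.0 + (t % 50) * 0.05) for t in range(1000)]
    raw[420] = Reading("amr-07", 4.2, 0.3)
    summary = summarize_on_edge(raw)
    raw_bytes = len(json.dumps([asdict(r) for r in raw]))
    summary_bytes = len(json.dumps(summary))
    print(f"raw: {raw_bytes} bytes -> summary: {summary_bytes} bytes")
    print(f"near misses kept: {len(summary['near_misses'])}")
```

Only the summary would be sent upstream. The size reduction depends entirely on what is filtered: raw camera or LiDAR frames would shrink far more dramatically than the single scalar used here.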

This decentralization of intelligence is also changing how robotic fleets learn and collaborate. Techniques like federated learning are being deployed at the edge. Individual robots learn from their local environment, for example by discovering that a specific type of shrink-wrap confuses their sensors. They then securely share only their refined model weights, or "enhancements," with a master model in the cloud.[1][4] Once the cloud aggregates these updates and redistributes the improved model, every robot in the fleet benefits from the localized learning without the massive bandwidth cost of uploading all raw data. The cloud remains essential for the initial training of the largest, most complex AI models and for long-term predictive analytics. The inference that drives immediate, mission-critical execution, however, now belongs to the edge. This hybrid model, with the cloud providing "deep intelligence" and global data storage while the edge handles real-time processing, is the emerging architecture for industrial automation.[4][8]
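Federated averaging (FedAvg) is one widely used way to combine such updates. The minimal sketch below assumes each robot's model is just a flat list of floats and that each robot reports how many local samples it trained on. It takes one hypothetical gradient step per robot, then averages the resulting weights in proportion to those sample counts. It omits the encryption and secure-aggregation protocols a production system would need for the "securely share" step, and the function names are illustrative.

```python
from typing import Sequence

Weights = list[float]


def local_update(global_w: Weights, gradient: Weights, lr: float = 0.1) -> Weights:
    """One hypothetical on-robot training step: w <- w - lr * grad.

    Only the resulting weights leave the robot; the raw sensor data that
    produced the gradient stays local.
    """
    return [w - lr * g for w, g in zip(global_w, gradient)]


def federated_average(client_weights: Sequence[Weights], sample_counts: Sequence[int]) -> Weights:
    """FedAvg-style aggregation: mean of client models weighted by sample count."""
    if not client_weights or len(client_weights) != len(sample_counts):
        raise ValueError("need at least one client and one sample count per client")
    total = sum(sample_counts)
    dim = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, sample_counts)) / total
        for i in range(dim)
    ]


if __name__ == "__main__":
    global_model = [0.0, 0.0]
    # Robot A trained on 300 local samples, robot B on 100; gradients are made up.
    robot_a = local_update(global_model, [1.0, 0.0])
    robot_b = local_update(global_model, [0.0, 1.0])
    global_model = federated_average([robot_a, robot_b], [300, 100])
    print(global_model)
```

The aggregated model lands at approximately [-0.075, -0.025]. It sits closer to robot A's update because A trained on three times as much data. The new global model would then be pushed back to the whole fleet.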

The industry pivot is creating strong demand for specialized hardware and software that can run sophisticated AI models on-device within tight power budgets. This is translating into a significant growth opportunity for the Edge AI chipset market, which some analysts project will surpass the cloud AI chipset market in revenue.[9] The shift is also redefining the role of network connectivity. Technologies like 5G are often touted as a panacea for low-latency cloud connectivity. In the warehouse, however, their real value is as an enabler for the edge: a robust, high-bandwidth local network that supports mesh communication between edge devices and gateways, rather than constant long-distance communication with a distant cloud server.[1] Smart warehouses are trading the centralizing appeal of the cloud for the practical necessity of the edge. That shift signals a maturing AI industry, one in which real-world physical constraints and business demands are dictating a more distributed, localized, and resilient future for artificial intelligence in logistics.