Qwen AI Solves Identity Drift, Revolutionizing Consistent Character and Perspective Editing

Qwen AI solves "identity drift," delivering production-ready fidelity for characters, group photos, and complex perspective shifts.

December 24, 2025

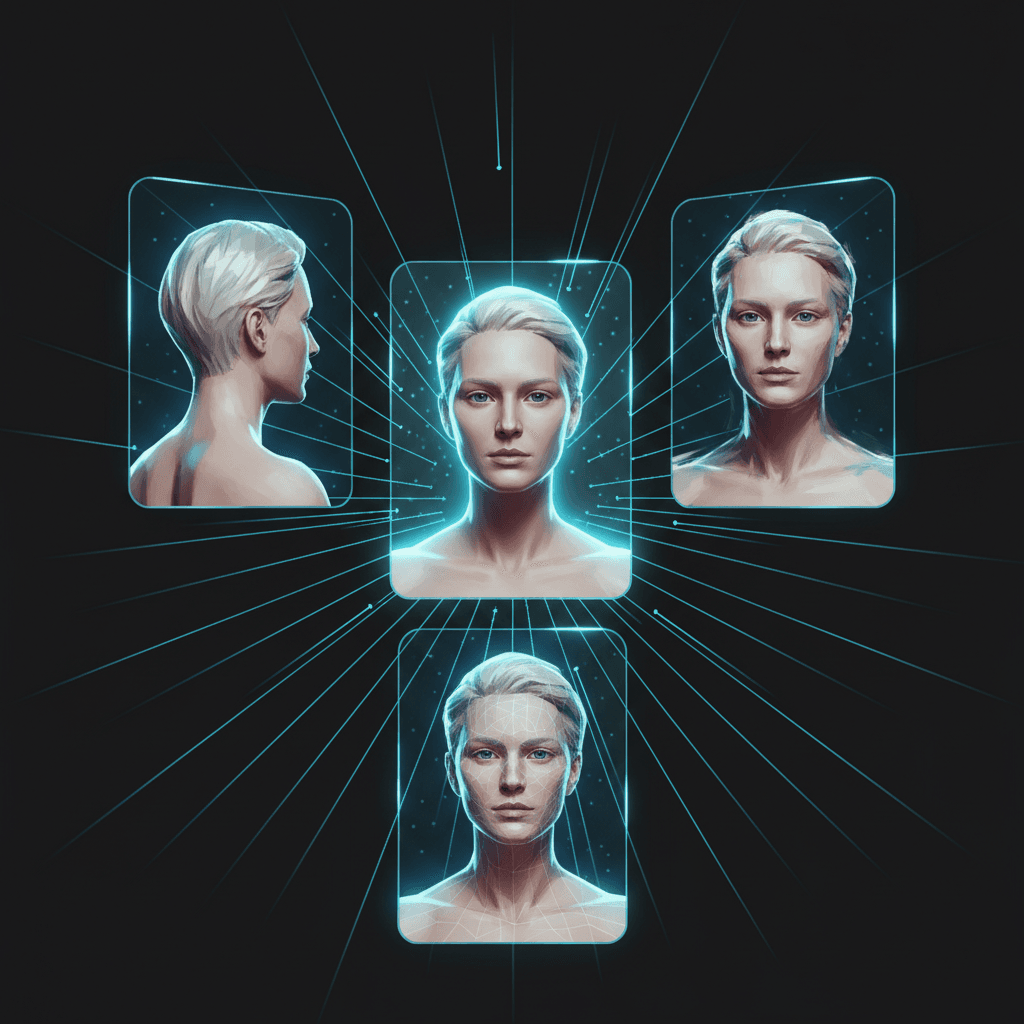

The field of generative artificial intelligence for image editing has reached a new inflection point with the latest release from Alibaba Cloud's Qwen team. The release substantially improves the consistency and fidelity of character identity during complex visual manipulation. This update to the Qwen image editing model, released as Qwen-Image-Edit-2511, directly tackles one of the most persistent challenges in AI-driven visual modification: preventing the subject's face or key features from "drifting" or changing appearance when the background, style, or perspective is altered. The new capabilities mark a critical advancement for professional creative workflows. They move AI editing from an impressive parlor trick to a production-ready tool that can maintain visual continuity across multiple scenes or shots.

The core of the enhancement lies in better preservation of facial identity and visual characteristics, supporting intricate edits on portraits and even group photos. Previous generations of image editing models often struggled with what is known as "identity leakage": while fulfilling an editing prompt, the AI would subtly or drastically change the person's face. The Qwen model update mitigates this identity drift. It allows for highly imaginative edits, such as changing a person's pose, converting them into a different portrait style, or altering their environment, while ensuring the subject remains recognizably the same individual[1][2]. This improved character consistency is a key feature of the newest iterations. It makes the model valuable for tasks ranging from old photo restoration to meme generation, where maintaining a specific likeness is paramount[3][4].

A significant leap in the new version, Qwen-Image-Edit-2511, is its robust handling of multi-person and group photo consistency[1][2]. Single-subject editing had already improved in earlier releases. However, maintaining identity for multiple individuals within a single frame, or coherently fusing separate images of people into a unified group shot, was a major technical hurdle that the model now clears[1][5]. The model is built on the 20-billion-parameter Qwen-Image foundation model and combines visual and semantic control. A Variational Autoencoder (VAE) captures visual appearance, while a multimodal large language model component handles semantic understanding[6][7]. This integration allows the AI to grasp the high-level concept of a person's identity and enforce that continuity constraint throughout an edit, even when performing complex semantic changes[6].

Beyond character fidelity, the update places a major focus on photorealistic control over cinematic elements, specifically lighting and camera angles[8][9]. The model has been described as a "camera-aware AI editor." It can reconstruct the underlying three-dimensional geometry of a scene from a single two-dimensional input image[9][10]. This geometric reasoning allows users to prompt for complex viewpoint changes, such as moving the camera to a top-down view, switching to a wide-angle shot, or rotating the perspective by up to 180 degrees. Throughout these changes, the model maintains subject identity and realistically re-renders the scene's lighting and texture[9][11][10]. This is a notable advance in generative editing, because earlier models often produced "warped distortion" or inconsistent results when attempting dramatic perspective shifts[9]. The update also integrates community-developed Low-Rank Adaptations (LoRAs) directly into the base model, such as those for 'Lighting Enhancement' and 'Multiple Angles.' This further democratizes these advanced controls, enabling realistic lighting control and new viewpoint generation out of the box[1][12][10].

The implications of this enhanced model extend across several industries. In filmmaking and animation, the model can generate consistent reverse-angle shots or quick pre-visualization (pre-viz) frames of a character from various perspectives without losing the character's likeness. This substantially streamlines the creative and production pipeline[9][13]. Storyboard artists and game developers gain a powerful tool for creating consistent multi-view character turnarounds[9][10]. In e-commerce and industrial design, the model can transform a single product image into a studio-ready, multi-angle render set with accurate material replacement and geometric design support. This reduces the need for extensive physical photography or 3D modeling for simple variations[1][2][12]. Furthermore, the model's improvements to text editing consistency reinforce its utility for creating and editing complex digital posters and marketing materials. These improvements include support for modifying the fonts, colors, and materials of text in both English and Chinese[3][6][4][7].

This move by Qwen and Alibaba Cloud intensifies competition with other major AI players such as Google, Meta, and OpenAI, all of which are racing to perfect generative editing capabilities. Models in the Adobe Firefly suite offer strong professional features. Even so, the Qwen update's explicit focus on solving character identity and geometric consistency at a foundational level sets a new, high standard for what users can expect from image editing AI. It signals a maturation of the technology, in which the focus shifts from simply generating a convincing image to producing a high-fidelity, controllable, production-ready asset that maintains continuity across an entire project. The Qwen models are open source and openly accessible through platforms like Hugging Face and ModelScope. Their advancements are therefore likely to rapidly influence the development and adoption of professional-grade AI tools globally[5][7].

Sources

[1]

[2]

[3]

[4]

[7]

[10]

[11]

[12]

[13]