OpenAI boosts profitability of its paid AI services, pushing compute margin to 70%.

OpenAI’s compute margin soared to 70%, validating its core business model and pressuring AI rivals.

December 22, 2025

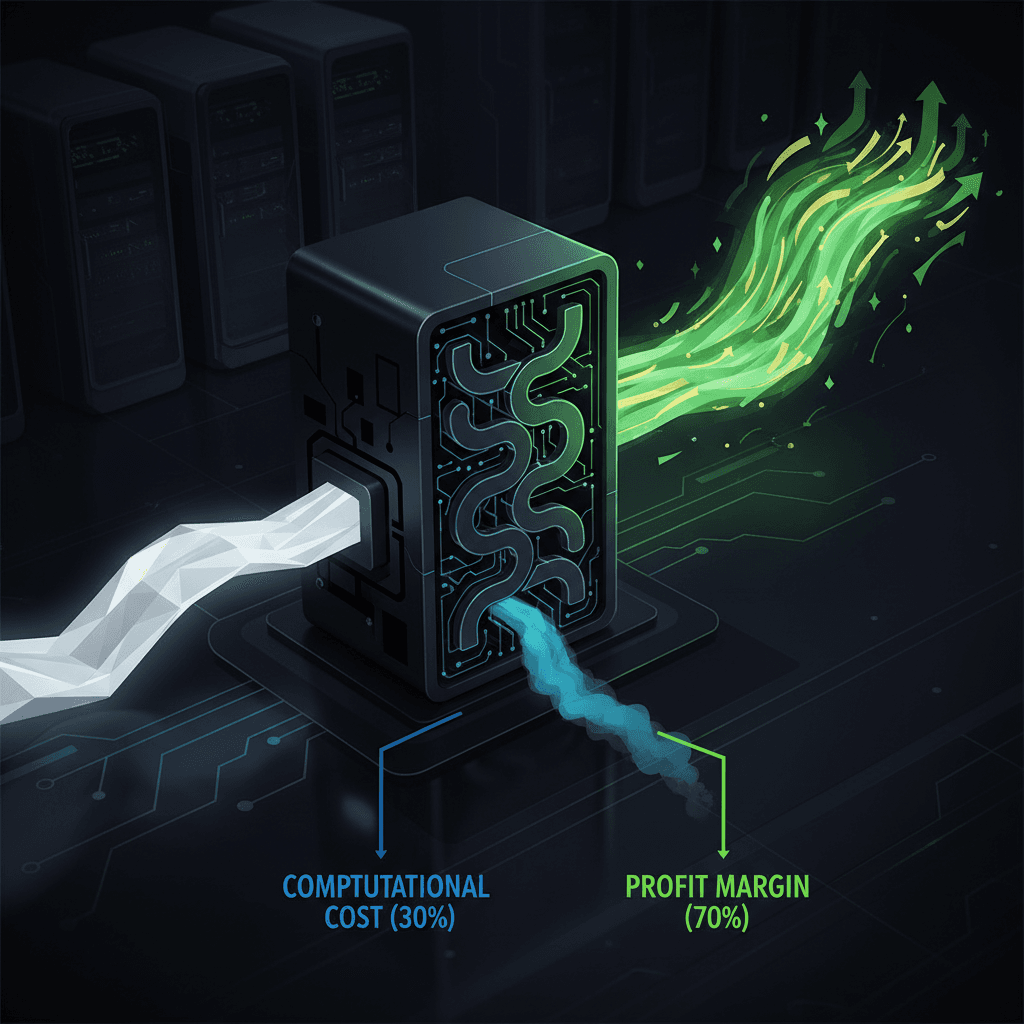

A major transformation is reportedly underway at OpenAI, where the finances of its generative artificial intelligence services are shifting toward sustainability. Internal company metrics suggest the company has substantially improved the profitability of its core AI services, a sign that the large language model industry as a whole may be reaching a critical point of maturity. The improvement shows up in the company’s "compute margin," an in-house metric that tracks the share of revenue remaining after deducting the server and processing costs of running AI models for paying users.

According to reports citing internal financial data, OpenAI’s compute margin has risen sharply over a relatively short period. In January 2024, the margin stood at approximately 35%, reflecting the heavy operational costs of serving a bleeding-edge technology. By the end of 2024 it had climbed to 52%, and by October 2025 it reportedly reached 70%.[1][2][3][4] This roughly twofold increase in the margin for its paid products in less than two years underscores a successful effort to turn groundbreaking technology into a viable, high-efficiency business.[5][1][6] In practical terms, for every dollar of revenue from a paying customer, a much smaller portion is now consumed by the raw computational cost of serving requests, leaving a significantly larger share for reinvestment and profit.[6][4]
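To make the metric concrete, here is a minimal sketch of the arithmetic, assuming compute margin is simply (revenue - compute cost) / revenue, as the definition above describes. OpenAI’s actual internal formula is not public, and the function names `compute_margin` and `compute_cost_per_dollar` are hypothetical; the only real inputs are the three reported margin figures.

```python
# Hypothetical illustration: assumes compute margin = (revenue - compute cost) / revenue.
# OpenAI's exact internal definition is not public.

# Reported compute margins on paid products, as fractions of revenue.
REPORTED_MARGINS = {
    "Jan 2024": 0.35,
    "End 2024": 0.52,
    "Oct 2025": 0.70,
}


def compute_margin(revenue: float, compute_cost: float) -> float:
    """Return the share of revenue left after compute costs, as a fraction."""
    if revenue <= 0:
        raise ValueError("revenue must be positive")
    return (revenue - compute_cost) / revenue


def compute_cost_per_dollar(margin: float) -> float:
    """Invert the margin: dollars of compute spent per $1 of revenue."""
    return 1.0 - margin


if __name__ == "__main__":
    for period, margin in REPORTED_MARGINS.items():
        cost = compute_cost_per_dollar(margin)
        print(f"{period}: margin {margin:.0%}, compute cost about ${cost:.2f} per $1 of revenue")
```

Under this assumed definition, the reported rise from 35% to 70% means compute consumed about 65 cents of each revenue dollar in January 2024 but only about 30 cents by October 2025, so the cost share fell by more than half while the margin doubled.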

The efficiency gains are attributed not to a single factor but to a combination of technical optimization and strategic business moves. On the technical side, a key driver is optimization of the AI models themselves, which lowers the cost per token of output.[1][7] This includes deploying more efficient models that use techniques such as quantization, sparse activation, and dynamic batching, which together reduce the computing power required for inference, the process by which a model generates a response.[7] In addition, the company’s massive infrastructure strategy, including the "Stargate" data center project and the reported development of custom AI chips, is a long-term bet on reducing dependence on expensive, rented commercial cloud capacity.[7] By cutting rental costs and drawing on greater economies of scale through its primary partner, the firm is squeezing more output from its server spending.[1]

A complementary business strategy has focused on monetizing the user base more effectively, specifically by increasing the share of high-value enterprise API customers and of users on pricier subscription tiers such as ChatGPT Enterprise.[5][1][7]

While the margin improvement is a strong indicator of operational health, it is only one piece of OpenAI’s complex financial picture. The metric covers only paid users, who, though growing rapidly, still represent a fraction of the hundreds of millions of people who use the free version of its services.[2][8][6][9] Despite the soaring compute margin, the company has yet to post an overall profit, as it continues to pour billions into unprecedented infrastructure projects and foundational AI research.[5][2][10] Chief Executive Officer Sam Altman has openly acknowledged that the company’s training costs continue to grow rapidly, and the firm’s long-term financial projections include multibillion-dollar losses for several more years, with profitability perhaps arriving near 2030.[4][11] Still, the improved margin offers crucial validation for investors in a company recently valued at more than half a trillion dollars and reportedly seeking a new funding round of up to $100 billion.[5][1][8] It is a bullish signal that the core product’s unit economics can support the staggering capital expenditures required for the firm’s audacious vision.[4]

The significance of OpenAI’s compute margin surge extends well beyond its own balance sheet and into the broader competitive AI landscape. The company’s 70% compute margin on paid accounts reportedly exceeds that of its key rival, Anthropic, whose margin is projected to reach around 53% by the end of the same period.[1][2][8][4] This lead in operational efficiency puts pressure on competitors, from well-funded startups like Anthropic to tech giants like Google and Meta, to rapidly optimize their own model inference and cloud infrastructure.[7] Anthropic, for its part, is also on a steep efficiency trajectory: its compute margin has reportedly improved sharply from negative territory last year, illustrating an industry-wide scramble to optimize unit economics.[4] The focus is shifting from simply achieving state-of-the-art model performance to delivering that performance at lower operational cost.[7] For the industry, the development suggests that the era of "money-burning" AI may be giving way to a more capital-efficient phase, in which proprietary models must be not only powerful but also fiscally disciplined to survive in a fiercely competitive market. The ability to deploy models at high margins will ultimately determine which companies can sustain the massive, long-term research and development spending needed to stay ahead in the AI arms race.[5][12]

Sources

[3]

[4]

[6]

[7]

[8]

[9]

[10]

[11]

[12]