OpenAI Unleashes Stargate: Aims for Gigawatt of AI Power Weekly

Altman's vision of adding a gigawatt of AI infrastructure every week accompanies a $500B project that will test energy grids and the environment.

September 24, 2025

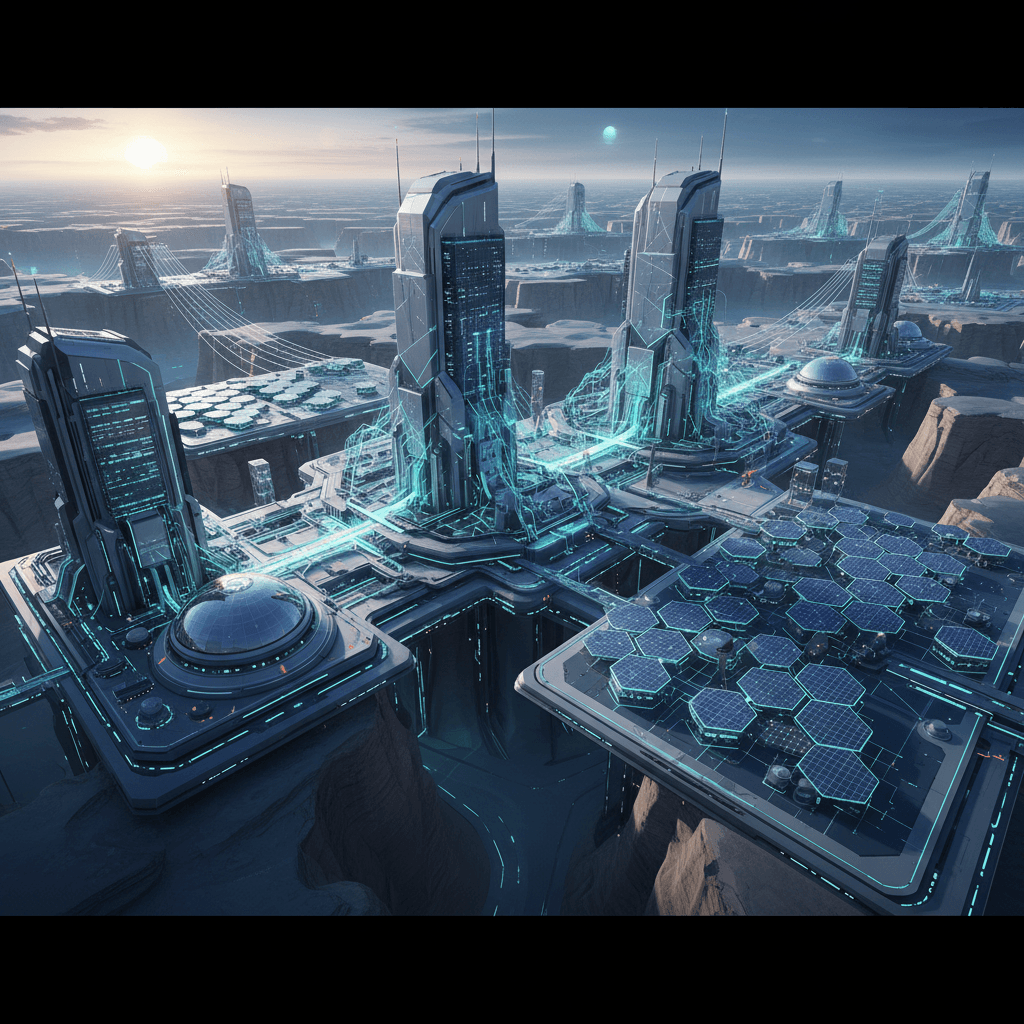

In a declaration of unprecedented ambition for the artificial intelligence sector, OpenAI CEO Sam Altman has articulated a vision for the company to eventually create a "factory that can produce a gigawatt of new AI infrastructure every week."[1][2][3] The statement coincided with a major expansion of the company's "Stargate" project, with five new AI data center locations announced across the United States.[4] The scale of the goal signals a sharp acceleration in the race to build the computational power needed for the next generation of artificial intelligence, with profound implications for the energy sector, the environment, and the architecture of the digital world. The initiative underscores the colossal energy and infrastructure requirements that leading AI labs now see as essential to pushing the frontiers of the field and achieving what Altman calls "abundant intelligence."[1][5][6]

The foundation of this future is the Stargate project, a multibillion-dollar initiative to construct a series of massive AI super-hubs.[3] In partnership with Oracle and SoftBank, OpenAI is moving forward with a plan under which more than $400 billion of a targeted $500 billion investment has already been committed.[4] The recent announcement detailed plans for five new data center sites in Texas, New Mexico, and Ohio, in addition to the existing flagship site in Abilene, Texas.[7][4] The new locations are projected to bring the total planned capacity of the Stargate project to nearly seven gigawatts.[4] The work is divided among the partners: Oracle and OpenAI are collaborating on three of the new sites, while SoftBank is involved in developing the other two.[4] The undertaking is not just about building data centers; it is about creating an entire ecosystem of computational power to fuel the development and deployment of increasingly sophisticated AI models.

Producing a gigawatt of AI infrastructure every week would mark a fundamental shift in the scale of technological development. A single gigawatt is an immense amount of power, equivalent to the output of a large nuclear reactor and capable of powering hundreds of thousands of homes.[3] The total installed data center capacity in the entire United States in 2024 was 53.7 gigawatts.[8] At one gigawatt per week, a single year of output would come to roughly 52 gigawatts, nearly matching that entire installed base. The financial implications are equally staggering. While a direct cost for a gigawatt of AI infrastructure is difficult to pinpoint, estimates for large-scale AI data centers run into the billions of dollars for construction alone, with ongoing operational costs, primarily for power, adding millions more annually. This expenditure is driven by the need for specialized, high-performance computing hardware, such as NVIDIA's power-hungry GPUs, which are the lifeblood of modern AI. The next generation of these chips, such as the Blackwell B200, is expected to consume even more power, further escalating the energy demands of these facilities.[9][10]
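To put the weekly target in perspective, the comparison with the installed base can be worked through as simple arithmetic. The sketch below uses only the figures quoted in this article; the 52-week year, the rounding of "nearly seven gigawatts" to 7.0, and the function names are illustrative assumptions rather than anything OpenAI has published.

```python
# Back-of-the-envelope comparison using figures quoted in the article.
# Assumptions (not from OpenAI): a 52-week year and a steady build rate.

WEEKS_PER_YEAR = 52
US_INSTALLED_GW_2024 = 53.7  # total US data center capacity in 2024, per the article
STARGATE_PLANNED_GW = 7.0    # "nearly seven gigawatts", rounded up for illustration


def annual_buildout_gw(gw_per_week: float, weeks: int = WEEKS_PER_YEAR) -> float:
    """Capacity added in one year at a constant weekly build rate."""
    return gw_per_week * weeks


def weeks_to_match(target_gw: float, gw_per_week: float) -> float:
    """Weeks needed to build target_gw at a constant weekly build rate."""
    return target_gw / gw_per_week


yearly_gw = annual_buildout_gw(1.0)
share_of_installed = yearly_gw / US_INSTALLED_GW_2024

print(f"One year at 1 GW/week: {yearly_gw:.0f} GW")
print(f"Share of 2024 US installed capacity: {share_of_installed:.0%}")
print(f"Weeks to match the 2024 US base: {weeks_to_match(US_INSTALLED_GW_2024, 1.0):.1f}")
print(f"Weeks to match planned Stargate capacity: {weeks_to_match(STARGATE_PLANNED_GW, 1.0):.1f}")
```

Under these assumptions, a year of output at the target rate would add capacity on the order of the whole 2024 US installed base, and the full planned Stargate capacity would correspond to only a few weeks of production.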

This audacious vision, however, faces significant challenges and raises critical questions about its feasibility and environmental impact. The primary hurdle is securing the colossal amounts of energy required to power the expansion. The nation's power grids are already strained, and adding multiple gigawatts of constant demand presents a formidable challenge.[11][12] Beyond the availability of power, the environmental toll is a growing concern. Data centers are already significant consumers of electricity and water, and the rapid growth of AI is projected to dramatically increase their footprint.[13][14][15][16][17] Training a single large AI model can produce carbon emissions equivalent to those of five cars over their entire lifetimes.[15] In response to these concerns, there is a growing focus on powering new data centers with sustainable energy sources. The Stargate project is exploring a mix of energy solutions, including solar power with battery storage and the potential use of small modular nuclear reactors (SMRs) to provide a stable baseload of clean power.[18] The Abilene, Texas, facility, for instance, will supplement its grid power, which comes from a mix of natural gas, wind, and solar, with its own gas-fired power plant, a move that has drawn scrutiny from environmental experts.[7]

In conclusion, Sam Altman's vision of producing a gigawatt of AI infrastructure weekly represents a pivotal moment in the development of artificial intelligence. It is a clear indication that the future of AI is inextricably linked to the ability to build and power computational infrastructure on a scale never before attempted. The Stargate project, with its massive investment and growing network of data centers, is the first concrete step toward this future. However, the path forward presents immense challenges, from the logistical complexities of such rapid construction to the critical need for sustainable energy solutions. The success of this endeavor will not only determine the pace of AI innovation but also shape energy consumption and environmental sustainability for decades to come. The quest for "abundant intelligence" will require an equal abundance of ingenuity in solving the profound infrastructural and ecological challenges it presents.[1][5][6]

Sources

[4]

[7]

[10]

[11]

[12]

[13]

[14]

[16]

[17]

[18]