Open-Source AI's Hidden Costs Emerge: High Token Use Negates Savings

"Overthinking" open-weight AI models surprisingly incur higher computational costs, challenging their supposed cost-effectiveness for enterprises.

August 23, 2025

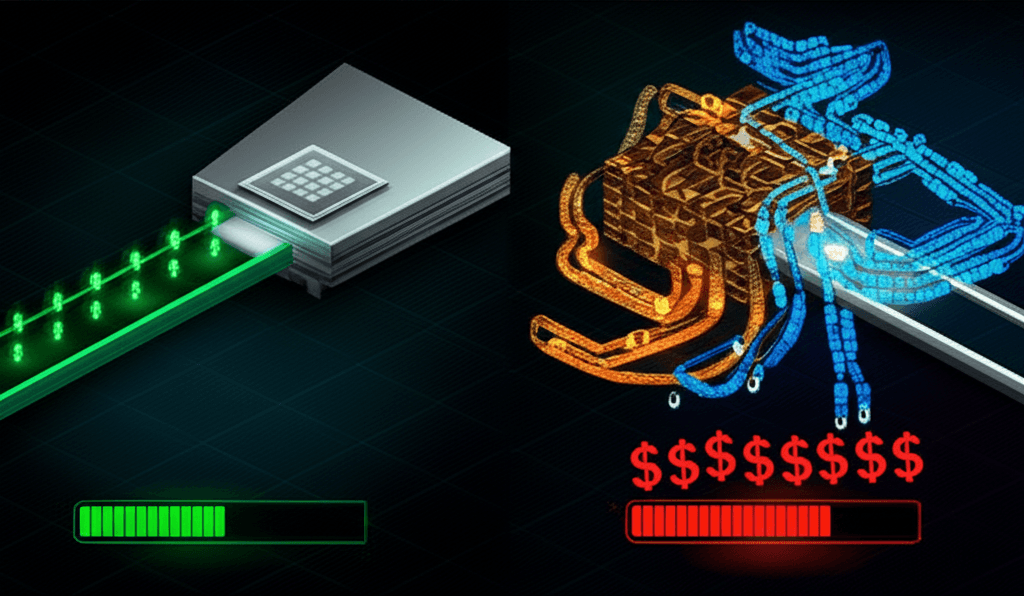

A critical disparity is emerging in the operational efficiency of artificial intelligence models, challenging a core assumption about the cost-effectiveness of open-source solutions. New findings indicate that open-weight reasoning models frequently generate significantly more tokens than their closed-source counterparts to perform similar tasks, and every extra token means extra computation. This inefficiency can lead to higher-than-expected operational costs, potentially negating the financial benefits often associated with open-source AI and prompting a re-evaluation of how enterprises select and deploy these models.

A recent study spearheaded by AI firm Nous Research has brought this issue to the forefront, revealing that open-weight models can use between 1.5 and 4 times more tokens than closed models for reasoning tasks.[1][2][3] For simpler, knowledge-based questions, this gap can widen dramatically, with some open systems expending up to ten times the number of tokens.[1][2] The research, which analyzed 19 different reasoning models across a range of tasks including mathematical problems, logic puzzles, and knowledge questions, focused on "token efficiency" as a key metric.[2][3] Tokens are the basic units of data, like words or parts of words, that language models process.[4] The higher the token consumption per query, the greater the computational demand, which directly translates to increased costs and energy use.[5][4] This phenomenon of excessive token generation, sometimes referred to as "overthinking," is particularly pronounced in large reasoning models designed to emulate a step-by-step thought process, even for straightforward queries.[6] For instance, researchers observed some models using hundreds of tokens to answer a simple question like identifying the capital of Australia.[2][7]
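Token efficiency, as described here, comes down to comparing how many tokens two models spend on the same prompts. The minimal Python sketch below computes that ratio per task category. The token counts are hypothetical placeholders chosen only to show the calculation; they are not figures from the Nous Research study, and in practice they would come from the usage counts a model API or inference server reports.

```python
from statistics import mean

# HYPOTHETICAL per-prompt token counts, grouped by task category.
# Illustrative only: these are not measurements from the study.
open_model = {"math": [1200, 1500, 900], "knowledge": [400, 350, 450]}
closed_model = {"math": [600, 700, 500], "knowledge": [40, 50, 30]}


def token_ratios(open_counts: dict[str, list[int]],
                 closed_counts: dict[str, list[int]]) -> dict[str, float]:
    """Return, per task category, the open model's mean token count divided
    by the closed model's mean token count on the same prompts."""
    ratios = {}
    for task, counts in open_counts.items():
        baseline = closed_counts[task]
        if not counts or not baseline:
            raise ValueError(f"no measurements for task {task!r}")
        ratios[task] = mean(counts) / mean(baseline)
    return ratios


print(token_ratios(open_model, closed_model))
# → {'math': 2.0, 'knowledge': 10.0}
```

A ratio of 2.0 means the open model spent twice as many tokens on that category. Comparing means of raw counts is the simplest choice; a median or a per-prompt ratio would be less sensitive to a single runaway response.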

The implications of this token inefficiency are significant for the AI industry, particularly for enterprises budgeting for AI integration. While open-source models often appear more affordable due to lower or non-existent licensing fees, the hidden costs of higher computational overhead can quickly accumulate.[3][8] The study suggests that companies must look beyond per-token pricing and accuracy benchmarks when selecting an AI model.[2] The total cost of ownership can be substantially affected by how efficiently a model uses tokens, with inefficient models potentially becoming more expensive per query than their closed-source competitors.[3] This reality forces a more nuanced evaluation, where computational efficiency is weighed alongside accuracy and initial costs.[2] Furthermore, the trend among closed-source providers appears to be toward iterative optimization to reduce token usage and inference costs with each update.[3] In contrast, some open models have been moving in the opposite direction, generating longer reasoning trails in pursuit of more advanced capabilities, thereby increasing their token consumption.[2][3]
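The per-query arithmetic behind that total-cost argument is simple: cost equals tokens generated times the price per token. The sketch below uses hypothetical prices and token counts (assumptions, not quoted rates) and assumes both models are billed per token, for example through a hosted API; a self-hosted model would need its own compute cost in place of the per-token price.

```python
def cost_per_query(tokens_per_query: int, usd_per_million_tokens: float) -> float:
    """Dollar cost of one query billed per generated token."""
    return tokens_per_query * usd_per_million_tokens / 1_000_000


def break_even_multiplier(cheap_price: float, pricier_price: float) -> float:
    """How many times more tokens the cheaper-per-token model can use before
    its per-query cost matches the pricier model."""
    return pricier_price / cheap_price


# HYPOTHETICAL figures for illustration only.
open_cost = cost_per_query(tokens_per_query=4_000, usd_per_million_tokens=0.50)
closed_cost = cost_per_query(tokens_per_query=1_000, usd_per_million_tokens=1.50)

print(f"open: ${open_cost:.4f}  closed: ${closed_cost:.4f}")
print(f"break-even token multiplier: {break_even_multiplier(0.50, 1.50):.1f}x")
```

In this made-up scenario the open model is three times cheaper per token but uses four times as many tokens, so each query costs about a third more. Any token multiplier above the break-even value of 3.0 erases the per-token price advantage.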

The disparity in token efficiency stems from several factors related to model training and optimization. Closed-source models, developed by companies like OpenAI, are often meticulously optimized to reduce inference costs, a necessity for operating at massive scale.[3] These providers may employ techniques to compress internal reasoning steps into shorter summaries or use smaller, specialized models to generate compact reasoning chains.[2] OpenAI's models, including the closed o4-mini and the open-weight gpt-oss, were highlighted in the Nous Research study as being particularly token-efficient, especially in mathematical tasks.[2][3] On the other hand, many open-weight models, while benefiting from community-driven innovation, may not undergo the same degree of rigorous, cost-driven optimization.[8][3] The training process for many large reasoning models encourages lengthy chains of thought to solve complex problems, a feature that becomes a liability when applied to simpler tasks where such detailed deliberation is unnecessary.[6] This leads to redundant reasoning steps and bloated token counts, driving up operational expenses.[6][5]

In response to these findings, there is a growing call within the AI research community to prioritize thinking efficiency as a core metric for model development.[6] Researchers advocate for new benchmarks that measure not just the accuracy of a model's answer, but also the number of tokens consumed to reach that answer, normalized by the complexity of the task.[6] Achieving a balance between performance and cost is crucial for the scalable, real-world deployment of AI.[9][6] Potential solutions are being explored, such as developing hybrid approaches that combine efficient chain-of-thought processes with pruning techniques to eliminate token waste.[6] Frameworks like the Token-Budget-Aware LLM Reasoning (TALE) are also being proposed to dynamically estimate and apply token budgets based on a problem's complexity, which has been shown to significantly reduce token costs with only a minor impact on accuracy.[5][10] Ultimately, a greater focus on creating shorter, denser reasoning paths could help manage costs and maintain high performance, ensuring the long-term sustainability and accessibility of both open and closed AI models.[2]
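The budget idea behind approaches like TALE can be sketched as two steps: estimate a token budget from a problem's complexity, then state that budget in the prompt. In the minimal Python sketch below, the complexity score, the linear `estimate_budget` mapping, and its bounds are all hypothetical stand-ins rather than TALE's actual estimator, and the prompt wording is an assumption, not a quoted template.

```python
def estimate_budget(complexity: float, min_tokens: int = 50, max_tokens: int = 2000) -> int:
    """HYPOTHETICAL heuristic: map a complexity score in [0, 1] linearly onto
    a token budget between min_tokens and max_tokens."""
    if not 0.0 <= complexity <= 1.0:
        raise ValueError("complexity must be between 0 and 1")
    return round(min_tokens + complexity * (max_tokens - min_tokens))


def budget_prompt(question: str, budget: int) -> str:
    """Append an explicit token budget to the question (wording is an assumption)."""
    return f"{question}\nLet's think step by step and use less than {budget} tokens."


# A trivial factual question gets the minimum budget; a hard problem gets more.
print(budget_prompt("What is the capital of Australia?", estimate_budget(0.0)))
print(estimate_budget(0.9))
```

A linear mapping is the simplest possible choice; the point is only that easy questions, like the capital-of-Australia example above, receive a far smaller allowance than multi-step problems.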