Nvidia Spends $20 Billion, Acquires Groq Talent to Bypass Antitrust

Nvidia's $20 billion "license" is a strategic acquisition designed to bypass regulatory review and absorb key inference technology.

December 28, 2025

Semiconductor giant Nvidia has entered into a complex transaction with AI chip startup Groq. Publicly characterized as a $20 billion "non-exclusive licensing agreement," the deal carries the material hallmarks of a complete acquisition and marks a pivotal moment in the battle for AI hardware dominance. While both companies stress the non-exclusive nature of the license for Groq's core technology, the movement of most of the startup's leadership and engineering staff to Nvidia points to a strategic takeover. Reports indicate that Groq founder and CEO Jonathan Ross, President Sunny Madra, and an estimated 80 to 90 percent of the engineering workforce will join Nvidia, effectively transferring the brain trust responsible for the competing chip architecture[1][2][3][4][5]. The deal, the largest in Nvidia's history, also gives Groq shareholders a payout of nearly triple the company's $6.9 billion valuation from a funding round just three months earlier, underscoring the transaction's financial magnitude[6][7][8][9].
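The "nearly triple" figure can be checked with simple arithmetic. The sketch below assumes the full $20 billion headline value is compared against the $6.9 billion valuation; the function names are illustrative and not taken from any source.

```python
# Figures quoted in the article, in billions of US dollars.
DEAL_VALUE_BN = 20.0        # headline transaction value
PRIOR_VALUATION_BN = 6.9    # valuation at the funding round three months earlier


def valuation_multiple(deal_bn: float, valuation_bn: float) -> float:
    """Deal value expressed as a multiple of the prior valuation."""
    return deal_bn / valuation_bn


def premium_percent(deal_bn: float, valuation_bn: float) -> float:
    """Amount paid above the prior valuation, as a percentage of that valuation."""
    return (deal_bn / valuation_bn - 1.0) * 100.0


multiple = valuation_multiple(DEAL_VALUE_BN, PRIOR_VALUATION_BN)
premium = premium_percent(DEAL_VALUE_BN, PRIOR_VALUATION_BN)
print(f"Multiple of prior valuation: {multiple:.2f}x")
print(f"Premium over prior valuation: {premium:.1f}%")
```

Under these assumptions the multiple comes out at about 2.9, consistent with "nearly triple," which corresponds to a premium of roughly 190 percent over the earlier valuation.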

Groq's primary competitive advantage lies in its specialized Language Processing Unit (LPU), an accelerator chip designed explicitly for the "inference" stage of artificial intelligence, in which trained models run and respond to user requests, as in chatbots or autonomous systems[10][1][11][12]. Groq's architecture uses on-chip Static Random-Access Memory (SRAM) instead of the High Bandwidth Memory (HBM) common in Nvidia's GPUs, an approach aimed at the memory bottlenecks that constrain many AI workloads[7][1][12]. This design enables deterministic, ultra-low-latency performance: some benchmarks show Groq's LPUs processing between 300 and 500 tokens per second on models like Llama 2, three to five times the roughly 100 tokens per second managed by a standard GPU setup[13]. That technological edge was seen as the most credible emerging threat to Nvidia's dominance, particularly as the AI industry pivots rapidly from the "training" phase to the "utility" phase, where the cost and speed of inference will dictate market leadership[1][11][9][4].
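To make the throughput gap concrete, the following sketch converts the quoted tokens-per-second figures into generation time for a single response. The 400-token response length is a hypothetical value chosen for illustration, and the calculation assumes a steady decode rate with no prompt-processing or network overhead.

```python
# Throughput figures quoted in the article (tokens per second).
LPU_TOKENS_PER_SEC = (300.0, 500.0)  # reported range for Groq LPUs on Llama 2
GPU_TOKENS_PER_SEC = 100.0           # "roughly 100" for a standard GPU setup

RESPONSE_TOKENS = 400  # hypothetical response length, not from the article


def generation_seconds(tokens: int, tokens_per_sec: float) -> float:
    """Time to emit `tokens` at a constant decode rate."""
    return tokens / tokens_per_sec


def speedup(fast_rate: float, slow_rate: float) -> float:
    """How many times faster `fast_rate` is than `slow_rate`."""
    return fast_rate / slow_rate


gpu_time = generation_seconds(RESPONSE_TOKENS, GPU_TOKENS_PER_SEC)
print(f"GPU at {GPU_TOKENS_PER_SEC:.0f} tok/s: {gpu_time:.2f} s")

for rate in LPU_TOKENS_PER_SEC:
    lpu_time = generation_seconds(RESPONSE_TOKENS, rate)
    ratio = speedup(rate, GPU_TOKENS_PER_SEC)
    print(f"LPU at {rate:.0f} tok/s: {lpu_time:.2f} s ({ratio:.0f}x faster)")
```

On these numbers, a response that takes about four seconds on the GPU baseline would take roughly one second on the LPU, which is the kind of difference that matters for interactive, real-time applications.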

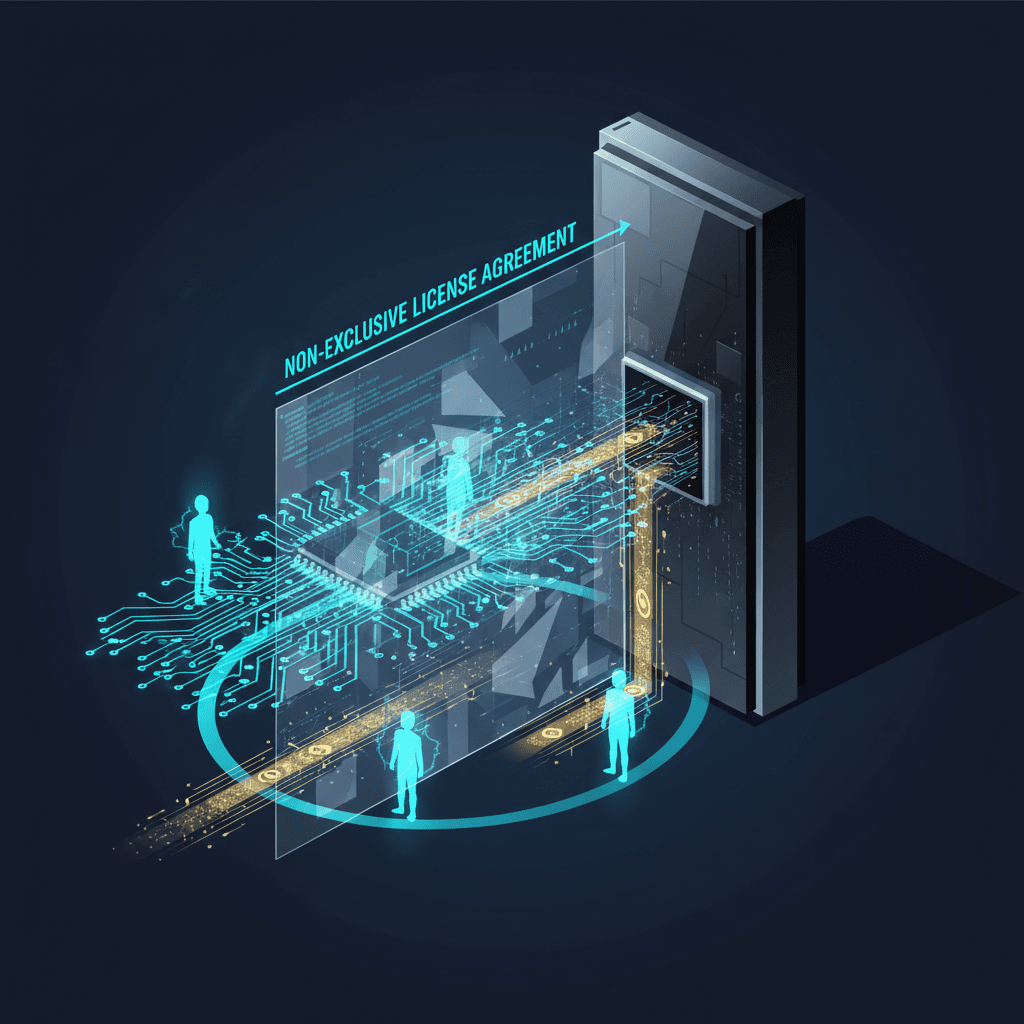

The structure of the $20 billion transaction, which couples a non-exclusive license with a massive "acqui-hire" of talent, is widely viewed as a deliberate maneuver by Nvidia to sidestep regulatory scrutiny[11][3][4]. Had the company pursued a traditional acquisition of Groq, the deal would almost certainly have triggered a lengthy Hart-Scott-Rodino antitrust review by the Federal Trade Commission or the Department of Justice[4][5]. Given Nvidia's entrenched, near-monopoly position in the AI hardware market, a straightforward takeover of a potent competitor like Groq would have faced intense opposition and might have been blocked, much like other large tech mergers that have run into regulatory challenges. The "License and Acqui-hire" (L&A) model lets Nvidia immediately integrate Groq's low-latency processors into its own AI factory architecture and absorb the talent without the protracted 18-month wait typical of full antitrust reviews[7][1][3]. Big Tech firms have increasingly used this relatively new deal-making playbook to acquire critical AI talent and technology, as in the similar but smaller transactions between Microsoft and Inflection AI and between Google and Character.AI[2][3].

The implications of this transaction for the broader AI industry are profound. For Nvidia, the deal is both a defensive and an offensive move that solidifies its competitive moat[11][13]. It integrates the fastest-in-class inference technology into the Nvidia ecosystem, widening the gap against rivals like Advanced Micro Devices and bespoke chipmakers, and it brings Jonathan Ross, the architect of its biggest competitor's custom silicon, under Nvidia's own roof[10][1][13][2]. Ross was a key figure in the development of Google's original Tensor Processing Unit (TPU), which makes his move especially significant[7][2][3]. Integrating the LPU logic into the CUDA software environment ensures developers do not have to leave Nvidia's platform for ultra-fast, real-time AI agents, further tightening the company's ecosystem lock-in[1][11]. The absorption of Groq's technology also addresses total-cost-of-ownership concerns for data center operators, as the speed and resulting energy efficiency of the LPUs could significantly reduce energy consumption per task[1][13][12].

For the startup ecosystem, the deal sends a clear, if unsettling, message: emergent, disruptive competitors in the AI hardware space face a high probability of being neutralized by the industry leader before they can achieve true scale[11][13][3]. While Groq itself is set to continue operating its GroqCloud business independently under a new CEO, the departure of its founding leadership and core engineering team leaves the remaining entity a diminished competitor[10][7][2]. The payout of nearly three times the company's last valuation, however, is a windfall that may incentivize other venture-backed startups to pursue similar licensing-and-talent-transfer arrangements, even at the cost of long-term independence and market competition[6][5]. The move is backed by Nvidia's vast war chest, which generated over $22 billion in free cash flow in the prior quarter alone, and it demonstrates a willingness to spend aggressively to maintain a dominant position in the evolving AI landscape. It also makes clear that speed and talent trump all else in the next phase of the artificial intelligence arms race[6][13][9].