NVIDIA Secures Groq's Inference Speed and Top AI Talent to Cement Dominance

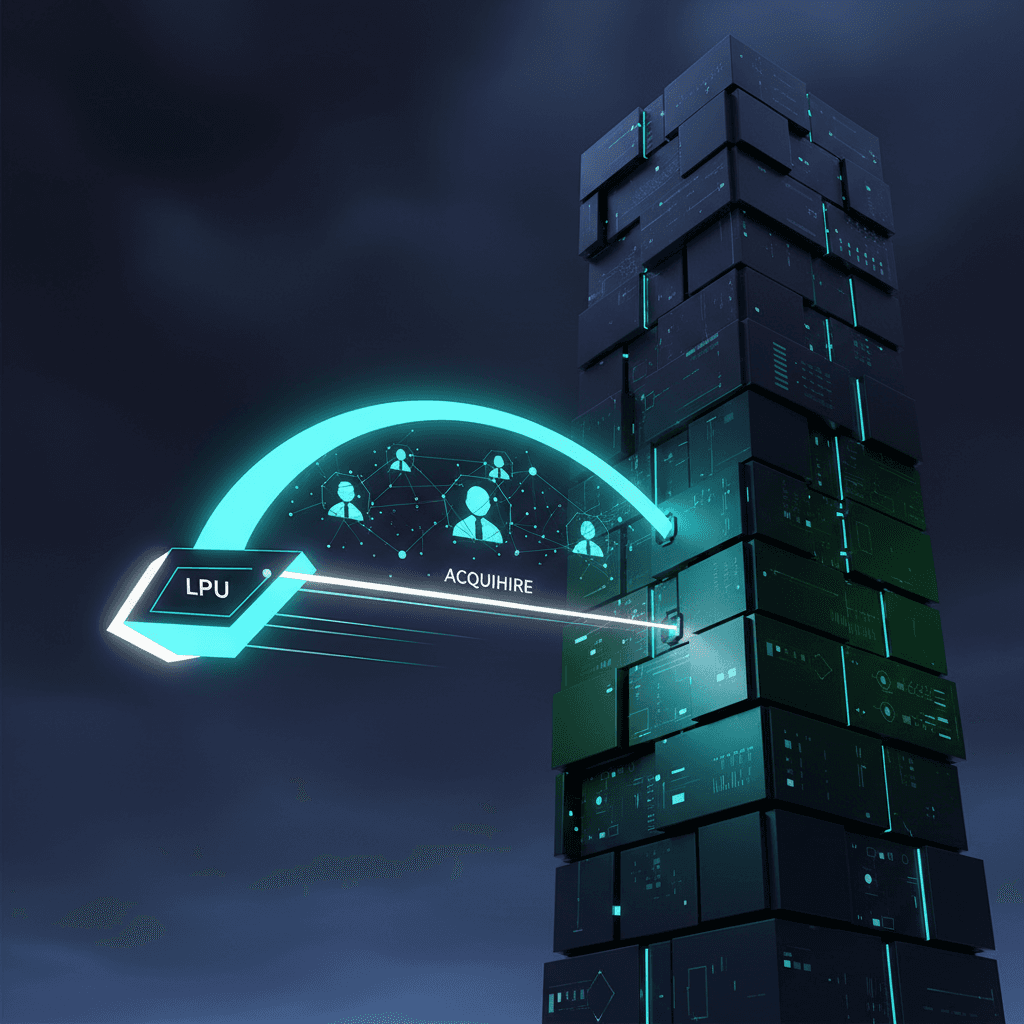

NVIDIA licenses Groq's low-latency LPU technology and absorbs core talent in an inference 'acqui-hire' to reshape real-time AI.

December 25, 2025

A landmark deal in the specialized world of AI hardware has seen Groq, a prominent startup focused on high-speed inference, sign a non-exclusive licensing agreement with industry behemoth NVIDIA. The arrangement is set to reshape the competitive landscape for running large language models (LLMs) and other real-time AI applications. Its centerpiece is the license to Groq's inference technology, paired with the hiring of the startup's founder and CEO, Jonathan Ross, President Sunny Madra, and other senior team members, who will join NVIDIA to help advance and scale the licensed technology.[1][2][3]

Initial reports described the move as a full $20 billion acquisition of Groq, but the companies clarified it as a non-exclusive licensing and talent deal. Sources connected to the startup's investors nonetheless suggest the overall value of the transaction for the assets and intellectual property (IP) could run into the billions.[4][5][3][6] The structure is strategically significant because it reflects a newer form of consolidation in the AI sector, often called an "acqui-hire," that lets a buyer gain technology and people while avoiding the intense regulatory scrutiny a full acquisition of a potential rival would typically attract.[6][7] Groq, which was valued at $6.9 billion in a funding round just months earlier, will continue to operate as an independent company, and its cloud service, GroqCloud, will run without interruption under new CEO Simon Edwards, formerly the company's CFO.[1][4][8][9] Because the license is non-exclusive, Groq can still offer its technology and services to other vendors, including major NVIDIA cloud customers such as Microsoft and Amazon, a distinction likely intended to ease antitrust concerns.[2][9]

The primary asset in the deal is Groq's Language Processing Unit (LPU), a processor architecture built from the ground up for AI inference, the phase in which a trained model is run to generate predictions or responses.[10][7] Inference is becoming a crucial bottleneck in the AI value chain because it runs every time a user interacts with a generative AI application such as ChatGPT. Unlike NVIDIA's general-purpose parallel GPU architecture, the LPU uses a fully deterministic, ultra-wide data path and stores model weights primarily in large on-chip SRAM, which dramatically reduces latency.[2][11][10] The company has long positioned this design as a superior alternative for certain workloads, claiming its chips can run inference up to 10 times faster than traditional GPUs while consuming a fraction of the power.[2][11] Such deterministic, low-latency performance is vital for real-time applications like interactive search, coding assistance, and conversational AI agents.[11] According to a reported internal memo from NVIDIA CEO Jensen Huang, NVIDIA plans to integrate Groq's low-latency processors into its AI factory architecture to extend the platform to an even broader range of AI inference and real-time workloads.[4]

The movement of key talent is arguably as critical as the technology license. Groq founder Jonathan Ross is a well-known figure in the AI chip world, having led development of the Tensor Processing Unit (TPU) during his tenure at Google.[2][11][12] His TPU work laid the foundation for Google's internal AI infrastructure and made him one of the architects behind the few credible alternatives to the NVIDIA hardware stack.[12] Bringing Ross, Madra, and other key Groq personnel into NVIDIA lets the company cross-pollinate expertise in compiler design, scheduling, and the low-level optimizations that latency-sensitive inference depends on.[11] This infusion of specialized talent is intended to complement, and potentially transform, NVIDIA's existing CUDA ecosystem, TensorRT library, and Triton Inference Server, and could position NVIDIA for what has been described as an "energy efficiency revolution" in architectures such as Blackwell Ultra and Rubin.[2][11] By securing this expertise, NVIDIA directly addresses a growing competitive threat in the inference market, where specialized startups and hyperscalers building custom chips increasingly challenge its dominance.[9][6]

The implications of this transaction extend across the AI industry. For NVIDIA, it is a defensive play that secures access to one of the most promising inference architectures, along with a team of world-class experts, further strengthening an already dominant position.[5][6][11] Analysts view the deal as strategic: NVIDIA leads in AI training, but the inference market is more diversified and open to competition.[5] By integrating Groq's specialized capabilities, NVIDIA signals that it no longer intends to rely solely on general-purpose GPUs to capture the rapidly scaling, multi-billion-dollar inference segment, which is projected to drive significant revenue growth as AI shifts from training to real-time deployment.[9]

For Groq, the deal delivers a significant financial return for investors and employees while allowing GroqCloud to keep operating independently. Groq can continue offering its technology to the broader market without having to build a massive hardware manufacturing operation and software ecosystem entirely on its own.[2][3][13] The arrangement could also spur a wave of similar acqui-hires as major players prioritize securing specialized AI talent and innovative IP to maintain a competitive edge. Ultimately, pairing one company known for its vast ecosystem with another known for specialized speed could redraw expectations for real-time AI performance and re-energize competition across the accelerator stack in the coming years.[3][11]