Nvidia Nemotron 3 Dumps Pure Transformer Design for Unprecedented Agentic Efficiency

The Mamba-MoE hybrid architecture achieves 1-million-token context, democratizing powerful, cost-effective agentic AI.

December 17, 2025

The latest generation of artificial intelligence models is shifting from conversational assistants to autonomous agents that execute complex, multi-step workflows, a transition that puts a premium on computational efficiency and the ability to process large amounts of data. Nvidia’s new Nemotron 3 family of open models addresses this challenge by abandoning the pure Transformer architecture, the dominant design in large language models, in favor of a hybrid that combines Mamba state-space models with a Mixture-of-Experts framework. The architectural pivot is a calculated move to democratize high-performance AI: it targets a 1-million-token context window and much higher inference efficiency without the resource consumption typically associated with such scale.

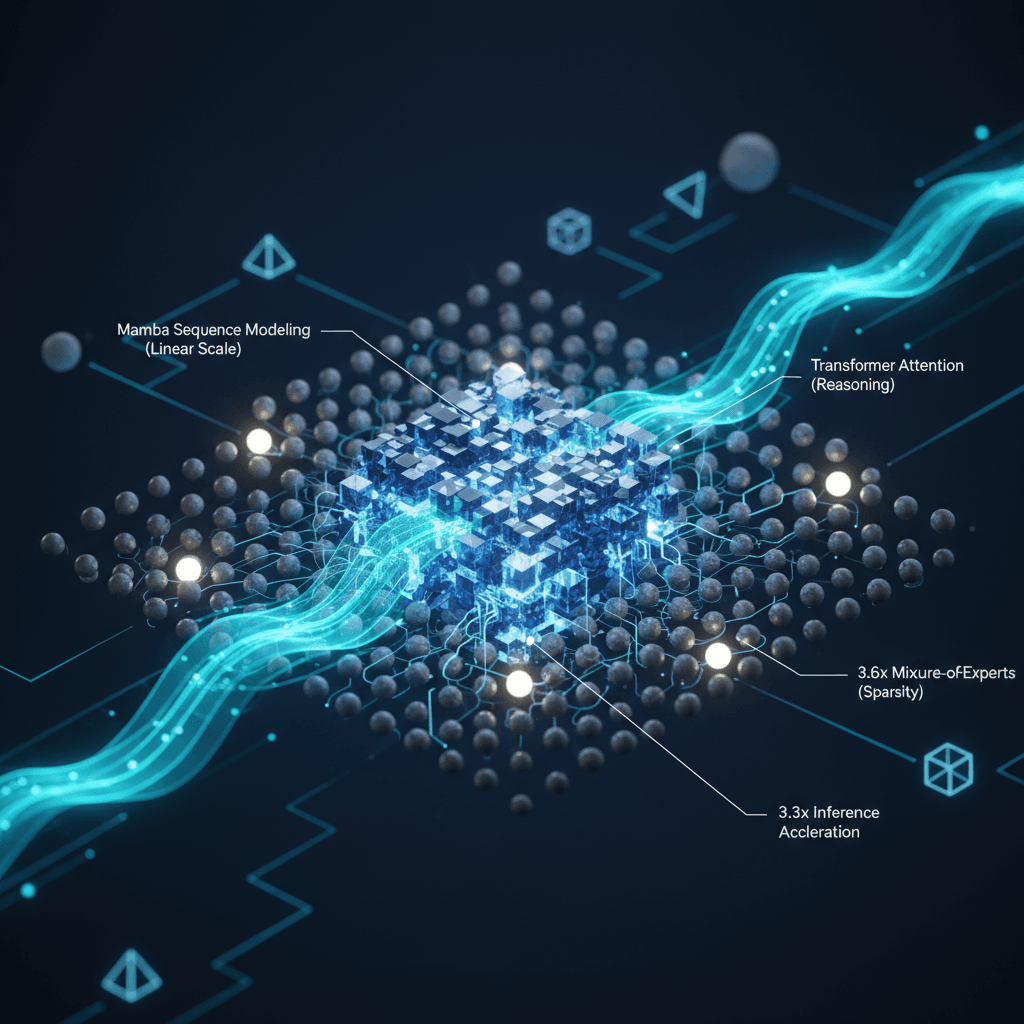

The innovation at the core of Nemotron 3 is the fusion of three distinct architectural components: Mamba-2 state-space sequence modeling layers, Transformer attention mechanisms, and a sparse Mixture-of-Experts (MoE) design. Traditional Transformer models, while excellent for reasoning, are computationally intensive because the cost of self-attention scales quadratically with sequence length: doubling the context roughly quadruples the attention compute, which makes long context windows disproportionately expensive and slow. The Mamba-2 layers, by contrast, track long-range dependencies with low latency because their cost scales linearly with sequence length. By interleaving Mamba layers with Grouped-Query Attention (GQA) Transformer layers, the Nemotron 3 architecture is engineered to balance the high-throughput, long-range context handling of Mamba with the precise, token-level reasoning of the Transformer component. This combination enables the model to process full code bases, long technical specifications, and multi-day conversations within a single pass, which is essential for agentic AI that must maintain persistent memory and coherence across long-running operations.[1][2][3][4]
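The gap between quadratic and linear scaling is easiest to see with a toy cost model. The sketch below is an illustration only: the function names and the unit-cost constants are assumptions made for this example, not measurements of Nemotron 3 or of any real attention or Mamba kernel.

```python
# Toy cost model: per-layer work as a function of sequence length n.
# All constants are illustrative assumptions, not Nemotron 3 measurements.

def attention_cost(n: int, d: int = 1) -> int:
    """Self-attention compares every token with every other token: ~n^2 * d."""
    return n * n * d


def linear_scan_cost(n: int, d: int = 1) -> int:
    """A state-space scan visits each token once: ~n * d."""
    return n * d


def cost_ratio(n: int) -> float:
    """How many times more work the quadratic layer does at length n."""
    return attention_cost(n) / linear_scan_cost(n)


if __name__ == "__main__":
    for n in (1_000, 10_000, 100_000, 1_000_000):
        print(f"n={n:>9,}  attention/scan cost ratio = {cost_ratio(n):,.0f}x")
```

In this simplified model the ratio grows in step with the sequence length itself, which is why a hybrid design would keep only a limited number of attention layers and push most long-range work onto the linear-cost layers.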

The efficiency gains are further magnified by a Mixture-of-Experts (MoE) design, which replaces the standard dense feed-forward network layers found in classic Transformers. In an MoE layer, only a small subset of expert sub-networks is activated for each token, and this sparse activation is a key factor in keeping computational costs manageable. For instance, Nemotron 3 Nano, the first model in the family to be released, has a total of 31.6 billion parameters, yet its sparse MoE layers mean that only approximately 3.6 billion parameters are engaged per token during a forward pass. This sparsity allows the model to approach the reasoning quality of a much larger model while maintaining the speed and cost profile of a smaller one. Specifically, Nemotron 3 Nano has been shown to deliver up to 3.3 times higher inference throughput than similarly sized open-source models like Qwen3-30B and 2.2 times higher than models like GPT-OSS-20B in generation-heavy settings, according to independent benchmarks. Such efficiency is critical for the economic viability of complex, multi-agent systems where numerous agents collaborate, demanding a high tokens-to-intelligence rate.[5][1][2][6][3]
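A minimal sketch of top-k expert routing shows how sparse activation works in principle. Everything here is hypothetical: the four toy "experts", the gate logits, and the choice of k=2 are assumptions for illustration, and the article does not describe Nemotron 3's actual router or expert count.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(gate_logits, k):
    """Pick the k highest-scoring experts; renormalize their weights to sum to 1."""
    probs = softmax(gate_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]


def moe_forward(x, experts, gate_logits, k):
    """Weighted sum of outputs from the selected experts only; the rest never run."""
    return sum(w * experts[i](x) for i, w in route_top_k(gate_logits, k))


def active_fraction(active_params_b, total_params_b):
    """Share of parameters used per token, both given in billions."""
    return active_params_b / total_params_b


if __name__ == "__main__":
    # Hypothetical experts: each one just scales its input by a constant.
    experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]
    logits = [0.1, 2.0, -1.0, 1.5]  # made-up router scores for one token
    print(route_top_k(logits, k=2))
    print(moe_forward(10.0, experts, logits, k=2))
    # Figures quoted in the article for Nemotron 3 Nano.
    print(f"active share: {active_fraction(3.6, 31.6):.1%}")
```

With the article's figures, about 3.6 of 31.6 billion parameters are active per token, roughly 11 percent of the model, which is where the smaller-model speed and cost profile comes from.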

The focus on agentic AI is a clear strategic move by Nvidia to position its models at the forefront of the industry’s next evolutionary phase. As organizations transition from single-model chatbots to multi-agent workflows, in which multiple specialized AI agents such as retrievers, planners, and tool executors cooperate, the challenges of communication overhead, context drift, and escalating inference costs become acute. Nemotron 3’s 1-million-token context window is designed to address these issues by allowing agents to retain entire evidence sets, history buffers, and multi-stage plans in a single context, which improves factual grounding and reliability in long-horizon workflows. The models are further optimized for this use case through reinforcement learning (RL) across interactive environments, which aligns them with real-world, multi-step agentic tasks. The family is being rolled out in three sizes, Nano (available now), Super, and Ultra, to cater to diverse compute needs, from smaller form-factor GPUs like the L40S to the high-scale performance of the upcoming Ultra model, which will also incorporate advanced techniques like Latent MoE and the NVFP4 training format for further memory reduction and acceleration.[5][1][7][8][3]

This architectural shift has significant implications for the broader AI ecosystem. By introducing an open, highly efficient, and high-accuracy model family, Nvidia is not only reinforcing its position as a hardware powerhouse but also becoming a key provider of foundational open-source AI software. The release includes open weights, datasets, and training recipes, giving developers the transparency and flexibility to customize and deploy the models across their own infrastructure, a necessity for enterprise-scale sovereign AI and specialized agent development. The competitive advantage demonstrated by Nemotron 3 Nano in its class, as evidenced by its leading performance in reasoning, coding, and multi-step agentic tasks, signals a major maturation in the open-source LLM landscape. The new model challenges the notion that pure Transformer models are the only viable path to advanced AI and suggests that hybrid, sparse architectures are the future for cost-effective, high-throughput deployment, especially as the industry moves toward high-volume, real-time multi-agent systems.[5][1][9][10][7][3]

Sources

[2]

[3]

[4]

[6]

[7]

[8]

[9]

[10]