NTT's tsuzumi 2 Unlocks Sustainable Enterprise AI with Single-GPU Power

NTT's tsuzumi 2 delivers sustainable, high-performance AI for enterprises, running powerfully and privately on a single GPU.

November 20, 2025

A fundamental tension lies at the heart of enterprise artificial intelligence deployment: organizations require increasingly sophisticated language models to drive innovation and efficiency, yet they often balk at the immense infrastructure costs and energy consumption associated with frontier systems. This challenge has left many businesses watching the AI revolution from the sidelines. However, a new class of lightweight yet powerful large language models (LLMs) is emerging to ease this constraint, and Japanese telecommunications giant NTT is positioning itself at the forefront of this movement with its proprietary model, tsuzumi. The recently launched second iteration, tsuzumi 2, can run on a single GPU. It is driving a new wave of enterprise AI adoption in Japan by demonstrating that high performance does not have to come with a hyperscale price tag. Early deployments suggest that this approach can match the performance of much larger models in specific business cases while substantially reducing operational costs and addressing critical data privacy concerns.

The core innovation of NTT's tsuzumi 2 is its deliberate design to be lightweight and efficient, directly confronting the primary barriers to enterprise AI adoption.[1] Unlike the massive, general-purpose models from global tech giants that often require clusters of dozens or even hundreds of power-hungry GPUs, tsuzumi 2 is engineered to operate effectively on a single graphics processing unit.[2] This distinction is not merely a technical footnote; it represents a paradigm shift in making advanced AI accessible. The immense electrical consumption and operational expenditure required by large-scale models render them impractical for a significant portion of the market, particularly for companies with tight budgets or those in regions with constrained power infrastructure.[1][2] NTT itself has highlighted the unsustainable trajectory of AI's energy demands, with one whitepaper from its data division warning that AI workloads could account for more than half of data center power consumption by 2028.[2] By engineering a model that dramatically cuts down on infrastructure and energy needs, NTT is offering a sustainable alternative that makes sophisticated AI viable for a broader range of enterprises, from universities to financial institutions.[1][2]

This focus on efficiency does not come at the expense of capability; tsuzumi 2 demonstrates competitive performance, particularly in Japanese and in specialized business domains. NTT claims the model delivers performance "on par with or exceeding that of larger models" in specific business deployments.[2] For instance, an internal evaluation of tsuzumi 2 for handling financial-system inquiries found that it matched or surpassed the performance of leading external models despite its significantly smaller infrastructure footprint.[1][3] On benchmarks like MT-bench, tsuzumi 2 has shown an ability to perform nearly as well as ultra-large-scale models on a variety of tasks, showcasing its capacity to handle complex user requests.[4] This high performance-to-resource ratio is a key determinant for enterprise adoption, where total cost of ownership is a critical factor.[1] Built from the ground up by NTT, the model has a particular strength in Japanese language processing, giving it a distinct advantage over Western models that have been merely adapted for the Japanese market.[2] Furthermore, tsuzumi 2 has been reinforced with specialized knowledge for the financial, medical, and public sectors, enabling it to deliver top-tier results in these high-demand fields.[3][5]

The practical benefits of this lightweight approach are clearly illustrated in early enterprise deployments, where issues of cost, data security, and privacy are paramount. A prominent example is Tokyo Online University, which adopted tsuzumi 2 to power an on-premises AI platform.[1][3] A crucial requirement for the university was to keep all student and staff data within its own campus network, a common need in education and other regulated industries concerned with data sovereignty.[1] Deploying a massive, cloud-based model from an external provider was not a viable option due to these data privacy constraints. Because tsuzumi 2 can run on a single GPU, the university was able to deploy the system on its own servers, avoiding both the capital expenditure for a large GPU cluster and the significant ongoing electricity costs.[1] After validating that the model could handle complex context understanding and long-document processing at a production-ready level, the university implemented it to enhance course Q&A, support the creation of teaching materials, and provide personalized student guidance.[1][3] This case study highlights how on-premises deployment of a lightweight model addresses the dual challenges of data security and prohibitive cost, enabling organizations to leverage AI while maintaining full control over their sensitive information.

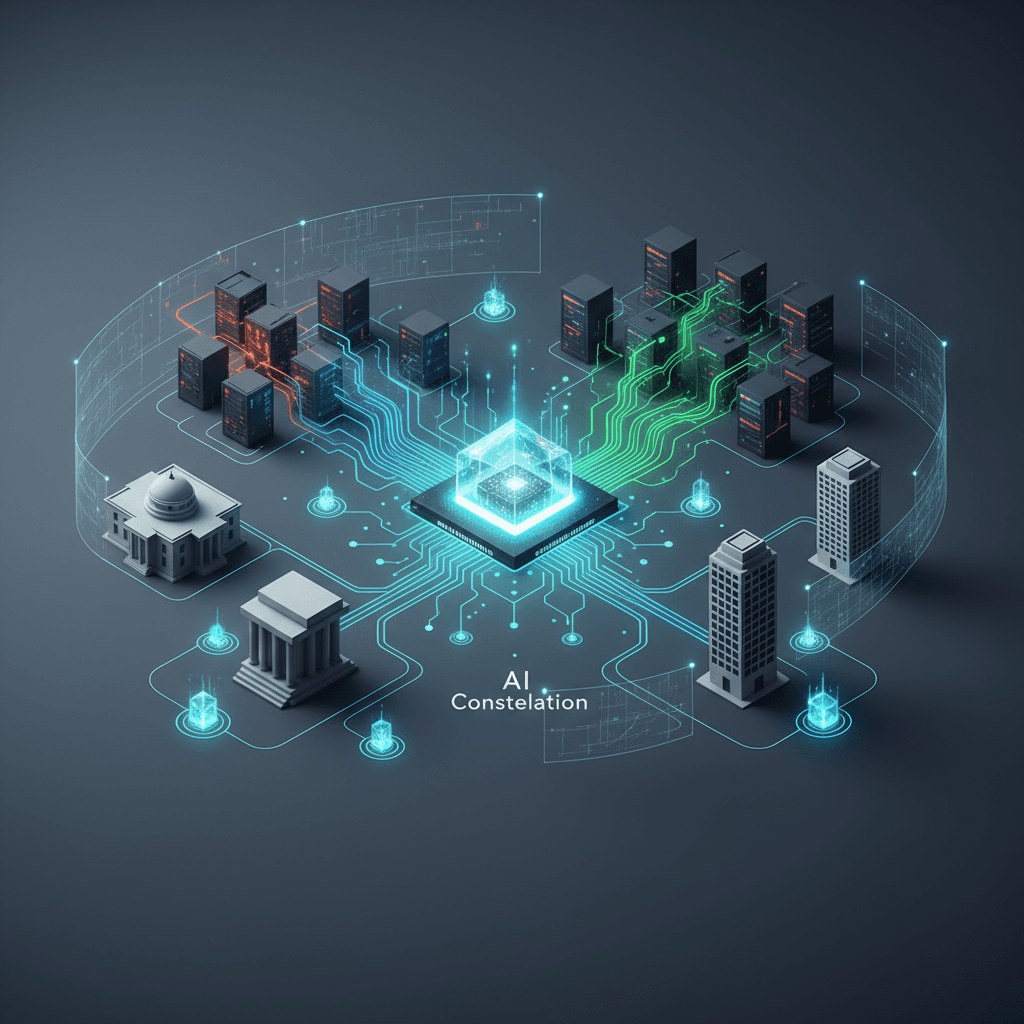

The launch of tsuzumi 2 signals a broader strategic direction for NTT and holds significant implications for the AI industry in Japan and beyond. As a "purely domestic LLM," tsuzumi appeals to Japanese organizations with strict data residency requirements and concerns about sending sensitive corporate information to foreign AI providers.[3] This positions NTT as a key player in the nation's push to enhance its sovereign AI capabilities. Beyond individual deployments, NTT envisions a future built on the concept of an "AI Constellation."[6][7][8] This architecture involves connecting numerous small, specialized AI models like tsuzumi, each an expert in a specific domain, to work together as an intelligent collective.[9][7][8] This modular, decentralized approach stands in stark contrast to the strategy of building a single, monolithic, all-knowing model.[6] By distributing workloads across a network of efficient AIs, NTT aims to solve complex societal problems through collective intelligence while maintaining a sustainable and resource-efficient footprint.[9][8] This vision challenges the prevailing "bigger is better" ethos in the AI world and charts a course for a more accessible, secure, and sustainable AI-powered future for enterprises.
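To make the "AI Constellation" idea more concrete, the following is a minimal sketch of how a query might be dispatched to small domain specialists and their answers collected. It is an illustration only, not NTT's implementation: the `Specialist` class, the keyword-based `route` function, the keyword lists, and the stub answer functions are all hypothetical, and a real system would call deployed models and use a far more capable routing step.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Specialist:
    """A hypothetical small model that is expert in one domain."""
    domain: str
    keywords: List[str]
    answer: Callable[[str], str]  # stand-in for a call to a real model


def make_stub(domain: str) -> Callable[[str], str]:
    # Placeholder only: a real system would invoke a deployed model here.
    return lambda query: f"[{domain}] response to: {query}"


# Illustrative domains taken from the sectors named in the article;
# the keyword lists are invented for this sketch.
SPECIALISTS = [
    Specialist("finance", ["loan", "interest", "account"], make_stub("finance")),
    Specialist("medical", ["symptom", "diagnosis", "prescription"], make_stub("medical")),
    Specialist("public", ["permit", "resident", "tax office"], make_stub("public")),
]


def route(query: str, specialists: List[Specialist] = SPECIALISTS) -> List[Specialist]:
    """Select every specialist whose keywords appear in the query."""
    text = query.lower()
    return [s for s in specialists if any(k in text for k in s.keywords)]


def ask_constellation(query: str) -> Dict[str, str]:
    """Send the query to each matching specialist and collect the answers."""
    return {s.domain: s.answer(query) for s in route(query)}


if __name__ == "__main__":
    for domain, reply in ask_constellation("I need a permit and a loan").items():
        print(domain, "->", reply)
```

In this sketch a query that touches several domains is answered by several specialists at once, which mirrors the article's description of small models working together rather than a single monolithic model handling everything.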