New 'Force Prompting' AI Creates Realistic Video with Physical Control

Brown and DeepMind's 'force prompting' lets AI create dynamic, realistic video from simple physical nudges, without complex 3D models.

June 3, 2025

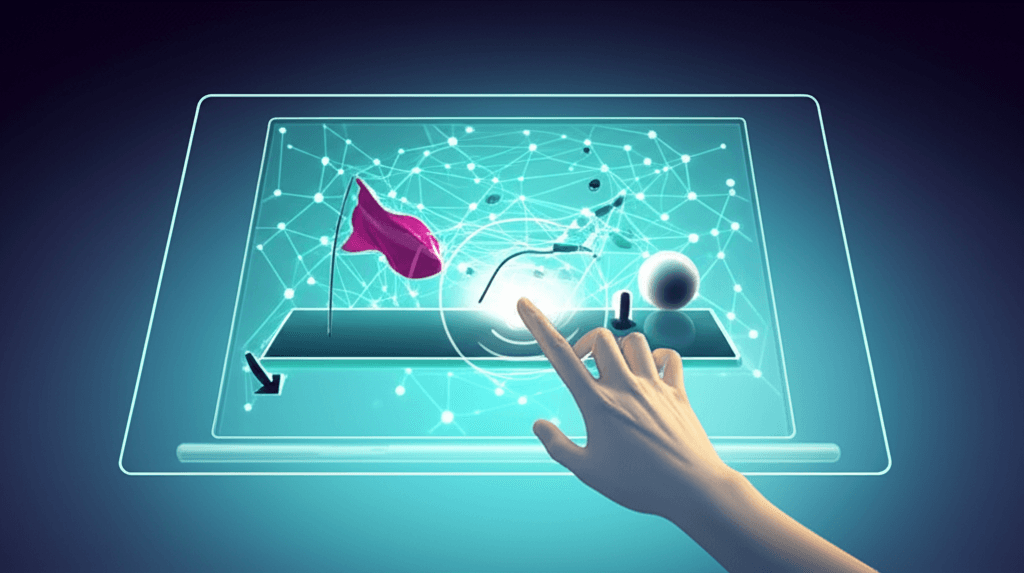

Researchers at Brown University and Google DeepMind have introduced "force prompting," a technique that lets generative video models produce realistic motion in response to artificial physical forces.[1][2] The technique generates dynamic video sequences without relying on 3D models or physics engines at inference time, which could change how realistic animations and simulations are produced.[1][3][4][5] The system interprets user-defined forces, such as a gentle poke on a plant or wind sweeping across a landscape, and translates them into visually coherent, physically plausible motion in the generated video.[1][3][4] The work is a step toward more intuitive and controllable "world models": AI systems that can simulate and understand the physical dynamics of our environment.[1][3]

The core idea behind force prompting is to use physical forces directly as a control signal for video generation.[1][3] Users can specify forces in two ways: as localized point forces, such as an instantaneous nudge applied to a specific region of an image, or as global force fields, such as a sustained wind acting uniformly on the entire scene.[1][6][2][7] Each force is described by its direction and strength and is converted into a vector field that is fed into the video generation model.[2] The model then draws on the visual and motion priors of its pre-trained architecture to render how objects in the scene would plausibly react to the applied force.[1][4][5] For instance, it can simulate a flag fluttering under a global wind prompt or a ball rolling after a localized poke.[1][7] The research team built on an existing video model, CogVideoX-5B-I2V, and added a ControlNet module designed to process the physical control data, which is then passed into a Transformer architecture.[2] The resulting system generates sequences (for example, 49 frames per video) that follow the specified force dynamics.[2]
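To make the control signal concrete, the following Python sketch builds both kinds of force input as per-frame 2D vector fields. It is a minimal illustration, not the authors' encoding: the function names, the grid size, the Gaussian falloff around the poke location, and the choice to apply the poke only in the first frame are all assumptions made for this example. Only the 49-frame length comes from the description above.

```python
import numpy as np

# Hypothetical defaults: 49 frames matches the article; the grid size is arbitrary.
FRAMES, HEIGHT, WIDTH = 49, 60, 90


def _unit_direction(angle_deg):
    """Return a 2D unit vector (x, y) for an angle given in degrees."""
    theta = np.deg2rad(angle_deg)
    return np.array([np.cos(theta), np.sin(theta)], dtype=np.float32)


def global_force_field(angle_deg, strength,
                       frames=FRAMES, height=HEIGHT, width=WIDTH):
    """Sustained, uniform force (e.g. wind): the same vector everywhere."""
    field = np.zeros((frames, height, width, 2), dtype=np.float32)
    field[...] = strength * _unit_direction(angle_deg)
    return field


def point_force_field(x, y, angle_deg, strength, sigma=4.0, active_frames=1,
                      frames=FRAMES, height=HEIGHT, width=WIDTH):
    """Localized poke at pixel (x, y), applied for the first `active_frames`.

    The Gaussian falloff is an assumption that keeps the force local.
    """
    ys, xs = np.mgrid[0:height, 0:width]
    dist_sq = (xs - x) ** 2 + (ys - y) ** 2
    weight = np.exp(-dist_sq / (2.0 * sigma ** 2)).astype(np.float32)

    field = np.zeros((frames, height, width, 2), dtype=np.float32)
    # (1, H, W, 1) * (2,) broadcasts to (1, H, W, 2) for each active frame.
    field[:active_frames] = (
        strength * weight[None, :, :, None] * _unit_direction(angle_deg)
    )
    return field


if __name__ == "__main__":
    wind = global_force_field(angle_deg=0.0, strength=0.5)
    poke = point_force_field(x=45, y=30, angle_deg=90.0, strength=1.0)
    print(wind.shape, poke.shape)
```

In this sketch, `wind` carries the same vector at every pixel of every frame, while `poke` is nonzero only near the chosen pixel in the first frame. Arrays of this kind are the sort of input a ControlNet-style branch could consume alongside the source image, though the actual tensor layout used by the researchers may differ.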

A key challenge in developing such a system is acquiring training data that pairs video sequences with precise force annotations.[1][3][4] Collecting such data from the real world is difficult, particularly because the forces at play are hard to measure and record accurately.[1][4] Synthetic data generation has its own hurdles, as it is often limited by the visual quality and domain diversity of available physics simulators.[1][4] The researchers addressed this by using the Blender physics simulator to generate high-quality synthetic training videos.[1][7][3] For global forces, approximately 15,000 videos were created, featuring elements such as fluttering flags; for local forces, around 23,000 videos were synthesized, depicting objects such as plants or balls being poked.[2][7][4] Although the training dataset is relatively small and the demonstrations are synthetic and cover only a few object types, such as flags and rolling balls, the force prompting model generalized well.[1][7][4][5] It can simulate forces across a wide range of geometries, settings, materials, and object affordances not explicitly seen during training, and it shows initial indications of understanding concepts such as mass.[1][6][7][4] The researchers attribute this generalization to two elements: the visual diversity of the training data with respect to the control signal, and the use of specific text keywords during training, which appear to help the model understand and respond to force control signals.[1][7][4][5] The model can be trained in a single day on four NVIDIA A100 GPUs, which makes the technique potentially accessible for broader research and application.[8][2][7][4]

Force prompting has substantial implications for the AI industry, particularly in video generation and simulation. It offers a more intuitive and direct way to control physical behavior in generated videos than text-based prompting or trajectory-based control systems, which often require specifying the location of pixels across every frame.[8][2] In human evaluations, force prompting adhered to physical instructions better than text-conditioned baselines while maintaining realistic motion and visual quality.[8][5] It was also competitive with methods such as PhysDreamer, which explicitly use physics simulation during generation, outperforming PhysDreamer in force adherence and achieving comparable motion realism.[8][4] Unlike systems that require 3D assets or an active physics simulator at inference time, force prompting builds the physical understanding into the model itself, which streamlines generation.[1][3][4][5] This could lower the barrier to creating realistic physical animations for content creators, game developers, and VFX artists who lack deep expertise in physics simulation.[9][10][11] The technology could improve realism in virtual environments for training simulations, enhance special effects in film and media, and contribute to fields such as robotics by giving agents a better grasp of intuitive physics when interacting with the world.[12][9] The datasets, code, and model weights have been publicly released, along with interactive video demos, to encourage further exploration and development.[1][8][7][4]

Despite its promising results, force prompting has limitations. The researchers acknowledge that the system's performance is fundamentally constrained by the physical understanding of the pretrained video model it is built on: force prompting controls existing physical capabilities rather than giving the model a new, deeper comprehension of physics.[6] Failures can occur when the prompted motion is "out-of-domain" for the base model, meaning it involves scenarios or object interactions very different from what the model has learned.[6][13] Visual fidelity or physical realism can also suffer when the intent of the force prompt strongly conflicts with the video prior's biases or learned patterns.[6] For example, when wind is applied to hair, the model sometimes reorients the face according to the wind direction, likely reflecting correlations in the training data where hair typically blows backward relative to a face.[6] Further research will be needed to address these challenges, potentially by including more diverse and complex physical interactions in training or by developing architectures that reason more deeply about cause and effect in physical systems. The current work nonetheless represents a significant step toward more controllable and physically aware generative models.[6]

Force prompting, as developed by the Brown University and DeepMind team, is a significant advance in AI-driven video generation. By letting physical force inputs guide motion directly, the technique avoids the need for external physics engines or detailed 3D models during video creation and allows realistic physical interactions to be generated efficiently.[1][2][4] Its ability to generalize from limited synthetic data to a wide range of scenarios underscores the value of the priors in large pretrained models.[1][6][5] While limitations remain, force-aware video generation opens new avenues for creative expression, simulation, and the development of AI systems with a more intuitive grasp of the physical world, with potential applications in industries from entertainment to engineering.[6][9][10]

Sources

[4]

[5]

[6]

[7]

[8]

[9]

[10]

[12]

[13]