Mythic Secures $125M to Challenge NVIDIA with 100x More Efficient AI Chips

Mythic secures $125M to fuse compute and memory, promising 100x greater energy efficiency for next-generation AI.

December 18, 2025

The intensifying race to build the computational backbone of the global artificial intelligence economy has a new challenger. Mythic, a Palo Alto-based chip startup, has secured a $125 million funding round to scale its energy-efficient AI chips. The oversubscribed round, led by DCVC with strategic investors including Honda Motor Co. and Lockheed Martin Corp., is intended to fuel Mythic's effort to challenge the dominance of NVIDIA's graphics processing units (GPUs); the company claims its chips deliver 100 times greater energy efficiency on AI inference workloads. CEO Taner Ozcelik, an NVIDIA veteran, describes the goal as tackling what he calls the "insatiable, ruinous energy consumption" of modern AI, and the company plans to deploy its Analog Processing Units across data centers, automotive systems, robotics, and defense.

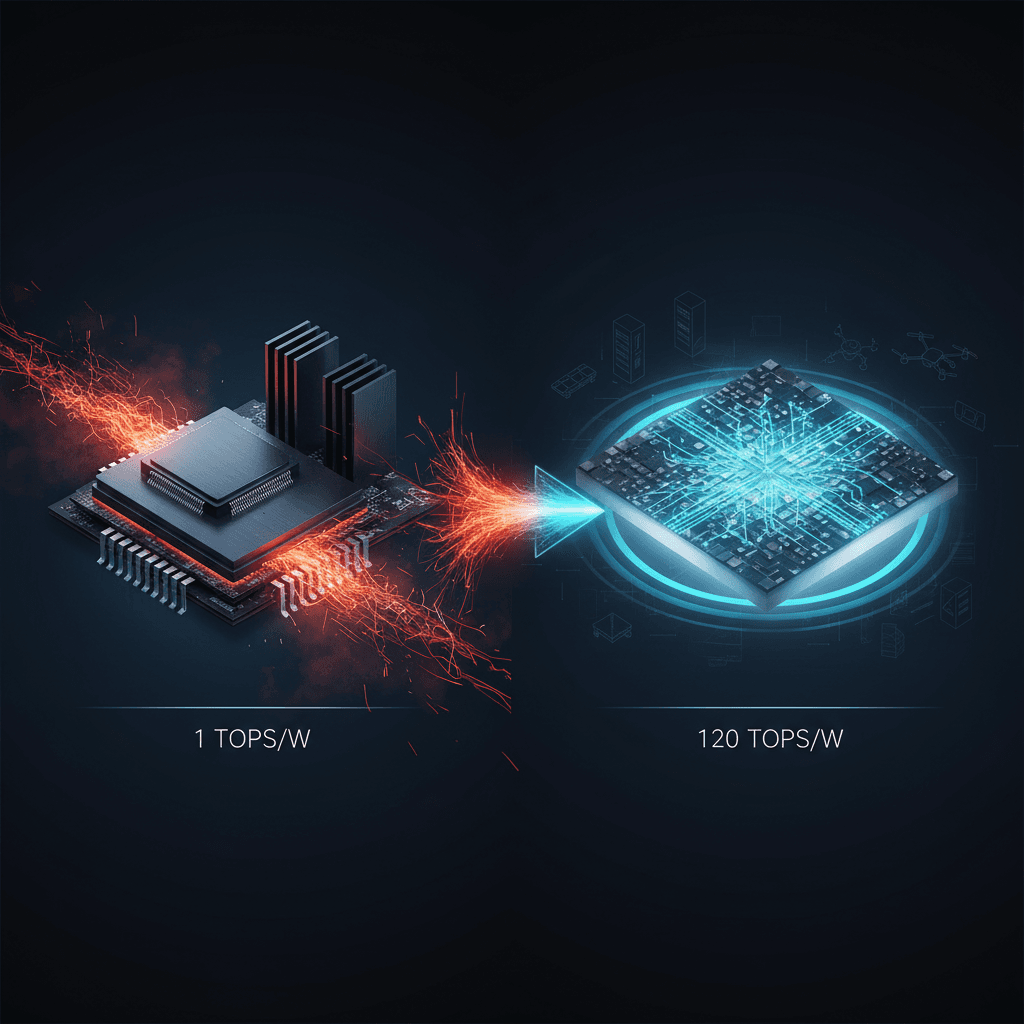

Mythic's core innovation is a fundamental departure from the digital von Neumann architecture used by industry-standard GPUs. Traditional digital chips suffer from a memory bottleneck: data must move constantly between the processor and separate memory modules, and that movement accounts for a significant share of total power consumption. Mythic's Analog Processing Units, or APUs, instead use analog compute-in-memory technology, which fuses compute and memory onto a single plane. AI model parameters are stored directly in the memory array, where each memory element acts as a tunable resistor. The chip performs the matrix multiplications at the heart of neural networks by applying inputs as voltages and collecting outputs as currents, so hundreds of thousands of multiply-accumulate operations run in parallel without shuttling weights between memory and processor, the costly data movement that limits digital efficiency[1][2][3].

Mythic claims its current APU architecture delivers 120 trillion operations per second (TOPS) per watt, a figure it positions as 100x better than today's top-of-the-line GPUs for AI inference, with memory transfers included in the comparison[4][5]. According to the company, this performance-per-watt has been validated in production silicon and demonstrated on key AI models[6][7].
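To make the voltage-in, current-out mechanism concrete, here is a minimal Python sketch of an idealized analog crossbar. It is not Mythic's design or software: the helper names `crossbar_currents` and `energy_per_op_joules` and all example values are hypothetical, and the model ignores noise, analog-to-digital conversion, wire resistance, and how negative weights are encoded. Each cell follows Ohm's law (current equals conductance times voltage), and each column wire sums its cells' currents by Kirchhoff's current law, so every column computes one dot product. The second helper converts the claimed 120 TOPS/W into energy per operation: because a watt is one joule per second, 120 TOPS/W means 120 trillion operations per joule, or roughly 8.3 femtojoules per operation.

```python
"""Idealized analog compute-in-memory crossbar (illustrative sketch only).

ASSUMPTIONS, not Mythic's actual design: weights are stored as conductances
G[i][j] in siemens, inputs are applied as row voltages V[i] in volts, and each
column wire sums its cell currents. Noise, ADC/DAC conversion, wire
resistance, and negative-weight encoding are all ignored.
"""

from typing import List

Matrix = List[List[float]]
Vector = List[float]


def crossbar_currents(conductances: Matrix, voltages: Vector) -> Vector:
    """Ideal column currents I[j] = sum_i G[i][j] * V[i] (hypothetical helper).

    Ohm's law gives each cell's current (G * V); Kirchhoff's current law sums
    the cell currents on each shared column wire, so each column computes
    one dot product of the inputs with that column's stored weights.
    """
    if len(conductances) != len(voltages):
        raise ValueError("need exactly one input voltage per crossbar row")
    if not conductances:
        return []
    currents = [0.0] * len(conductances[0])
    for row, v in zip(conductances, voltages):
        for j, g in enumerate(row):
            currents[j] += g * v  # one multiply-accumulate per cell
    return currents


def energy_per_op_joules(tops_per_watt: float) -> float:
    """Convert TOPS/W to joules per operation (hypothetical helper).

    1 W = 1 J/s, so TOPS/W equals tera-operations per joule.
    """
    return 1.0 / (tops_per_watt * 1e12)


if __name__ == "__main__":
    # Hypothetical 3x2 weight matrix encoded as conductances (siemens).
    G = [
        [1e-6, 2e-6],
        [3e-6, 0.5e-6],
        [0.0, 4e-6],
    ]
    V = [0.2, 0.1, 0.3]  # hypothetical input activations encoded as volts
    print(crossbar_currents(G, V))
    print(energy_per_op_joules(120))  # the 120 TOPS/W figure Mythic claims
```

The Python loop performs the multiply-accumulates one after another; in a physical array they all happen at once, which, together with keeping weights in place, is the basis of the efficiency argument.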

This leap in energy efficiency has significant implications for the fast-growing large language model (LLM) market and for the increasingly important field of edge AI. For data centers grappling with soaring electricity costs, the economic case for Mythic's APUs could be compelling. The company's internal benchmarks suggest the APUs could deliver up to 750 times more tokens per second per watt than high-end NVIDIA GPUs when running 1-trillion-parameter LLMs[5]. The company also projects up to an 80x advantage in cost per million tokens, with costs as low as four cents per million tokens for 1-trillion-parameter models[5]. By lowering these energy and cost barriers, Mythic aims to broaden access to sophisticated AI models and make them easier to deploy[8]. Its long-term vision includes running LLMs on par with GPT-3 or more capable models locally on low-power devices such as smartphones, without an internet connection, at roughly 1/100th the cost of current solutions[6].

Beyond the hyperscale data center, Mythic is targeting applications where energy budget and physical size are paramount. The company is focused on four industries it describes as trillion-dollar markets: data centers, automotive, robotics, and defense[6][8]. The participation of strategic investors such as Honda Motor Co. and Lockheed Martin Ventures underscores the technology's potential in autonomous systems and defense[9][10][7]. In automotive, Mythic says its APUs could deliver 100x more AI performance in vehicles, enabling smarter sensors and more capable planning[8]. In defense, packing a powerful, ultra-low-energy AI engine into a sensor system could substantially extend the run time and capability of drones and other autonomous military systems[7][5]. Mythic has also introduced Starlight, a sub-one-watt sensing platform that integrates its APUs into image sensors and, according to the company, improves signal extraction and low-light performance by up to 50x, an important advantage in defense and surveillance use cases[11][5].

While NVIDIA maintains an overwhelming market share, particularly in AI training, Mythic's focus on energy-efficient inference (the stage where trained models are deployed to make predictions) positions it as a specialized, high-performance alternative. Its leadership lends significant industry credibility: CEO Taner Ozcelik founded and led NVIDIA's automotive business for a decade[6][12][4]. To ease adoption, Mythic offers a software toolkit that helps developers adapt and optimize AI models using techniques such as quantization; the stated goal is to complement GPUs in the broader computing ecosystem rather than replace them outright[4][5]. The new capital follows a period of intense restructuring and architectural rebuilding under new leadership, and it signals strong investor confidence in the company's patented analog computing approach[6][4][7]. As the global AI race turns toward efficiency, cost, and physical deployment, Mythic is betting that its Analog Processing Units can usher in a more energy-efficient era of accelerated computing and reshape AI inference from the cloud to the edge.
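Quantization, mentioned above as part of Mythic's toolkit, reduces model weights to low-precision integers that hardware can store and compute with more cheaply. The sketch below shows a generic symmetric, per-tensor int8 scheme in Python. It is a textbook technique, not Mythic's toolkit or API; the function names `quantize_int8` and `dequantize` and the sample weights are hypothetical, and the article does not say which precision or scheme Mythic actually uses.

```python
"""Generic symmetric per-tensor int8 quantization (illustrative sketch).

ASSUMPTION: this is a standard textbook scheme, not Mythic's toolkit. Each
float weight w is mapped to an integer code in [-127, 127] using a single
scale factor, so that w is approximately code * scale.
"""

from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Return (codes, scale) with codes in [-127, 127] (hypothetical helper)."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # Map the largest-magnitude weight to +/-127; avoid dividing by zero.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from integer codes."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    w = [0.50, -1.27, 0.003, 0.9]  # hypothetical weights
    q, s = quantize_int8(w)
    print(q, s)
    # Rounding error per weight is at most half a quantization step (scale / 2).
    print(max(abs(a - b) for a, b in zip(w, dequantize(q, s))))
```

A single scale per tensor keeps the sketch short; practical toolchains often use per-channel scales and calibration data to reduce quantization error.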