Mercedes CLA commercializes NVIDIA AI stack, enabling complex urban reasoning.

NVIDIA DRIVE and Mercedes-Benz launch AI-defined mobility, bringing 508 TOPS of compute power and advanced urban reasoning to the compact CLA.

January 6, 2026

The production debut of NVIDIA DRIVE AV software in the new Mercedes-Benz CLA marks a pivotal moment in the transition to AI-defined vehicles, transforming the compact car segment into a proving ground for sophisticated, safety-critical technology. The CLA, notable as the first Mercedes-Benz model built on the automaker’s new, proprietary MB.OS platform, introduces enhanced Level 2 (L2++) driver-assistance features to a mass-market vehicle, setting a new benchmark for software-enabled mobility. This full-stack integration has already delivered tangible results, with the CLA recently earning a five-star safety rating from the European New Car Assessment Programme (Euro NCAP), a score significantly bolstered by the performance of its active safety features in accident mitigation and avoidance.[1][2]

At the core of this capability is the NVIDIA DRIVE AV software, a full-stack solution that handles perception, planning, and control. The vehicle's in-car compute system is powered by the NVIDIA DRIVE platform, which includes the NVIDIA Orin-X chip. This system can perform up to 508 trillion operations per second (TOPS), a level of performance needed for real-time AI decision-making.[3][4] The compute system is fed by a suite of approximately 30 sensors, including cameras, radar, and ultrasonic sensors, which together provide 360-degree perception of the environment.[3][5] The DRIVE AV architecture uses a dual-stack approach: an end-to-end AI stack for core driving functions runs in parallel with a classical safety stack. The classical stack, built on NVIDIA's Halos safety system, provides redundancy and safety guardrails. It keeps the vehicle operating within predefined safety parameters and acts as a fail-safe layer, an architectural choice designed to meet stringent safety certification requirements.[1][2][5]
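The dual-stack idea can be illustrated with a small arbitration sketch. This is not NVIDIA's or Mercedes-Benz's implementation: the `Command`, `SafetyEnvelope`, and `arbitrate` names, the limit values, and the 1.5-second time-gap rule are all hypothetical, chosen only to show how a rule-based layer might pass, clamp, or override the output of a learned planner.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Command:
    """A driving command proposed by a planner (hypothetical units)."""
    accel_mps2: float      # requested acceleration, m/s^2
    steer_rate_dps: float  # requested steering rate, deg/s


@dataclass(frozen=True)
class SafetyEnvelope:
    """Limits a classical safety stack might enforce (illustrative values)."""
    max_accel_mps2: float = 2.0
    min_accel_mps2: float = -8.0
    max_steer_rate_dps: float = 30.0


def clamp(value: float, low: float, high: float) -> float:
    """Restrict value to the closed interval [low, high]."""
    return max(low, min(high, value))


def arbitrate(ai_cmd: Command, envelope: SafetyEnvelope,
              obstacle_gap_m: float, speed_mps: float,
              min_time_gap_s: float = 1.5) -> tuple[Command, str]:
    """Return the command sent to the actuators and how it was derived.

    The AI stack proposes ai_cmd; the classical layer either passes it
    through, clamps it to the envelope, or overrides it with braking.
    """
    # Override: the time gap to the obstacle ahead is below the assumed minimum.
    if speed_mps > 0 and obstacle_gap_m / speed_mps < min_time_gap_s:
        return Command(envelope.min_accel_mps2, 0.0), "override"

    # Otherwise keep the AI proposal, limited to the safety envelope.
    safe_cmd = Command(
        clamp(ai_cmd.accel_mps2, envelope.min_accel_mps2, envelope.max_accel_mps2),
        clamp(ai_cmd.steer_rate_dps,
              -envelope.max_steer_rate_dps, envelope.max_steer_rate_dps),
    )
    return safe_cmd, "pass" if safe_cmd == ai_cmd else "clamped"


if __name__ == "__main__":
    envelope = SafetyEnvelope()
    # An aggressive AI proposal with plenty of room ahead gets clamped.
    print(arbitrate(Command(5.0, 45.0), envelope, obstacle_gap_m=50.0, speed_mps=10.0))
    # A short gap at the same speed triggers the braking override.
    print(arbitrate(Command(1.0, 0.0), envelope, obstacle_gap_m=5.0, speed_mps=10.0))
```

In this toy version the learned planner never reaches the actuators directly. Every command goes through the rule-based check first, which is the general property that "guardrails" implies, though a production system would involve far more than a single clamp and time-gap test.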

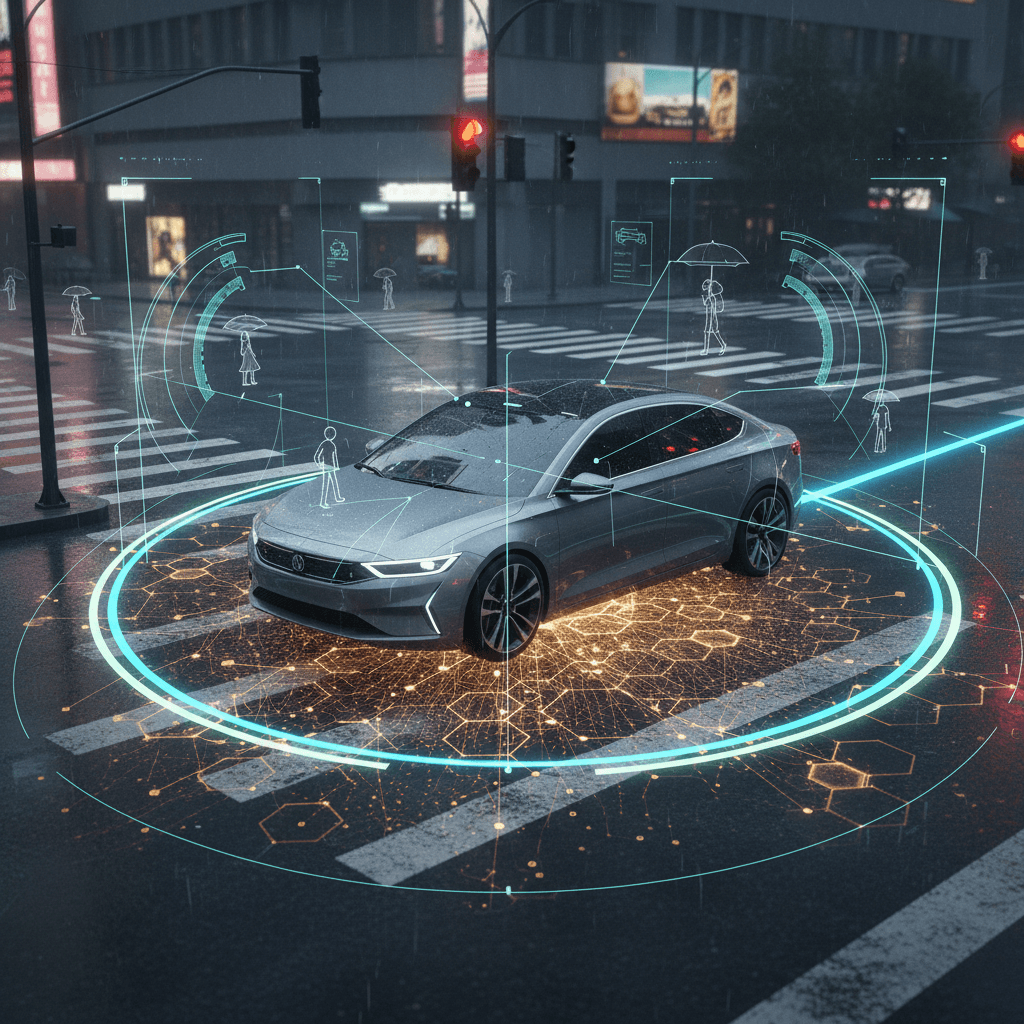

The advanced driver-assistance system, marketed by Mercedes-Benz as MB.DRIVE ASSIST PRO, leverages deep learning models to enable expanded Level 2 functionality. For the driver, this translates into advanced assistance capabilities, including point-to-point urban navigation, which allows the vehicle to autonomously manage city intersections, turns, and traffic lights under driver supervision.[2][3] This is a significant competitive step, moving beyond predictable highway driving to tackle the "long tail" of complex, unexpected urban scenarios that historically challenge autonomous systems. The AI models are designed to interpret the driving environment holistically, including the ability to understand and proactively respond to vulnerable road users such as pedestrians, cyclists, and scooter riders.[2] Furthermore, the system incorporates automated parking functions capable of navigating constrained and dynamic scenarios with AI-based precision and allows for cooperative steering between the system and the driver.[2][6]

A central component of NVIDIA's innovation in this system is the integration of its newly unveiled 'Alpamayo' family of open-source Vision-Language-Action (VLA) models. These models introduce "reasoning" capabilities to autonomous vehicles, enabling them to process and understand visual inputs, assess complex or rare driving situations, and generate appropriate driving responses, much like human chain-of-thought reasoning.[7][8][9] This move is indicative of a broader industry shift, with NVIDIA's Vice President of Automotive, Ali Kani, positioning the company as the "intelligence backbone" for the automotive sector as it embraces "physical AI."[1] The concept goes beyond a one-time product launch: the partnership and the underlying MB.OS platform are structured to support over-the-air (OTA) software updates, allowing the vehicle to keep improving and remain programmable throughout its lifecycle.[1][3]

The entire deployment is underpinned by a "cloud-to-car" development and validation loop. This industrial-scale framework uses NVIDIA DGX systems to train the DRIVE AV foundation models on massive, diverse datasets. The trained models are then validated in high-fidelity simulation environments like NVIDIA Omniverse and Cosmos, which enable the testing of billions of virtual miles and rare, hazardous edge cases that would be impractical to replicate in the real world.[2][5] This closed loop of data collection, training, simulation, and deployment supports rapid iteration on driving algorithms and is intended to keep the system safe and accurate.[1][6]

For the AI industry, this production launch in a high-volume Mercedes-Benz model validates the feasibility of deploying a full-stack, AI-centric solution at scale and establishes NVIDIA as a key enabler in the growing robotics market. The integration of advanced reasoning models and a future-proof architecture, with a clear path from Level 2++ to Level 4 autonomy, signals a decisive step away from traditional, siloed software development toward an AI-defined transportation future. The CLA’s debut is not merely the launch of a new car, but the commercial realization of an industrial digital stack designed to make every vehicle a continuously learning, software-driven machine.[9][10]

Sources

[1]

[3]

[4]

[5]

[6]

[9]

[10]