LLMs Become AI Simulators, Unlocking the Next Generation of Autonomous Agents

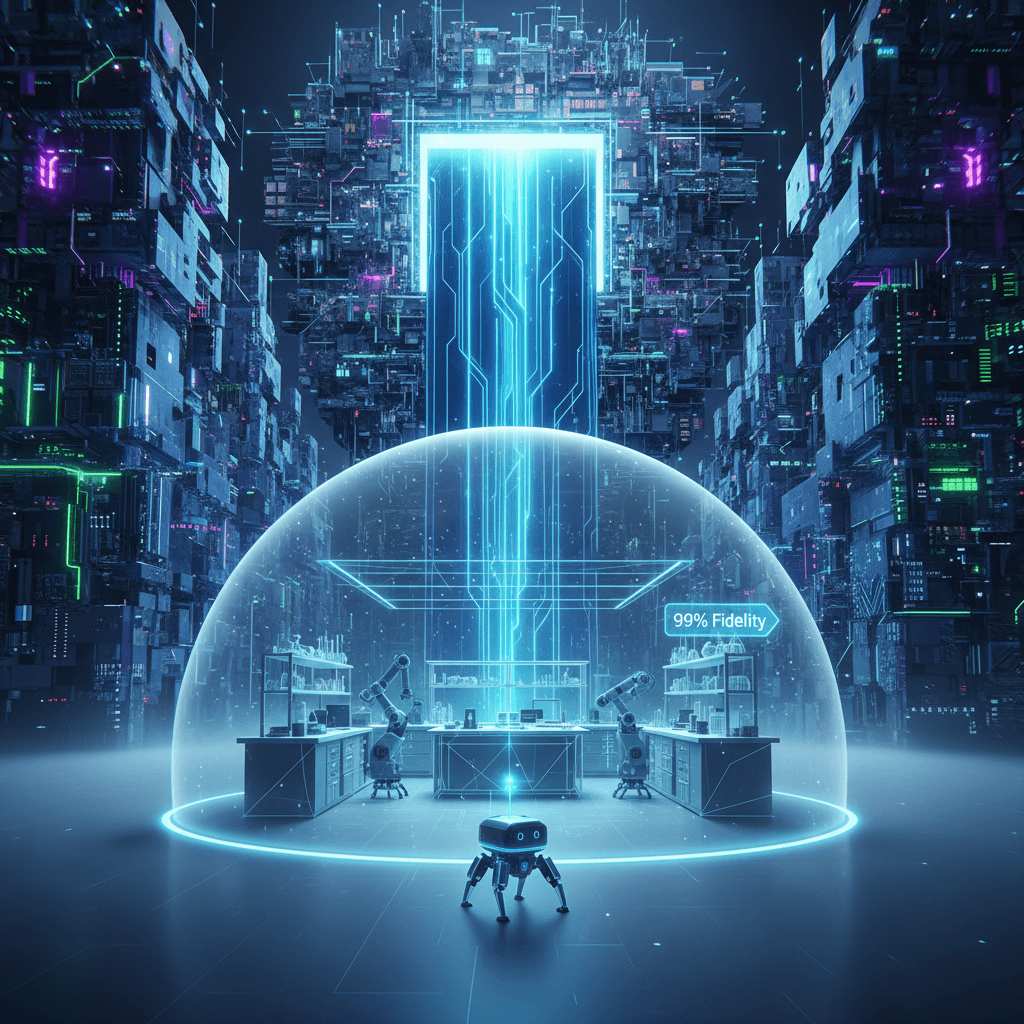

Fine-tuned LLMs reach roughly 99 percent accuracy as internal world models in structured test environments, offering a scalable source of experience for autonomous agents.

January 1, 2026

The development of truly autonomous artificial intelligence agents has long been hampered by a fundamental constraint: the difficulty and expense of gathering vast amounts of real-world training experience. Agents designed to operate in complex, stateful environments, from robotics and industrial automation to sophisticated personal assistants, learn through repeated, consequence-rich interaction, and real-world settings are often too limited, rigid, and costly to provide that interaction at scale. New research from an international team spanning institutions including the Southern University of Science and Technology, Microsoft Research, Princeton University, and the University of Edinburgh points to a potential solution: large language models (LLMs) can be adapted to serve as highly accurate "world models" for agent training. The finding suggests a path to easing the current training bottleneck, positioning LLMs not just as conversational interfaces but as internal simulators for training the next generation of generalist AI.[1]

The concept of a "world model" is foundational to the functioning of an intelligent agent. It is essentially an internal simulator or mental representation that encapsulates the dynamics of an environment, enabling the agent to reason about how its actions will affect the world before executing them.[2][3] Unlike traditional, rule-based simulation engines that require extensive, brittle engineering to build a custom environment, the new research posits that the massive scale and inherent common sense knowledge of LLMs make them a viable, flexible alternative. The LLM, which is typically trained to predict the next word or token in a sequence, can be reframed to predict the next state of an environment given the current state and a proposed action.[1] This predictive capability is what transforms the language model into an experience generator, allowing an agent to acquire skills through simulated interaction rather than solely relying on costly, slow, or dangerous trial-and-error in a physical space. This process of internal deliberation, where an agent simulates future outcomes, is a critical step towards more human-like decision-making in AI.[4][5]
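To make the reframing concrete, here is a minimal sketch of how a state and an action might be serialized into text so that a completion model predicts the next state. The prompt layout, the function names, and the `toy_generate` stand-in are all assumptions made for illustration; the article does not describe the format the researchers actually used.

```python
from typing import Callable

# Hypothetical prompt layout; the study's real format is not given here.
PROMPT_TEMPLATE = (
    "Current state:\n{state}\n\n"
    "Action:\n{action}\n\n"
    "Next state:\n"
)


def build_transition_prompt(state: str, action: str) -> str:
    """Serialize a (state, action) pair into a single text prompt."""
    return PROMPT_TEMPLATE.format(state=state.strip(), action=action.strip())


def predict_next_state(
    generate: Callable[[str], str], state: str, action: str
) -> str:
    """Ask any text-completion function for the predicted next state."""
    return generate(build_transition_prompt(state, action)).strip()


def toy_generate(prompt: str) -> str:
    """Stand-in for an LLM call; it only knows one hard-coded action."""
    if "open fridge" in prompt:
        return "fridge is open; apple is in fridge"
    return "unchanged"


if __name__ == "__main__":
    state = "fridge is closed; apple is in fridge"
    print(predict_next_state(toy_generate, state, "open fridge"))
```

In practice `toy_generate` would be replaced by a call to a fine-tuned model. Keeping the model behind a plain callable means the rest of an agent's code does not need to know which model produces the prediction.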

The study's findings provide compelling evidence for this transformation, detailing how targeted fine-tuning can unlock a high degree of simulation accuracy. In experiments involving structured test environments, which included simulations of complex household and laboratory settings, two open-source models—Qwen2.5-7B and Llama-3.1-8B—were fine-tuned to predict state transitions.[1] The results showed that these models achieved approximately 99 percent accuracy in predicting the resulting state after an agent performed an action.[1] The research team assessed the LLMs’ ability to perform the two critical functions of a world model: precondition prediction (determining if an action is applicable in the current state) and effect prediction (predicting the new state after the action is executed).[2][3] By leveraging synthetic data generation techniques, the researchers demonstrated that fine-tuning could induce the LLMs to align their generated precondition and effect knowledge with a human understanding of world dynamics.[2][3] This high level of fidelity, achieved across a set of five distinct test environments, underscores the potential for LLMs to generate realistic, diverse agent trajectories that can effectively replace or augment real-world data collection, thereby enabling more robust reinforcement learning.[1][6]
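The two functions named above, precondition prediction and effect prediction, can be illustrated with a small symbolic reference model of the kind a learned world model might be scored against. The set-of-facts state representation, the `Action` fields, and the exact-match metric are assumptions chosen for this sketch, not details confirmed by the study.

```python
from dataclasses import dataclass
from typing import FrozenSet, Sequence

State = FrozenSet[str]


@dataclass(frozen=True)
class Action:
    """Hypothetical action schema: facts required, added, and removed."""
    name: str
    preconditions: FrozenSet[str]
    add: FrozenSet[str]
    delete: FrozenSet[str]


def is_applicable(state: State, action: Action) -> bool:
    """Precondition check: every required fact must hold in the state."""
    return action.preconditions <= state


def apply_action(state: State, action: Action) -> State:
    """Effect computation: an inapplicable action leaves the state unchanged."""
    if not is_applicable(state, action):
        return state
    return (state - action.delete) | action.add


def exact_match_accuracy(
    predicted: Sequence[State], reference: Sequence[State]
) -> float:
    """Fraction of predicted next states that equal the reference exactly."""
    if len(predicted) != len(reference):
        raise ValueError("predicted and reference must have the same length")
    if not reference:
        return 0.0
    hits = sum(p == r for p, r in zip(predicted, reference))
    return hits / len(reference)


if __name__ == "__main__":
    open_fridge = Action(
        name="open fridge",
        preconditions=frozenset({"fridge closed"}),
        add=frozenset({"fridge open"}),
        delete=frozenset({"fridge closed"}),
    )
    start = frozenset({"fridge closed", "apple in fridge"})
    print(is_applicable(start, open_fridge))
    print(sorted(apply_action(start, open_fridge)))
```

Under this framing, an accuracy figure such as the one reported would correspond to the share of held-out transitions where the model's predicted state matches the reference state, although the study may define its metric differently.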

This research carries significant implications for the future of the AI industry, primarily by offering a pathway to scalable agent training. The traditional method of training reinforcement learning agents is a laborious cycle of interaction with a real or custom-built virtual environment, which severely limits the diversity and volume of training data.[1][7] By using an LLM as a world model, developers can leverage its powerful generative capabilities to synthesize vast amounts of diverse, high-quality training data, dramatically reducing the time and resources needed for development.[8][6] For instance, the Simia-SFT (Supervised Fine-Tuning) and Simia-RL (Reinforcement Learning) frameworks proposed in related work show that an LLM-based simulator can generate diverse agent trajectories from small seed sets, making agent training both environment-agnostic and scalable.[8][6] Furthermore, the study suggests that scaling the performance of these world models depends on a combination of both data volume and model size, reinforcing the ongoing push for more powerful foundational models.[1] The ability to train agents in a simulated environment first means that an agent can develop cognitive architectures for memory, reasoning, and tool use before ever being deployed in a real-world setting.[7] This is a profound shift, transforming the development process from a hardware-constrained, real-time-limited endeavor into a purely computational one.
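As a rough illustration of how a world model could stand in for an environment during data generation, the sketch below rolls a policy forward through a simulator function and records each transition. It is not the Simia API or the researchers' pipeline; `rollout`, `toy_world_model`, and `make_random_policy` are hypothetical names, and the toy simulator merely plays the role a fine-tuned LLM would play.

```python
import random
from typing import Callable, List, Sequence, Tuple

# One recorded step: (state, action, next_state).
Transition = Tuple[str, str, str]


def rollout(
    world_model: Callable[[str, str], str],
    policy: Callable[[str], str],
    seed_state: str,
    max_steps: int,
) -> List[Transition]:
    """Generate one simulated trajectory starting from a seed state."""
    trajectory: List[Transition] = []
    state = seed_state
    for _ in range(max_steps):
        action = policy(state)
        next_state = world_model(state, action)
        trajectory.append((state, action, next_state))
        state = next_state
    return trajectory


def toy_world_model(state: str, action: str) -> str:
    """Stand-in simulator: a door that can be opened or closed."""
    outcomes = {"open door": "door open", "close door": "door closed"}
    return outcomes.get(action, state)


def make_random_policy(actions: Sequence[str], seed: int) -> Callable[[str], str]:
    """Build a seeded policy that picks an action uniformly at random."""
    rng = random.Random(seed)
    return lambda state: rng.choice(list(actions))


if __name__ == "__main__":
    policy = make_random_policy(["open door", "close door", "wait"], seed=0)
    for step in rollout(toy_world_model, policy, "door closed", max_steps=5):
        print(step)
```

Running many such rollouts from a small set of seed states is one simple way to turn a simulator into a stream of training trajectories for supervised fine-tuning or reinforcement learning.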

Looking forward, this paradigm shift towards LLM-based world modeling is expected to accelerate the transition of AI from simple assistants to generalist, autonomous agents. The development of robust world models is widely considered a non-negotiable step toward achieving generalized AI, as the ability to forecast the consequences of an action is a core component of intelligent planning.[9] By demonstrating that this essential component can be built upon the existing infrastructure of large language models, the research offers a critical engineering shortcut. It replaces the heavy and brittle nature of bespoke environment engineering with a flexible, LLM-based simulation that can be quickly adapted to new tasks and domains.[8][6] This new capability is poised to enable breakthroughs in fields such as robotics, complex planning, and multimodal AI, where agents need to reason about physical and conceptual interactions with high fidelity. The findings validate the vision of creating experience-based AI training systems where agents can develop skills through internal deliberation and simulation, thereby greatly enhancing their effectiveness and reliability across a wide array of unpredictable, real-world scenarios.[1][10] The path to autonomous, general-purpose agents appears to be running directly through the expansive, generative landscapes of the large language model.

Sources

[2]

[5]

[7]

[8]

[9]

[10]