Insurance embeds deep AI: Carriers must implement explainable systems to manage bias.

Deep AI integration revolutionizes underwriting and claims, demanding strict governance and Explainable AI for trust.

December 18, 2025

The integration of artificial intelligence into the operational heartbeat of the insurance sector marks a fundamental shift from niche automation to core business enablement. Although the finance function was often the first proving ground for automation in many organizations, AI is now woven directly into the day-to-day work of underwriting, claims, and customer service, well beyond its former role as a background modeling capability. This deep operational embedding of AI, particularly of advanced technologies such as Large Language Models (LLMs) and computer vision, is delivering substantial returns on investment. It also introduces critical challenges around compliance, bias, and human trust that insurers must address systematically if adoption is to be truly effective.

The most transformative impact of AI is visible in the industry's two most critical functions: underwriting and claims processing. Carriers are using AI for intelligent document processing (IDP) to replace legacy scan-and-index systems, with LLMs now handling optical character recognition (OCR), indexing, entity extraction, and document summarization for both claims and underwriting submissions. This automation is credited with cutting manual processing times for documents such as attending physician statements from days to minutes. In underwriting, AI analyzes large volumes of structured and unstructured data, including historical claims, customer behavior, litigation trends, and external data such as market changes, to build a more comprehensive risk profile that supports more accurate risk selection and pricing[1][2]. Underwriters are estimated to spend 40% of their time on non-core and administrative activities, an inefficiency valued at up to $160 billion across the industry over a five-year period; AI-driven solutions target this administrative burden directly[3]. By automating submission ingestion, data enrichment, and triage, intelligent underwriting lets human experts concentrate on the nuanced evaluation of the submissions most likely to drive profitable bound premium[3].
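The triage step described above can be framed as a ranking problem. The example below is a minimal, hypothetical sketch: it assumes that upstream models (not shown) have already extracted a quoted premium and estimated a bind probability and an expected loss ratio for each submission, and it ranks submissions by expected premium net of expected losses so that underwriters see the most promising ones first. The `Submission` fields, the `expected_margin` scoring rule, and the `capacity` parameter are illustrative assumptions, not an industry-standard method.

```python
from dataclasses import dataclass


@dataclass
class Submission:
    # Hypothetical fields, assumed to be populated by upstream IDP and scoring models.
    submission_id: str
    quoted_premium: float       # annual premium if the policy is bound, in dollars
    bind_probability: float     # estimated chance the submission binds, in [0, 1]
    expected_loss_ratio: float  # estimated losses as a share of premium, e.g. 0.6


def expected_margin(sub: Submission) -> float:
    """Expected bound premium net of expected losses (an illustrative scoring rule)."""
    return sub.quoted_premium * sub.bind_probability * (1.0 - sub.expected_loss_ratio)


def triage(
    submissions: list[Submission], capacity: int
) -> tuple[list[Submission], list[Submission]]:
    """Return (review_queue, deferred); the top `capacity` by expected margin go to underwriters."""
    ranked = sorted(submissions, key=expected_margin, reverse=True)
    return ranked[:capacity], ranked[capacity:]


if __name__ == "__main__":
    subs = [
        Submission("A", 50_000, 0.6, 0.7),   # expected margin of about 9,000
        Submission("B", 20_000, 0.9, 0.4),   # expected margin of about 10,800
        Submission("C", 100_000, 0.2, 0.6),  # expected margin of about 8,000
    ]
    review, deferred = triage(subs, capacity=2)
    print([s.submission_id for s in review], [s.submission_id for s in deferred])
```

Deferred submissions are not discarded in this sketch; they simply wait behind the higher-value queue, which keeps the human expert in charge of the final evaluation.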

The claims lifecycle has likewise been transformed by direct AI involvement. Machine learning algorithms, coupled with computer vision, are increasingly used for straight-through processing, in which minor claims, such as those for light car damage, can be submitted through a mobile application, assessed from photos, and approved for payment within hours without human intervention[4]. This 'no-touch' claims processing shortens time to settlement, a key driver of claimant dissatisfaction, and frees claims professionals to focus on the more complex or nuanced parts of the process, preserving a human-centric approach to intricate cases[1][3]. AI also plays a crucial role in limiting financial loss through real-time fraud detection and prevention: by examining customer and claims data for patterns and inconsistencies that indicate fraudulent activity, AI systems flag suspicious cases for further investigation, helping to catch fraud before significant losses occur[5][6].
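The straight-through processing and fraud-flagging logic described above can be illustrated with a small routing function. This is a minimal sketch under stated assumptions: the photo-based repair estimate and its confidence score are assumed to come from a computer-vision model that is not shown, and the thresholds and the two rule-based fraud checks are hypothetical placeholders. Production fraud detection would typically rely on learned models over much richer data; the point here is only the routing pattern, in which suspicious claims go to investigation, small high-confidence claims are paid with no human touch, and everything else reaches a claims professional.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    AUTO_PAY = "auto_pay"        # straight-through: approved with no human touch
    ADJUSTER = "adjuster"        # a claims professional reviews the claim
    INVESTIGATE = "investigate"  # flagged for fraud investigation


@dataclass
class Claim:
    claim_id: str
    claimed_amount: float         # amount requested by the claimant, in dollars
    photo_estimate: float         # repair estimate from a (hypothetical) computer-vision model
    vision_confidence: float      # that model's confidence in its estimate, in [0, 1]
    days_since_policy_start: int


# Hypothetical thresholds; a real carrier would calibrate, document, and audit these.
AUTO_PAY_LIMIT = 2_500.0
MIN_CONFIDENCE = 0.90
MAX_AMOUNT_RATIO = 1.5  # claimed amount may exceed the photo estimate by at most 50%
MIN_POLICY_AGE_DAYS = 30


def fraud_flags(claim: Claim) -> list[str]:
    """Simple rule-based inconsistency checks; each returned string explains one flag."""
    flags = []
    if claim.photo_estimate <= 0 or claim.claimed_amount > MAX_AMOUNT_RATIO * claim.photo_estimate:
        flags.append("claimed amount inconsistent with photo estimate")
    if claim.days_since_policy_start < MIN_POLICY_AGE_DAYS:
        flags.append("claim filed shortly after policy inception")
    return flags


def route(claim: Claim) -> tuple[Route, list[str]]:
    """Fraud flags take priority; otherwise auto-pay only small, high-confidence claims."""
    flags = fraud_flags(claim)
    if flags:
        return Route.INVESTIGATE, flags
    if claim.claimed_amount <= AUTO_PAY_LIMIT and claim.vision_confidence >= MIN_CONFIDENCE:
        return Route.AUTO_PAY, []
    return Route.ADJUSTER, []


if __name__ == "__main__":
    for c in [
        Claim("c1", 1_200, 1_100, 0.95, 400),  # small, consistent, confident
        Claim("c2", 1_200, 1_100, 0.70, 400),  # low vision-model confidence
        Claim("c3", 4_000, 1_000, 0.97, 10),   # inconsistent amount on a new policy
    ]:
        print(c.claim_id, *route(c))
```

Returning the list of flag reasons alongside the route gives investigators a readable starting point rather than a bare score.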

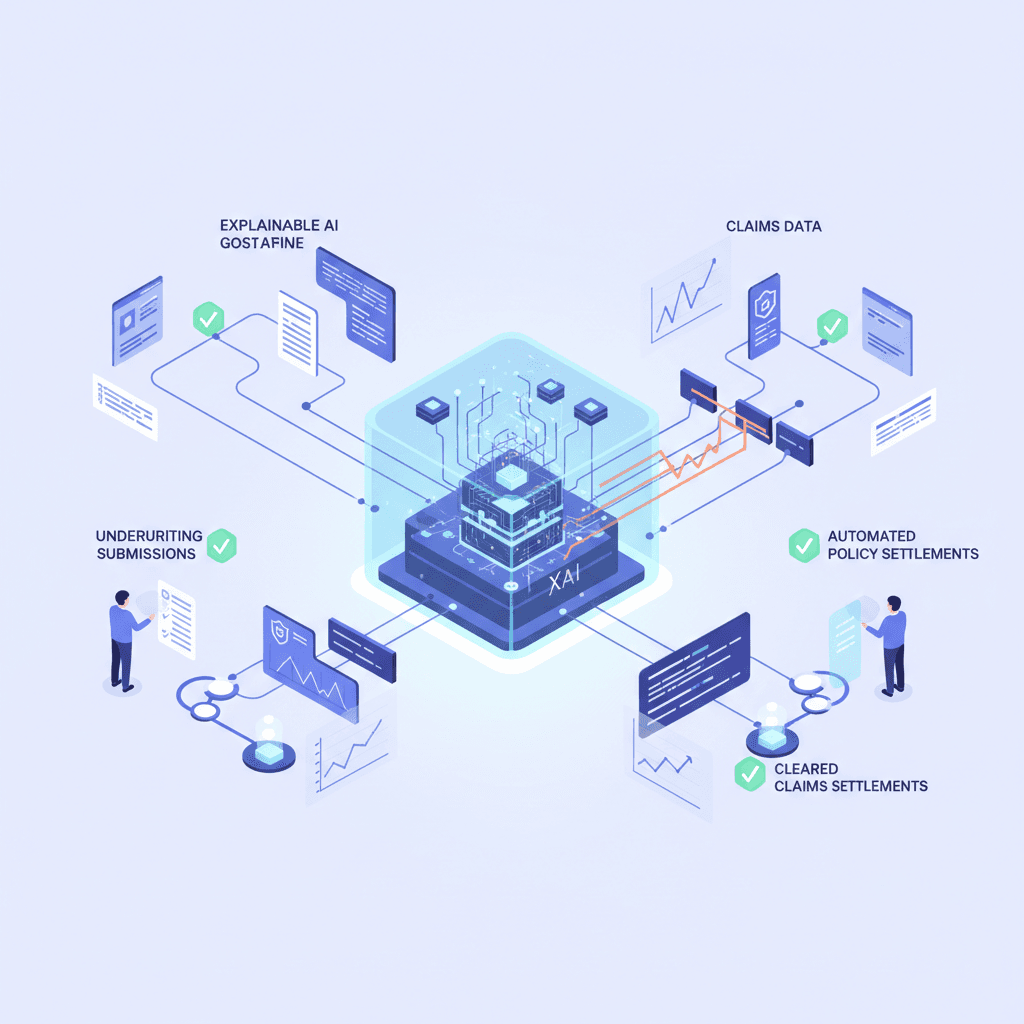

However, the depth of AI’s operational role demands a rigorous focus on responsible implementation, with regulatory and ethical considerations posing the most significant barriers to effective, scalable systems. As AI models grow more sophisticated, they can become opaque "black box" systems whose decision-making logic is difficult to trace and explain[7]. This lack of transparency conflicts directly with regulatory requirements that insurers be able to justify their decisions to customers and oversight bodies[8]. Consequently, the insurance industry is increasingly adopting Explainable AI (XAI), a set of techniques designed to make AI models transparent and interpretable by showing how they arrive at their conclusions[7][9].
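One of the simplest forms of explainability applies to linear models such as logistic regression, where each feature's contribution to the score can be read off directly. The sketch below, built on hypothetical coefficients and feature names, computes per-feature contributions to the log-odds relative to a baseline applicant and ranks them, producing the kind of reason codes an insurer could show a customer or regulator. For a linear model these contributions sum exactly to the difference between the applicant's log-odds and the baseline's; more complex models need dedicated techniques (for example, SHAP or LIME) to approximate a comparable breakdown.

```python
import math

# Hypothetical fitted logistic-regression model: log-odds of a claim in the next 12 months.
INTERCEPT = -2.0
COEFFICIENTS = {
    "prior_claims": 0.60,
    "vehicle_age_years": 0.05,
    "annual_mileage_10k": 0.30,  # annual mileage in units of 10,000
}
# Reference applicant (e.g. portfolio averages) against which contributions are measured.
BASELINE = {"prior_claims": 0.5, "vehicle_age_years": 8.0, "annual_mileage_10k": 1.2}


def log_odds(features: dict[str, float]) -> float:
    return INTERCEPT + sum(coef * features[name] for name, coef in COEFFICIENTS.items())


def probability(features: dict[str, float]) -> float:
    return 1.0 / (1.0 + math.exp(-log_odds(features)))


def explain(features: dict[str, float]) -> list[tuple[str, float]]:
    """Per-feature log-odds contributions versus BASELINE, largest magnitude first."""
    contributions = {
        name: coef * (features[name] - BASELINE[name]) for name, coef in COEFFICIENTS.items()
    }
    return sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)


if __name__ == "__main__":
    applicant = {"prior_claims": 3, "vehicle_age_years": 4, "annual_mileage_10k": 2.0}
    print(f"claim probability: {probability(applicant):.3f}")
    for name, value in explain(applicant):
        direction = "raises" if value > 0 else "lowers"
        print(f"{name} {direction} the log-odds by {abs(value):.2f}")
```

Measuring against a baseline rather than against zero keeps the explanation meaningful to a reader: it says why this applicant differs from a typical one.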

Failing to build in governance from the start creates a high risk of non-compliance, particularly with respect to the insidious challenge of algorithmic bias[10][8]. If the data used to train AI models is biased, for example by unintentionally encoding historical racial, gender, or socioeconomic disparities, the model's decisions will reflect and can amplify those biases, risking discriminatory outcomes in underwriting and pricing[3][2]. Overcoming this requires more than clean code: training datasets must be accurate, representative, and balanced, and transparency protocols and clear audit trails must be in place before AI systems go live[11][10][7]. Experts advocate making XAI a core test condition when new models are implemented, so that explainability and transparency are built in from the development stage[12].
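The idea of treating bias checks as a test condition can be made concrete with a pre-deployment gate. The sketch below computes each group's approval rate on a hold-out set, divides it by the most-favored group's rate, and fails any group whose ratio falls below a threshold. The 0.8 default is borrowed from the "four-fifths rule" in US employment-selection guidance and is used here only as an illustrative assumption; insurance regulators may apply different tests. The function names and the availability of group labels for testing are also assumptions, and a single ratio is a coarse screen rather than a full fairness audit.

```python
from collections import defaultdict
from typing import Iterable


def approval_rates(decisions: Iterable[tuple[str, bool]]) -> dict[str, float]:
    """Approval rate per group from (group, approved) pairs."""
    totals: dict[str, int] = defaultdict(int)
    approvals: dict[str, int] = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        if approved:
            approvals[group] += 1
    return {group: approvals[group] / totals[group] for group in totals}


def adverse_impact_ratios(decisions: Iterable[tuple[str, bool]]) -> dict[str, float]:
    """Each group's approval rate divided by the highest rate; raises ValueError if empty."""
    rates = approval_rates(decisions)
    best = max(rates.values())
    return {group: (rate / best if best > 0 else 1.0) for group, rate in rates.items()}


def bias_gate(decisions: Iterable[tuple[str, bool]], threshold: float = 0.8) -> list[str]:
    """Groups whose ratio falls below `threshold`; an empty list means the check passes."""
    ratios = adverse_impact_ratios(decisions)
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


if __name__ == "__main__":
    holdout = [("A", True)] * 80 + [("A", False)] * 20 + [("B", True)] * 55 + [("B", False)] * 45
    failing = bias_gate(holdout)
    print("PASS" if not failing else f"FAIL: {failing}")
```

Run as part of a model's release checks, a gate like this blocks deployment until a failing group is investigated, which fits the recommendation that such tests be in place before systems go live.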

Ultimately, effective AI in insurance operations comes down to a strategic blend of technological investment and cultural change. Successful adopters typically begin with clear, ROI-driven goals and prioritize high-volume, low-risk use cases, such as automating First Notice of Loss (FNOL) intake[13]. Crucially, the approach must be one of human-AI collaboration, not replacement: the technology is designed to augment and accelerate human decision-making, not to fully replace the claims professional or underwriter, especially in complex scenarios[1]. Insurers that implement a systematic change management program, fostering a culture in which employees trust and use AI tools in their daily work, will be the ones that turn AI's potential into sustainable competitive advantage[14]. As implementation costs continue to fall and the technology matures, deep integration of responsible, explainable AI is no longer optional but a strategic necessity that will define the leaders of the global insurance industry[3][15].

Sources

[5]

[6]

[7]

[8]

[9]

[10]

[11]

[13]

[14]

[15]